Google is turning its chatbot into a songwriter. The company has begun rolling out a beta feature in the Gemini app that generates original music from simple prompts, powered by DeepMind’s latest Lyria 3 model. Users can describe a vibe or scenario and get a 30-second track with lyrics and cover art, plus options to tweak the result—all without leaving the chat interface.

How music generation works in Google’s Gemini app

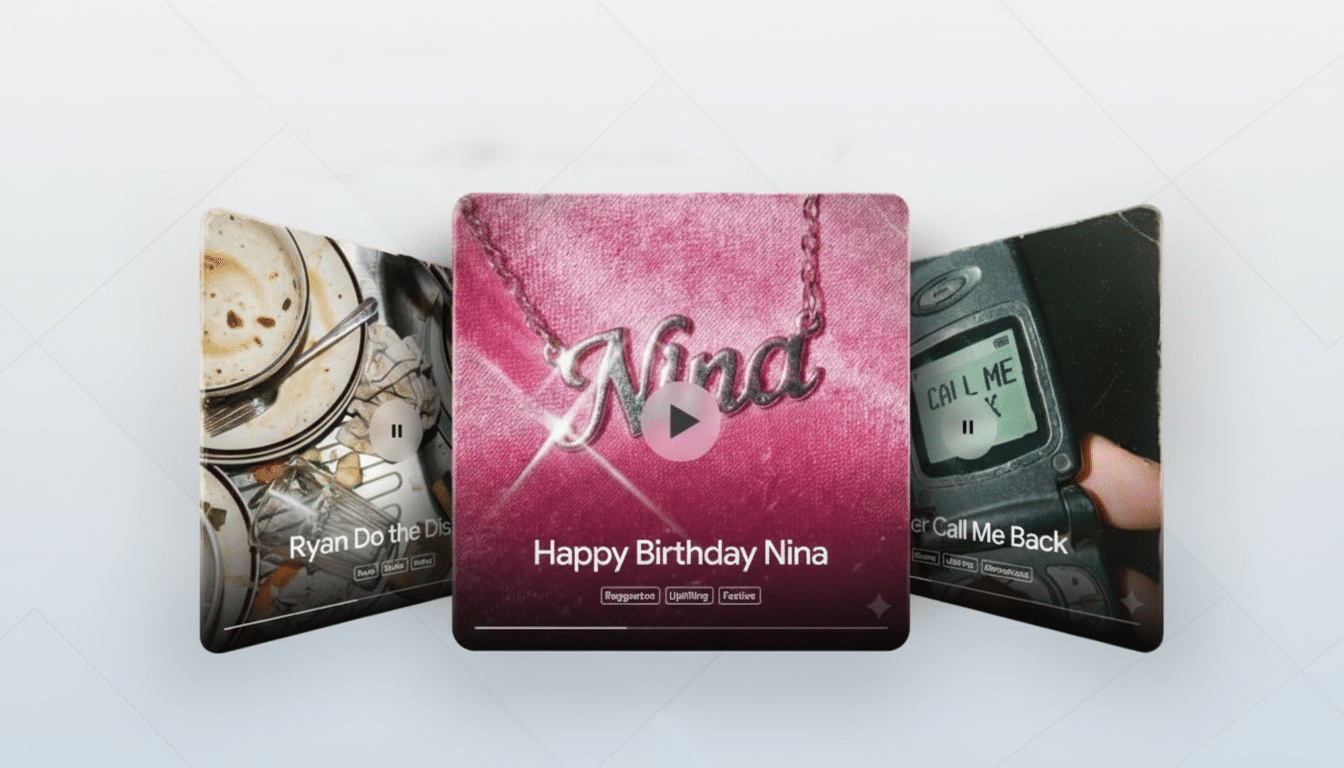

The experience is built around natural language. Type something like “a comical R&B slow jam about a sock finding its match,” and Gemini will produce a short song complete with vocals and a cohesive structure. Visual prompts are supported, too: upload a photo or video, and the app will compose a piece that matches its mood. Cover art is generated automatically as well, and Google credits its Nano Banana image model for the artwork in this beta.

Beyond one-shot generation, users can guide style, vocal presence, tempo, and other musical elements through iterative prompts. That loop—describe, listen, refine—brings familiar creative workflows into a conversational AI setting, lowering the barrier for non-musicians while still giving tinkerers room to shape a sound.

Lyria 3 and creative controls for custom songs

Lyria 3 is DeepMind’s newest music model, and Google says it improves realism and complexity over prior generations. In practice, that should mean fuller arrangements, clearer vocals, and smoother transitions between sections. The model supports style conditioning, so users can steer toward particular genres or moods, and it responds to prompts that ask for specific tempos, instrumentation hints, or vocal treatments.

While the model is capable, Google is positioning it as a co-creation tool rather than a replica machine. If a prompt names a well-known artist, Gemini treats it as broad inspiration and generates something in a similar style or mood—not a direct imitation. Google says it employs filters that compare outputs against existing content to reduce lookalike risks.

YouTube Dream Track expands globally for creators

Alongside the Gemini update, Google is extending access to Lyria 3 for creators on YouTube through Dream Track. Previously limited to select creators in the U.S., the feature is now expanding globally, opening AI-assisted song creation to a far broader set of Shorts and long-form producers. For YouTube, which already sits at the crossroads of music discovery and creator culture, the move could accelerate an emerging genre of AI-native content.

This ties directly into platform strategy: faster production cycles, more soundtrack options for videos, and new creative hooks that don’t require a DAW or studio time. Expect to see Dream Track pieces used as scratch tracks, meme fodder, or even the seeds of more polished releases.

Watermarking and AI detection built into Gemini

Every Lyria 3 output will carry a SynthID watermark, Google’s imperceptible marker designed to flag AI-generated media. Just as important, Gemini will include a built-in checker: users can upload a track and ask whether it was AI-generated, with SynthID aiding the determination. Provenance has become a central demand from labels, publishers, and policymakers, and embedding detection into both generation and discovery workflows is a pragmatic step.

The beta is available to Gemini users who are 18+ and supports multiple languages at launch, including English, German, Spanish, French, Hindi, Japanese, Korean, and Portuguese. That multilingual reach matters for music, where cross-border hits increasingly start on social platforms before spilling into streaming charts.

Why this move matters for AI music and creators

Generative music is at an inflection point. On one side, platforms like YouTube and Spotify are experimenting with AI tools and label partnerships to capture new formats and monetize fresh inventory. On the other, music companies and rights holders are pressing AI developers over training data and artist likeness. High-profile lawsuits are testing the legal boundaries, including the cases that major labels, coordinated by the Recording Industry Association of America, filed against the AI music startups Suno and Udio.

Google’s approach attempts to thread the needle: enable original expression, restrict mimicry, and stamp everything with a watermark. That won’t resolve every rights question, but it sets clearer norms for provenance and responsible use, which regulators and industry groups have been calling for. The U.S. Copyright Office’s ongoing study into generative AI and copyright underscores just how unsettled the framework remains.

The timing is also strategic. Streaming now delivers roughly two-thirds of global recorded music revenue, according to the IFPI, and social-first discovery is dictating what breaks. If creators can spin up bespoke tracks on demand—tagged, traceable, and usable at scale—it could reshape how soundtracks, memes, and even indie releases are made and shared. Meanwhile, services like Deezer are deploying AI detection to curb fraudulent streams, signaling that tooling on both the creation and policing sides is maturing in tandem.

For everyday users, the promise is simple: describe a song and hear it come to life. For the industry, the questions ahead revolve around attribution, consent, and compensation. Gemini’s new music features won’t answer them all, but they push the conversation forward—and put powerful composition tools into millions of pockets.