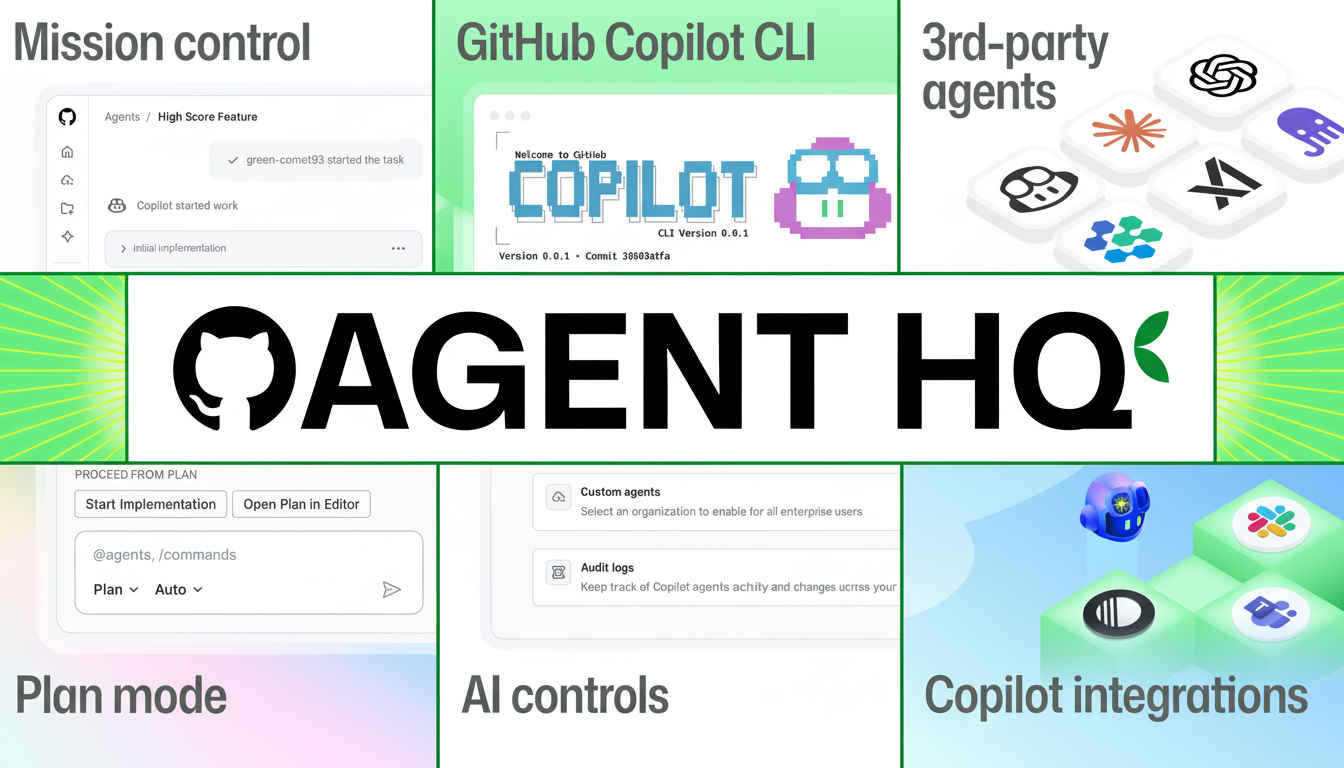

GitHub is now launching Agent HQ, a central hub where developers can manage several AI coding agents as if they were teammates, inside the tools they already use.

The control center connects agents like Codex, Claude, and Jules directly to repos, issues, branches, and pull requests, and adds a new Mission Control view that spans GitHub, VS Code, and the terminal.

It’s a bigger swing than just another AI feature. By making agent orchestration part of the normal development workflow, GitHub is turning fragmented point solutions into a single accountable system. If it executes at scale, Agent HQ might change how software teams plan, build, and ship.

What Agent HQ Is Really Doing for Developers

Agent HQ treats AI models as first-class collaborators. Agents can open issues and pull requests, commit code, suggest and accept changes on pull requests, and run CI-related tasks, all within the same governance guardrails that apply to human contributors. Mission Control layers a single queue on top of this, so you can distribute work across agents, leave capacity for others to step in, and monitor progress.

Crucially, the system is model-agnostic. GitHub is inviting several coding agents to plug in, beginning with OpenAI’s Codex and Anthropic’s Claude, with more to follow. Jules from Google Labs is also slated to be a native assignee. That means teams can direct each task to the agent best suited for the job (tests to one, refactors to another) without bouncing between different apps.

Why a Unified Command Center Is Vital for Teams

Most AI coding assistants live in silos: a chat here, an IDE extension there, something else over there. It is difficult to gain system-wide insight into the codebase across these disparate tools. Agent HQ reduces the context-switching tax by deploying agents where code and conversations already exist. It also moves away from one-off prompting toward ongoing, trackable work streams that more closely resemble how teams collaborate in real life.

It’s the same consolidation play we saw when CI/CD moved from ad hoc scripts to integrated pipelines. A single pane of glass for agent work means less glue code, fewer manual handoffs, and clearer accountability when slowdowns or outright failures occur.

Open Ecosystem and the Lock-In Question for AI

The elephant in the room for AI development is vendor lock-in. The more a model learns your codebase and style, the harder it becomes to switch. By positioning Agent HQ as an open integration layer, GitHub is saying that developers should be free to bring their own custom models to the party without tearing the house down.

That said, this neutrality will be tested over time. If a subset of features only works with some models, or if agent “memories” aren’t portable, there’s room for subtle lock-in to creep back in. The promise is interoperability; the proof will be in how seamlessly teams can swap models while retaining context and workflows.

Governance You Can Audit Across Agent Workflows

Enterprises will watch closely how Agent HQ approaches oversight. GitHub is adding identities for agents, branch protections tailored to AI-generated code, and policies that mirror human permissions. Paired with GitHub Enterprise’s existing features, such as audit logs, required reviews, and CODEOWNERS, teams get an auditable trail of who or what changed what, and why.
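To make the idea of one rule set for humans and agents concrete, here is a minimal sketch of a merge gate that applies the same policy to both and records every decision. It is not GitHub's API: `Change`, `Policy`, `can_merge`, and the identity strings are hypothetical names chosen for illustration, and the single "required approvals on protected branches" rule is an assumed stand-in for whatever policies a team actually configures.

```python
from dataclasses import dataclass


@dataclass
class Change:
    author: str      # human login or agent identity (hypothetical values)
    is_agent: bool   # recorded for the audit trail only
    branch: str      # target branch of the change
    approvals: int   # number of approving reviews so far


@dataclass
class Policy:
    # Assumed example policy: protected branches need N approvals.
    protected_branches: frozenset = frozenset({"main"})
    required_approvals: int = 1


def can_merge(change: Change, policy: Policy, audit_log: list) -> bool:
    """Apply one policy to humans and agents alike and log the decision."""
    protected = change.branch in policy.protected_branches
    allowed = (not protected) or change.approvals >= policy.required_approvals
    # Every decision is appended, allowed or not, so the trail is complete.
    audit_log.append({
        "author": change.author,
        "is_agent": change.is_agent,
        "branch": change.branch,
        "allowed": allowed,
    })
    return allowed


if __name__ == "__main__":
    log = []
    policy = Policy()
    print(can_merge(Change("claude-agent", True, "main", 0), policy, log))
    print(can_merge(Change("alice", False, "main", 1), policy, log))
    for entry in log:
        print(entry)
```

Note that `is_agent` never influences the decision; it only appears in the log. That mirrors the article's framing of agent policies that match human permissions while keeping agent identities visible to reviewers.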

That level of traceability is crucial for regulated industries and for any team pursuing ISO 27001, SOC 2, or internal risk controls. It moves agentic coding from “neat experiment” to “ready for production” in organizations that require approvals and evidence, not just speed.

The Data-Driven Case for Productivity in Software Development

Developers working with Copilot finish tasks up to 55% faster, according to GitHub, and its survey data indicate that 88% report being more productive with the help of AI. According to Stack Overflow’s most recent developer survey, more than 70% of developers are using or plan to use AI tools on a regular basis. Agent HQ seeks to build on that momentum by coordinating many agents in a single workflow, which in theory yields more throughput than a lone agent.

Consider a team triage pattern: Claude drafts a refactor for the flaky module, Codex auto-generates missing tests, and Jules updates documentation, all started from issues and tracked in Mission Control. Reviewers can see agent identities, changes to protected branches are restricted, and CI gates are enforced by policy. That’s not just faster coding; it’s faster, safer shipping.
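The triage pattern above amounts to routing each issue to an agent by task type. The sketch below is only an illustration of that idea: the routing table, the `Assignment` type, the `route` function, and the sample issue titles are all hypothetical, and nothing here reflects how Mission Control actually assigns work.

```python
from dataclasses import dataclass

# Hypothetical routing table. The agent names come from the article;
# the task-type keys and the mapping itself are assumptions.
ROUTES = {
    "refactor": "Claude",
    "tests": "Codex",
    "docs": "Jules",
}


@dataclass
class Assignment:
    issue: str
    task_type: str
    assignee: str


def route(issues):
    """Assign each (title, task_type) pair to an agent.

    Task types without a route fall back to a human rather than guessing.
    """
    return [
        Assignment(title, task_type, ROUTES.get(task_type, "human"))
        for title, task_type in issues
    ]


if __name__ == "__main__":
    backlog = [
        ("Refactor flaky module", "refactor"),
        ("Add missing tests", "tests"),
        ("Update documentation", "docs"),
        ("Decide deprecation policy", "decision"),
    ]
    for a in route(backlog):
        print(f"{a.issue} -> {a.assignee}")
```

Falling back to a human for unrecognized task types is a deliberate choice in this sketch: it keeps ambiguous work out of agent queues instead of sending it to a default model.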

What to Watch Next as Agent HQ Rolls Out

Three questions will determine the impact of Agent HQ.

- Extensibility: How easily can a new agent integrate with a repository and responsibly access its context?

- Portability: Are agent memory and task history portable across models without significant loss of fidelity?

- Economics: With many agents in the mix, how predictable are usage costs, and how well does auto-scaling match actual work?

More than 100 million developers already use GitHub, the company reports in its Octoverse. If Agent HQ holds up across that spectrum, which ranges from open source to enterprise workloads, it will feel less like a feature drop and more like a new layer of the software development stack.

The upshot is that agentic development is moving from chat windows to the center of the toolchain. By giving teams a model-agnostic command center with governance built in, GitHub is betting that the future of building software will be humans and AI agents working together, with accountability and audit trails that keep pace with the speed.