Nvidia still dominates the training of artificial intelligence models, but the fiercest fight now is over inference: running those models in everyday, large-scale production. FuriosaAI, a South Korean challenger, is heading into mass production of its RNGD accelerator and says it can deliver up to 2x better energy efficiency than Nvidia’s leading chips. In a market short on both chips and power, that kind of performance per watt could get buyers to look its way, The Wall Street Journal has reported.

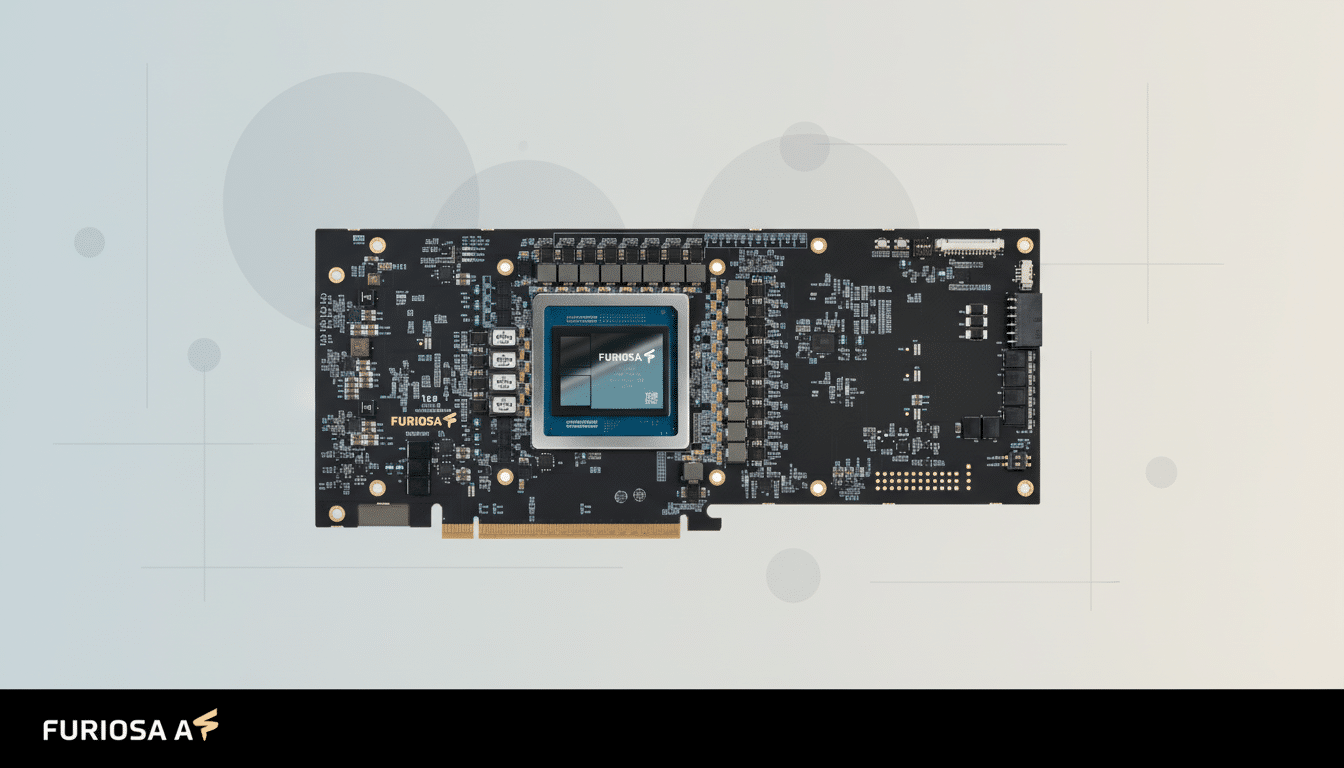

RNGD, short for “Renegade,” is a custom ASIC that will support “only fast matrix math and parallel operations used in inference.” The plan is simple: cede the training crown and compete where efficiency, latency, and total cost of ownership matter most.

- Why Inference Is the Real Battleground for AI

- Inside FuriosaAI’s RNGD and its focus on efficient inference

- The software hurdle facing challengers to Nvidia’s stack

- A Korean advantage in supply and access to scarce HBM

- The competition isn’t waiting in the race for inference

- What to watch next as RNGD moves into mass production

Why Inference Is the Real Battleground for AI

The economics of AI are changing. Training gets the headlines, but inference drives the steady-state bill: every query, image, or recommendation consumes compute cycles and power, day after day. Industry analysts stress that the long-run operational cost of AI will land here, which makes watts per output and dollars per query critical metrics.

With power budgets becoming a boardroom matter (global agencies have cautioned that data center electricity use is climbing steeply), operators are looking for accelerators that can reduce energy per token or query.

If FuriosaAI’s efficiency numbers hold for mainstream models and batch sizes, RNGD could cut both power draw and capex for inference clusters.
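To make the power side of that claim concrete, here is a minimal back-of-the-envelope sketch in Python. Every figure in it is a hypothetical placeholder rather than a measured RNGD or Nvidia number, and the function name `cluster_power_kw` is invented for illustration. It shows only the arithmetic: at a fixed throughput target, doubling tokens per joule halves the accelerator power needed.

```python
def cluster_power_kw(target_tokens_per_s: float, tokens_per_joule: float) -> float:
    """Accelerator power (kW) needed to sustain a throughput target.

    tokens/s divided by tokens/J gives J/s, which is watts.
    """
    return target_tokens_per_s / tokens_per_joule / 1000.0


# Hypothetical inputs, chosen only to illustrate the arithmetic.
target = 1_000_000                    # tokens per second the cluster must serve
baseline_eff = 2.0                    # tokens per joule on the incumbent hardware
challenger_eff = baseline_eff * 2.0   # best case of an "up to 2x" efficiency claim

baseline_kw = cluster_power_kw(target, baseline_eff)
challenger_kw = cluster_power_kw(target, challenger_eff)

print(f"baseline:   {baseline_kw:.0f} kW")
print(f"challenger: {challenger_kw:.0f} kW")
```

The sketch ignores cooling, host CPUs, networking, and utilization, all of which would change the real figure, and it says nothing about capex.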

Inside FuriosaAI’s RNGD and its focus on efficient inference

Founded by veterans of AMD and Samsung Electronics, FuriosaAI has spent years creating “an architecture natively built for AI computing,” as CEO June Paik has stated. The company says RNGD is optimized for real-world model deployments, not graphics or mining, and focuses on throughput, low latency, and efficient memory access.

Early signals are promising. In trials with LG, FuriosaAI said LG’s EXAONE model delivered up to 2.25 times the inference throughput on RNGD hardware compared with competing GPUs. Those gains depend on the workload, but they are consistent with the firm’s headline claim of nearly doubling energy efficiency versus Nvidia’s best. The Wall Street Journal also reported that FuriosaAI has raised funding valuing the company at over $700 million and turned down an acquisition offer of around $800 million from Meta, a sign of confidence in both the technology and the market for it.

As RNGD enters mass production, the question shifts from lab metrics to deployment realities: how well does the silicon scale across model sizes and quantization schemes, and how quickly can customers put production traffic on it?

The software hurdle facing challengers to Nvidia’s stack

Nvidia’s deepest moat isn’t hardware; it’s software. CUDA, TensorRT, TensorRT-LLM, and a decade’s worth of kernels and tooling make model optimization and deployment predictable. Any alternative needs a comparably polished stack: PyTorch and TensorFlow support, ONNX pipelines, compilers that auto-tune kernels, and runtime compatibility with popular serving layers.

There is precedent. Google’s TPUs, AWS Inferentia, and Intel’s Gaudi found their way into deployments by pairing price-performance wins with strong SDKs and turnkey integrations. FuriosaAI’s path to market share will depend on how mature its SDK is, how quickly it can onboard popular LLMs and vision models, and whether developers can migrate without rewriting their existing code. The simpler the “lift and shift,” the faster inference dollars will flow.

A Korean advantage in supply and access to scarce HBM

Specs are not the whole story; the supply chain counts for a lot. The high-bandwidth memory (HBM) that feeds today’s AI chips is chronically scarce. The world’s two largest HBM suppliers, SK hynix and Samsung, are both South Korean. TrendForce and other researchers have pointed out that SK hynix leads the HBM market, with demand outstripping supply. Proximity to that ecosystem could help FuriosaAI source components, optimize packaging, and ship volume when competitors are supply constrained.

If FuriosaAI can deliver RNGD in quantity and with good performance per watt, data center buyers priced out of Nvidia’s top bins or waiting on long lead times will take the meeting.

The competition isn’t waiting in the race for inference

Nvidia keeps pushing new architectures squarely focused on faster, cheaper inference, along with software such as NIM microservices geared toward easing deployment. AMD is making headway with its latest accelerators, and Intel’s Gaudi family courts cloud buyers on value. Hyperscalers are also increasingly building their own silicon, including Google’s most recent TPUs and AWS’s Inferentia and Trainium, while upstarts like Groq and Cerebras tout novel architectures for ultra-low latency or large-model throughput.

FuriosaAI doesn’t have to beat Nvidia across the board. It needs to win specific workloads where power efficiency, latency, and availability matter most, and use those wins to overcome ecosystem inertia.

What to watch next as RNGD moves into mass production

Buyers will look for hard numbers:

- Tokens per second per watt for LLMs

- Cost per 1,000 tokens served

- Latency at small batch sizes

- Memory capacity for large context windows

- Stability of the SDK under long uptimes
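The first two metrics above are simple ratios that a buyer could compute from a single benchmark run. The Python sketch below is one way to do that under stated assumptions: the `BenchmarkRun` class, its field names, and all sample values are hypothetical, and the hourly cost is assumed to already combine amortized hardware and electricity. It does not use any FuriosaAI or Nvidia tooling.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    """One inference benchmark run (hypothetical structure, for illustration)."""
    tokens_generated: int     # total output tokens produced during the run
    wall_seconds: float       # wall-clock duration of the run
    avg_power_watts: float    # average accelerator power draw over the run
    hourly_cost_usd: float    # assumed all-in cost per hour (hardware + energy)

    @property
    def tokens_per_second(self) -> float:
        return self.tokens_generated / self.wall_seconds

    @property
    def tokens_per_second_per_watt(self) -> float:
        return self.tokens_per_second / self.avg_power_watts

    @property
    def cost_per_1k_tokens_usd(self) -> float:
        # Cost of the whole run, scaled to 1,000 tokens.
        run_cost = self.hourly_cost_usd * self.wall_seconds / 3600.0
        return run_cost / self.tokens_generated * 1000.0


# Hypothetical sample values, not measurements of any real accelerator.
run = BenchmarkRun(
    tokens_generated=3_600_000,
    wall_seconds=3600.0,
    avg_power_watts=200.0,
    hourly_cost_usd=1.80,
)

print(f"tokens/s:        {run.tokens_per_second:.1f}")
print(f"tokens/s/W:      {run.tokens_per_second_per_watt:.2f}")
print(f"USD per 1k tok.: {run.cost_per_1k_tokens_usd:.6f}")
```

Comparing two accelerators this way is only meaningful when the model, quantization, batch size, and sequence lengths are held constant across runs.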

Reference customers are important — beyond pilots, who is serving real traffic on RNGD and at what scale?

The market doesn’t reward bravado; it rewards repeatable economics. If FuriosaAI can show that RNGD delivers predictable energy and infrastructure savings while keeping developers within the frameworks they know best, it won’t have to unseat Nvidia to become a meaningful new supplier for AI inference.