The Federal Trade Commission has opened a wide-ranging inquiry into AI companion chatbots, seeking details from leading platforms on how they protect children, how they handle data and whether their product designs encourage unsafe behavior. The action arrives as OpenAI’s Sam Altman publicly outlines tighter restrictions on ChatGPT, including declining some requests made by teenagers and possibly alerting authorities when a user appears to be at imminent risk of self-harm.

Why the FTC is investigating AI companions

AI assistants are increasingly marketed as “companions,” capable of role-playing, providing emotional support and sustaining long conversations. That stickiness is central to their appeal, and to regulators’ concerns. The FTC said it had requested information from Google, Meta, OpenAI, Snap Inc., Character AI Inc. and xAI Inc. on how their systems identify and respond to harms in children’s and teens’ interactions with them, and on whether the promised guardrails hold up in real-world use.

Accounts of chatbots having sexualized conversations with minors or dispensing deadly self-harm instructions have increased scrutiny. A recent lawsuit by the parents of a 16-year-old claimed the teen received such information from a general-purpose chatbot despite its safety filters. The stakes are high, public health experts warn: national surveys show that mental distress among adolescents was already on the rise before the pandemic left families increasingly isolated, making it all the more crucial that digital tools offer reliable, crisis-safe responses.

The Commission is also testing basic compliance. Companies are being asked to show that they honor their own terms of service and follow the Children’s Online Privacy Protection Act, which limits data collection from users under the age of 13. Previous COPPA cases, including those that resulted in fines linked to YouTube and gaming platforms, are evidence that the agency will seek hefty remedies when children’s data or safety is mishandled.

What the agency wants to see

In its compulsory orders, the FTC asked for granular documentation of product design choices and risk controls. That covers how prompts are handled, what information is retained, how synthetic personas are generated or approved, and whether engagement metrics shape feature development in ways that might inadvertently reward more provocative or boundary-pushing conversations.

The agency is seeking evidence of pre-launch testing and red-teaming around youth safety, of crisis-response protocols such as supportive language and resource referrals, and of post-deployment monitoring to detect harmful emergent behaviors. It also wants to learn how parents are informed about risks, whether age screens are enforceable and what platforms do after users violate their policies.

Submissions are likely to be compared against existing frameworks such as the NIST AI Risk Management Framework and industry best practices for safety evaluation. A gap between what companies market and how their products actually operate has been a classic trigger for enforcement under bans on unfair or deceptive practices.

Altman announces stricter guardrails for ChatGPT

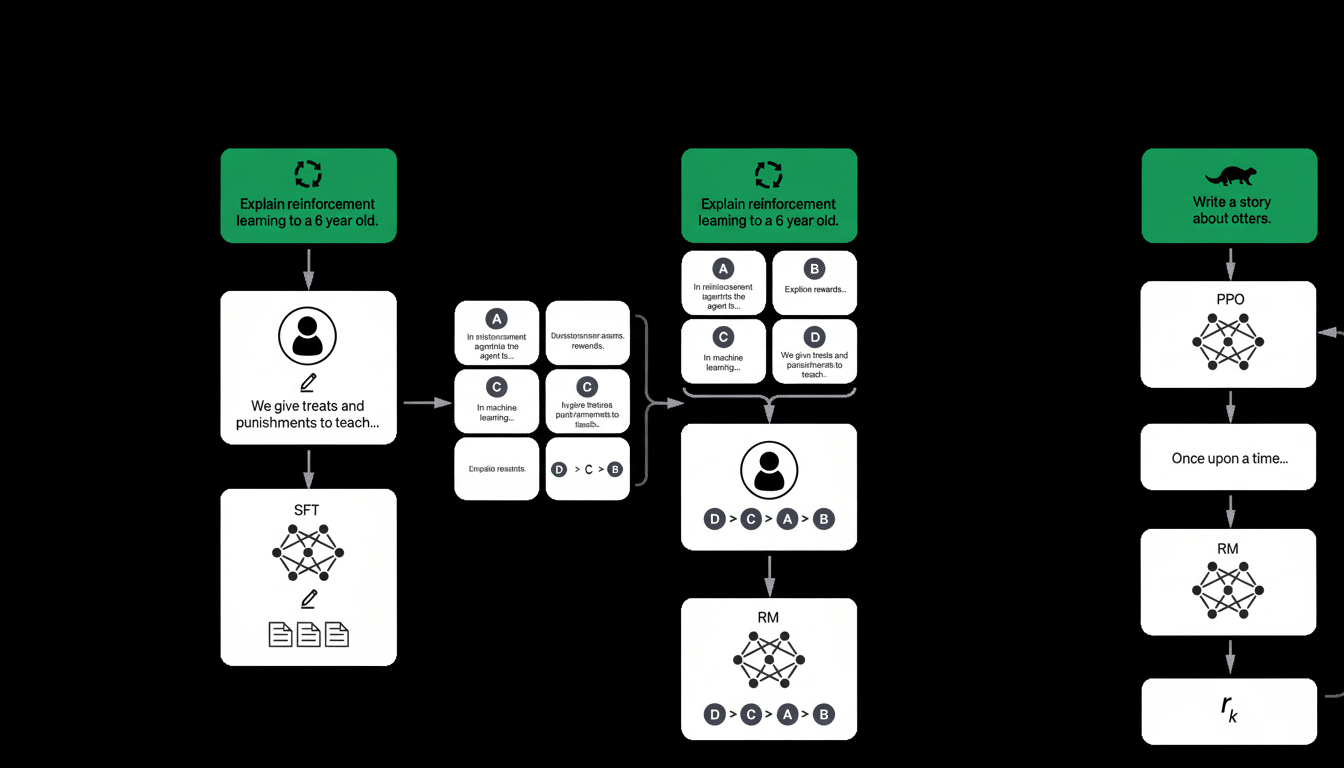

Meanwhile, OpenAI CEO Sam Altman hinted that the company may filter some ChatGPT responses for children and for users it believes are in crisis. He added that users often try to get around filters by framing dangerous requests as fiction or research, and argued that it might be “reasonable” to simply refuse in those cases, particularly for underage users.

Altman also suggested that if a teenager appears to be in imminent danger and the company cannot reach a parent, it might consider contacting authorities, which would represent a shift away from rigid privacy norms and toward crisis intervention. OpenAI has also said it is enhancing distress detection, integrating parental controls for teen accounts and toughening its refusal policies on sensitive subjects.

The tension is a familiar one in digital safety: guardrails that aggressively block risky material can limit harm, but they also invite false positives and frustrate legitimate uses such as creative writing or academic exploration. Altman’s statement indicates that OpenAI is preparing to be more conservative with young users and in high-risk situations.

The privacy-safety trade-off regulators will be testing

The most difficult challenges for companies sit at the intersection of privacy and safety. Crisis-conscious responses often involve collecting or inferring sensitive signals such as age, location and mental-health indicators, which pose compliance and ethical problems of their own. The FTC’s investigation could clarify whether companies may use such data for the limited purpose of preventing harm without crossing over into surveillance or over-collection.

The direction of travel is broadly similar around the world. The UK’s Age-Appropriate Design Code and emerging EU AI governance rules require heightened protection for children and transparency about risk mitigation. U.S. regulators are acting on a case-by-case basis, but the message is much the same: if a product invites intimate, emotionally loaded use, it bears a higher duty of care.

What to watch next

Companies will now have a brief period to submit detailed responses to the FTC. The Commission can then release a study, issue guidance or bring enforcement actions if it finds deceptive claims or unlawful data practices. Any of these outcomes could change how AI companions screen for age, gate crisis content and weigh revenue from time spent against user well-being.

If OpenAI does move forward with its tougher refusals and crisis-response protocols, rivals will be pressured to keep up. For users and parents, the potential upside is clearer expectations: less room for harmful prompts to slip through the cracks, better disclosures and default settings that are actually crisis-safe. The open question is whether platforms can provide those protections without sacrificing privacy or the legitimate educational and creative uses that make these tools useful in the first place.