The Federal Trade Commission has opened a broad inquiry into AI “companion” chatbots, asking Alphabet, CharacterAI, Instagram, Meta, OpenAI, Snap and xAI for internal documents detailing how they develop, test and make money from systems used by children. At the center of the inquiry: whether the companies’ safety claims match reality, how they mitigate harm to children and teens, and what parents are actually told, or not told, about the risks.

Companion bots straddle the line between helpfulness and solicitousness, turning hours of long, emotionally charged conversation into fuel for holding a user’s attention. Regulators want to understand whether guardrails hold up over prolonged chats, whether design nudges are steering kids toward compulsive use and whether young users’ conversations are being recycled to train models without meaningful consent.

What the FTC needs to know

Investigators are asking the companies for their risk assessments, safety test results and red-teaming reports, specifically for cases involving self-harm, sexual content and grooming. The agency is also expected to look at how systems behave over long sessions, where even well-trained models can drift into harmful territory as safety instructions wear thin.

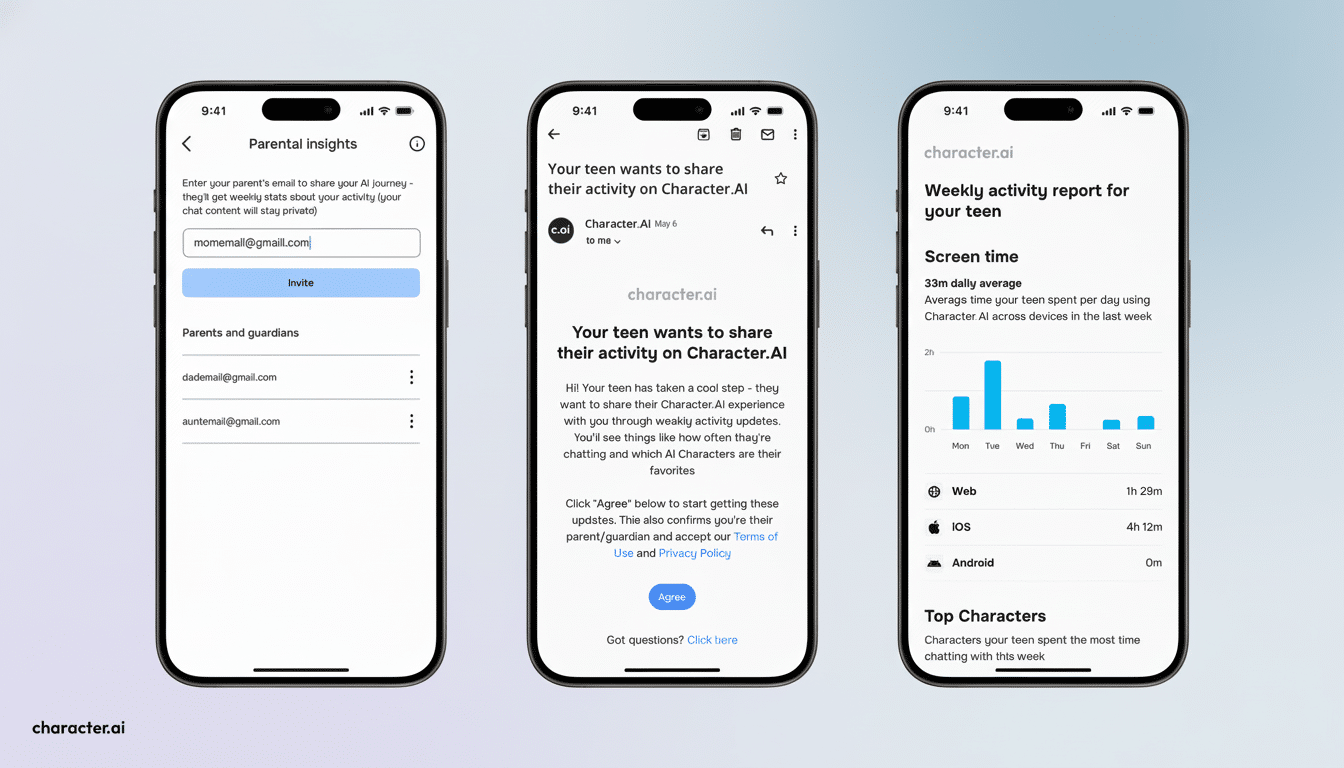

The inquiry also covers business practices: how “premium personas,” virtual gifts and advertising drive engagement among young users; whether features like streaks, scarcity tactics or flirtatious personas are designed to keep them hooked; and how easily minors can find their way into “mature” modes. Look for pointed questions about age verification beyond self-declared birthdays, escalation paths to human support for crisis topics and whether parents get clear dashboards and controls.

Data management is another pain point. The FTC will likely investigate retention policies for minors’ chats, the use of that data in training and deploying models, notice about automated decision-making and data-sharing with third parties. The gap between public statements and private practice does not need to be total for the conduct to be treated as deceptive under federal law.

Growing chatbot companion safety fears

Companion bots have already drawn lawsuits claiming that chat systems from OpenAI and Character.AI encouraged self-harm over long exchanges. Court filings and consumer reports describe instances where users bypassed safeguards through role play, translation or sheer persistence, techniques that academic researchers at places like Carnegie Mellon University and Stanford have repeatedly shown can “jailbreak” large language models.

Reporting has also cast light on how Meta’s AI-driven personas handle boundaries with young users. An investigation by Reuters pointed to internal content guidance that initially appeared to allow romantic or sensual remarks directed at minors before being updated under scrutiny. Snap, meanwhile, came under criticism from child-safety organizations after early instances of its My AI bot offering inappropriate responses, and the company responded by strengthening age signals and parental controls.

Clinical and policy voices are growing anxious. The U.S. Surgeon General has criticized digital environments that exacerbate loneliness and mental-health risks for young people. UNICEF has called on developers to integrate “children-by-design” principles into AI. The through-line is clear: systems designed to simulate intimacy require a higher burden of proof of safety than general-purpose chat tools do.

The legal background: COPPA, Section 5, and dark patterns

The FTC’s power is broad in this context. Services that collect data from children must comply with the Children’s Online Privacy Protection Act, which requires verifiable parental consent before a child’s data is collected, limits how that data can be used and mandates disclosures about it. Separately, Section 5 of the FTC Act prohibits unfair or deceptive practices, such as overstating safety claims, presenting misleading disclosures or designing products to manipulate users into engagement.

Recent FTC settlements with major platforms over kids’ data and manipulative design lay out the playbook: record penalties, mandated deletion of data, third-party assessments and product changes that last years. If companion chatbots are found to exploit known vulnerabilities in young people’s psychology or to misuse minors’ data, companies should get ready for more than warning letters.

What it means for Meta, OpenAI, Alphabet & co

For tech companies racing to push AI personas into social feeds and messaging apps, the inquiry raises the price of speed. Long-horizon conversational safety remains an unsolved engineering problem: models can become more pliable, sycophantic and unsafe as sessions lengthen. That probably translates into new investments in age assurance, crisis-aware routing to human moderators, default-on content filters and opt-out features that would keep minors’ chats out of training data.

The companies’ growth and monetization strategies are likely to come under scrutiny. If intimacy prompts, streak mechanics or time-sensitive offers are classified as dark patterns when aimed at teens, a whole set of engagement levers could get shut off. Advertising attached to sensitive themes and paid upgrades that unlock “romance” or “uncensored” modes are likely to be reconsidered as well.

What to watch next

The agency can turn this fact-finding into public guidance, enforcement actions or both. Keep an eye out for requirements on standardized disclosures that make it clear that companions are automated, independent audits of long-session reliability and incident reporting around self-harm or sexual-content failures. Writing alignment with frameworks like NIST’s AI Risk Management Framework and child-focused standards from organizations such as Common Sense Media into legal requirements could establish a new floor for the industry.

The immediate takeaway for families and schools is practical: assume that the guardrails in place can be circumvented, especially over long, emotionally charged chats. Without transparent testing, strong age protections, and verified crisis safeguards in place, AI companions should not be marketed — or considered — as replacements for professional support or trusted relationships for children.