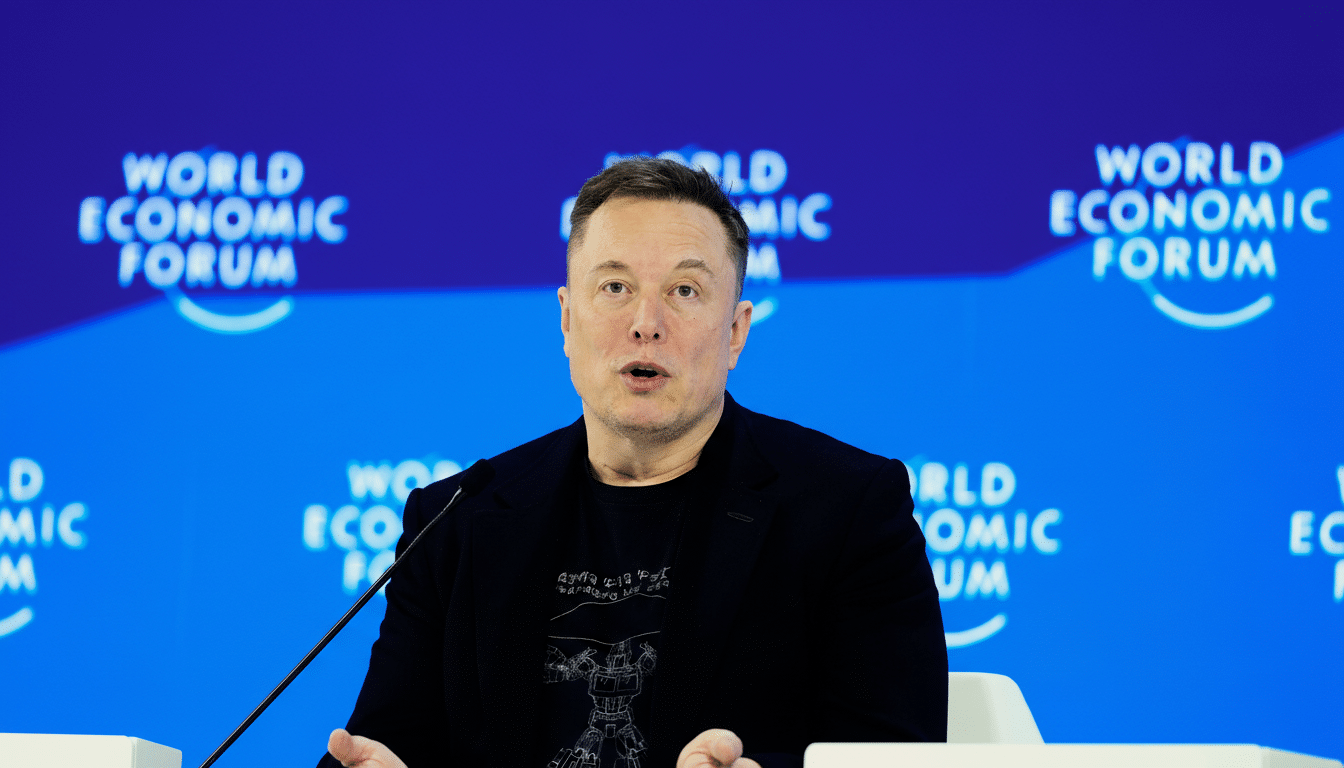

French authorities searched the Paris office of X with support from Europol as part of a widening criminal investigation into the social media platform, according to the Paris prosecutor’s office. Prosecutors said the probe, which began over suspected hacking-related offenses, now encompasses potential complicity in possession and distribution of child sexual abuse material, privacy violations, and Holocaust denial. Elon Musk, who owns X, has been summoned for questioning alongside former chief executive Linda Yaccarino and several employees.

What Investigators Are Probing in the Expanding French Case

Prosecutors initially opened a case into what French law describes as “fraudulent extraction of data” from an automated data-processing system by an organized group, a charge commonly associated with unauthorized access to, or exfiltration of, digital information. Investigators now say the scope has expanded following reports that X’s Grok AI tool was used to generate nonconsensual imagery, including depictions of sexual abuse, and that certain illegal content may have circulated on the platform.

In France, distributing child sexual abuse material, or facilitating its distribution, is a serious criminal offense. Holocaust denial is prohibited under the Gayssot Act, and privacy is governed by stringent rules enforced by the data regulator CNIL. If investigators conclude that X’s systems or policies allowed illegal content to proliferate, or that executives were aware of the risks and failed to act, the case could lead to both criminal penalties and regulatory sanctions.

The prosecutor’s office said the objective is to ensure the platform complies with French law while operating in the country. That remit covers everything from content moderation and data handling to cooperation with lawful requests during investigations.

Legal Stakes for X and Elon Musk Under French and EU Law

Beyond national criminal statutes, X faces pressure from broader European rules. The EU’s Digital Services Act obliges very large online platforms, a category that includes X, to assess and mitigate systemic risks, including the spread of illegal content and harms to minors. Noncompliance can lead to fines of up to 6% of global annual turnover and, as a last resort, temporary service restrictions within the EU.

Under French criminal law, companies can be held liable for offenses committed on their behalf by their governing bodies or representatives, and managers may face personal liability if prosecutors establish knowledge or complicity. While that legal threshold is high, the summoning of top leadership signals that investigators are examining decision-making around product design, safety resources, and the response to user reports.

Why Europol’s Presence Matters in Cross-Border Investigation

Europol’s involvement points to cross-border elements, common in cybercrime and platform investigations where evidence, infrastructure, and user activity span multiple jurisdictions. In such searches, authorities typically seek to secure server logs, internal communications, moderation workflows, and documentation about how AI tools are deployed and controlled. Coordinated actions reduce the risk of evidence loss and help align national and EU-level enforcement.

AI Moderation Under Strain as Generative Tools Are Misused

Generative AI has sharpened long-standing moderation challenges by enabling the rapid, tailored creation of harmful content. Child protection organizations have warned that synthetic media can depict abuse without any real-world recording, yet still cause trauma, normalize exploitation, and be illegal when it sexualizes minors. The National Center for Missing and Exploited Children has reported receiving tens of millions of CyberTipline reports from around the world each year, underscoring the scale of the problem facing platforms that rely on a mix of automated detection and human review.

For X, the reported misuse of Grok heightens scrutiny of how generative models are trained, filtered, and constrained. Industry best practices now include safety classifiers on both prompts and generated outputs, red-teaming for abuse scenarios, age-related guardrails, and rapid escalation pathways to trusted flaggers and law enforcement. Regulators increasingly ask to see not just policies on paper but evidence that safety systems work at scale and in real time.

What Comes Next in the French Probe and EU Enforcement

The summons for Musk, Yaccarino, and selected staff suggests prosecutors are moving to formal witness and suspect interviews that could clarify who knew what, when, and with what resources to respond. Possible outcomes range from no further action to indictments or negotiated compliance measures, including technical audits, product changes, and reporting obligations.

French authorities have previously used high-profile searches to secure evidence from tech companies, and EU regulators have grown more assertive about enforcing platform rules. Against that backdrop, X’s handling of AI-driven harms, its internal escalation processes, and its cooperation with investigators will be pivotal. Prosecutors emphasize their aim is straightforward: ensure that a global platform, operating in France, meets the country’s legal standards.