Figma has acquired Weavy, a young AI company known for its image and video generation tools, and will house the team under a new brand, Figma Weave. The deal adds a 20-person team to Figma and brings model-agnostic media creation directly into the design platform's orbit.

Terms weren't disclosed, and Weavy will continue to operate as a standalone product for now, ahead of deeper integration.

- Why Figma wants Weavy for AI-assisted media creation

- Inside Weavy’s node-based workflow for images and video

- What changes for designers as Figma Weave rolls out

- Enterprise priorities: model choice, policy, and logging

- The broader rush into AI-native design and tool stacks

- What to watch next for Figma, pricing, and content safety

Why Figma wants Weavy for AI-assisted media creation

Design teams are moving from static comps to motion-first storytelling and AI-assisted iteration. Figma already sits at the center of product design workflows, and Weavy gives it a native engine for generating high-quality images and short-form video without forcing designers to bounce across multiple apps.

For Figma's enterprise customers, putting creation and collaboration in one place cuts friction, improves governance, and keeps brand consistency high as assets evolve. The deal is also a bet on controllable AI. Figma has had to tiptoe around the risks of generative features that can mimic copyrighted styles. By bringing in a tool built around fine-grained edits and model choice, Figma can steer users toward safer, more auditable pipelines that produce predictable, editable results instead of black-box surprises.

Inside Weavy’s node-based workflow for images and video

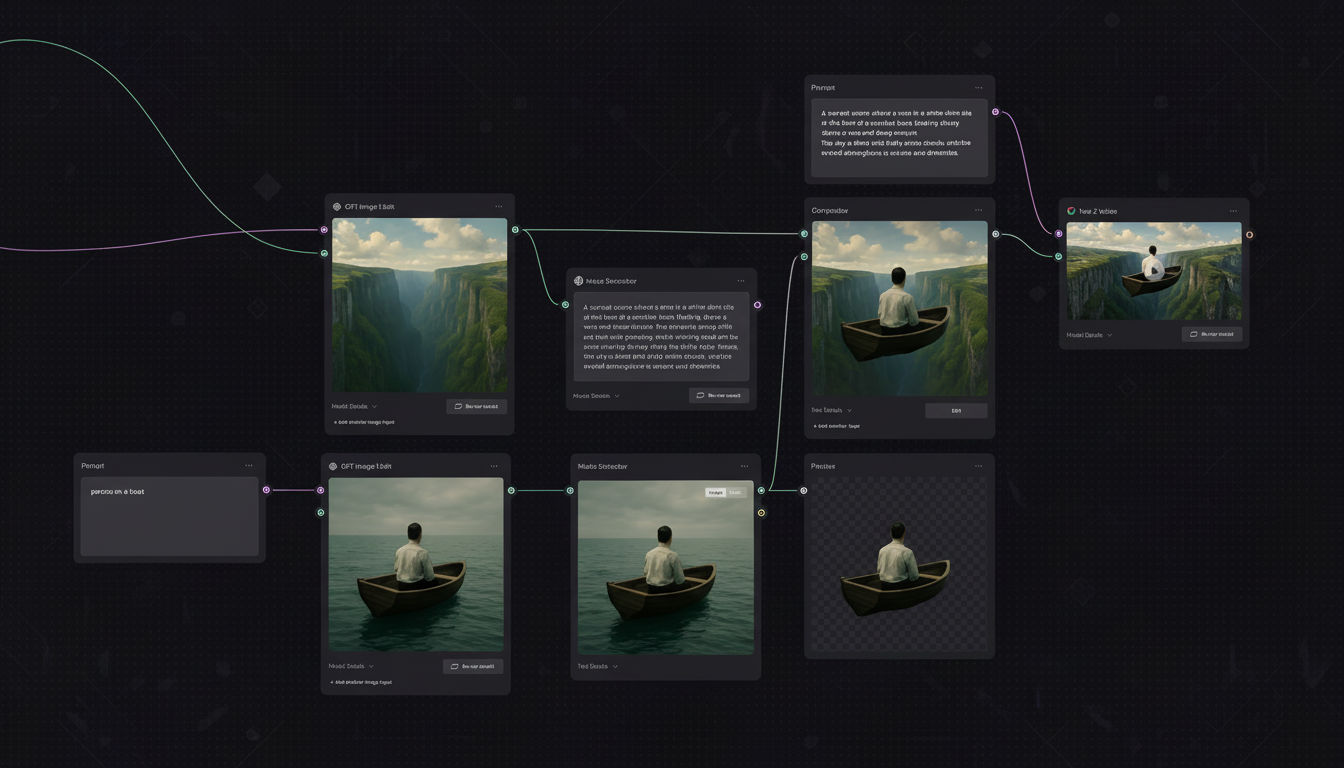

Weavy's interface is built around a node graph on an infinite canvas. Designers can chain prompts, switch models, branch experiments, and remix results, much as VFX artists do in compositing apps such as Nuke or when building shaders in Unreal. The approach is non-destructive: every change is a node, so teams can fork, compare, and roll back without tracking down file versions.

For video, Weavy supports models including Seedance, Sora, and Veo; for images, it offers Flux, Ideogram, Nano Banana, and Seedream. A designer or artist starts with a text prompt, compares results across models, commits to one, and then generates motion from selected stills. Throughout, pro-grade controls allow layer-level edits and adjustments to lighting, colour, and camera, either via prompts or direct swaps.

To illustrate: a brand team can produce product hero shots in Flux, explore different colourways and materials with layered edits, then pass the chosen frame to Veo to create a five-second motion loop. If a stakeholder requests a softer key light or a different background, the change can be made on the same graph while preserving the artist's original direction.
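To make the "every change is a node" idea concrete, here is a minimal sketch of an append-only media graph, assuming a simple parent-pointer design. It is not Weavy's actual data model or API; the class names, operation strings, and parameters are hypothetical, and no real model is called. It shows why such a graph is non-destructive: an edit adds a new node instead of overwriting one, sibling branches can be compared, and any asset's full recipe can be recovered by walking back to the root.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One immutable step in a hypothetical media graph (not Weavy's real data model)."""
    node_id: int
    op: str               # hypothetical labels, e.g. "prompt", "generate_image", "edit"
    params: tuple         # frozen (key, value) pairs so a node can't be changed in place
    parent: int | None    # upstream node; None for the root prompt


class MediaGraph:
    """Append-only graph: every change adds a node, so earlier work is never overwritten."""

    def __init__(self) -> None:
        self._nodes: dict[int, Node] = {}
        self._ids = itertools.count(1)

    def add(self, op: str, parent: int | None = None, **params) -> int:
        if parent is not None and parent not in self._nodes:
            raise KeyError(f"unknown parent node {parent}")
        node = Node(next(self._ids), op, tuple(sorted(params.items())), parent)
        self._nodes[node.node_id] = node
        return node.node_id

    def lineage(self, node_id: int) -> list[Node]:
        """Walk from a node back to the root: the full recipe for that asset."""
        chain = []
        current = node_id
        while current is not None:
            node = self._nodes[current]
            chain.append(node)
            current = node.parent
        return list(reversed(chain))

    def children(self, node_id: int) -> list[int]:
        """Branches forked from a node, e.g. alternative edits to compare or roll back to."""
        return [n.node_id for n in self._nodes.values() if n.parent == node_id]


# Usage mirroring the brand-team example; model names are just labels here.
g = MediaGraph()
prompt = g.add("prompt", text="product hero shot, studio lighting")
hero = g.add("generate_image", prompt, model="Flux")
hard = g.add("edit", hero, key_light="hard", background="grey")
soft = g.add("edit", hero, key_light="soft", background="grey")  # stakeholder tweak as a sibling branch
clip = g.add("generate_video", soft, model="Veo", seconds=5)

print([n.op for n in g.lineage(clip)])  # ['prompt', 'generate_image', 'edit', 'generate_video']
print(g.children(hero))                 # [3, 4]
```

Because the "hard" branch is never deleted, rolling back is just choosing a different node; a production system would also need persistence, merging, and real rendering, which this sketch leaves out.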

What changes for designers as Figma Weave rolls out

In the short term, nothing breaks: Weavy stays a distinct, standalone service. Longer term, Figma Weave is expected to feel native to the canvas, with media generation as a first-class layer: on-canvas nodes, version histories that track AI branches, and hand-offs that preserve editability as an asset moves from idea to production.

A logical next step would be connecting AI outputs to the tokens and components in teams' design systems.

Enterprise priorities: model choice, policy, and logging

Enterprise buyers will pay close attention to model choice and policy controls. Across the board, enterprises stipulate:

- They should choose which models to use based on clear licensing.

- Their in-house or sensitive data repositories shouldn't be used to train models.

- They should be able to log how every asset was created.

A model-agnostic graph helps meet these demands by routing tasks to different engines based on compliance, cost, or visual style.
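As a rough illustration of policy-based routing plus provenance logging, the sketch below picks the cheapest license-approved model that supports a requested style and records which model produced each asset. Everything here is hypothetical: the model names, the `license_approved` flag, the placeholder costs, and the `route` and `log_generation` helpers are illustrative assumptions, not Weavy or Figma features or real vendor pricing.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelInfo:
    name: str
    modality: str            # "image" or "video"
    license_approved: bool   # hypothetical flag set by the customer's own policy review
    cost_per_call: float     # placeholder units, not real pricing
    styles: frozenset[str]


# Hypothetical catalogue; names and numbers are illustrative only.
CATALOGUE = [
    ModelInfo("image-model-a", "image", True, 0.04, frozenset({"photoreal", "product"})),
    ModelInfo("image-model-b", "image", False, 0.02, frozenset({"photoreal", "illustration"})),
    ModelInfo("image-model-c", "image", True, 0.03, frozenset({"illustration"})),
    ModelInfo("video-model-a", "video", True, 0.50, frozenset({"product", "cinematic"})),
]


def route(modality: str, style: str, catalogue: list[ModelInfo] = CATALOGUE) -> ModelInfo:
    """Pick the cheapest license-approved model that supports the requested style."""
    candidates = [
        m for m in catalogue
        if m.modality == modality and m.license_approved and style in m.styles
    ]
    if not candidates:
        raise LookupError(f"no approved {modality} model for style {style!r}")
    return min(candidates, key=lambda m: m.cost_per_call)


def log_generation(log: list[dict], asset_id: str, model: ModelInfo, prompt: str) -> None:
    """Append a provenance record so each asset can be traced to its model and prompt."""
    log.append({
        "asset_id": asset_id,
        "model": model.name,
        "prompt": prompt,
        "created_at": datetime.now(timezone.utc).isoformat(),
    })


audit_log: list[dict] = []
chosen = route("image", "photoreal")  # image-model-b is cheaper but not license-approved
log_generation(audit_log, "hero-001", chosen, "product hero shot")
print(chosen.name)  # image-model-a
print(json.dumps(audit_log[0], indent=2))
```

Filtering on policy first and optimizing cost second means a cheaper but unapproved engine can never be selected by accident; a real deployment would likely weigh style and quality more carefully than a simple tag match.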

The broader rush into AI-native design and tool stacks

Creative tool consolidation is nothing new. Perplexity, for example, acquired the team behind Visual Electric to boost its design capabilities, and Krea disclosed an $83 million fundraise after spinning out of the Naval Research Lab. Investors included Bain Capital, a16z, and Abstract Ventures. Elsewhere, Adobe's Firefly and Canva's Magic Studio demonstrate clear demand for fast, brand-safe media generation within familiar workflows.

Analysts at Gartner and IDC have noted that marketing and design are among the earliest heavy adopters of generative AI. McKinsey has estimated that generative AI could unlock several trillion dollars in economic value each year, with content creation and personalization near the top of the list. That structural tailwind is pushing product teams to adopt multi-model pipelines as standard, moving capabilities once reserved for specialists into node-based systems like Weavy that offer transparency and repeatability.

What to watch next for Figma, pricing, and content safety

The million-dollar questions are how quickly Weavy's node graph makes it into the Figma canvas, what pricing or compute model Figma adopts, and how it addresses IP, safety, and content provenance at scale. If Figma can gracefully incorporate AI generation into its multiplayer design paradigm, it could set a new standard for how product teams prototype, iterate on, and ship media-rich experiences.

For Weavy, the Figma deal means immediate distribution of its technology to millions of designers. For Figma, it is a bet that the future of design is about more than drawing rectangles faster: it is about orchestrating pixels, prompts, and motion on a shared canvas, with software that keeps creation controllable and collaborative.