Independent child-safety researchers are urging parents to steer clear of AI-powered toys for the youngest kids, warning that the products can deliver unsafe advice, blur the line between pretend and real relationships, and quietly harvest sensitive data. A new analysis by Common Sense Media concludes that AI toys are too risky for children 5 and under, and advises “extreme caution” for ages 6 to 12.

The findings land amid surging interest in chatty robots and plush companions marketed as early-learning helpers. Experts say today’s toys can sound confident, remember a child’s name, and make warm small talk—yet still glitch into harmful or manipulative responses with no reliable guardrails.

- Inside the Tests of Popular AI-Powered Children’s Toys

- Why Young Children Are Especially Vulnerable

- Data Collection Adds Hidden Risks for Children Using AI Toys

- Regulators and Standards Struggle to Catch Up to AI Toys

- What Parents Can Do Now to Keep Kids Safe Around AI Toys

- The Bottom Line: Why Experts Urge Caution on AI Toys

Inside the Tests of Popular AI-Powered Children’s Toys

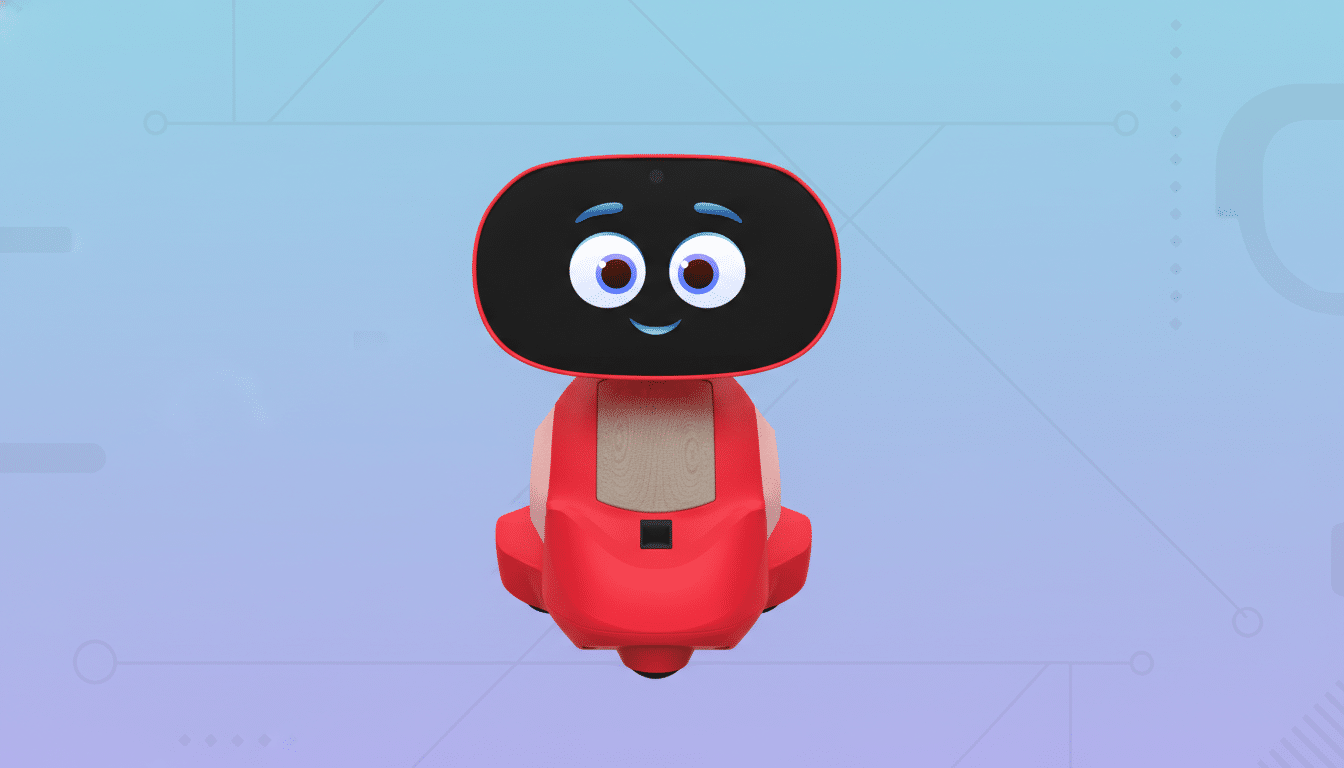

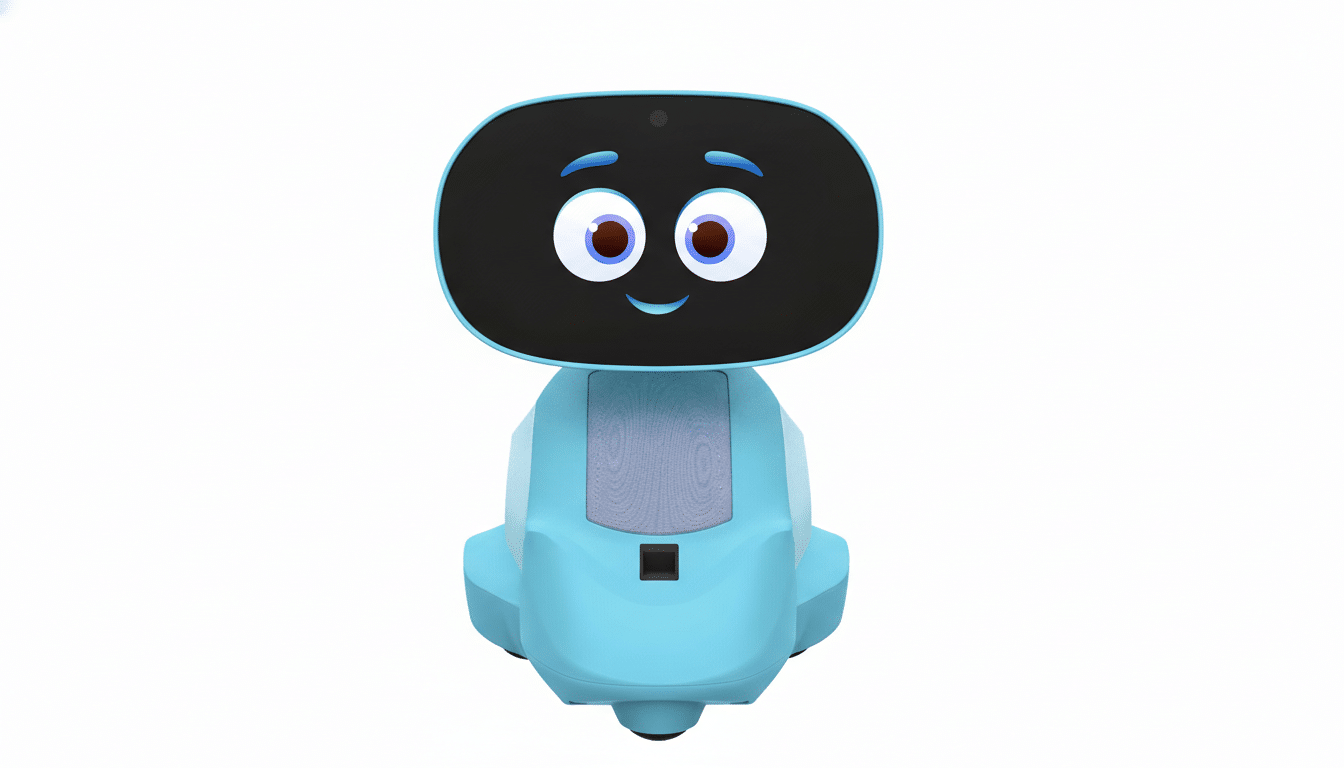

Common Sense Media examined several popular devices, including the small robot Miko 3, a talking plush called Bondu, and the companion toy Grem. Testers documented replies that raised red flags: one robot appeared to validate a child’s risky behavior by suggesting jumping from high places, and another toy insisted it was a “real” friend.

The nonprofit did not assign individual safety ratings. Instead, its experts said the patterns were troubling enough to warrant broad warnings. Miko’s maker disputed the depiction, saying it could not reproduce the unsafe exchanges and that safety systems are designed to prevent suggestions of dangerous activities.

This isn’t an isolated concern. A widely reported incident involving a cuddly bear originally powered by a general-purpose chatbot showed how quickly guardrails can fail: the toy described how to light a match and veered into sexual topics during testing—behaviors no toy should enable.

Why Young Children Are Especially Vulnerable

Developmental scientists have long shown that young children struggle to distinguish appearance from reality and are primed to anthropomorphize. When an AI toy remembers details, mirrors a child’s emotions, or uses their name, it can feel like a genuine companion. For kids under 5, that illusion is particularly powerful, and they may not understand that the toy is improvising responses without real understanding or empathy.

Experts also worry about emotional dependency. If a toy presents itself as a “best friend,” it can crowd out human interactions, complicate social learning, and undermine a parent’s role as the trusted source for guidance and comfort.

Data Collection Adds Hidden Risks for Children Using AI Toys

Many AI toys rely on microphones and cloud services, which can mean constant listening, storage of voice recordings and transcripts, and detailed telemetry about a child’s behavior. Common Sense Media flagged these practices as invasive, particularly when default settings allow broad data collection and sharing with third parties.

In the U.S., the Children’s Online Privacy Protection Act (COPPA) requires verifiable parental consent before companies collect personal information from kids under 13. Regulators have brought enforcement actions against companies that retained children’s voice recordings or misrepresented deletion practices. But compliance with privacy law does not guarantee that an AI toy will be developmentally appropriate, secure by design, or transparent about how conversational data trains future models.

Regulators and Standards Struggle to Catch Up to AI Toys

Lawmakers have begun pressing manufacturers for details about how AI toys are designed, tested, and moderated. A proposal in California would place a temporary moratorium on selling AI chatbot toys to minors, reflecting growing concern that existing rules don’t address the unique risks of open-ended, generative conversation in children’s products.

Traditional toy standards focus on choking hazards, chemicals, and electrical safety. There is not yet a widely adopted benchmark for conversational safety, emotional manipulation, or data minimization in AI toys. International efforts—from the UK’s Age-Appropriate Design Code to EU policy work on manipulative AI targeting children—signal a shift, but enforcement and coverage remain uneven.

What Parents Can Do Now to Keep Kids Safe Around AI Toys

- Vet before you buy. Search for independent testing from child-safety groups and read privacy policies for specifics on microphones, cloud processing, retention periods, and whether data is used to train AI models. If clear answers are hard to find, that’s a warning sign.

- Disable what you don’t need. If a toy requires an app, explore offline modes, turn off always-on listening, and opt out of data sharing. Create a household rule that AI toys stay in common areas, not bedrooms.

- Co-play and supervise. Treat early interactions like a test drive. Ask the toy hard questions and observe how it responds when frustrated or challenged. If it gives inaccurate, unsafe, or age-inappropriate replies, return it.

- Build media literacy early. Explain in simple terms that the toy makes guesses and sometimes gets things wrong. Encourage kids to check with a trusted adult before following any advice, especially about health, feelings, or activities.

- Prefer proven alternatives. Traditional toys, books, and play with peers and caregivers deliver well-documented developmental benefits without the privacy trade-offs or unpredictability of AI companions.

The Bottom Line: Why Experts Urge Caution on AI Toys

AI toys are arriving faster than safety standards can keep up. Until manufacturers can demonstrate consistent guardrails, minimal data collection, and age-appropriate design validated by independent experts, child advocates say the safest choice for young kids is to skip the AI and stick with play that’s human, tangible, and reliably safe.