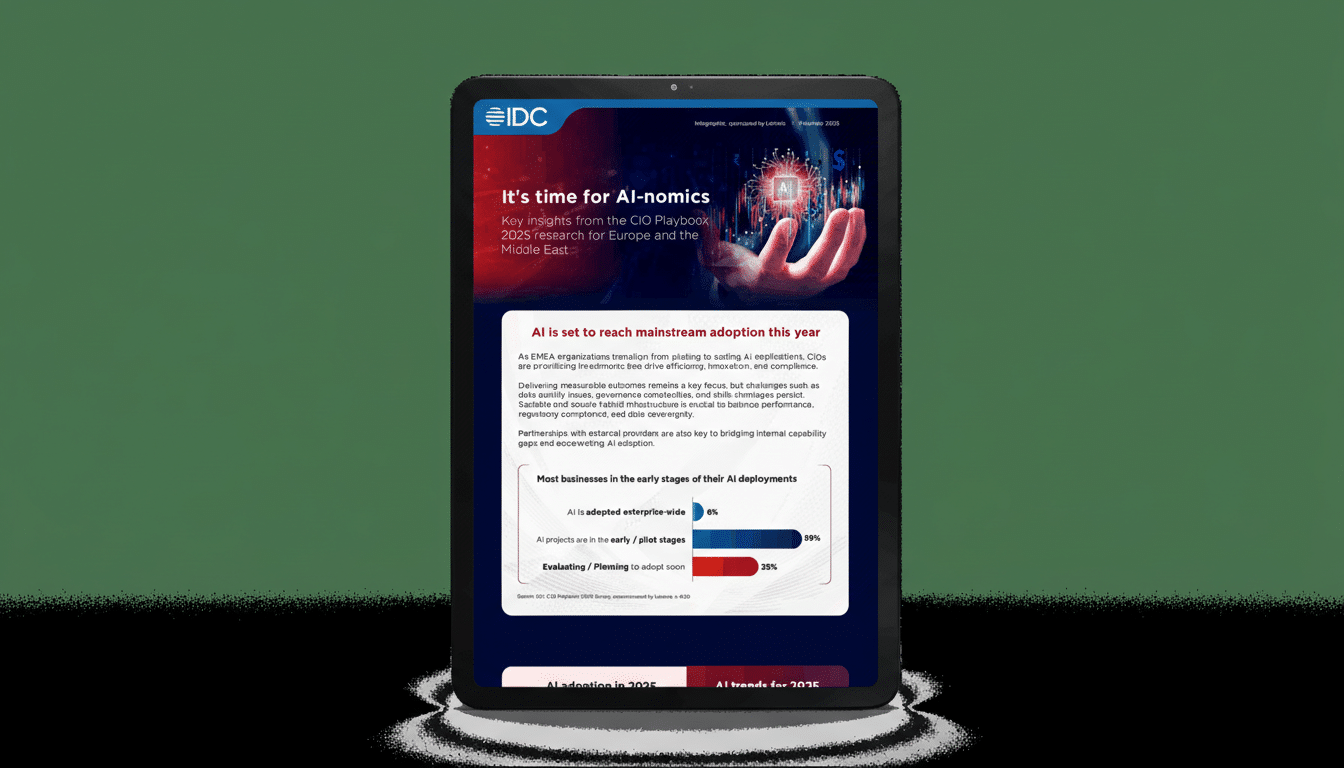

The pilot era is ending. Across industries, executives are shifting from proofs of concept to production AI that moves revenue, cost, and risk metrics—not slide decks. Research from IDC’s latest CIO playbook indicates nearly 60% of organizations are already piloting or systematically adopting AI, yet governance and infrastructure gaps threaten to slow lift-off. Here are five concrete moves leaders can make now to stop testing and start scaling responsibly.

1. Tie AI To Hard Outcomes With Single-Threaded Owners

Start by translating business priorities into a short list of AI use cases with named owners, baseline metrics, and time-bound targets. Pick high-frequency workflows where impact is visible—customer triage, claims adjudication, collections, demand forecasting—and set two or three measurable goals such as first-contact resolution, cycle-time reduction, or revenue per interaction.

Assign a product owner for value and a risk owner for safety. Define AI service-level objectives that matter: response accuracy bands, allowed hallucination rates, latency, and escalation thresholds. McKinsey estimates generative AI could add $2.6T to $4.4T in value annually; capturing a share of that depends on tying deployments to accountable outcomes rather than novelty.
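To make the service-level objectives above concrete, here is a minimal Python sketch of how a team might encode them and check a week of observed metrics. The class name `AiSlo`, the metric keys, and every threshold are illustrative assumptions, not values from IDC or McKinsey.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AiSlo:
    """Hypothetical service-level objectives for one AI use case."""
    min_accuracy: float            # lowest acceptable share of correct answers
    max_hallucination_rate: float  # highest acceptable share of unsupported answers
    max_p95_latency_ms: float      # latency ceiling at the 95th percentile
    max_escalation_rate: float     # highest acceptable share of human handoffs


def slo_breaches(slo: AiSlo, observed: dict[str, float]) -> list[str]:
    """Return the name of every objective the observed metrics violate."""
    breaches = []
    if observed["accuracy"] < slo.min_accuracy:
        breaches.append("accuracy")
    if observed["hallucination_rate"] > slo.max_hallucination_rate:
        breaches.append("hallucination_rate")
    if observed["p95_latency_ms"] > slo.max_p95_latency_ms:
        breaches.append("p95_latency_ms")
    if observed["escalation_rate"] > slo.max_escalation_rate:
        breaches.append("escalation_rate")
    return breaches


# Illustrative targets and one week of made-up measurements.
support_slo = AiSlo(
    min_accuracy=0.90,
    max_hallucination_rate=0.02,
    max_p95_latency_ms=2000.0,
    max_escalation_rate=0.25,
)
weekly = {
    "accuracy": 0.93,
    "hallucination_rate": 0.035,
    "p95_latency_ms": 1800.0,
    "escalation_rate": 0.20,
}
print(slo_breaches(support_slo, weekly))  # ['hallucination_rate']
```

A list of breached objectives gives the product owner and the risk owner the same weekly signal to act on.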

Example: A support organization can target a 10-point improvement in customer satisfaction by combining retrieval-augmented generation with human-in-the-loop triage, measuring deflection, resolution quality, and handoff accuracy weekly.

2. Build Production-Grade Data And Hybrid Infrastructure

Models are only as good as the data path and runtime they ride on. Invest in a production pipeline: governed data products, lineage and quality checks, PII minimization, and robust retrieval evaluation for knowledge-grounded systems. Layer in MLOps/LLMOps for versioning, prompt and model registries, canary releases, and continuous evaluation.

IDC reports 82% of enterprises plan to run AI on-premises or at the edge as part of hybrid environments. The drivers are clear: sovereignty, latency, and cost predictability. Design for portability—mix foundation models from cloud APIs with open-weight models on your own GPUs where data residency, privacy, or unit economics demand it.

Treat cost as a first-class constraint. Implement token budgets, caching, GPU scheduling, and autoscaling. In industrial settings, running vision models at the edge for inspection or safety reduces downtime and bandwidth while keeping sensitive footage local.
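As one way to picture token budgets and caching working together, the sketch below wraps a model call in a small client that serves repeated prompts from a cache and refuses requests that would exceed a daily budget. `BudgetedClient`, `BudgetExceeded`, and `fake_model` are hypothetical names; a real deployment would substitute its own model client and a shared cache.

```python
import hashlib


class BudgetExceeded(Exception):
    """Raised when a request would push usage past the token budget."""


class BudgetedClient:
    """Wraps a model call with a token budget and an exact-match cache."""

    def __init__(self, call_model, daily_token_budget: int):
        self._call_model = call_model   # function(prompt) -> (text, tokens_used)
        self._budget = daily_token_budget
        self.tokens_used = 0
        self._cache: dict[str, str] = {}

    def complete(self, prompt: str, estimated_tokens: int) -> str:
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self._cache:
            return self._cache[key]     # a cache hit spends no tokens
        if self.tokens_used + estimated_tokens > self._budget:
            raise BudgetExceeded(
                f"{self.tokens_used} used, {estimated_tokens} requested, "
                f"budget {self._budget}"
            )
        text, used = self._call_model(prompt)
        self.tokens_used += used
        self._cache[key] = text
        return text


def fake_model(prompt: str):
    """Stand-in for a real model: upper-cases the prompt, counts words as tokens."""
    return prompt.upper(), len(prompt.split())


client = BudgetedClient(fake_model, daily_token_budget=5)
client.complete("reset my password", estimated_tokens=3)  # calls the model
client.complete("reset my password", estimated_tokens=3)  # served from cache
print(client.tokens_used)  # 3
```

The budget check runs before the call, so an over-budget request fails cheaply instead of appearing later as a surprise on the invoice.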

3. Operationalize Governance And Security In The Release Train

Responsible scale is a process, not a policy PDF. Only about 30% of CIOs report having rigorous AI governance in place, while more than half lack an organization-wide approach, according to IDC. Bake risk controls into delivery: pre-deployment model evaluations for bias, toxicity, jailbreak resilience, and privacy leakage; domain-specific guardrails; and red-teaming before and after launch.
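A pre-deployment evaluation gate can be as simple as a table of thresholds checked in the release pipeline. The following Python sketch assumes each evaluation produces a single rate between 0 and 1; the metric names and limits are hypothetical placeholders that a risk owner would set.

```python
# Hypothetical ceilings for each pre-deployment evaluation (lower is better).
RELEASE_GATES = {
    "bias_gap": 0.05,
    "toxicity_rate": 0.01,
    "jailbreak_success_rate": 0.02,
    "pii_leak_rate": 0.0,
}


def gate_failures(results: dict[str, float]) -> list[str]:
    """Return one message per evaluation that is missing or over its ceiling."""
    failures = []
    for metric, ceiling in RELEASE_GATES.items():
        value = results.get(metric)
        if value is None:
            # An evaluation that never ran blocks the release.
            failures.append(f"{metric}: missing")
        elif value > ceiling:
            failures.append(f"{metric}: {value} > {ceiling}")
    return failures


candidate = {
    "bias_gap": 0.03,
    "toxicity_rate": 0.004,
    "jailbreak_success_rate": 0.05,
}
for failure in gate_failures(candidate):
    print(failure)
# jailbreak_success_rate: 0.05 > 0.02
# pii_leak_rate: missing
```

Treating a missing result as a failure keeps the gate from passing a model simply because an evaluation was skipped.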

Anchor governance to recognized frameworks. The NIST AI Risk Management Framework and ISO/IEC 42001 provide structure for roles, controls, and continuous monitoring. The EU AI Act raises the bar on documentation and oversight for higher-risk use cases; plan for model and system cards, impact assessments, and traceable decision logs.

Security needs equal weight. Adopt least-privilege access to data and model endpoints, secrets isolation, content filtering on inputs and outputs, and supply-chain hygiene for datasets and model artifacts. OpenAI and other labs have warned that the risk of AI being weaponized is high; organizations should pair agent capabilities with containment strategies such as rate limits, safe tool-use policies, and environment sandboxes.
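Two of the containment strategies named above, rate limits and tool-use policies, can be combined in a single check that runs before an agent invokes any tool. This is a minimal sketch under stated assumptions: `ToolGate` and the tool names are hypothetical, the limiter is a simple sliding window, and sandboxing is out of scope.

```python
import time
from collections import deque


class ToolGate:
    """Permits an agent to call only allowlisted tools, at a bounded rate."""

    def __init__(self, allowed_tools, max_calls, per_seconds, clock=time.monotonic):
        self._allowed = frozenset(allowed_tools)
        self._max_calls = max_calls
        self._window = per_seconds
        self._clock = clock          # injectable so the gate can be tested
        self._calls = deque()        # timestamps of recently permitted calls

    def permit(self, tool_name: str) -> bool:
        if tool_name not in self._allowed:
            return False             # tool is not on the allowlist
        now = self._clock()
        while self._calls and now - self._calls[0] >= self._window:
            self._calls.popleft()    # forget calls that left the window
        if len(self._calls) >= self._max_calls:
            return False             # rate limit reached
        self._calls.append(now)
        return True


gate = ToolGate({"search_kb", "create_ticket"}, max_calls=2, per_seconds=60)
print(gate.permit("delete_account"))  # False: not allowlisted
print(gate.permit("search_kb"))       # True
```

Denied calls are good candidates for an audit log, which this sketch leaves out for brevity.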

4. Redesign Work And Upskill For Human-AI Teams

AI value shows up when work changes. Map who does what, before and after AI, and set explicit human-in-the-loop moments. Create playbooks for exception handling, rejecting unacceptable outputs, and escalation so frontline staff know when to trust, verify, or override the system. Incentives should reward quality outcomes, not raw automation rates.

Train different cohorts for different needs: developers on secure prompt engineering and evals; analysts on retrieval hygiene and bias checks; managers on interpreting AI metrics and managing risk. IDC notes a 65% surge in organizations preparing for agentic AI (autonomous systems that can plan and act across tools), particularly in security operations, finance workflows, and customer service. Balance ambition with caution: an industry survey found 96% of IT professionals view AI agents as a security risk, underscoring the need for approval workflows, audit trails, and fine-grained permissions.

5. Prove Value Fast, Then Scale On A Shared Platform

Replace endless pilots with short, production-intent launches that prove or disprove value in weeks. When a use case clears the bar, port it to a shared AI platform so teams don’t rebuild the basics. Standardize prompt libraries, retrieval connectors, evaluation suites, guardrails, and policy-as-code once, then reuse everywhere.

Adopt a champion–challenger approach to models and prompts so you can swap components without re-architecting. Instrument every deployment with business and model health dashboards. Pair platform SRE with FinOps to keep reliability and unit costs visible. Organizations that treat AI as a product portfolio—rather than one-off experiments—scale faster and with fewer surprises.
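One common way to run a champion-challenger comparison is to route a fixed share of traffic to the challenger using a stable hash of the request or user ID. The sketch below illustrates that idea only; `pick_variant` and the 10% share are assumptions, and a production router would also record which variant served each request.

```python
import hashlib


def pick_variant(request_id: str, challenger_share: float) -> str:
    """Deterministically assign a request to 'champion' or 'challenger'."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "challenger" if bucket < challenger_share else "champion"


# The same ID always lands on the same variant, so a user's experience stays stable.
assignments = [pick_variant(f"user-{i}", challenger_share=0.10) for i in range(10_000)]
share = assignments.count("challenger") / len(assignments)
print(f"challenger share: {share:.3f}")
```

Because assignment depends only on the ID and the share, raising the share ramps up the challenger without reshuffling the users it already serves.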

The takeaway: Responsible scale is achievable when outcomes, infrastructure, governance, people, and platforms advance together. With hybrid architectures maturing and guidance from bodies like IDC, NIST, and EU regulators sharpening, the window is open to turn AI from a promising pilot into a durable growth engine—without losing control.