Companies hiring their first “AI employees” are discovering a familiar truth from human onboarding: skills aren’t the problem, context is. Even the most capable agent stumbles without the institutional knowledge your people absorb over months. The fix now taking hold is context engineering: a disciplined approach to feeding AI agents the culture, processes, and app logic they need on day one.

Vendors advertise ever-larger context windows, with some models claiming token limits that stretch into the millions. In practice, that’s still a thimble against the ocean of enterprise knowledge. One moderately complex cloud app configuration or a handful of process maps can blow past practical limits. Selecting and shaping what the agent sees — and when — matters more than dumping everything into a prompt.

The goal isn’t to “teach” the model your business; it’s to deliver just enough verified, role-specific context to let it reason accurately, avoid hallucinations, and act within guardrails. That shift is driving a new playbook for AI onboarding.

Why Context Beats Training For AI New Hires

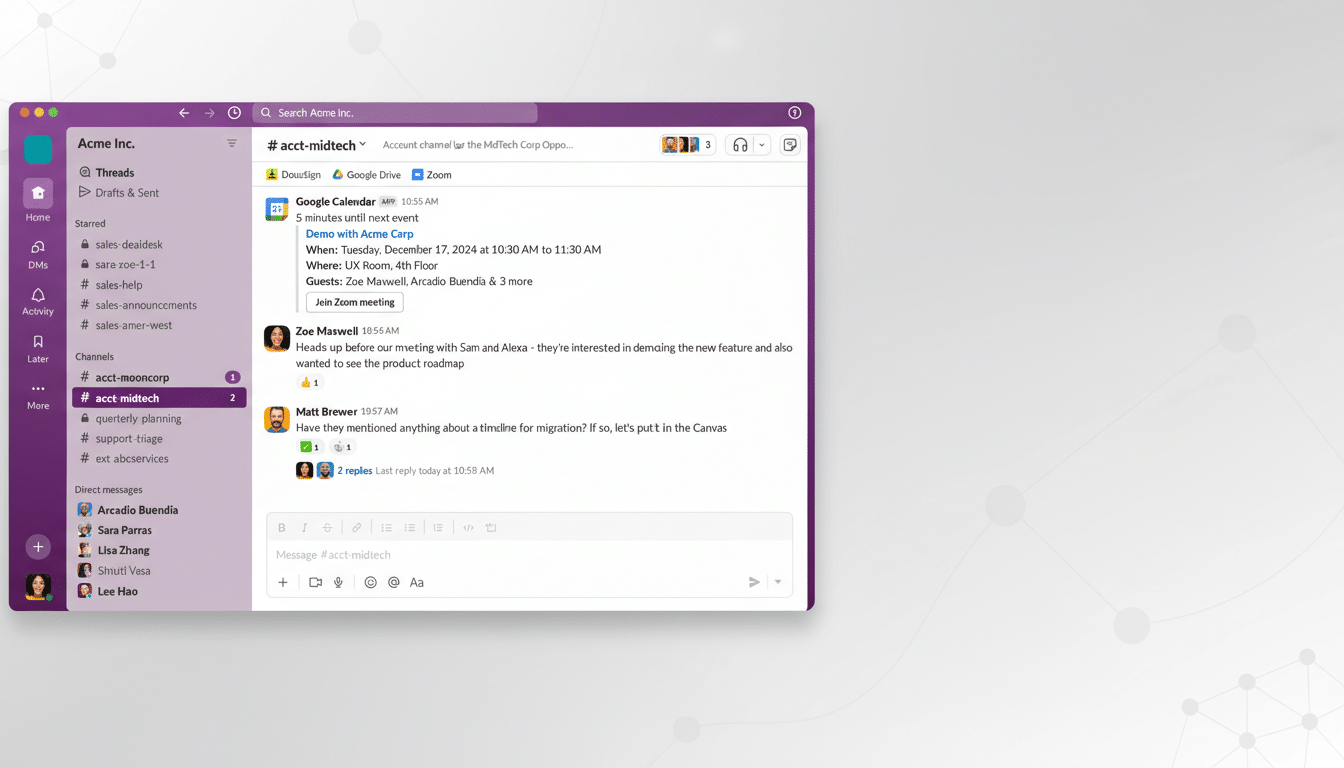

Human teammates use judgment to fill gaps across unstructured artifacts — brand guidelines, tribal norms, offhand Slack debates. AI is improving at parsing unstructured text, but it still fails when sources conflict or rules are implied rather than stated. This is where misfires originate: not weak models, but weak context.

Research communities such as Stanford HAI have shown that retrieval-based grounding improves factuality and consistency compared with naive prompting. Standards bodies like NIST stress that data lineage, provenance, and scope are as critical to trustworthy AI as model choice. In short, the context around your data — what it means, when to use it, and why — is the control surface.

Enterprise architecture underscores the scale challenge. Consider a CRM stack: customer records (structured), entitlement rules (metadata), process diagrams for case management (often outdated), and brand tone guidance (unstructured). Platforms such as Salesforce Data Cloud, MuleSoft, and Tableau exist precisely to wrangle this mosaic. Your AI agent needs the same integration — curated for its job.

A Three-Step Context Engineering Plan For AI

Step 1: Scope the Role and Map the Process. Define one end-to-end outcome for the agent, for example, “resolve priority-2 support cases within entitlement.” Draft a lightweight process map covering inputs, handoffs, SLAs, exception paths, and escalation rules. Identify the minimum viable context for that outcome: which objects, fields, macros, approval policies, and knowledge articles truly matter. Resist the urge to include every related artifact; treat the context window as a product with hard trade-offs.
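
One way to make those trade-offs explicit is to give every candidate artifact an estimated token cost and a relevance score, then fill a fixed budget. The sketch below is illustrative only: the `ContextItem` fields, the scores, the token counts, and the greedy relevance-per-token heuristic are all assumptions, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    name: str
    tokens: int        # estimated token cost, assumed precomputed and > 0
    relevance: float   # hypothetical 0-1 score for the scoped outcome


def select_within_budget(items, budget):
    """Greedily keep the items with the best relevance per token that still fit."""
    chosen, used = [], 0
    ranked = sorted(items, key=lambda i: i.relevance / i.tokens, reverse=True)
    for item in ranked:
        if used + item.tokens <= budget:
            chosen.append(item)
            used += item.tokens
    return chosen, used


# Made-up candidates for the priority-2 support outcome.
candidates = [
    ContextItem("entitlement_matrix", 3000, 0.95),
    ContextItem("macro_library", 5000, 0.80),
    ContextItem("full_wiki_export", 60000, 0.40),
    ContextItem("escalation_rules", 1500, 0.90),
]

chosen, used = select_within_budget(candidates, budget=10000)
print([item.name for item in chosen], used)
```

With these made-up numbers the large wiki export is left out because it does not fit the budget, while the three compact, high-relevance artifacts are kept.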

Concrete example: A service agent embedded in a CRM does not need the entire codebase. It needs the entitlement matrix, macro library, top-decile resolution notes, tone and compliance guidance, and a dependency graph for the 10–20 automations most likely to trigger during case handling. In many orgs, just 20 complex Apex classes can exceed hundreds of thousands of tokens; dependency analysis helps you pull only what intersects the agent’s path.
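
The dependency analysis described above can be sketched as a bounded graph walk from the agent's entry points. The graph contents, the artifact names, and the `max_depth` cutoff here are hypothetical; a real org would derive the graph from its own metadata tooling.

```python
from collections import deque

# Hypothetical dependency graph: each artifact maps to what it calls or triggers.
DEPENDENCIES = {
    "CaseTrigger": ["EntitlementCheck", "SlaTimer"],
    "EntitlementCheck": ["ContractLookup"],
    "SlaTimer": [],
    "ContractLookup": [],
    "QuoteSync": ["PricingEngine"],  # unrelated to case handling
    "PricingEngine": [],
}


def reachable(graph, entry_points, max_depth=3):
    """Breadth-first walk from the entry points, stopping at max_depth hops."""
    seen = set(entry_points)
    queue = deque((node, 0) for node in entry_points)
    while queue:
        node, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the depth limit
        for dep in graph.get(node, []):
            if dep not in seen:
                seen.add(dep)
                queue.append((dep, depth + 1))
    return seen


in_scope = reachable(DEPENDENCIES, ["CaseTrigger"])
print(sorted(in_scope))
```

Only the artifacts on the case-handling path end up in scope; `QuoteSync` and `PricingEngine` are never visited, so their source never competes for space in the context window.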

Step 2: Build Context Pipelines and Guardrails. Treat context as a living dataset. Ingest from sources such as Confluence, ticketing systems, ERPs, and HR systems; normalize and chunk with stable IDs; attach metadata tags for authority, recency, sensitivity, and applicability-by-step. Store in a retrieval layer that supports hybrid search (semantic plus keyword) and, where possible, a knowledge graph to encode relationships across processes, data, and permissions.
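
As a minimal sketch of the "chunk with stable IDs and attach metadata" step, the example below splits a document on blank lines and derives each chunk ID from a hash of its source and text. The `Chunk` fields, the tag values, and the paragraph-level split are assumptions chosen for illustration; the retrieval layer itself is out of scope here.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    source: str
    authority: str    # e.g. "signed_policy" or "wiki_note" (hypothetical labels)
    updated: str      # ISO date of the last review
    sensitivity: str  # e.g. "public", "internal", "restricted"
    steps: tuple      # process steps where the chunk applies


def stable_id(source, text):
    """Derive a deterministic ID so re-ingesting unchanged text yields the same key."""
    digest = hashlib.sha256(f"{source}\n{text}".encode("utf-8")).hexdigest()
    return digest[:16]


def chunk_document(source, body, **tags):
    """Split on blank lines and attach the same metadata tags to every paragraph."""
    paragraphs = [p.strip() for p in body.split("\n\n") if p.strip()]
    return [Chunk(stable_id(source, p), p, source, **tags) for p in paragraphs]


chunks = chunk_document(
    "kb/entitlements",
    "Gold plans include 24x7 support.\n\nSilver plans include business-hours support.",
    authority="signed_policy",
    updated="2024-01-15",
    sensitivity="internal",
    steps=("triage", "resolution"),
)
print([c.chunk_id for c in chunks])
```

Content-derived IDs stay the same across re-ingestion but change whenever the text is edited. Teams that need an ID to survive edits would key on a source path plus section anchor instead, which is a design choice this sketch does not cover.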

Add “context for the context.” For each item, codify when to use it (stage), how much weight to give it (priority), and what to do if sources conflict (tie-break rules). Enforce access controls and redaction policies before retrieval. Many teams succeed with a RAG pattern augmented by tool use: the agent first retrieves, then runs scoped checks (for example, querying the entitlement service) before composing an answer or action.
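
The ordering matters: access filtering happens first, and only then are conflicts resolved among what the role is allowed to see. The sketch below assumes a hypothetical authority ranking and made-up policy items; the tie-break (higher authority wins, then the more recent item) is one possible rule, not the only one.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical authority ranking: a higher number wins a conflict.
AUTHORITY_RANK = {"signed_policy": 3, "knowledge_article": 2, "wiki_note": 1}


@dataclass(frozen=True)
class Item:
    topic: str
    text: str
    authority: str
    updated: date
    allowed_roles: frozenset


def visible_to(items, role):
    """Apply access control before any ranking or retrieval logic runs."""
    return [i for i in items if role in i.allowed_roles]


def resolve(items, topic, role):
    """Pick one item per topic: highest authority first, most recent as tie-break."""
    candidates = [i for i in visible_to(items, role) if i.topic == topic]
    if not candidates:
        return None
    return max(candidates, key=lambda i: (AUTHORITY_RANK[i.authority], i.updated))


# Made-up, conflicting sources on the same topic.
everyone = frozenset({"support_agent", "finance"})
POLICIES = [
    Item("discount_cap", "Wiki: reps may offer up to 15%.", "wiki_note", date(2024, 6, 1), everyone),
    Item("discount_cap", "Signed policy: cap is 10%.", "signed_policy", date(2024, 1, 15), everyone),
    Item("discount_cap", "Signed policy (restricted): cap is 12% for strategic accounts.",
         "signed_policy", date(2024, 9, 1), frozenset({"finance"})),
]

winner = resolve(POLICIES, "discount_cap", "support_agent")
print(winner.text)
```

For the support role, the newer wiki note loses to the older signed policy, and the restricted policy is never considered because it is filtered out before ranking.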

Step 3: Test, Measure, and Iterate. Build task-level evaluations that mirror the job: solve a case with conflicting entitlements, draft a compliant outreach under a complex preference set, or update a quote across two systems. Measure outcome quality, time to resolution, escalation rates, and the fraction of responses grounded in retrieved sources. Red-team for sensitive failures such as over-collection of PII, unauthorized actions, or confident answers with low grounding.
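
The grounding metric can be approximated in several ways. The sketch below uses one simple, assumed definition: a response counts as grounded only if it cites at least one chunk and every cited chunk ID was actually retrieved for that response. The record layout (`cited_ids`, `retrieved_ids`) is hypothetical.

```python
def grounded_fraction(responses):
    """Share of responses whose citations are non-empty and all were retrieved."""
    if not responses:
        return 0.0
    grounded = sum(
        1
        for r in responses
        if r["cited_ids"] and set(r["cited_ids"]) <= set(r["retrieved_ids"])
    )
    return grounded / len(responses)


# Made-up evaluation run with four responses.
eval_run = [
    {"cited_ids": ["a1"], "retrieved_ids": ["a1", "b2"]},              # grounded
    {"cited_ids": [], "retrieved_ids": ["c3"]},                        # no citation
    {"cited_ids": ["z9"], "retrieved_ids": ["c3"]},                    # cites an unretrieved chunk
    {"cited_ids": ["b2", "c3"], "retrieved_ids": ["b2", "c3", "d4"]},  # grounded
]

score = grounded_fraction(eval_run)
print(f"grounded fraction: {score:.2f}")
```

This checks citation hygiene, not whether the cited text truly supports the answer; a stricter evaluation would add a content-level check on top.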

Operationalize the loop with versioned “context packs,” change logs tied to process updates, and drift monitoring. When the business changes, ship a new pack, not a new model. This keeps your AI onboarding aligned with reality without costly retraining.
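
A versioned context pack with basic drift detection might look like the following sketch. The pack layout, the version strings, the policy text, and the fingerprinting scheme (a hash over the sorted items) are all assumptions made for illustration.

```python
import hashlib
import json


def pack_fingerprint(items):
    """Hash the sorted item names and contents so any change yields a new value."""
    canonical = json.dumps(sorted(items.items()), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def build_pack(version, items, change_note):
    """Bundle the items with a version label, a fingerprint, and a change-log note."""
    return {
        "version": version,
        "fingerprint": pack_fingerprint(items),
        "change_note": change_note,
        "items": dict(items),
    }


def has_drifted(pack, current_items):
    """True when the live source content no longer matches the shipped pack."""
    return pack["fingerprint"] != pack_fingerprint(current_items)


# Made-up policy text for a first pack, then a change in the live source.
v1 = build_pack("1.0.0", {"discount_policy": "Max 10% without approval."}, "Initial pack")
live = {"discount_policy": "Max 15% without approval."}

if has_drifted(v1, live):
    v2 = build_pack("1.1.0", live, "Discount threshold raised")
    print(v2["version"], v2["fingerprint"])
```

The model is untouched throughout: a business change produces a new pack version and a change-log entry, which is the loop the paragraph above describes.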

What Good Looks Like In Practice For AI Agents

Picture a sales-ops assistant tasked with generating quotes. Its context pack includes the CPQ rule matrix, discount policy by role, product eligibility tables, playbook excerpts for negotiation tone, and a graph linking customer segments to contract clauses. Before proposing terms, the agent retrieves the customer’s entitlements, confirms approval thresholds, and cites the specific policy paragraph in its recommendation. If sources disagree, the tie-break rule favors the most recent signed policy over internal wiki notes.

The same pattern applies to IT, finance, and HR agents. The differences are the artifacts, not the method: map the outcome, codify the minimal context, automate retrieval with guardrails, and prove performance with job-level evaluations.

The Payoff And Pitfalls Of Context Engineering

Teams that approach AI onboarding as context engineering report faster time to value, fewer hallucinations, and simpler governance. Industry leaders emphasize that value creation hinges less on the frontier model and more on clean handoffs, stable identifiers, and enforceable policies — the same operational rigor that underpins mature data programs.

The risks are predictable: shoveling in stale documents, overloading the window with irrelevant detail, skipping provenance, or letting access controls live only in the app layer. Solve these, and your “AI hire” stops guessing and starts performing like a well-briefed teammate. In an era of agentic systems, context isn’t decoration — it is the job.