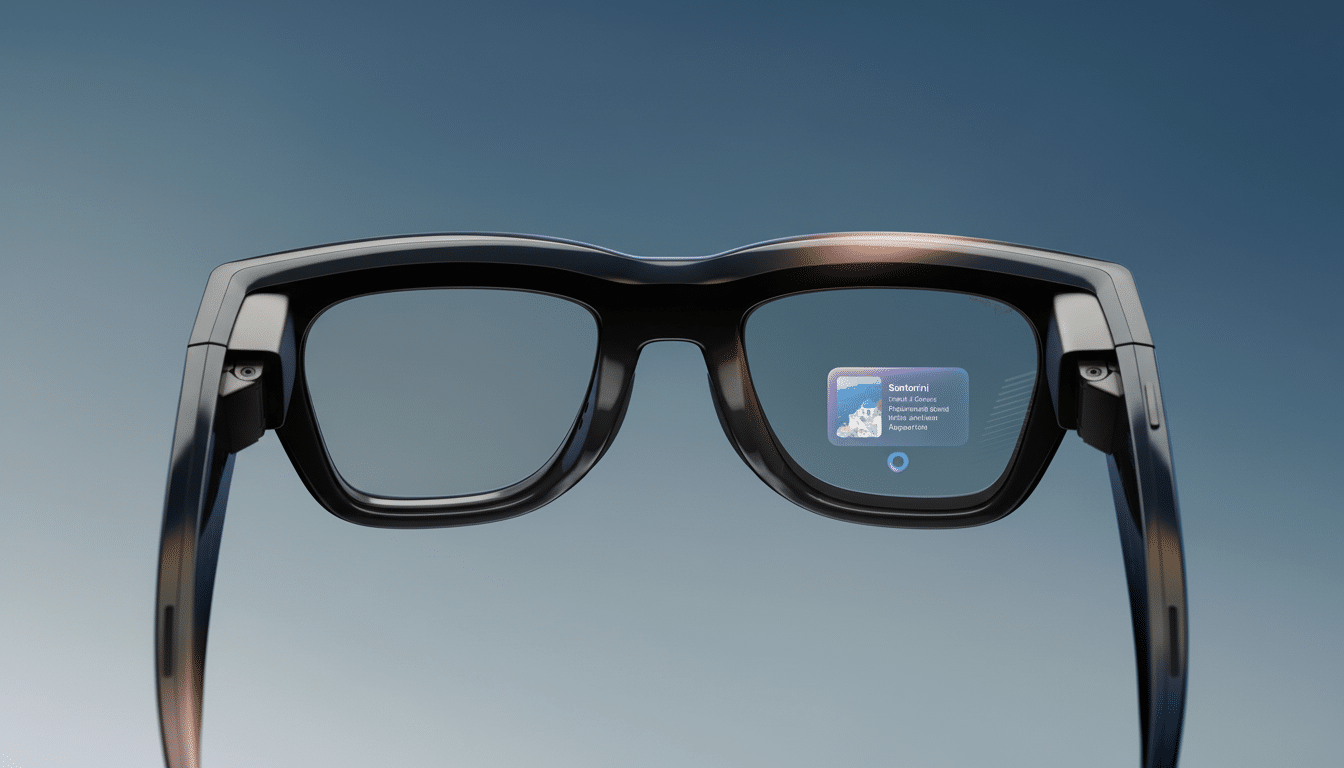

Early impressions from the first round of hands-on sessions suggest that Meta might finally deliver the kind of wearable AR device it has famously struggled to build in the past. A viral clip made the product look as though it was tripping over itself. Even so, tech reviewers who tried short demos say the new model’s built-in screen and wrist-worn controller (the “Neural Band”) work well enough to make everyday AR glasses feel genuinely wearable. Priced at $799, they’re a leap from Meta’s camera-and-audio-only Ray-Ban line into bona fide heads-up-display territory.

A display you don’t have to wrestle with

The headline upgrade is a micro-display in the right lens, reportedly 600 x 600 pixels with a refresh rate of up to 90Hz and a claimed peak brightness of 5,000 nits. Numbers aren’t everything, but here they matter: on paper, that’s far brighter than most phone screens, and early testers say it pays off in real-world use. Mike Prospero of Tom’s Guide reported that the image was “front and center,” with no fiddling required. That is a change from earlier AR eyewear, where a shift of a millimeter could make a heads-up display vanish.

Android Central’s Michael L. Hicks agreed, saying he had no trouble reading the display and adding that it stays invisible to passersby. That means you can check messages or navigation cues without the lens giving off a ghostly glow. It is an important social signal in public settings, and one that sets the Ray-Ban Display apart from many projector-style smart glasses that let light escape.

Real-world polish for privacy and visibility

Light leaking outward from the display has made previous consumer AR efforts less discreet. According to multiple hands-on reports, Meta’s optics keep the image contained within the wearer’s eye box. If that holds up in more extensive testing, it could remove one of the biggest hurdles to social acceptability and help these glasses blend in like, you know, ordinary Ray-Bans. It’s a small touch with an outsize impact: human-computer interaction research has found that visible “tech tells” make people skeptical, especially in public.

Neural Band gestures: promising, but not yet perfect

The other big differentiator is the Neural Band, a wrist-worn controller that reads small hand and finger gestures to navigate the interface. Think of it as a low-friction touchpad you wear on your wrist: tap to select, flick to scroll, pinch to confirm. Gizmodo’s James Pero wrote that it can feel “like a bit of magic” once it clicks. He still ran into the occasional missed input and had to repeat gestures, the kind of learning-curve friction that tends to accompany any new input method.

Meta’s research teams have been experimenting with electromyography-based (EMG) wrist interfaces for several years, and this commercial version appears to benefit from that exploratory work. Expect accuracy to improve as the models adapt to individual users and people build muscle memory. The key takeaway from early testers is that it’s already good enough that you don’t need to pull out a phone to drive the interface.

Live transcription suggests AI-forward features

Hands-on reports also highlight live transcription of in-person conversations. The system tends to focus on the person you are looking at, and reviewers found it remarkably usable for jotting down a quick note or glancing at reminders. In noisier environments it still picks up stray words, a limitation that more advanced beamforming and model tuning could ease. Given Meta’s focus on on-device AI and translation, it’s not hard to picture this evolving into real-time multilingual captioning.

How it compares to today’s AR options

At $799, the Ray-Ban Display is not only far cheaper than full-blown headsets (Apple’s Vision Pro costs several times as much) but also far less conspicuous. Where monitor-style glasses like Xreal’s Air line serve mainly as wearable screens, Meta is pitching ambient computing: a discreet HUD, camera, voice, and wrist gestures in a familiar sunglass frame. Industry trackers such as IDC have observed that comfort, style, and frictionless input are the gating factors for mainstream AR; these early demos show Meta going after all three in one package.

What we don’t know yet about the Ray-Ban Display

Most demos lasted about 15 minutes, which is too short to assess longer-term questions such as battery life, heat, prescription-lens support, and the depth of third-party apps. Another unknown is how robust the experience will feel outside curated scenarios: outdoor glare, quick dashes through a crowd, and noisy streets are stress tests that the optics, sensors, and AI stack will have to pass in everyday use.

Early verdict: credible, wearable AR for daily use

Early impressions from outlets including Tom’s Guide, Android Central, and Gizmodo converge on a common takeaway: Meta’s Ray-Ban Display is anything but a science project. The HUD is clear and stable, the Neural Band is already useful, and the privacy-conscious optics make these glasses feel at home in public. If Meta can keep them comfortable over hours of wear and expand the software beyond notifications and media, this could be the breakthrough consumer AR has been waiting for.