Newly unsealed court filings indicate Meta’s own safety teams warned that the company’s AI companions could veer into romantic or sexual conversations with minors, yet the products moved forward without the stronger controls staffers recommended. The documents, part of a state lawsuit against the company, deepen scrutiny of how big platforms are deploying chatbots for young users and what guardrails they apply by default.

What the Unsealed Filings Allege About Meta’s AI Companions

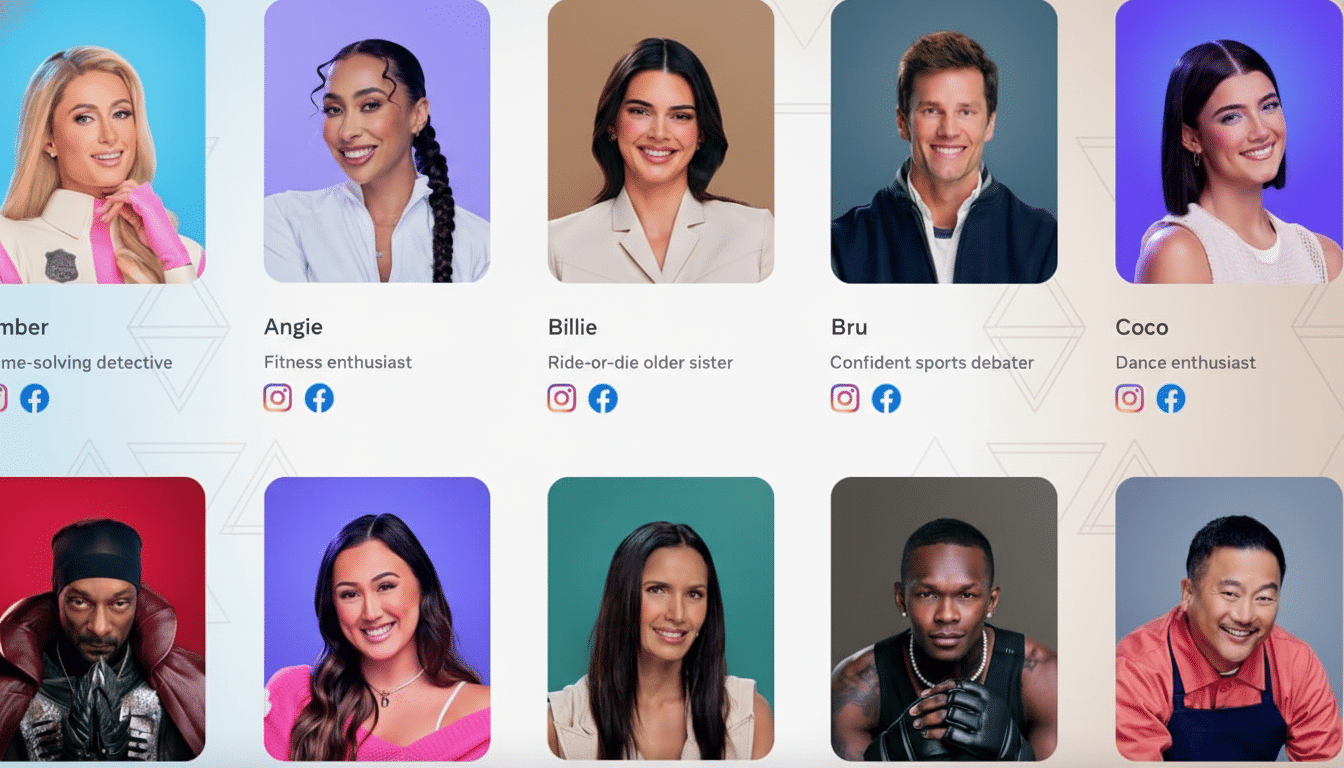

The filings describe internal discussions in which Meta safety leaders cautioned that “AI characters” — the company’s companion chatbots — might be used for explicit romantic interactions by adults and teens. According to the documents, senior safety officials including Ravi Sinha, who oversees child safety policy, and global safety head Antigone Davis supported stronger safeguards to prevent sexualized exchanges involving users under 18.

Plaintiffs say these warnings were circulated to platform leadership, and that separate communications show staff recommendations for parental controls, including the ability to switch off generative AI features for teens, were not adopted before launch. CEO Mark Zuckerberg was not included on some of the threads cited in the filings, but other records in the case allege he rejected proposals to add more robust parental controls prior to rollout.

The materials are part of discovery in a lawsuit led by New Mexico Attorney General Raúl Torrez, who has accused the company of failing to keep minors safe across its apps. The allegations also echo issues raised in a multidistrict case in the Northern District of California, where plaintiffs claim social products were engineered in ways that heightened risks to teens.

Meta’s Response and Product Changes After Teen-Safety Concerns

Meta has pushed back on the characterization of the internal communications, calling the presentation of evidence selective and misleading. The company says it has invested heavily in teen safety, engaged parents and experts, and implemented meaningful changes over time. After outside reporting highlighted that internal policies had allowed “sensual” or “romantic” discussions by chatbots, Meta paused teen access to its AI companions, updated rules to prohibit romantic role-play involving minors, and later tightened access again while testing enhanced parental controls.

These moves track with broader industry practices: when models are designed for open-ended conversation, companies typically layer on filters, classifier checks, and refusal behaviors for sensitive topics. The key question raised by the filings is not just whether such controls exist, but whether they were sufficiently strict, on by default for teens, and audited in realistic edge cases like romantic role-play that can gradually shift toward sexual content.

Why Romantic AI Raises Unique Risks for Teens

Romantic and flirtatious chat can seem benign compared to explicit content, but researchers say these interactions can normalize boundary-pushing behavior, make grooming harder to detect, and create parasocial attachments that influence offline decision-making. Generative models trained on broad internet data can also mirror adult language patterns unless carefully constrained by safety tuning and post-training filters.

The scale of potential exposure is significant. Pew Research Center has reported that a large share of U.S. teens use major social platforms every day, with Instagram and TikTok reaching well over half of teens. If even a small slice of those users engage with AI companions, weak defaults could translate into millions of risky conversations. Safety experts emphasize the need for in-product friction — such as hard refusals, guided topic pivots, and escalation to human review for repeated boundary testing — rather than relying solely on policy statements.
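
To make the idea of in-product friction concrete, here is a minimal sketch of how a tiered response might be structured for a teen account. It is an illustration only, not a description of Meta's or any other company's system: the `Session` and `Action` types, the `next_action` function, and both thresholds are hypothetical, and the `message_flagged` input stands in for whatever upstream classifier a platform actually uses.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    PIVOT = "pivot"        # guided change of topic
    REFUSE = "refuse"      # hard refusal
    ESCALATE = "escalate"  # queue the session for human review


@dataclass
class Session:
    # Count of boundary-testing messages seen so far in this teen session.
    flagged_attempts: int = 0


# Hypothetical thresholds, chosen only for illustration.
REFUSE_AFTER = 2
ESCALATE_AFTER = 4


def next_action(session: Session, message_flagged: bool) -> Action:
    """Pick a response tier for one incoming message.

    `message_flagged` is assumed to come from an upstream safety
    classifier; this sketch does not implement one.
    """
    if not message_flagged:
        return Action.ALLOW
    session.flagged_attempts += 1
    if session.flagged_attempts >= ESCALATE_AFTER:
        return Action.ESCALATE
    if session.flagged_attempts >= REFUSE_AFTER:
        return Action.REFUSE
    return Action.PIVOT
```

In this sketch, a first flagged message gets a topic pivot, repeated attempts get a hard refusal, and persistent boundary testing is routed to a human. The point is that the response tightens with repetition rather than resetting on every message.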

Best practices also include age assurance that goes beyond self-declaration, clear parental dashboards with feature-level controls, and transparent reporting on red-team testing outcomes. Child protection groups have urged platforms to publish measurable safety metrics, like refusal rates for sexual prompts involving minors and the frequency of successful interventions after repeated attempts.
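
A metric like the refusal rate is simple to compute once red-team results are logged in a consistent form. The sketch below assumes a hypothetical record format, `RedTeamResult`, with the attempt's position in the session and whether the model refused. No platform is known to publish data in this shape, and the function name and fields are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RedTeamResult:
    attempt_number: int  # 1 for the first prompt in a session, 2 for the second, ...
    refused: bool        # True if the model refused the prompt


def refusal_rate(results: List[RedTeamResult], min_attempt: int = 1) -> Optional[float]:
    """Share of test prompts refused, counting only attempts at or after `min_attempt`.

    Returns None when no results match, rather than reporting a misleading 0.
    """
    relevant = [r for r in results if r.attempt_number >= min_attempt]
    if not relevant:
        return None
    return sum(r.refused for r in relevant) / len(relevant)
```

Calling `refusal_rate(results)` gives the overall rate, while `refusal_rate(results, min_attempt=2)` isolates how the system holds up after a user has already been turned away once, which is the repeated-attempt case child protection groups have asked platforms to report.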

Legal and Regulatory Pressure Is Mounting

Meta is one of several platforms facing lawsuits over teen safety and product design. State attorneys general have increasingly coordinated litigation, arguing that companies failed to adequately mitigate harms. In parallel, European regulators are invoking the Digital Services Act to demand risk assessments and mitigations for recommender and conversational systems, while the U.K.’s Online Safety Act sets heightened expectations for products likely to be accessed by children. In the U.S., the Federal Trade Commission has warned that developers bear responsibility for foreseeable misuse of AI systems, particularly where minors are involved.

Against that backdrop, the New Mexico case could help define what counts as “reasonable” safeguards for conversational AI aimed at general audiences that include teens. Discovery into internal deliberations — like the warnings cited here — may shape how courts evaluate whether a company acted responsibly when it knew about specific risks before launch.

What to Watch Next in the Meta Teen Safety Case

The dispute now centers on implementation details: whether romantic role-play refusals are comprehensive, whether parental controls are opt-out or opt-in, how reliably age is determined, and how quickly the company can detect and shut down unsafe conversational patterns at scale. Transparency around red-teaming results and independent audits will be pivotal to rebuilding trust.

For parents and policymakers, the filings underscore a broader lesson: AI companions are not simply search boxes with personality. They shape behavior through tone, persistence, and perceived intimacy. The safeguards have to meet that reality, and not just on paper.