Anthropic launches Claude Haiku 4.5: more responsive, less costly, and with stronger guardrails.

The company claims that Haiku 4.5 delivers near-frontier performance on most everyday tasks, matches Claude Sonnet 4 on coding, and runs roughly twice as fast at one-third of the price.

- What’s new in Haiku 4.5: speed, cost, and coding gains

- Pricing and availability for Anthropic’s Haiku 4.5

- Safety claims, guardrails, and evaluation levels for Haiku 4.5

- Market positioning in the lightweight AI model race

- What developers can build with Haiku 4.5 starting today

- The bottom line on Claude Haiku 4.5 performance and value

What’s new in Haiku 4.5: speed, cost, and coding gains

Haiku 4.5 is designed to perform like a big model without the big-model price tag.

According to internal benchmarks released by Anthropic, the model maintains high reasoning accuracy across common enterprise workloads (coding, chat, data extraction, and structured summarization) while being optimized for low-latency use cases that require real-time responses.

The biggest claim here is coding parity with Claude Sonnet 4 at a fraction of the cost. In practice, that means quicker autocomplete and live debugging in IDE copilots, less lag during refactoring, and fewer “stall-outs” when juggling long chains of edits. For teams building AI agents that must make decisions and act in tight loops, the speed bump can translate directly into a better user experience and higher task throughput.
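How much a faster model helps an agent depends on how much of each step is spent waiting on the model. The sketch below assumes a simple sequential loop in which every step makes one model call and one tool call. The step count and latencies are hypothetical placeholders, not Anthropic figures.

```python
# Hypothetical numbers for illustration only; these are NOT Anthropic benchmarks.

def loop_time_s(steps: int, model_latency_s: float, tool_latency_s: float) -> float:
    """Wall-clock time for a sequential agent loop: each step waits on the
    model, then on a tool call, before the next step can start."""
    return steps * (model_latency_s + tool_latency_s)


def tasks_per_hour(steps: int, model_latency_s: float, tool_latency_s: float) -> float:
    """Throughput of a single worker running tasks back to back."""
    return 3600 / loop_time_s(steps, model_latency_s, tool_latency_s)


STEPS = 20            # hypothetical: model calls per agent task
TOOL_LATENCY = 0.5    # hypothetical: seconds spent in tools per step
BASELINE = 2.0        # hypothetical: seconds per call on a slower model
FAST = BASELINE / 2   # "roughly twice as fast"

print(loop_time_s(STEPS, BASELINE, TOOL_LATENCY))     # 50.0
print(loop_time_s(STEPS, FAST, TOOL_LATENCY))         # 30.0
print(tasks_per_hour(STEPS, BASELINE, TOOL_LATENCY))  # 72.0
print(tasks_per_hour(STEPS, FAST, TOOL_LATENCY))      # 120.0
```

With these assumed numbers, halving model latency cuts task time from 50 to 30 seconds, not all the way to 25. Tool time does not shrink, so the real-world gain depends on each workload's mix of model time and tool time.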

Pricing and availability for Anthropic’s Haiku 4.5

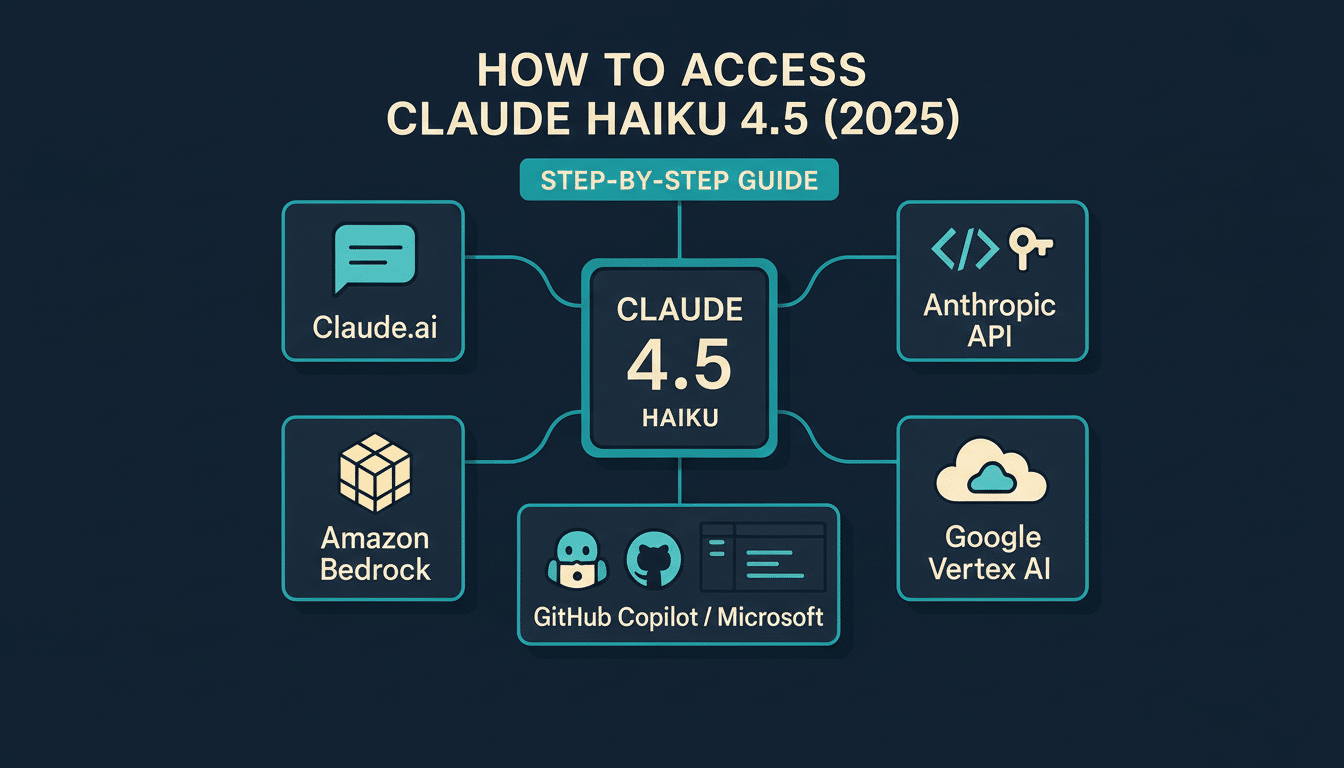

Haiku 4.5 is available in Anthropic’s apps, through the Claude API and Claude Code for developers, and as a managed service on Amazon Bedrock and Google Cloud Vertex AI. The model is not revolutionary, but the price is right: $1 per million input tokens and $5 per million output tokens. That undercuts most mid-tier models and makes Haiku an obvious choice for teams that want an efficient, economical option for high-volume projects.

For businesses running high-traffic chat products, batch analytics, or agentic workflows, the math is simple: lower per-token costs and faster responses mean less infrastructure spend and more room to grow usage without hitting unplanned budget ceilings.
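The per-token math can be sketched in a few lines. Haiku 4.5’s $1/$5 list price comes from this article. Sonnet 4’s $3/$15 per million tokens is its standard list price at the time of writing, which is consistent with the “one-third of the price” claim, but current pricing should be checked before relying on it. The workload volume is a hypothetical example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_mtok: float   # USD per million input tokens
    output_per_mtok: float  # USD per million output tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Total USD cost for the given token counts."""
        return (input_tokens * self.input_per_mtok
                + output_tokens * self.output_per_mtok) / 1_000_000


HAIKU_4_5 = Pricing(1.00, 5.00)   # list price quoted in this article
SONNET_4 = Pricing(3.00, 15.00)   # Sonnet 4 standard list price, for comparison

# Hypothetical workload: 1M requests per month, 1,500 input and 300 output tokens each.
requests = 1_000_000
input_tokens = requests * 1_500
output_tokens = requests * 300

print(HAIKU_4_5.cost(input_tokens, output_tokens))  # 3000.0
print(SONNET_4.cost(input_tokens, output_tokens))   # 9000.0
```

Because both input and output rates are one-third of Sonnet 4’s, the ratio holds for any mix of input and output tokens.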

Safety claims, guardrails, and evaluation levels for Haiku 4.5

Anthropic calls Haiku 4.5 its safest model yet, citing internal evaluations that show lower rates of misaligned behavior, and has released it under its AI Safety Level 2 (ASL-2) standard.

ASL-2 is less strict than ASL-3, which Anthropic applies to some of its most capable models, and suits wide-scale deployment in consumer and enterprise settings with policy-controlled outputs.

The company says it adversarially tested and red-teamed Haiku 4.5 and strengthened its refusal strategies for potentially risky queries. That tracks with a broader industry trend, advocated by frameworks from NIST and oversight bodies such as the UK’s AI Safety Institute, in which structured evaluations and transparent risk categorization are becoming table stakes for deployment at scale.

Market positioning in the lightweight AI model race

Lightweight models are the most competitive corner of the AI market. Anthropic’s pitch puts Haiku 4.5 up against models such as OpenAI’s GPT-4o mini, Google’s Gemini Flash, and efficient models from Meta and Mistral that tout low-latency inference. The differentiator Anthropic is pushing is not only cost but also speed, coding ability, and how reliably the model reasons under pressure.

For product teams, the real question is whether a small model can adequately replace a big one for 80 percent of user interactions. If Haiku 4.5 delivers the advertised coding parity and conversational quality, companies can reserve heavier models for the rare requests that need deep reasoning and route most traffic to Haiku to keep latency and spend in check.
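A minimal sketch of that routing pattern follows. The model labels, the `needs_deep_reasoning` flag, and the length threshold are all hypothetical. A real system might set the flag with a classifier or from the product surface, and would use the provider’s actual model IDs.

```python
from dataclasses import dataclass

# Hypothetical labels; a real deployment would use provider model IDs.
SMALL_MODEL = "small"   # e.g. Haiku 4.5
LARGE_MODEL = "large"   # a heavier reasoning model


@dataclass
class Request:
    text: str
    needs_deep_reasoning: bool = False  # hypothetical flag set upstream


def route(req: Request, max_small_chars: int = 8_000) -> str:
    """Send most traffic to the small model; escalate flagged or very long requests."""
    if req.needs_deep_reasoning or len(req.text) > max_small_chars:
        return LARGE_MODEL
    return SMALL_MODEL


def blended_cost(share_small: float, small_cost: float, large_cost: float) -> float:
    """Average cost per request when `share_small` of traffic goes to the small model."""
    return share_small * small_cost + (1 - share_small) * large_cost


print(route(Request("Summarize this support ticket")))              # small
print(route(Request("Plan the migration", needs_deep_reasoning=True)))  # large
# 80/20 split with a large model assumed to cost 3x per request: about 1.4 vs 3.0.
print(blended_cost(0.8, 1.0, 3.0))
```

The design choice is to default to the cheap, fast path and escalate only on an explicit signal, so the expensive model’s cost scales with the share of hard requests rather than with total traffic.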

What developers can build with Haiku 4.5 starting today

Haiku 4.5 targets real-time, high-volume AI: live chat assistants that can reason over policy and product data without becoming the slow link in the system, IDE coding copilots that keep pace as you edit rapidly, and customer support tools that triage, summarize, and hand off to human agents within moments.

On the data front, teams running transactional workloads on Haiku 4.5 (schema-aware extraction from documents, lightweight classification at scale, and streaming summarization of conversations) are likely to value predictable costs and responsiveness over peak benchmark scores. Because the model is available now on Amazon Bedrock and Google Cloud Vertex AI, enterprises can plug it into their existing observability, key management, and governance pipelines without building bespoke ops.
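For schema-aware extraction, one common pattern is to ask the model for a fixed set of JSON keys and validate the reply before it reaches downstream systems. The sketch below only builds a Messages API-style request body (`model`, `max_tokens`, `system`, `messages`) and validates a reply string. It does not send anything. The model alias `claude-haiku-4-5` is an assumption; Anthropic’s API, Bedrock, and Vertex AI use different model IDs. The invoice schema is hypothetical.

```python
import json

# Assumed model alias; check each provider's model list for the exact ID.
MODEL_ID = "claude-haiku-4-5"

# Hypothetical schema: required key -> accepted Python type(s).
INVOICE_FIELDS = {"invoice_number": str, "total": (int, float), "currency": str}


def build_extraction_request(document: str) -> dict:
    """Messages API-style request body asking for a JSON object with fixed keys."""
    keys = ", ".join(INVOICE_FIELDS)
    return {
        "model": MODEL_ID,
        "max_tokens": 512,
        "system": f"Extract these fields and reply with a single JSON object only: {keys}.",
        "messages": [{"role": "user", "content": document}],
    }


def validate_extraction(raw: str) -> dict:
    """Parse the model's reply and check required keys and types.

    Raises ValueError (json.JSONDecodeError is a subclass) on any mismatch.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected in INVOICE_FIELDS.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(data[key], bool) or not isinstance(data[key], expected):
            raise ValueError(f"wrong type for field: {key}")
    return data


request = build_extraction_request("Invoice A-17, total 42.50 EUR")
reply = '{"invoice_number": "A-17", "total": 42.5, "currency": "EUR"}'
print(validate_extraction(reply)["total"])  # 42.5
```

Rejecting malformed replies at this boundary keeps per-request costs predictable: a failed validation can trigger a single retry instead of propagating bad data downstream.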

The bottom line on Claude Haiku 4.5 performance and value

Claude Haiku 4.5 signals a clear strategy: make small feel large, particularly where speed and dependability are nonnegotiable. At $1 per million input tokens and $5 per million output tokens, and with an emphasis on consistent safety, the model extends Anthropic’s developer-first push into real-time AI. If its claimed performance holds up in independent testing, Haiku 4.5 will be one of the easiest sells for teams looking for fast, affordable, and trustworthy AI at scale.