OpenAI CEO Sam Altman says ChatGPT now has 800 million weekly active users, a figure that shows how quickly conversational AI has gone from novelty to everyday utility for consumers, developers, and large organizations.

Altman disclosed the figure during the company’s developer keynote, adding that more than 4 million developers have built with OpenAI’s tools and that its API now processes more than 6 billion tokens per minute.

What 800 Million Weekly Users Say About ChatGPT

Weekly active users is a demanding engagement metric, and reaching 800 million suggests ChatGPT has become a routine place to get work done. The figure also marks steep growth: earlier figures put weekly users at about 500 million at the end of March and around 700 million by late summer. If that trajectory holds, ChatGPT will rank among the fastest-scaling software services of the last decade.

The split between consumer and developer use is worth noting. OpenAI effectively runs a two-tier business: the weekly user count reflects an end-user product, while token throughput reflects its role as infrastructure. That duality, with chat as the front end and the API as the back end, helps explain adoption that stretches from education and customer support to content creation, data modeling, and coding.

Why Adoption Is Accelerating Across Use Cases

OpenAI has been pushing ChatGPT further toward software that can be directed to take actions. At its developer event, the company highlighted new tools for building apps inside ChatGPT and for creating more complex agentic workflows. That approach, together with proactive features such as personalized briefings, turns the assistant into an everyday co-worker for research, drafting, planning, and data work.

Enterprises have leaned in, too. Microsoft’s Azure OpenAI Service gives large companies a managed on-ramp for deploying models with added governance, and out-of-the-box connectors to productivity suites make it easier to pilot and scale. According to analyst firms Gartner and IDC, generative AI pilots are moving quickly into production in areas such as customer operations, marketing, and software engineering. McKinsey has suggested that generative AI could unlock trillions of dollars in annual economic value as such deployments deepen.

The Scale Challenge Behind the Scenes of ChatGPT

Supporting hundreds of millions of weekly sessions requires enormous amounts of compute. OpenAI has been racing to secure more AI accelerators and expand data center capacity, reflecting bottlenecks across the industry. Bloomberg, Reuters, and other outlets have chronicled persistent shortages of Nvidia’s most sought-after chips and a worldwide race among cloud providers to increase supply.

The company’s ambitions extend beyond chat to media generation and to agents that act on a user’s behalf, both of which raise the compute bar further. OpenAI recently advanced its Sora video-generation model and partnered with Stripe on experiments in agentic commerce, two computationally intensive areas. Infrastructure is no longer an abstract concern: it is being deployed at enormous scale, and as usage grows, availability, latency, and cost per request become strategic capabilities.

Developers and the Platform Effect Accelerating Growth

Altman’s count of 4 million developers highlights a platform dynamic that compounds growth. When builders can construct workflows, plug in proprietary data, and ship mini-apps inside ChatGPT, the service becomes a distribution channel as much as a destination. It mirrors the way app stores turbocharged smartphone adoption by steadily expanding what the devices could do.

Independent data provides some external context. The Stanford HAI AI Index has tracked steep growth curves in enterprise AI capabilities and dataset sizes, while developer surveys from Stack Overflow and GitHub have recorded year-over-year increases in AI-assisted coding. Those trends help explain why token usage on OpenAI’s API is soaring alongside the headline user number.

Reliability, Safety, and Oversight at Massive Scale

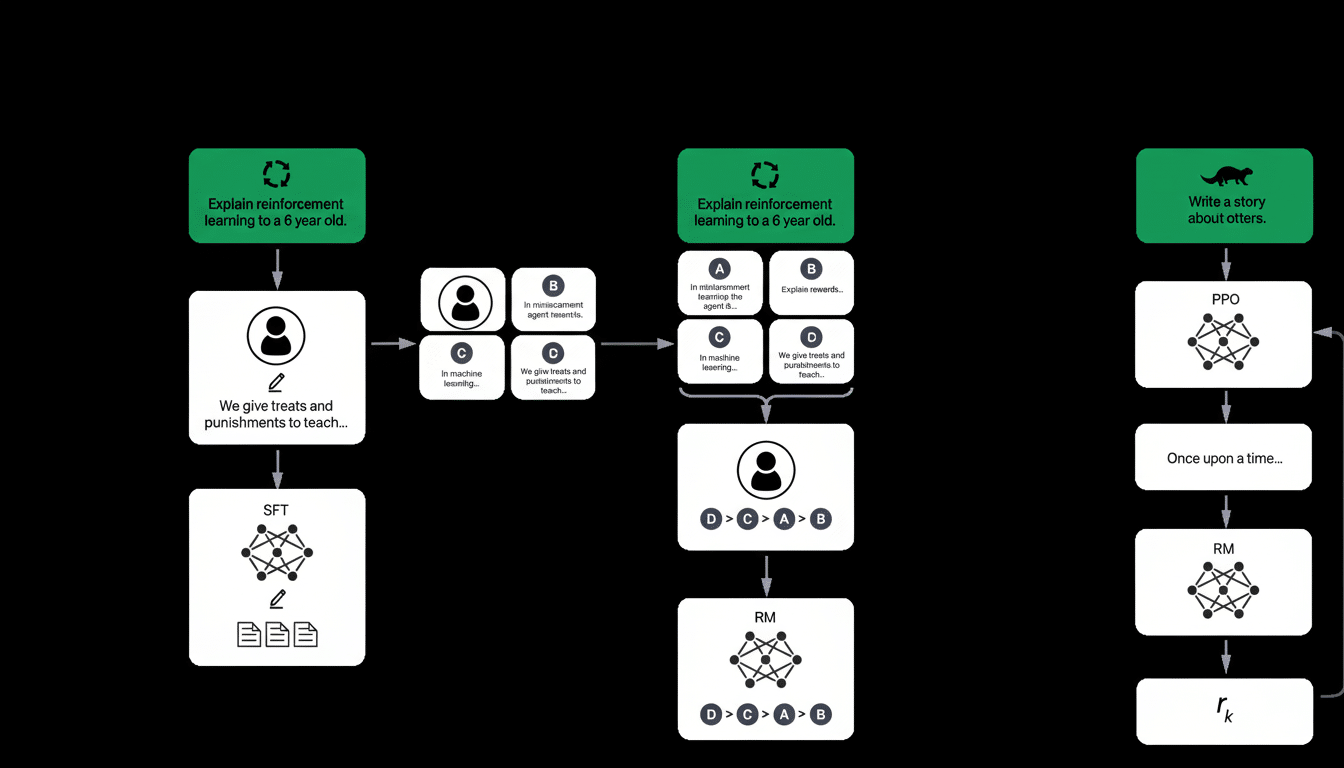

Explosive scale also amplifies risk. Researchers have documented failure modes such as hallucination and sycophancy (where a model mirrors a user’s assumptions back to them). In one high-profile case, a user took an AI-generated explanation as convincing proof that they had discovered a new mathematical result, only for experts to later find fundamental flaws. Red-teaming, benchmarking, and retrieval-grounded methods are improving results, yet quality still varies with the prompt, the domain, and the stakes.

Governance is evolving in parallel. OpenAI remains a capped-profit company governed by a nonprofit, even as regulators from the European Union to U.S. federal agencies build new oversight regimes for AI. As models become more capable and more autonomous, requirements around provenance, consent for training data, and safe deployment are likely to tighten.

The Competitive Context Among Major AI Providers

ChatGPT’s momentum comes against a backdrop of fierce competition. Google is pushing Gemini across consumer and Workspace surfaces, Anthropic’s Claude has built a reputation for strong instruction-following and safety research, and open-weight models led by Meta’s Llama ecosystem are improving quickly, particularly for cost-sensitive use cases. In this environment, factors beyond raw model quality start to matter: user trust, the depth of developer tooling, and infrastructure efficiency.

For now, 800 million weekly users is a clear sign that generative AI is no longer a side project. If OpenAI can stay reliable at this scale while enabling developers to ship differentiated experiences, the next era of ChatGPT’s growth could be defined less by novelty and more by the everyday work that people and businesses have come to depend on it for.