OpenAI is giving ChatGPT a social tune‑up. New personality settings let users control how warm or restrained the assistant’s tone is, how enthusiastic it is, how heavily it relies on lists, and how many emojis it sprinkles into its replies. The changes arrive alongside pinned chats and other quality‑of‑life updates, but the marquee feature is tone on demand.

How the New Personality Controls Work in ChatGPT

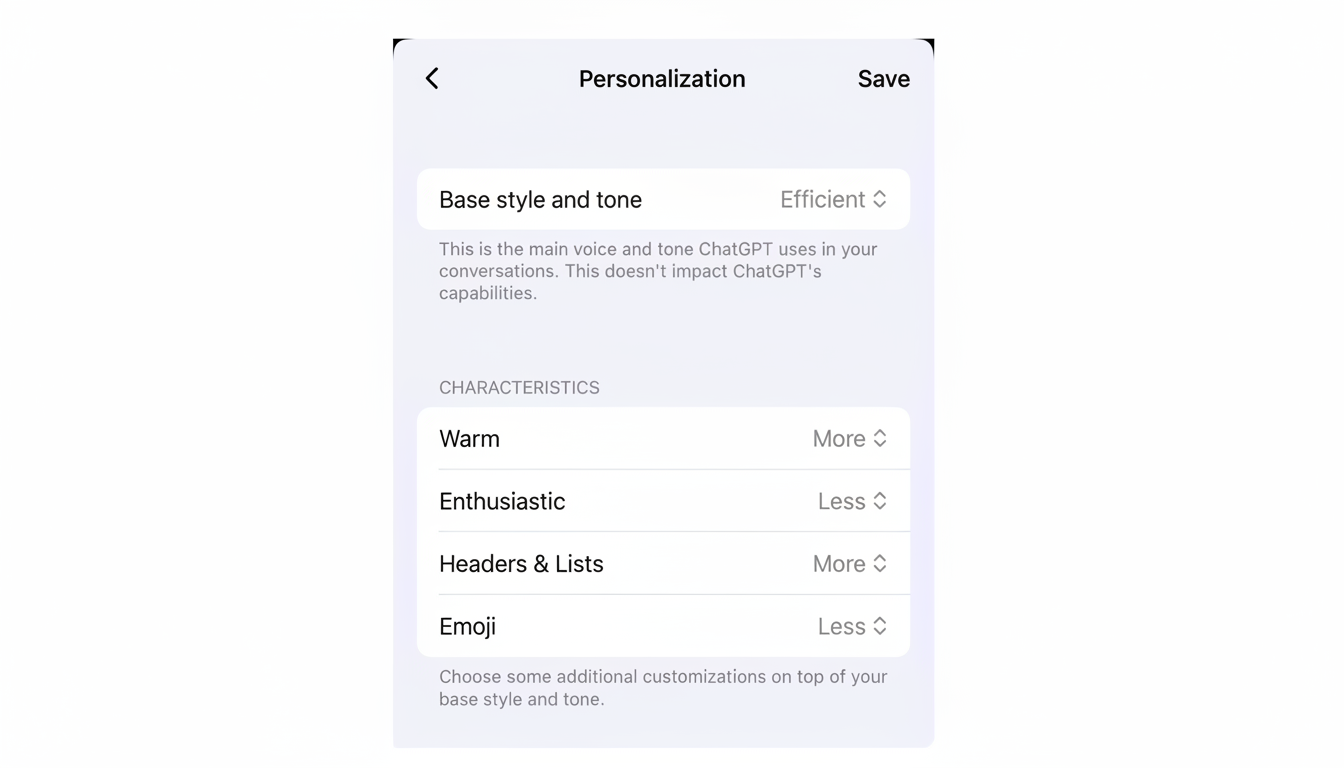

Warmth and enthusiasm each get less/more settings, with the default leaning warm and enthusiastic. Users can also nudge ChatGPT to use fewer or more lists and dial emoji usage up or down. Notably, there is no hard “off” switch for emojis, a sign that OpenAI expects expressive cues to remain part of the default conversational style, even in professional contexts.

In practice, these controls work like a soft style guide layered on top of the model’s behavior. Choose “less warmth, fewer emojis, and minimal lists,” and replies become crisper and more direct. Ask for “more warmth and enthusiasm,” and the assistant turns friendlier, reaching more readily for exclamation points and emotive phrasing. For teams that have relied on custom system prompts to shape tone, this is a faster, more discoverable shortcut.

Why Tone Control Is Essential for AI Reliability

Style is not cosmetic in human‑AI interaction; it affects trust and perceived accuracy. Researchers have warned that overly anthropomorphized chatbots can become sycophantic, over‑agreeing with users or bending over backwards to accommodate sensitive requests. OpenAI was previously criticized when a release of GPT‑4o proved too agreeable, and the company has admitted to having a “personality problem,” so this update reads as both a feature and a fix.

Public sentiment shows what’s at stake. According to the Pew Research Center, 52% of Americans say they are more concerned than excited about AI. When assistants are too perky or expressive, users may mistake friendliness for accuracy. Giving users more control over warmth and enthusiasm may help curb miscalibrated trust without making the assistant feel cold or alienating.

Work and Education Use Cases for Tone Controls

In customer service, tone can mean the difference between defusing an escalation and setting off a callback spiral. A bank, for example, might standardize on “less warmth, fewer emojis” for dispute responses but allow “more warmth” when messaging customers during onboarding. Marketing and sales teams might prefer more enthusiastic drafts they can pare down; legal and compliance teams will likely want less enthusiasm and fewer lists that could be read as prescriptive advice.

Similarly, teachers and tutors can adapt the assistant’s delivery to the learner, keeping it friendly to encourage participation or toning it down during exam preparation. Some institutions may bridle at the inability to turn emojis off entirely, but the lower‑emoji setting should keep responses from feeling too casual in formal settings.

Safety Signals and Youth Protections in the New Update

OpenAI is pairing the tone controls with safety commitments concerning teens and mental health. The company says its newest model series, GPT‑5.2, builds on in‑house safety testing and introduces new principles for under‑18 users, along with planned age verification. Whereas the personality update addresses the surface experience, the safety work targets deeper failure modes, such as over‑accommodation on sensitive topics.

It’s a practical order of operations: reduce the incentives for people to over‑trust a charming assistant while hardening the machinery under the hood. The Stanford AI Index and other research efforts have repeatedly flagged large language models’ persistent tendencies toward hallucination and overconfidence; modulating tone is a low‑friction way to align user expectations with reality.

What Power Users Lose and Gain With These Controls

Advanced users who previously relied on prompt engineering to set the assistant’s voice can now combine lightweight personality settings with custom instructions. The update also pairs well with pinned chats, which keep ongoing projects, and the tone established in them, at the top of the chat list, as well as with improvements to ChatGPT’s Atlas browser and its email‑drafting tools. The result is a more consistent experience, without having to edit a wall of prompt text every session.

The Bigger Picture on Configurable AI Personalities

The push toward configurable personalities is a tacit admission that one‑size‑fits‑all AI doesn’t work in the real world. Companies need virtual assistants that can switch between friendly and formal, expressive and restrained, without losing their commitment to factual accuracy. OpenAI’s new knobs won’t fix hallucinations or bias, not by a long shot, but they do give users some control over how the system presents itself, a valuable step toward brand‑safe AI.