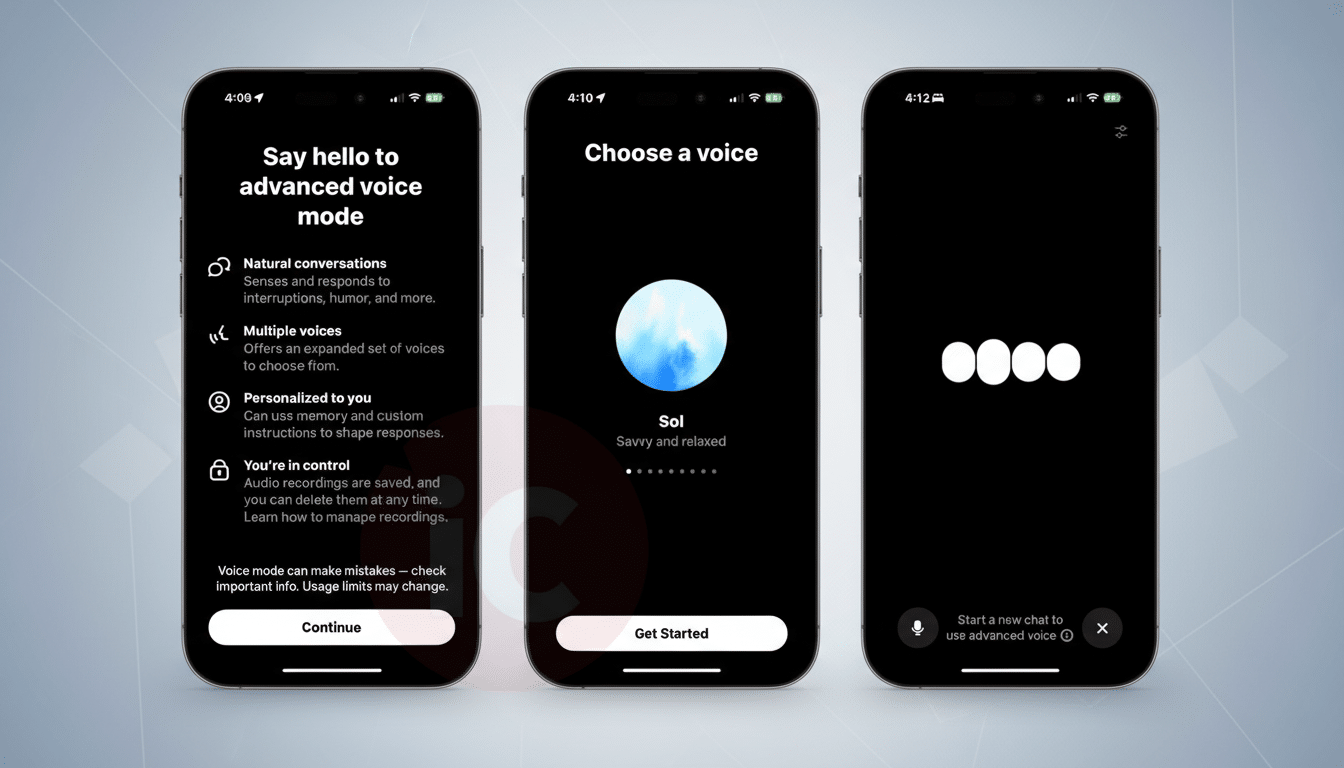

OpenAI is integrating Voice Mode right into the normal ChatGPT conversation window, doing away with the separate orb interface and allowing users to seamlessly transition between speaking and typing within the same thread. The overhaul consolidates three previously disparate elements — live transcripts, inline media, and hands-free prompts — in one place across mobile, web, and desktop.

What changed with ChatGPT’s integrated Voice Mode update

Previously, Voice Mode launched a separate screen with orb-like controls and no visible transcript. Now, voice chat lives inside the standard chat view. While ChatGPT is talking, a live transcript forms in real time, and any maps, photos, or links the assistant suggests appear inline in the chat.

More than just cleaning up the interface, this shift achieves a few things. It takes away context switching, keeps the entire history visible, and prevents you from needing to leave a call-style view to refer back to earlier responses. Those multimodal responses from the assistant are no longer siloed — they’re part of the ongoing conversation thread.

How the new integrated Voice Mode works in ChatGPT

To start Voice Mode, click the waveform icon to the right of the input box. The assistant introduces itself and starts listening when the button turns blue. You can speak, type, or do both, and you can add input via microphone, camera, or file upload. Press End when you’re finished.

Everything remains in a single pane, so you can scroll up and down to confirm quotes, copy text from the transcript, or interact with any media cards that appear.
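One way to picture the single-pane design is as one ordered history in which every turn records how it was entered. The sketch below is purely illustrative and is not OpenAI's implementation: the names `Thread`, `Turn`, and `MediaCard` are hypothetical, and it only models the behaviors the article describes (mixed voice and text turns, a persistent transcript, and media cards attached to replies).

```python
from dataclasses import dataclass, field
from typing import List, Literal, Optional


@dataclass
class MediaCard:
    """Hypothetical inline card (map, photo, or link) attached to a reply."""
    kind: Literal["map", "photo", "link"]
    title: str


@dataclass
class Turn:
    """One entry in the thread; `text` is typed input or a voice transcript."""
    speaker: Literal["user", "assistant"]
    mode: Literal["voice", "text"]
    text: str
    cards: List[MediaCard] = field(default_factory=list)


@dataclass
class Thread:
    """A single history shared by spoken and typed turns (assumed model)."""
    turns: List[Turn] = field(default_factory=list)

    def add(self, speaker: str, mode: str, text: str,
            cards: Optional[List[MediaCard]] = None) -> Turn:
        turn = Turn(speaker, mode, text, list(cards or []))
        self.turns.append(turn)
        return turn

    def transcript(self) -> str:
        # Voice and text turns render into the same scrollable record.
        return "\n".join(f"{t.speaker} ({t.mode}): {t.text}" for t in self.turns)

    def search(self, phrase: str) -> List[Turn]:
        # Case-insensitive lookup across every turn, whatever its input mode.
        needle = phrase.lower()
        return [t for t in self.turns if needle in t.text.lower()]


thread = Thread()
thread.add("user", "voice", "Plan a two-day itinerary.")
thread.add("assistant", "voice", "Here is a two-day plan.",
           [MediaCard("map", "Day one route")])
thread.add("user", "text", "Make day two kid-friendly")
print(thread.transcript())
```

Because spoken turns are stored as text next to typed ones, scrolling back, copying a quote, or searching works the same way regardless of how a turn was entered.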

If you prefer the old experience, the Voice Mode settings include a Separate Mode option that restores the previous standalone interface.

Why integrating Voice Mode into the main chat matters

By bringing voice directly into the main chat, OpenAI makes voice feel less like a bolt-on feature and more like a natural modality. For brainstorming, tutoring, or trip planning, you talk through ideas while the answer forms as text with references and imagery. Live transcripts provide an audit trail, which is useful for revisiting instructions or sharing results with teammates.

There’s also a speed factor. Research from Stanford and Baidu suggests that speech input on smartphones is about 3x faster than typing, so voice is a practical choice, particularly in mobile-first workflows. By pairing that speed with context that stays visible, the redesign is meant to reduce noise without sacrificing clarity.

Performance context and latency for real-time voice chat

OpenAI appears to be closing the latency gap on real-time voice, achieving sub-second response times with its latest multimodal models. That matters for natural turn-taking: interrupting, clarifying, and continuing mid-thought are behaviors that feel awkward once lag sets in. A single integrated view suits this back-and-forth better, with typed interjections and spoken replies all living on one canvas.

The scale is significant. OpenAI said that ChatGPT has more than 100 million weekly active users and that over 92 percent of Fortune 500 companies use the product. Any UI change this fundamental has the potential to influence how and when consumers and teams embrace voice-first workflows, from a simple fact check to a multimodal walk-through for customer support or training.

Real-world scenarios that benefit from integrated voice and text

A traveler can request an itinerary for two days out loud and watch as a map card appears beneath the transcript, then follow with another spoken change — “Make day two kid-friendly” — without ever leaving the thread.

A developer could talk through a stack trace while pasting in the relevant snippet, getting step-by-step fixes and a linked doc card along the way, all captured as text for later reference.

For accessibility, live transcription makes voice sessions less ephemeral than before. Sessions become easier to search and share, and teams can record decisions that would previously have been lost in audio-only tools. The integrated model also reduces cognitive load: one view, one history, multiple input modes.

Availability and user controls for the new ChatGPT Voice Mode

The redesign is launching in the iOS and Android apps, as well as the web and desktop editions of ChatGPT. To begin, press the waveform icon in the input bar. If you prefer the old look, go to Settings, then Voice Mode, and toggle Separate Mode to return to the standalone interface.

Competitive landscape as ChatGPT moves to integrated voice chat

The shift brings ChatGPT in line with a growing push toward multimodal, conversational agents. Google’s Gemini and Microsoft’s Copilot also center voice and vision, but tucking voice right into the main thread, complete with live, persistent transcripts, tightens the loop between speaking, seeing, and doing. It’s a small UI choice with disproportionately large implications for how we actually put AI to use every day.

Bottom line: Voice no longer has to be a departure from ChatGPT. It’s just another way to chat — where the rest of your work already is.