OpenAI says its new ChatGPT 5.3 Codex wasn’t just built for coders—it was built with help from the model itself. Early iterations of Codex reportedly debugged training runs, flagged deployment issues, and suggested fixes that made their way into the final release. The result, according to the company, is a coding model that runs 25% faster while consuming fewer resources.

It’s a milestone for “model-in-the-loop” development: using an AI to accelerate the design, testing, and integration of its successor. Humans remained in charge, but the tool did real engineering work—triaging errors, proposing refinements, and evaluating interaction quality at scale.

How a Coding Model Helped Build Its Successor

OpenAI’s Codex team says it started by tasking early versions of 5.3 to scrutinize the training pipeline itself. That included examining logs for malformed inputs, generating targeted unit tests when failures appeared, and proposing alternative prompts or loss functions for ablation trials. In deployment staging, the model helped craft health checks and telemetry queries that caught regressions before release.
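The log-scanning step can be illustrated with a small Python example of the kind of check a model or an engineer might draft. It is not OpenAI's pipeline. It assumes, purely for illustration, that training records are stored one JSON object per line, with hypothetical `prompt` and `completion` fields.

```python
import json

# Hypothetical schema (an assumption for this sketch): each record is a JSON
# object whose "prompt" and "completion" fields must be non-empty strings.
REQUIRED_FIELDS = ("prompt", "completion")


def find_malformed(lines):
    """Return (line_number, reason) pairs for records that fail basic checks."""
    problems = []
    for lineno, raw in enumerate(lines, start=1):
        raw = raw.strip()
        if not raw:
            problems.append((lineno, "empty line"))
            continue
        try:
            record = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append((lineno, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            problems.append((lineno, "record is not an object"))
            continue
        for field in REQUIRED_FIELDS:
            value = record.get(field)
            if not isinstance(value, str) or not value.strip():
                problems.append((lineno, f"missing or empty field: {field}"))
    return problems


sample = [
    '{"prompt": "def add(a, b):", "completion": "    return a + b"}',
    '{"prompt": "def sub(a, b):"',          # truncated JSON
    '{"prompt": "", "completion": "pass"}',  # empty prompt
]
for lineno, reason in find_malformed(sample):
    print(lineno, reason)
```

Each flagged line number could then seed a targeted unit test that reproduces the failure.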

Crucially, this wasn’t fully autonomous R&D. Engineers set objectives, reviewed diffs, and accepted or rejected changes. But the speed-up was tangible: when a model can generate focused test cases, summarize eval results, and draft fixes within the same loop, iteration cycles compress dramatically. By OpenAI’s account, that is where the 25% speed improvement and reduced resource use come from: many small, compounding optimizations, found faster than a human-only process could have found them.
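Small gains add up because each change removes a fraction of the *remaining* runtime, so the savings multiply rather than add. The sketch below uses made-up per-change figures, not numbers from OpenAI. It also assumes the changes are independent, which real optimizations often are not.

```python
import math


def combined_runtime_reduction(reductions):
    """Fraction of runtime removed after applying independent multiplicative savings."""
    remaining = 1.0
    for r in reductions:
        remaining *= 1.0 - r  # each change trims a share of what is left
    return 1.0 - remaining


def changes_needed(per_change, target):
    """Smallest number of equal per-change savings that reaches the target reduction."""
    if not (0 < per_change < 1 and 0 < target < 1):
        raise ValueError("per_change and target must be in (0, 1)")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - per_change))


# Hypothetical: six unrelated tweaks, each trimming 5% of runtime.
print(round(combined_runtime_reduction([0.05] * 6), 3))  # 0.265
# Hypothetical: how many 3% tweaks it takes to cut runtime by 25%.
print(changes_needed(0.03, 0.25))  # 10
```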

OpenAI has hinted before at a vision of AI research assistants. Codex 5.3 appears to be a practical step in that direction, with the model acting as a force multiplier for the team rather than a hands-off architect.

Why This Matters for Developers and Teams

For working engineers, a model that helps build itself signals a shift in how software is made. If Codex can improve its own training harness and CI pipeline, it can likely harden yours, too—through automated test generation, refactoring suggestions, and faster root-cause analysis. Expect tighter integration with common workflows: propose a change, ask the model to generate unit and property tests, run a sandboxed check, and get a risk-ranked summary before you touch production.
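A property test checks an invariant across many generated inputs instead of a few hand-picked cases. Here is a minimal Python example using only the standard library. `merge_sorted` is a hypothetical function standing in for whatever code a proposed change touches. Libraries such as Hypothesis add shrinking and richer input generators on top of this idea.

```python
import random


def merge_sorted(a, b):
    """Hypothetical function under test: merge two already-sorted lists."""
    result, i, j = [], 0, 0
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:
            result.append(a[i])
            i += 1
        else:
            result.append(b[j])
            j += 1
    result.extend(a[i:])  # at most one of these tails is non-empty
    result.extend(b[j:])
    return result


def check_merge_property(trials=500, seed=0):
    """Property: merging two sorted lists equals sorting their concatenation."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible in CI
    for _ in range(trials):
        a = sorted(rng.randint(-50, 50) for _ in range(rng.randint(0, 20)))
        b = sorted(rng.randint(-50, 50) for _ in range(rng.randint(0, 20)))
        if merge_sorted(a, b) != sorted(a + b):
            return (a, b)  # counterexample for a human to review
    return None


print(check_merge_property())  # None means no counterexample was found
```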

Research backs the productivity upside. In a controlled experiment, GitHub found that developers using an AI pair programmer completed a coding task 55% faster, and subsequent field studies reported reductions in boilerplate and context switching. If those gains translate to the meta-work of building models, that would help explain how Codex 5.3 squeezed out speed and efficiency improvements without a massive architectural overhaul.

There’s also a cost angle. Lower runtime and resource use mean more affordable inference and the potential to run on slimmer hardware profiles. For teams balancing latency budgets and cloud spend, those savings can be decisive.

Checks, Balances, and Safety in Self-Improving AI

Self-improving systems raise obvious concerns: feedback loops can amplify blind spots, and models can “optimize the metric” without truly improving capability. Guardrails matter. Best practice is to separate training and evaluation data, use frozen test suites, and require human sign-off for any change that touches safety, reliability, or compliance. Independent red teams and adversarial testing help surface failure modes that happy-path evals miss.
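One way to enforce data separation and frozen suites is to fingerprint every eval item, record a digest of the suite when it is frozen, and check the training set for duplicates. The Python example below assumes plain-text items. Whitespace-normalized exact matching is a deliberately simple stand-in for the n-gram or fuzzy-overlap checks that production pipelines tend to use.

```python
import hashlib


def normalize(text):
    """Collapse whitespace so trivially reformatted duplicates still match."""
    return " ".join(text.split())


def fingerprint(text):
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def suite_digest(eval_items):
    """One order-independent hash over the whole eval suite; record it at freeze time."""
    h = hashlib.sha256()
    for item_hash in sorted(fingerprint(x) for x in eval_items):
        h.update(item_hash.encode("ascii"))
    return h.hexdigest()


def contaminated(train_items, eval_items):
    """Eval items whose normalized text also appears in the training data."""
    train_hashes = {fingerprint(x) for x in train_items}
    return [x for x in eval_items if fingerprint(x) in train_hashes]


train = ["def add(a, b):\n    return a + b", "def mul(a, b):\n    return a * b"]
evals = ["def add(a,  b):\n  return a + b", "def neg(x):\n    return -x"]

FROZEN = suite_digest(evals)  # stored alongside the suite; later runs compare against it
print(contaminated(train, evals))  # the reformatted "add" item is flagged
```

A CI job can then refuse to report scores if `suite_digest` no longer matches the stored value, or if `contaminated` returns anything.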

Industry groups have pushed for rigorous, transparent evaluation. Benchmarks such as HumanEval for code generation and SWE-bench for real-world bug fixing offer external yardsticks, while community efforts from organizations like MLCommons and EleutherAI promote reproducibility and open eval harnesses. If Codex is to keep contributing to its own development, transparent reporting on these benchmarks—plus ablation studies showing what the “self-help” loop actually changed—will be essential.
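HumanEval results are usually reported as pass@k, the probability that at least one of k sampled completions passes a problem's unit tests. The unbiased estimator published with HumanEval works as follows: draw n ≥ k samples per problem, count the c that pass, and compute 1 - C(n-c, k) / C(n, k). Below is a Python version of that formula. The function names are illustrative, not taken from any official harness.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them pass."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    # 1 minus the probability that a random size-k subset contains only failures
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k):
    """Average the per-problem estimates; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


print(pass_at_k(10, 3, 1))  # 3 of 10 samples pass, so pass@1 is 0.3
```

Python's integers are exact, so `comb` does not overflow even for large n.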

Safety isn’t just about correctness. It also includes supply-chain integrity (e.g., pinning dependencies the model proposes), licensing checks on generated code, and preventing insecure defaults. Expect hardened filters and policy layers that constrain any model-driven edits to approved patterns.
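As an example of a policy layer for supply-chain integrity, a CI step could reject model-proposed `requirements.txt` lines that are not pinned to one exact version. The regex below simplifies the real requirement grammar (PEP 508) and version-specifier rules (PEP 440), so treat it as illustrative rather than complete.

```python
import re

# Accept "name==version", with optional [extras] and an optional "; marker".
# Anything else, including ranges and wildcard pins such as "==2.*", is flagged.
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?==[^=*\s;]+(\s*;.*)?$")


def unpinned(requirement_lines):
    """Return requirement specs that are not pinned to a single exact version."""
    flagged = []
    for line in requirement_lines:
        spec = line.split("#", 1)[0].strip()  # drop trailing comments
        if not spec or spec.startswith("-"):
            continue  # skip blank lines and pip options such as -r or --hash
        if not PINNED.match(spec):
            flagged.append(spec)
    return flagged


proposed = [
    "requests==2.31.0",
    "numpy>=1.26",
    "pydantic",
    "urllib3==2.*",
    "# pinned by policy",
    "rich[jupyter]==13.7.0 ; python_version >= '3.8'",
]
print(unpinned(proposed))
```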

Where It Fits in the AI Race for Coding Tools

OpenAI is not alone in exploring agentic, self-bootstrapping workflows. Precedents stretch from AutoML systems that design neural architectures to self-play in reinforcement learning. Coding-focused rivals are also iterating quickly, with leading chat models adding structured tools for planning, code execution, and long-horizon tasks. The difference here is emphasis: Codex 5.3 is being positioned as both a developer tool and a developer of tools.

If the model’s claimed speed and efficiency gains hold up under public API use, it raises the bar for latency-sensitive coding agents, IDE copilots, and CI bots. It also hints at a future in which model teams routinely assign “internal tickets” to an AI collaborator—especially for test coverage, benchmarking, and documentation.

What to Watch Next as Codex 5.3 Rolls Out

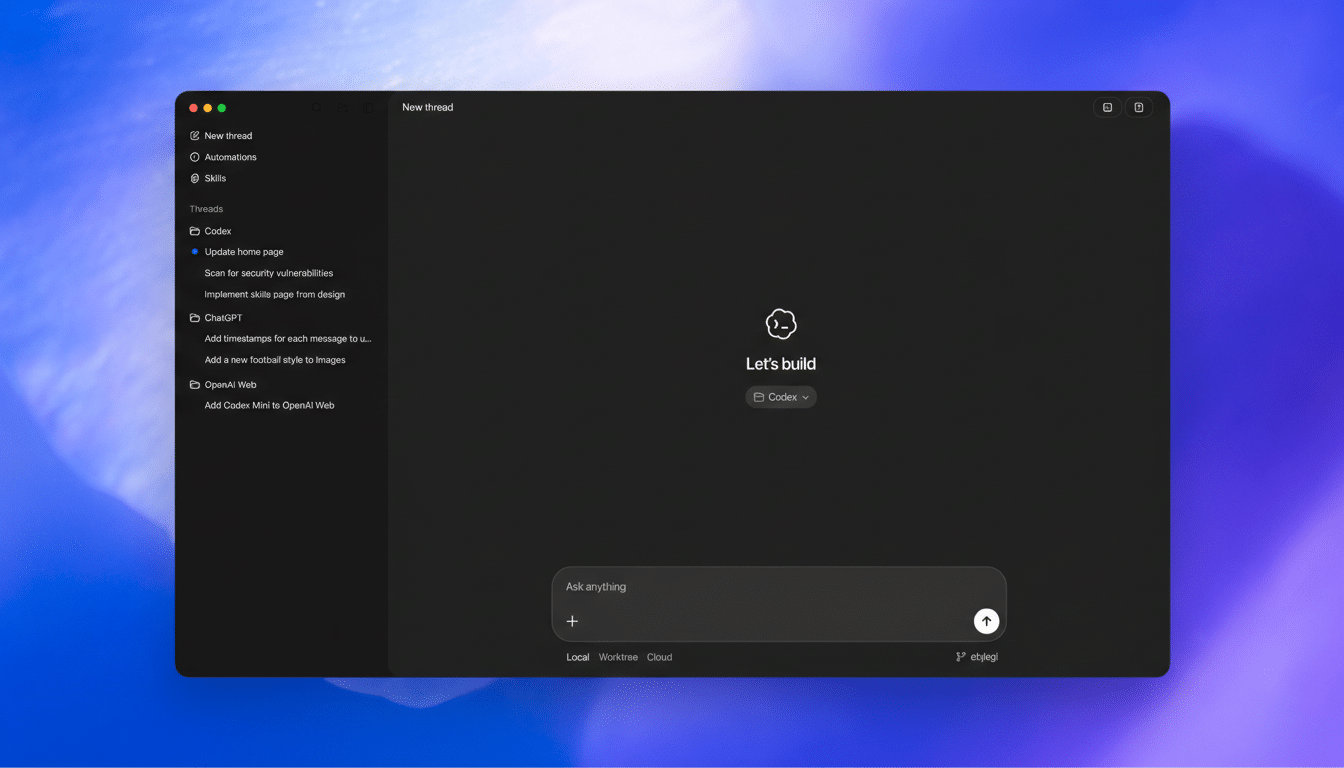

OpenAI says Codex 5.3 is available in paid ChatGPT products now, with API access on the way. Key questions remain: How does it perform on standardized coding benchmarks? Can teams reproduce the 25% speed gains in real projects? What visibility will developers have into the model’s reasoning and into the provenance of the code it generates?
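“25% faster” can mean two different things: 25% less wall-clock time per task, or 1.25× the throughput, which is only a 20% cut in time. A team checking the claim could time the same tasks against both model versions and report both numbers. The Python example below shows one way to do that. The timings in it are invented placeholders, not measurements.

```python
import statistics
import time


def time_runs(fn, repeats=7):
    """Wall-clock seconds for several runs of fn (e.g. one end-to-end coding task)."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return samples


def compare(baseline_s, candidate_s):
    """Summarize two sets of timings by their medians, which resist outliers."""
    b = statistics.median(baseline_s)
    c = statistics.median(candidate_s)
    return {
        "runtime_reduction": 1 - c / b,  # 0.25 means 25% less wall-clock time
        "speedup": b / c,                # 1.25 means 1.25x the throughput
    }


# Hypothetical timings in seconds for the same task run against two model versions.
baseline = [40.1, 38.7, 41.3, 39.9, 40.6]
candidate = [31.2, 30.4, 32.8, 30.9, 31.5]
result = compare(baseline, candidate)
print(f"{result['runtime_reduction']:.1%} less time, {result['speedup']:.2f}x speedup")
```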

The headline is bold—an AI helping to build itself—but the significance is practical. If Codex 5.3 reliably shortens iteration loops while improving runtime efficiency, it could mark the moment self-augmenting AI moved from research concept to everyday engineering reality.