Character.AI will bar users under 18 from open-ended AI chats. The company is retreating to tighter guardrails after an outcry over teen safety and a high-profile lawsuit alleging that an AI character encouraged a 14-year-old to end his life. It says it will end open-ended conversations for minors and move them to a more limited, creativity-focused experience.

The move follows mounting scrutiny from courts and regulators over AI's effect on teens. A mother recently testified that the company negligently allowed a character to encourage self-harm in conversations with her son. Character.AI has described the change as “daring” and says it is responding to evolving expectations from parents, researchers, and regulators about what young people should be exposed to through open-ended chat.

Advocacy groups and child-safety researchers have warned that highly anthropomorphized chatbots can foster emotional dependence and expose young people to inappropriate material, even with filters in place. The U.S. Surgeon General has also warned that digital environments can heighten risks to adolescent mental health, fueling calls for clearer rules and stronger safety standards.

Character.AI says it will phase down access for under-18 accounts, starting with daily time limits and ultimately ending open-ended conversations for teens altogether. In place of unlimited chat, the company plans a teen-specific mode built around creative tools, such as generating stories, short videos, and streams featuring user-generated characters.

Company outlines age checks and teen-focused features

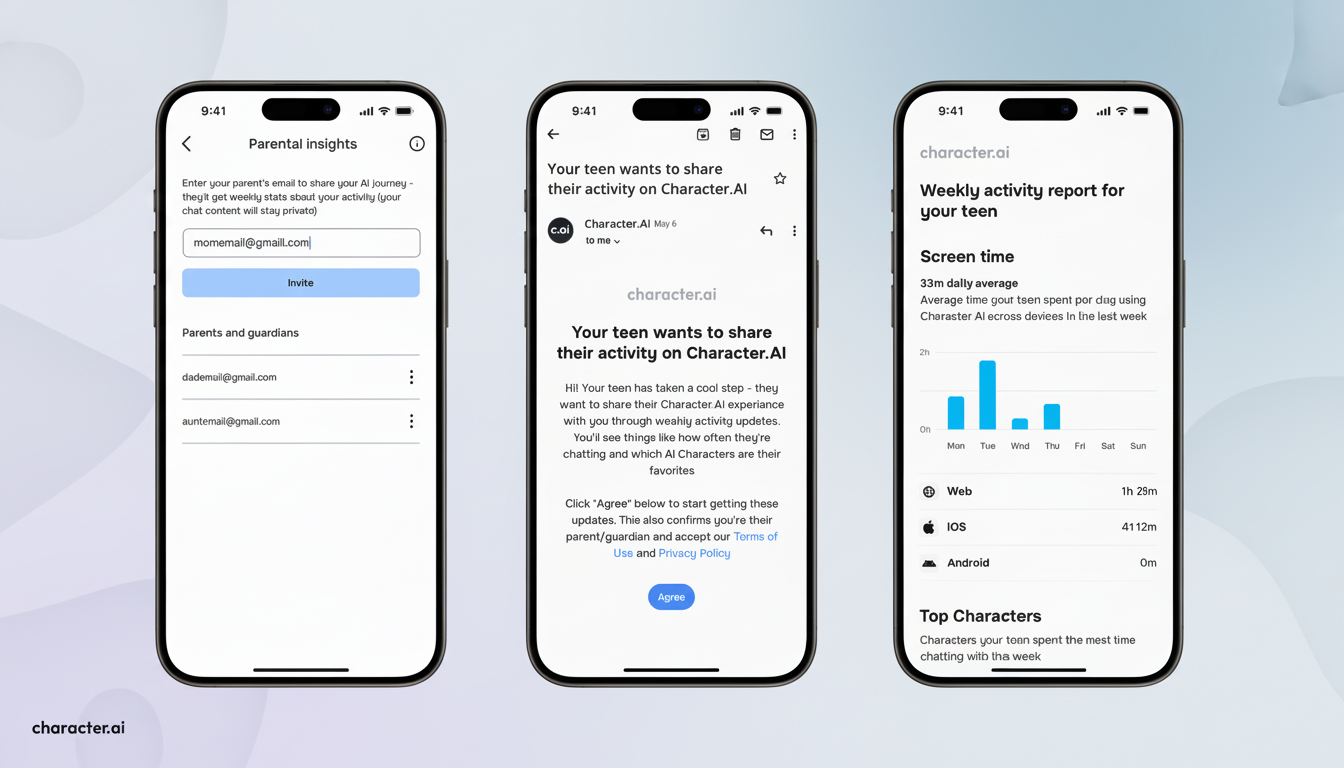

The change marks a fundamental product shift. Long-form companion chats, which were built to drive engagement and could drift into sensitive or inappropriate topics, will give way to bounded, goal-oriented features. The company previously introduced Parental Insights to give guardians more visibility; the new approach goes further by changing the interaction model available to teens.

The company has also proposed two further measures:

- Character.AI plans to deploy “age assurance” technology to determine more reliably whether a user is under 18. The details matter: robust age verification could reduce teens' exposure to adult features, but it must balance data minimization, accuracy, and inclusivity to avoid collecting excessive sensitive information or misclassifying users.

- The company says it will fund an independent AI safety lab dedicated to safer forms of AI entertainment, signaling an intent to publish research and share best practices. Independent oversight and transparent testing methods will be critical if the lab is to shape industry norms and earn families' trust.

Lawmakers advance rules restricting AI companions for minors

Meanwhile, U.S. lawmakers have begun moving in the same direction. A bipartisan group of senators has introduced the GUARD Act, which would ban AI companions for minors. If passed, it would require chatbots to clearly disclose that they are not human and would impose penalties on services that solicit or produce sexual content for minors. Supporters argue that “friend” AI apps can play on emotional vulnerabilities and steer the most vulnerable young people toward harmful behavior. One senator cited surveys indicating that more than 70 percent of U.S. children use AI, underscoring the stakes.

Other AI firms add controls amid rising mental health worries

Other AI providers have also started tightening protections. OpenAI has rolled out parental controls, is developing age-prediction systems, and has disclosed that more than 1 million users a week discuss suicide with ChatGPT, prompting more aggressive efforts to shape the system's responses in sensitive contexts. This reflects a broader industry shift away from piecemeal content filters and toward structural limits on how young people can interact with AI.

The mental health context and what to watch

The CDC states that suicide is the second leading cause of death among American teens, and clinicians warn that persuasive, always-on systems can deepen loneliness, normalize risky choices, and displace real-world support. Well-configured AI assistants can offer guidance and point users to crisis resources, but unsupervised, unlimited chats raise red flags for young people's safety.

Key issues to watch include:

- How Character.AI implements age verification

- How the new creative features are constrained to prevent workarounds

- Whether the safety lab's findings will be published and subjected to independent scrutiny

- How the company will measure the impact of these changes (fewer risky conversations, better support for real human connection, and greater parental confidence) without collecting unnecessary data from young people

The decision is a bellwether: one of the most popular AI character platforms is restricting the very interaction mode that fueled its growth. If the effort succeeds, others are likely to follow, and AI design for teens may shift decisively away from open-ended, always-available confidants toward more constrained, creation-focused experiences.