AI was not just the backdrop at CES this week; AI was the show. From Nvidia’s next leap in computing and AMD’s push into AI PCs to Razer’s bold experiments and the demands of a more connected future, the floor turned into a proving ground for how software intelligence melds with physical hardware.

The clearest trend was a move toward “physical AI”: devices, robots and assistants that can sense, plan and act. It’s a quiet yet significant shift from screen-bound demos to products that operate in homes, on job sites and in car dashboards.

- Nvidia leads the way with Rubin and real-world AI

- AMD bets big on AI PCs and divergent open partnerships

- Razer’s AI gambits, in full concept mode at CES 2026

- Robots and the emergence of physical AI across sectors

- Automotive technology and assistants find their voice

- Quirky standouts you’ll be hearing about after CES

- Why it matters: the practical stakes for physical AI

Nvidia leads the way with Rubin and real-world AI

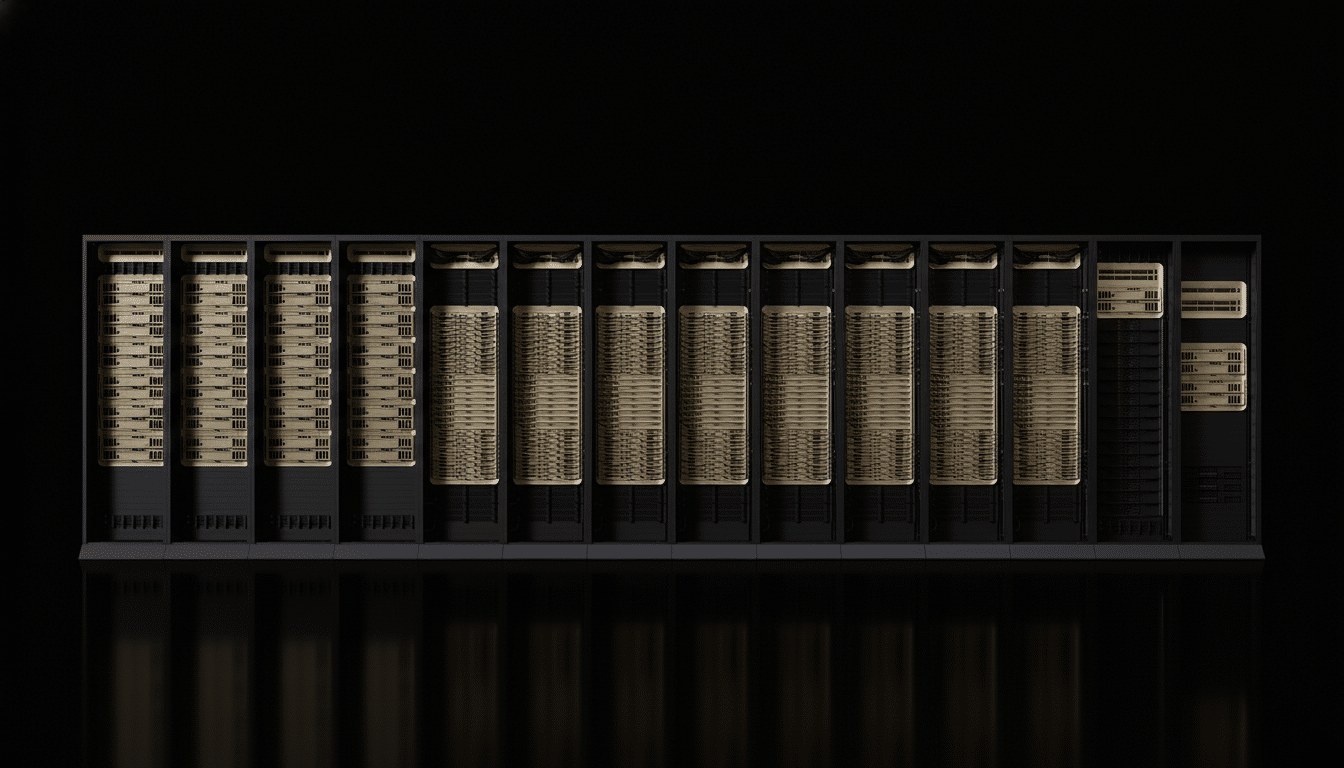

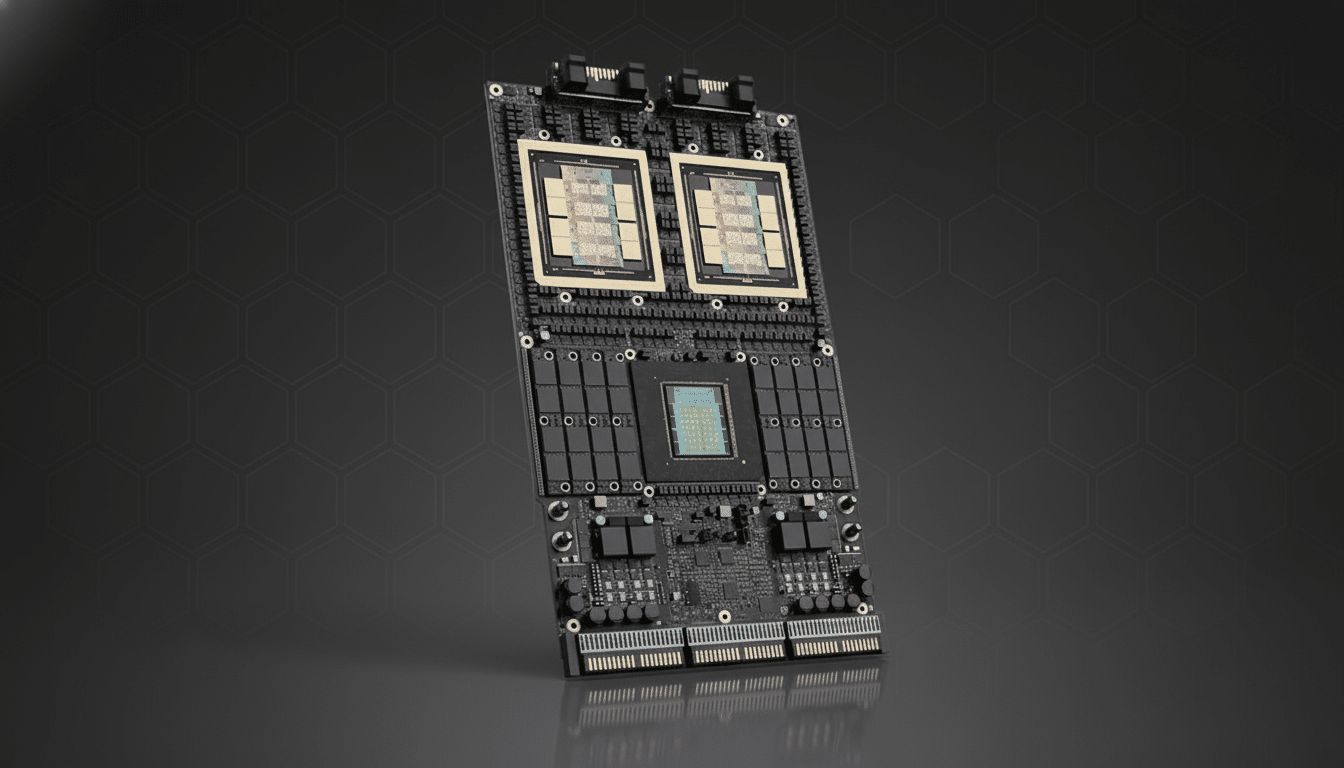

Nvidia described Rubin, a new computing architecture that is expected to eventually replace Blackwell. The emphasis is apparent: more performance per watt for training and inference, greater memory bandwidth, and better integration between GPU, CPU and networking to keep pace with multi-robot and multi-sensor workloads.

The company highlighted Alpamayo, an open family of AI models and tools that are designed for autonomous systems and vehicles. The pitch reflects a wider strategy: make Nvidia’s stack the default runtime for generalist robots, with simulation through Omniverse, on-robot deployment to embedded GPUs and fleets managed in the cloud.

Context matters here. Jon Peddie Research puts Nvidia’s share of the discrete GPU market well above 80%, and that pre-eminence in accelerated compute gives it a runway to set standards for physical AI, from sensor fusion to digital twins. If Rubin delivers the expected efficiency gains for robotics workloads, it could bring down the cost of deployment at scale, which has become a real bottleneck for field robots and AVs.

AMD bets big on AI PCs and divergent open partnerships

AMD’s keynote played into the surge of AI-ready laptops and desktops. The new Ryzen AI 400 Series combines Zen CPU cores with RDNA graphics and a beefed-up NPU built to handle much more inference on-device, including tasks like real-time transcription, image upscaling and creative tools, without relying too heavily on the cloud.

The subtext: make PCs feel faster by cutting everyday AI latency from seconds to milliseconds, and keep the data private while doing it. IDC and Canalys have recently flagged on-device AI as the next battleground for differentiation in personal computing, and AMD’s approach, which leans on both software partners and chiplet-based designs, underscores its hopes of becoming the default for power-sipping AI workflows.

The competitive read is straightforward. If Nvidia dominates the data center and robotics mindshare, then AMD is carving out a lane with user-facing performance per watt. Expect intense benchmarking of NPU TOPS and mixed CPU/GPU utilization once retail systems arrive.

Razer’s AI gambits, in full concept mode at CES 2026

Razer’s two jaw-droppers, Project Motoko and Project AVA, captured the most concentrated dose of CES energy. Motoko aims to offer smart-glasses-like help without the glasses, proposing a wearable form factor that delivers context and notifications ambiently. AVA plants an AI companion avatar on your desk: part presence, part playful UI.

Are these shipping soon? Probably not. But Razer is gauging the social acceptability of AI that “lives” near you, not in your phone. Exactly where the line between useful and uncanny falls may well determine how AI peripherals make the leap from concept to category, particularly given intense scrutiny over privacy and data provenance.

Robots and the emergence of physical AI across sectors

Robotics received a bigger share of the spotlight than in recent years. LG’s home bot CLOiD took tentative steps across the stage, a reminder that reliability beats razzmatazz in the home, where failures have nowhere to hide. Incremental success is still progress: picking up objects, stowing them and navigating around obstacles all remain very hard problems.

Hyundai and Boston Dynamics announced a partnership with Google DeepMind to train and control existing Atlas models as well as a new humanoid version. Pair that with Caterpillar’s “Cat AI Assistant,” made in partnership with Nvidia and demonstrated in an excavator, and you’ve got the full range: from housework to heavy machinery.

In recent years, the International Federation of Robotics has reported record robot installations, with more than half a million units installed annually. What CES underscored is the next chapter: moving beyond fenced-off industrial arms to mobile, generalist systems that can operate where humans do, safely, efficiently and at scale.

Automotive technology and assistants find their voice

Automakers and suppliers are weaving AI into the driving experience slowly. Ford offered a peek at an assistant that won’t appear in dashboards until much later; it is powered by Google Cloud and available in Ford’s mobile app, with off-the-shelf large language models doing the heavy lifting. The details remain thin, but the rollout approach is shrewd: try it out with real users before embedding it in vehicles.

Amazon announced Alexa+ with browser-based early access, refreshed Fire TV software and a new line of Artline TVs, doubling down on voice as the primary interface. Alongside richer alerts and an app store, it laid out a plan to build on the momentum of new deals that ring (pun intended) in more partnerships, signaling an ongoing power play to turn the home into a blended mesh of AI endpoints.

Quirky standouts you’ll be hearing about after CES

Clicks Communicator resurrected the physical keyboard phone for $499, plus an add-on slide-out keyboard accessory for other devices. It is pure nostalgia, but with the real ergonomics that heavy typists need.

In early hands-on time, it felt thoughtfully designed, with a contoured back and a raised screen above the keyboard to shield keys.

For creators, the eufyMake E1 UV printer offered direct-to-object printing for a fraction of the cost of legacy pro gear, potentially bringing small-batch merch to more Etsy-scale businesses. And Lego quietly demoed its own Smart Play System behind closed doors, a mix of bricks, tiles and minifigures with embedded electronics and sound that makes for an ambitious hybrid of tactile play and ambient tech.

Why it matters: the practical stakes for physical AI

CES provided a clear sign that AI’s next wave is physical, context-aware and more local. Nvidia is hardening the compute stack for robotics and autonomy, AMD is driving AI into the everyday PC, and Razer is exploring the boundaries of human-machine interaction.

The stakes are practical: cost per inference, energy budgets and trust. And as standards crystallize around toolchains and safety, winners will be those who tame complexity without obscuring it — letting developers work quickly, letting users opt in with transparency, proving that intelligence belongs as much in the physical world as online.