AI was everywhere on the show floor, but the story at CES was as much about hardware maturity as model magic. From Nvidia’s next computing platform to AMD’s NPU-equipped PCs and Razer’s inscrutably weird experiments, the talk has moved from demos to deployment, with robots, TVs and toys joining the AI cacophony.

Nvidia Shows the Way in AI Hardware at CES 2026

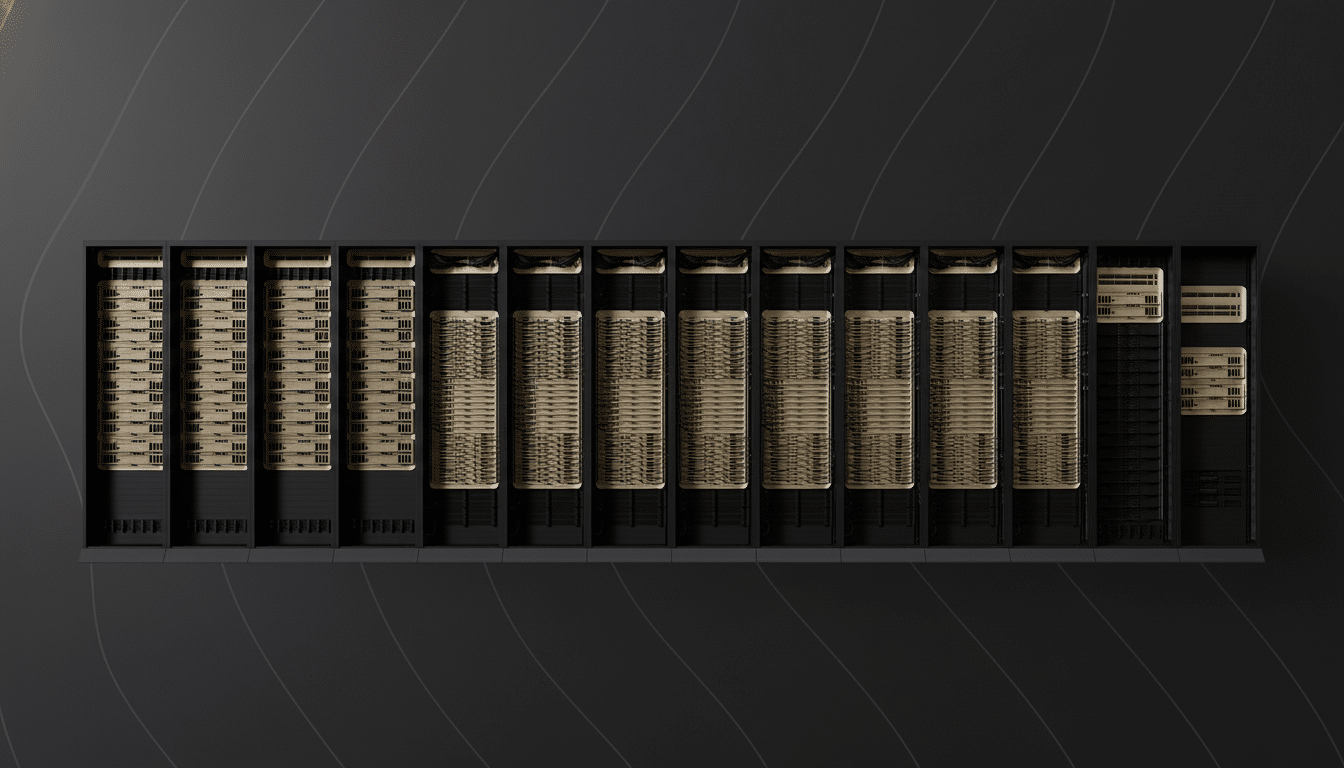

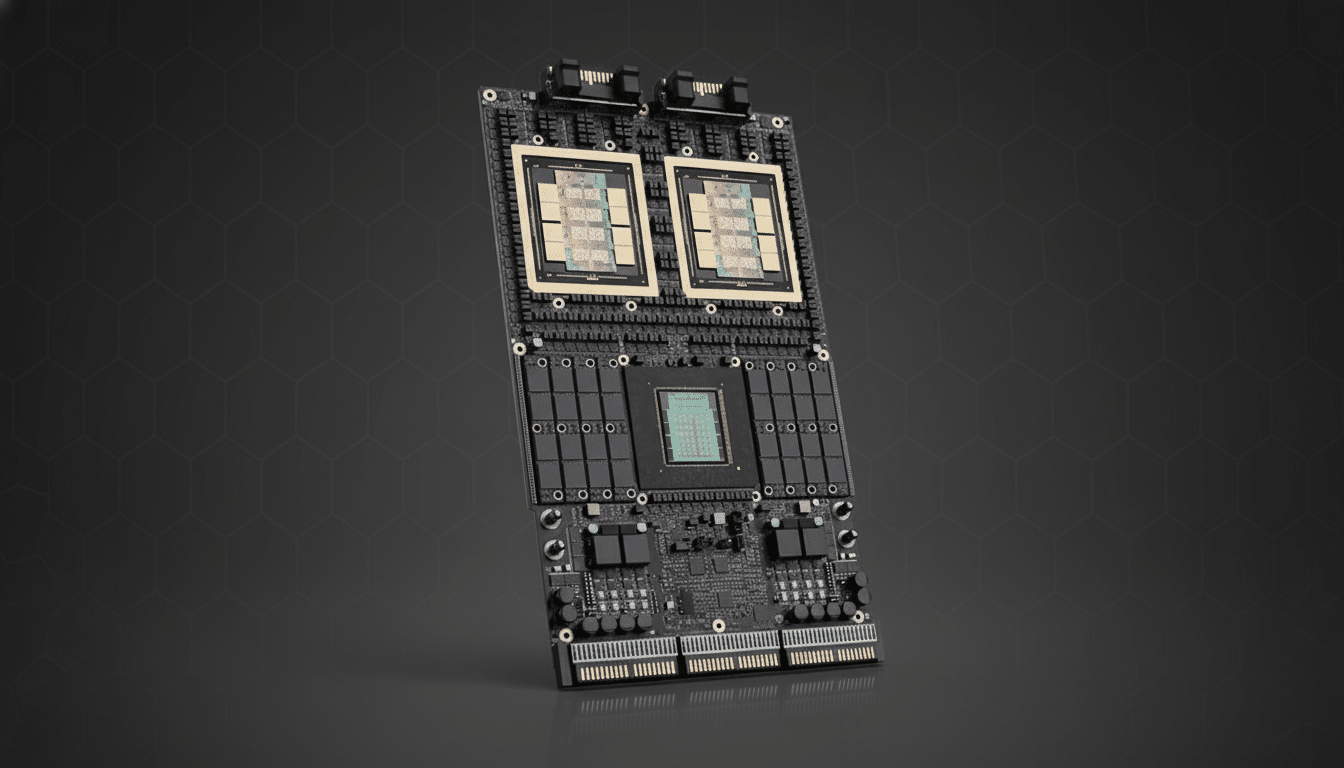

Nvidia’s keynote reiterated the idea that the AI era is won by bandwidth and integration. The company previewed Rubin, the successor to Blackwell, and said it would bring faster memory paths, denser storage and tighter system interconnects so that large models can be fed more readily. The subtext: training is now bottlenecked less by raw FLOPS than by data plumbing, a point echoed in my recent discussions at MLCommons about end-to-end throughput.
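
The bandwidth argument can be made concrete with the standard roofline model, which caps achievable throughput at the lower of peak compute and memory bandwidth multiplied by arithmetic intensity. The sketch below is illustrative only: the peak and bandwidth figures are hypothetical round numbers, not specifications for Rubin, Blackwell or any other shipping part.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tbps: float,
                      flops_per_byte: float) -> float:
    """Roofline bound: the lower of peak compute and bandwidth * intensity.

    TB/s multiplied by FLOP/byte gives TFLOP/s, so the units line up.
    """
    return min(peak_tflops, bandwidth_tbps * flops_per_byte)


def is_memory_bound(peak_tflops: float, bandwidth_tbps: float,
                    flops_per_byte: float) -> bool:
    """True when memory bandwidth, not compute, limits throughput."""
    return bandwidth_tbps * flops_per_byte < peak_tflops


# Hypothetical accelerator (assumed numbers, for illustration only).
PEAK_TFLOPS = 1000.0
BANDWIDTH_TBPS = 4.0

# A kernel that performs 50 floating-point operations per byte moved.
low_intensity = 50.0
print(attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TBPS, low_intensity))
print(is_memory_bound(PEAK_TFLOPS, BANDWIDTH_TBPS, low_intensity))

# Doubling bandwidth lifts the bound for the same kernel; adding FLOPS would not.
print(attainable_tflops(PEAK_TFLOPS, 2 * BANDWIDTH_TBPS, low_intensity))
```

Under these assumed numbers the low-intensity kernel sits well below peak compute, which is the situation in which faster memory paths help more than extra arithmetic units.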

Looking beyond the data center, Nvidia announced its Alpamayo family of open-source models and tooling for autonomous systems. The approach, which bundles hardware, software and a standard reference stack, appears to be an attempt to make Nvidia’s runtime the default for generalist robots and vehicles, much as CUDA anchored the last decade of AI. With the International Energy Agency warning that global data center electricity consumption may nearly double by mid-decade, efficiency stories will be as critical as performance ones.

AMD Pushes AI Into Everyday PCs With Ryzen AI 400 Series

AMD’s first keynote of the show highlighted Ryzen AI 400 Series processors, which bring larger NPUs and smarter power management to laptops.

The bet is simple: run transcription, image generation and code assistance on the device to reduce latency, preserve privacy and minimize cloud costs for common tasks.

It’s a timely swing. IDC forecasts that AI-capable PCs will make up the majority of market volume going forward, and software ecosystems are lining up around models that can scale from NPUs to the cloud. AMD’s advantage is platform breadth: CPU, GPU and NPU all sit under the same roof, which pays off if the company makes it easy for developers to target all three with consistent runtimes and quantization paths.
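
For readers unfamiliar with the term, a quantization path is the route by which a model’s floating-point weights are converted to small integers so they fit an NPU’s memory and arithmetic units. The sketch below shows one common scheme, symmetric per-tensor int8 quantization, in plain Python. It is a generic illustration with made-up weights and hypothetical function names, not a description of AMD’s toolchain.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale would do.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


# Made-up weights, for illustration only.
weights = [0.5, -1.0, 0.25, 0.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Each restored value lands within half a quantization step of the original.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, worst_error)
```

The trade is a small, bounded rounding error per weight in exchange for a quarter of the storage of 32-bit floats, which is why consistent quantization tooling across CPU, GPU and NPU matters to developers.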

The Robot Comes Out of the Lab With New Partnerships

Hyundai and Boston Dynamics announced a partnership with Google’s AI research lab to train and fully support Atlas robots, an updated version of which danced onstage. Binding locomotion, perception and policy learning together under one toolchain suggests a future in which robots iterate in simulation and receive over-the-air updates, much as phones do. The question now is standardization: Nvidia is chasing the same market with full-stack robotics platforms, and developers will take the path of least friction.

Alexa Gets an AI Overhaul Across TVs, Apps and Devices

Amazon broadened access to Alexa+ with a browser experience and revamped app, while centering Fire TV and new Artline TVs around the assistant’s improved conversational core. The approach mirrors a broader move toward multimodal assistants that combine speech, vision and context across devices.

Expect scrutiny as capabilities ramp. Consumer advocates, including groups such as the Electronic Frontier Foundation, have long pushed for transparency about how data is used and which features are opt-in by default. If Alexa+ can balance richer interaction with stronger privacy controls, it could set the pace for living-room AI.

Razer Tries AI Companions With Project AVA and Motoko

Never one to pass up a spectacle, Razer teased two concepts: Project Motoko, which offers smart-glasses functionality without the glasses, and Project AVA, a desktop AI companion that presents as a persistent avatar. The pitch is intimacy: a visual agent you can look at, talk to and customize, more character than chatbot.

There’s method in the whimsy. Human-computer interaction research often finds that embodied or animated agents enhance engagement, but they may also encourage overtrust. For Razer, this opens up new kinds of interaction for streamers, creators and gamers; the challenge will be delivering utility beyond novelty.

Lego Makes Play Smart With the New Smart Play System

Lego used the show to give a first behind-the-scenes look at its Smart Play System, a set of bricks, tiles and Minifigures that interact with each other and make sounds in Star Wars-themed sets.

It’s the familiar Lego play loop, but with situational reactions and modular electronics that encourage tinkering.

Education is the obvious vector. The combination of physical creativity and programmable behaviors is a good match for both STEM-minded classrooms and parents. The question is whether it will be durable and affordable: smart bricks must survive the family room and scale without breaking the budget.

What to Watch Next in AI Hardware, Assistants and Toys

Three strands will matter over the next year: energy efficiency as a first-class KPI for AI infrastructure; clean developer tooling that spans NPU to cloud without rewrites; and responsible UX for the assistants and companions that live alongside us in the home.

CES left no doubt that AI is not just a sideshow anymore — it’s the connective tissue that stretches across chips, screens, robots and toys.

With more than 130,000 people attending the previous show, CTA is riding quite a wave. The question is not whether AI is everywhere but who strings it together coherently and sustainably.