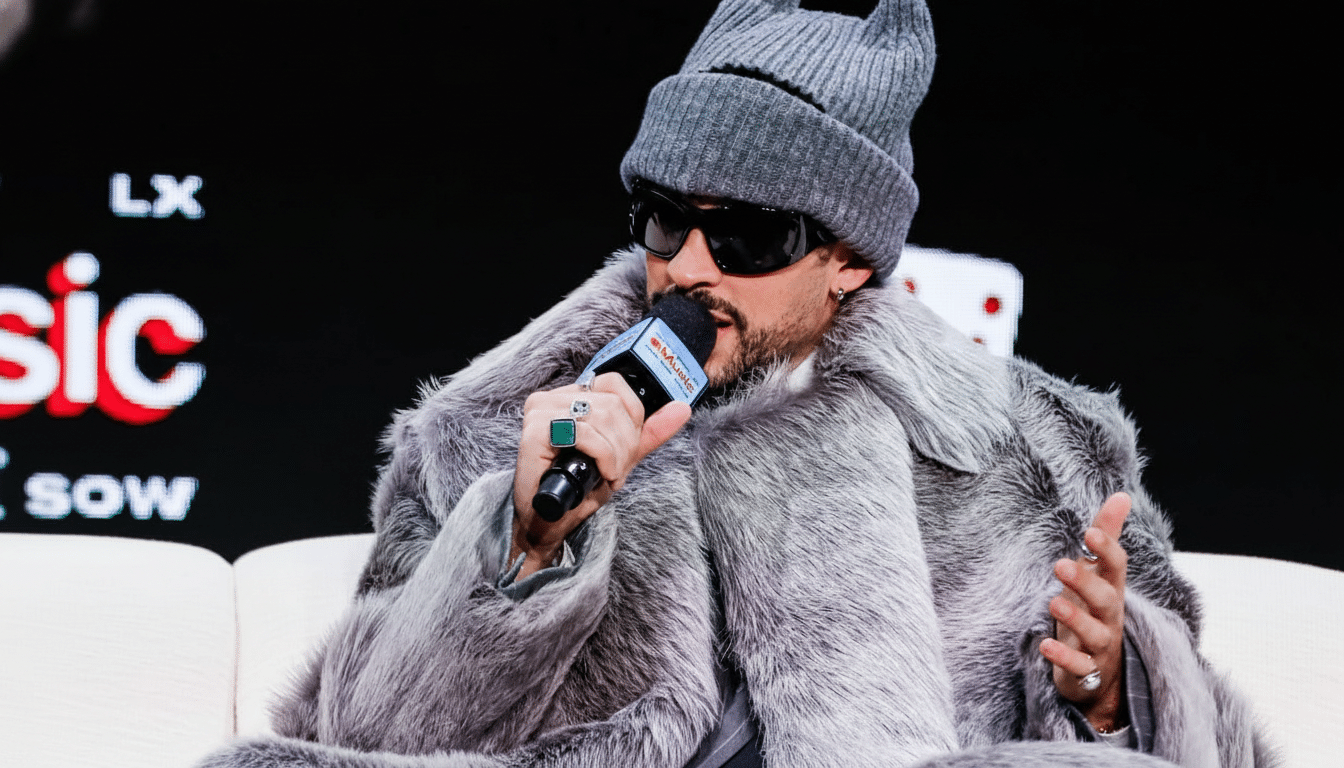

An AI-generated image falsely depicting Bad Bunny torching a U.S. flag is racing across social media just as anticipation builds for the Super Bowl Halftime Show, prompting swift pushback from fans and misinformation watchdogs. The fabricated photo, amplified by partisan accounts, attempts to cast the Puerto Rican superstar as staging a provocative stunt during pre-show rehearsals—something that simply did not happen.

What The Fake Image Shows And Why It Fails Basic Checks

The viral image portrays Bad Bunny in a pink, white, and blue dress holding a burning American flag. On closer inspection, it bears classic telltale signs of generative AI: flames that do not illuminate or deform nearby fabric, edges of the flag that blur into the background, and lighting that contradicts itself across the subject. The hands and folds in the outfit also show the “melting” textures common to machine-made visuals.

Provenance checks add more red flags. There are no wire photos, credible news reports, or rehearsal clips showing the incident, and the claim that it occurred “on stage last night” collapses under the basic timeline of official production schedules. Halftime shows are tightly controlled, pre-visualized, and rehearsed; anything as combustible and controversial as flag burning would trigger immediate attention from show producers, network standards teams, and on-site safety officials.

How The Rumor Took Off Across Social Media Platforms

The image appears to have originated from a Facebook post framed as an eyewitness account, before being cross-posted on X and other platforms. From there, accounts with a history of culture-war content leaned in, pairing the image with commentary about patriotism and immigration. That playbook is familiar: provoke outrage, reap engagement, and let algorithms carry the story further than the correction.

Context also matters. Bad Bunny’s high-profile slot and past political commentary make him an attractive target for coordinated narratives seeking to drive partisan reactions. Misinformation researchers have long documented “pre-bunking windows” ahead of major cultural events, when rumors spike precisely because attention is at its peak and verified details are scarce.

Verification Beats Virality When Misinformation Spreads

No reputable outlet has corroborated the claim, and the timeline contradicts public performance schedules that place the artist on a short break from touring. Independent fact-checkers routinely advise a simple triage for suspicious images:

- Perform a reverse image search.

- Look for source photography from agencies such as Getty Images or AP.

- Check whether multiple mainstream newsrooms with standards teams have published the same claim.

When an eye-popping incident appears only in a few partisan posts, that’s a signal to pause.

The broader ecosystem reinforces the caution. NewsGuard reported that the number of AI-generated content farms exploded into the hundreds over the past year, with many designed to capitalize on trending topics. Pew Research Center has likewise documented rising public concern about AI-driven deception online. The combination is potent: convincing fabrications, timed for maximum attention, moving faster than corrections.

Why Halftime Performers Are Frequent Targets

The Super Bowl Halftime Show sits at the intersection of pop culture and politics, which makes it catnip for disinformation. Performers from Beyoncé to Rihanna have faced waves of pre- and post-show claims—sometimes exaggerated controversies, sometimes entirely false narratives—because the incentive structure rewards content that riles large audiences. Researchers at institutions such as the Stanford Internet Observatory have noted that emotionally charged cultural flashpoints tend to generate higher volumes of misleading posts than routine political news.

There’s also a visual vulnerability unique to modern rumor cycles: a single plausible image can travel farther than a paragraph of text. Even low-skill models can output frames that, at a glance, pass as real. Without context, casual scrollers mistake virality for verification.

How To Spot A Synthetic Stunt Before You Share

- Look for physics errors: do the flames cast light and distort fabric the way heat should?

- Check shadows: are they consistent with the scene’s light sources?

- Examine fine details such as fingers, jewelry, stitching, and logos; AI often mangles these.

- Consider plausibility: would a fire stunt occur in a rehearsal space packed with crew without anyone else filming it?

- Then triangulate: if something this incendiary happened, multiple outlets, not just anonymous accounts, would confirm it within minutes.

On the supply side, industry efforts like the Coalition for Content Provenance and Authenticity are pushing content credentials that can embed secure metadata into images and video. While not yet universal, these tools, alongside platform labels and third-party fact-checking partnerships, aim to make authenticity simpler to assess in real time.

Bottom Line: Treat The Flag-Burning Photo As A Hoax

The image of Bad Bunny burning a U.S. flag is fabricated. It exploits the attention surrounding the Halftime Show to score quick clicks and stir outrage. Until credible, independently verified evidence emerges, treat the claim for what it is: a pre-game hoax designed to goad, not to inform.