Anduril Industries, the buzzy, high-profile defense startup known for its fast-turn autonomy, is facing tough questions following a series of testing blunders and disappointing field performance detailed by The Wall Street Journal. The mishaps include naval drone boats that faltered during a major exercise, an unmanned jet engine damaged during ground trials and, in one case, the ignition of a 22-acre wildfire. Ukrainian operators also stopped using an Anduril-linked loitering munition after the weapon crashed and missed targets at the front, according to the report. Anduril says such setbacks are common in the development of weapons systems and that its own systems will get better.

What The Report Alleges About Anduril’s Test Failures

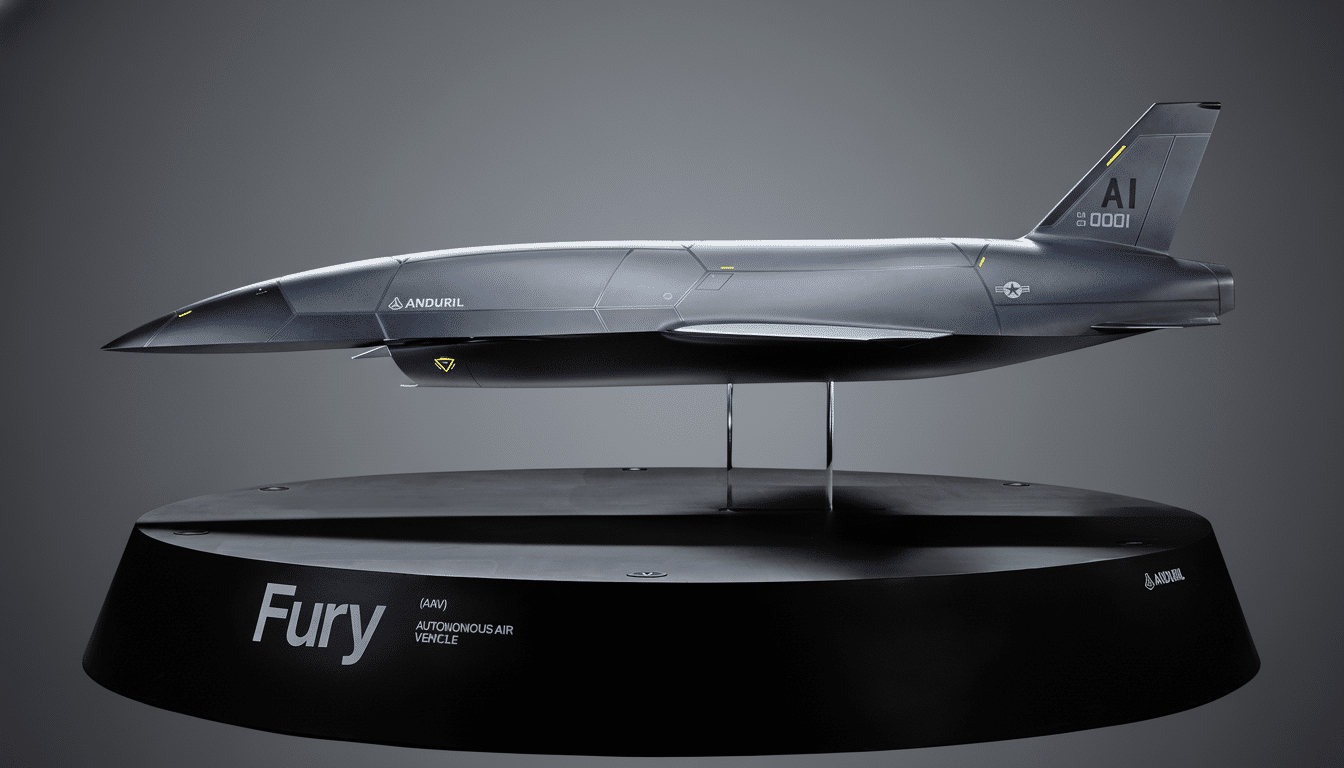

More than a dozen Anduril autonomous surface vessels, or drone boats, underperformed or proved unreliable during a U.S. Navy exercise off the coast of California, according to The Journal, leading sailors to warn their superiors about safety violations and a risk of serious bodily harm. In a separate ground test, a mechanical issue damaged an engine on the company’s unmanned jet, known as Fury. And a test of Anduril’s Anvil counterdrone interceptor reportedly set off a wildfire that burned 22 acres in Oregon.

These incidents run counter to the story that software-centric defense companies can provide combat-ready autonomy on commercial schedules. They also serve as a reminder of how unforgiving safety regimes can be when robotic systems operate in the air, on the sea, and alongside humans on land — particularly when it comes to naval evolutions, where the bar for safety is high and failure modes can escalate fast.

Battlefield Reality In Ukraine Challenges Autonomy

Beyond the U.S. tests, front-line Ukrainian special units halted use of the Altius loitering munitions tied to Anduril after a series of crashes and failures to hit targets, according to The Journal. Ukraine’s SBU security service reportedly found the system’s reliability so poor that it stopped using it.

One of the main explanations, according to experts, is that Ukraine is the most contested electromagnetic battlefield in recent memory. Think tanks like the Royal United Services Institute have recorded Russian EW activity that interferes with GPS and data links and even forces drones into emergency modes. Under those circumstances, even established platforms suffer lower hit rates and greater attrition. Autonomy helps, but it must also be robust to spoofing, spectrum denial and rapid, local changes in the electromagnetic environment, problems that are more software than airframe.

Why It’s Hard to Make Reliable Autonomous Weapons

Autonomous weapons add to all the usual challenges of defense programs — thorny software, unforgiving physics and no tolerance for error. Robots at sea also have to obey rules for collision avoidance, fuse radar with EO/IR in cluttered sea states, and be resilient to lost-link events. Air vehicles require strong envelope protection and fault isolation that prevent one sensor glitch from cascading into engine or control failure. Even counterdrone interceptors face challenges with kinetic effects, battery safety and post-impact risks — particularly in dry brush conditions.

Government auditors have long cautioned that software-intensive systems struggle to scale, both in integration and in reliability. The Government Accountability Office has consistently reported delays and rework in key weapons programs resulting from concurrency, insufficient testing and requirements churn. With autonomy, the testing burden may be heavier still: Every new edge case found in the wild means retraining models, updating safety logic and revalidating performance across a growing matrix of scenarios.

Money, Contracts And The Stakes For Anduril’s Future

Anduril has raised billions of dollars in private capital, including a round of about $2.5 billion at a valuation of $30.5 billion, and its portfolio includes a series of Pentagon and allied contracts for autonomous aircraft, counter-UAS systems and surveillance technology. Its pitch revolves around Lattice, a software platform that binds together sensors and allows for “mission autonomy,” and a stable of systems that purportedly offer quick iteration and modular upgrades.

The company is closely watched as the Pentagon pushes projects such as Replicator, which seeks to field thousands of “attritable” autonomous systems quickly. Those programs can tolerate higher rates of loss if the units are cheap and effective, but reliability still matters. A drone that doesn’t take off, a drone that aborts partway through its mission, a drone whose target identification is so poor that it erodes operator trust: each undermines operational concepts grounded in swarming and human-on-the-loop control.

What To Watch Next As Anduril Addresses Reliability Issues

Anduril claims the cited failures do not represent foundational problems and says its teams are addressing defects revealed during testing and on the battlefield.

Defenders of the company note that quick, iterative trials reveal problems sooner, when they are less expensive to fix, and point out that far more expensive disasters have befallen legacy primes over much longer time scales.

The near-term question is whether Anduril can turn iteration into quantifiable reliability: improved mission success rates, fewer aborts and safer failure modes across its air, sea and counterdrone portfolios. The proof will come from independent testing at service test squadrons, clear-eyed safety closeouts after mishaps and repeatable performance in a contested EW environment.

If the company can close those gaps, it will strengthen the case for software-led autonomy across DoD programs. If not, The Journal’s coverage will stand as a cautionary tale about how far Silicon Valley speed can take you when machines are required to work, unattended, on the edge of safety and war.