A California appellate lawyer has been ordered to pay a $10,000 sanction after submitting a brief filled with fake citations: according to the court, 21 of the 23 authorities were made up or misquoted.

The lawyer said he had used generative AI tools to “enrich” his draft and then filed it without a final review. The court found that each of those steps fell short of “basic competence.”

Court Rebuke and the $10,000 Sanction

In a scathing opinion, Presiding Justice Lee Smalley Edmon faulted the lawyer for failing to check the authorities he cited. The court found that most of the cases he relied on did not exist or did not support the propositions for which they were cited. The justice stressed an uncontroversial principle: lawyers must read and confirm the authorities they rely on before filing a brief.

The attorney said he wrote the filing and then used tools such as ChatGPT, Grok, Gemini and Claude to “polish” it, but did not re-verify the citations before filing. He said he had not known about “hallucinations,” a well-documented behavior in which generative AI produces plausible but false information. The court rejected that defense, noting that there is nothing inherently wrong with using AI in the practice of law, so long as a human independently verifies every factual and legal assertion.

A Record of AI-Citation Failures Across Courts

The episode is the latest in a series of high-profile AI-citation failures. In a well-publicized New York case, two lawyers were sanctioned after submitting a brief that cited fake case law generated by a chatbot. A Stanford professor came under fire for AI-generated citations in an expert declaration supporting a state deepfake law. Outside the courtroom, Alaska’s education commissioner acknowledged using AI to help draft a proposed statewide school cellphone policy that cited studies later found not to exist.

Judicial frustration is rising. Some courts and individual judges now require attorneys to disclose whether generative AI was used in a filing and, if it was, to certify that a human reviewed its output. Notably, a federal judge in Texas issued a standing order requiring lawyers to certify that any AI-generated content in their filings, including the sources it cites, has been independently checked for accuracy before filing.

Ethics, Competence and Verification Duties

Legal ethics does not prohibit modern tools; it requires competence. The American Bar Association has consistently emphasized that technological competence falls within Model Rule 1.1, and state bars, including California’s, have issued guidance recommending careful guardrails around generative AI. Those guardrails reflect longstanding best practices: read the authorities, run them through citators such as Shepard’s and KeyCite, verify quotations, and confirm that each proposition matches the holding of the case cited.

Firms are formalizing policies, too. Common precautions include limiting AI to drafting structure and style, never relying on it for legal authorities or research results without vetting, and requiring a human “source of truth” review before anything goes out the door. Some firms also have attorneys keep verification records (screenshots, docket numbers, reporter citations) so that every reference can be supported if it is challenged.

Why Hallucinations Happen, and How to Avoid Them

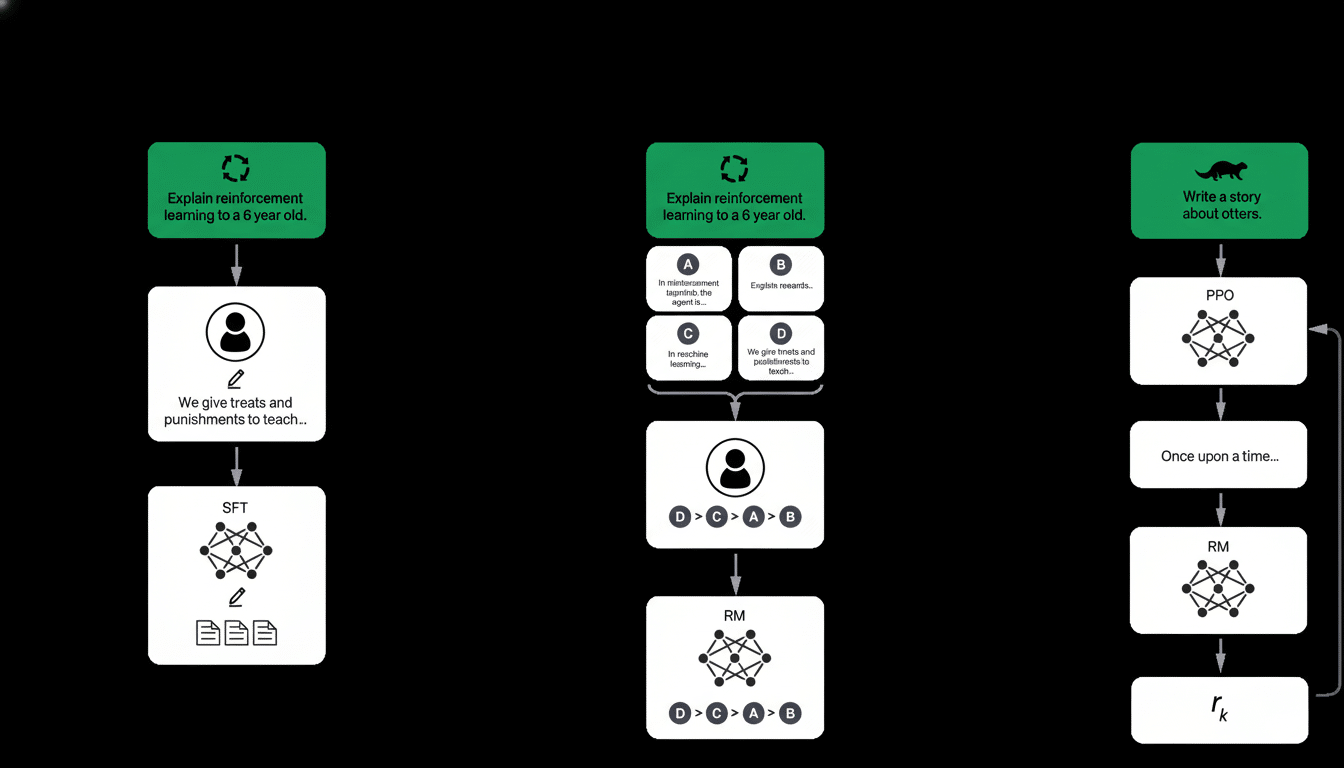

Generative models are built to predict plausible-sounding text, not to verify facts. Recent research from AI labs, including technical reports published by OpenAI, acknowledges that hallucination remains unsolved: when a model lacks the information it needs, it can readily produce confident but fabricated output. Models are also trained to be helpful, which can inadvertently reward guessing over declining to answer.

Legal teams guard against this with retrieval-augmented generation (supplying models with verified documents from a closed repository), closed-universe drafting (limiting the model to firm-approved material) and rigorous human-in-the-loop review. The practical rule is straightforward: AI may draft prose, but it cannot validate law. Every citation must be checked against primary sources, every quotation reconciled with the text, and every proposition tested against the actual holding of the case.

What This Means for Lawyers and Their Clients

The $10,000 fine is more than a personal blow; it is a warning shot for the profession. Courts have limited resources, and chasing phantom cases wastes judicial time while putting clients’ interests at risk. Such missteps can lead to sanctions, reputational harm and, in severe cases, malpractice exposure.

The takeaway is clear. Generative AI can speed drafting and improve readability, but it cannot replace legal judgment or reasoning. Read what you cite. Verify it twice. And treat any AI-supplied authority as a lead to be checked, not an answer to be trusted.