Apple is reportedly halting work on a major Vision Pro overhaul to focus its resources on a renewed push into AI-powered smart glasses, according to Bloomberg’s Mark Gurman. The move suggests that Apple sees a faster path to mainstream adoption in lightweight eyeglasses that rely on the iPhone’s processing and Apple Intelligence features, rather than in doubling down on a bulky mixed-reality headset.

Why Apple Is Pivoting to AI-Driven Smart Glasses Now

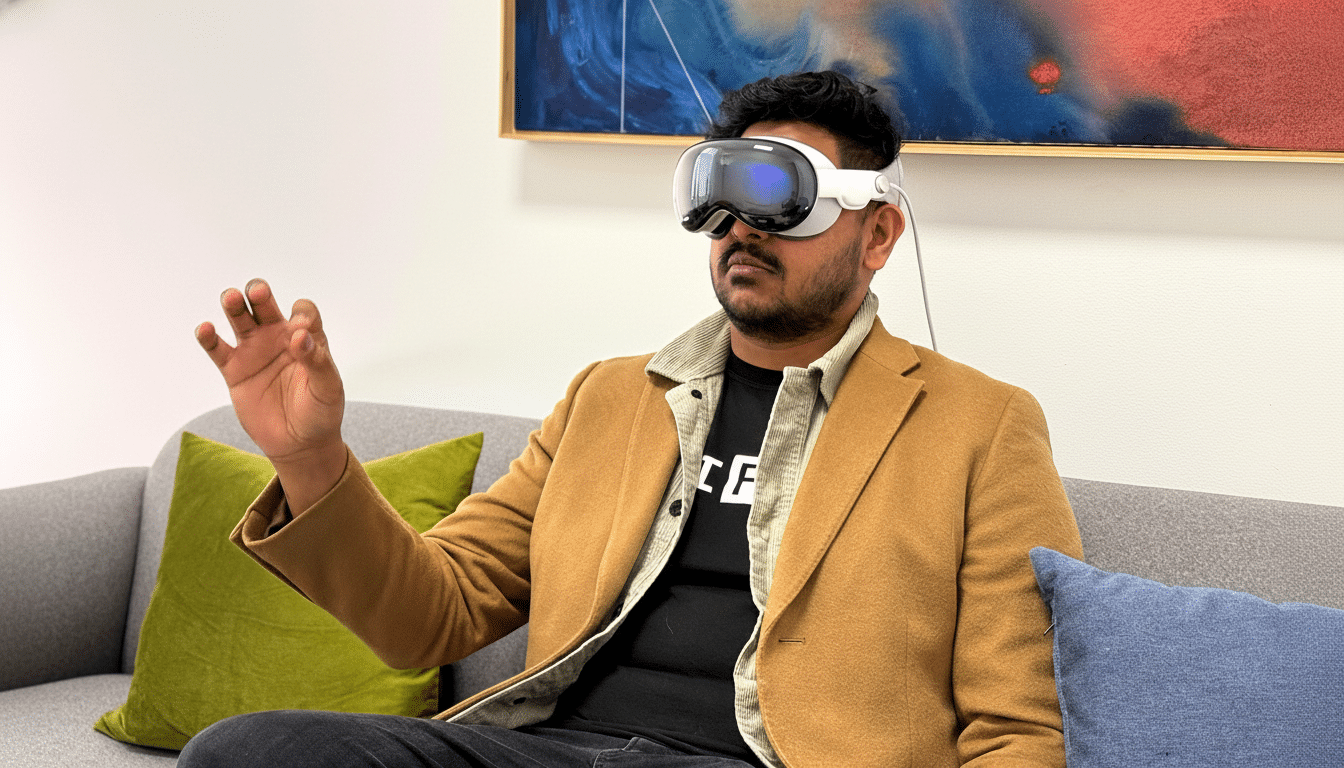

Vision Pro showed that Apple can build category-defining spatial computing hardware, but at $3,499 and with a tethered battery, it remains premium early-adopter gear. Analyst firms such as IDC and CCS Insight have consistently observed that the AR/VR market is growing from a small base and is still many years away from delivering volumes comparable to smartphones. After launch, enthusiasm waned as the novelty wore off and the cost of developing proprietary visionOS experiences for a modest installed base came into focus.

Smart glasses are, by comparison, a simpler value proposition: glanceable information, hands-free capture and AI assistance without disconnecting from the real world. The hardware is less complex, cheaper to produce and more comfortable to wear for extended periods, three levers that count when you’re aiming not for thousands of users but for tens of millions.

Two Tracks for Apple’s Smart Glasses: Companion and Display

Apple is working on at least two models, according to Bloomberg. The first, said to be known internally as N50, is a companion pair that relies on the iPhone for compute and apparently doesn’t feature an onboard display. Think of it like AirPods for your eyes: audio first, with the phone supplying occasional nudges such as navigation directions, real-time translations, reminders and a more intelligent version of Siri, all without the weight or battery demands of a full headset. Apple could preview N50 as early as next year, with release slated for 2027.

The second model includes a display in the frames, bringing it closer to the heads-up experience people envision when they think of sci-fi eyewear. That product would rival the most recent Ray-Ban smart glasses from Meta, which has been racing to add AI capabilities and developer access to its eyewear. Apple had targeted around 2028, though the work is reportedly being accelerated. The technical hurdles (optics, heat, battery life and hiding the technology inside a fashionable frame) are nontrivial, but Apple’s command of silicon, software and accessories improves its odds.

The AI and Silicon Edge Powering Apple’s Smart Glasses

Apple’s smart glasses strategy could pair well with Apple Intelligence, its on-device, privacy-by-design AI suite. Shifting intensive processing to the iPhone or a nearby Mac while keeping latency-sensitive tasks on the glasses would conserve battery life and fit Apple’s privacy requirements. Imagine deep integration with a new natural-language Siri, multimodal understanding (so it can see what you’re seeing and hear what you’re hearing), and context from calendar, messages and location, all surfaced through a minimal interface at the moment it is needed.

There’s also a silicon efficiency story. Meta’s glasses depend on Qualcomm platforms; Apple can connect its custom low-power chips in the frames with the neural engines in iPhone and Apple Watch using Bluetooth and Ultra Wideband. That kind of vertical stack usually produces efficiency gains and a more polished experience, two areas in which Apple has traditionally out-executed its rivals.

Taking On Meta in the Race for Smart Glasses

Apple is chasing an early leader. Meta introduced its first smart glasses in 2021 and has iterated aggressively with improved cameras, voice control and AI features. Though Meta hasn’t provided specific unit sales, it has claimed high engagement, and the product has emerged as a popular way to capture hands-free photos and video and to query an assistant. And if Apple brings its ecosystem gravity (iPhone installed base, retail distribution and developer tools) to the category? It could quickly redraw the competitive map.

Others are circling. Amazon is on its third generation of Echo Frames, Snap keeps experimenting with new Spectacles and a few Chinese OEMs are testing camera-first glasses. But none of them has Apple’s combination of hardware design, proprietary silicon and a pro-privacy AI narrative.

What This Shift Means for Apple’s Vision Pro Strategy

A pause is not a retreat. The Vision Pro still appears to be Apple’s reference device for spatial computing, and visionOS will likely continue evolving with better hand tracking, improved passthrough and more enterprise features. The shelved revamp appears to be the next major hardware refresh, specifically a lighter, cheaper SKU, not the entire platform. At least for the time being, Apple can keep serving its developer community and use cases such as medical visualization, training and design workflows, where Vision Pro’s strengths stand out.

What to Watch Next as Apple Advances Smart Glasses

Signals to watch for include:

- Whether Apple ships a glasses-focused SDK for glanceable interfaces

- The extent to which Apple Intelligence is baked into Siri for hands-free use

- Supply chain whispers about microdisplays and ultra-low-power chipsets

Pricing will also be crucial: a companion model priced near premium audio wearables would likely spur demand, while a version with a display will have to justify a higher price without creeping too close to headset territory.

If Apple can deliver on comfort, battery life and a couple of magical AI moments, smart glasses might be the company’s next mainstream wearable, one that bypasses the roadblocks that have slowed mixed reality so far and ushers in ambient computing for the masses, one glance at a time.