Apple is moving platform security forward with a hardware-backed framework designed to eliminate an entire class of memory exploitation, while Google stares down an expensive verdict over how it handled user data. Taken together, the week’s two biggest cybersecurity stories show where the industry is going: build safety directly into silicon, or pay for broken trust.

Apple’s memory integrity play shifts the threat model

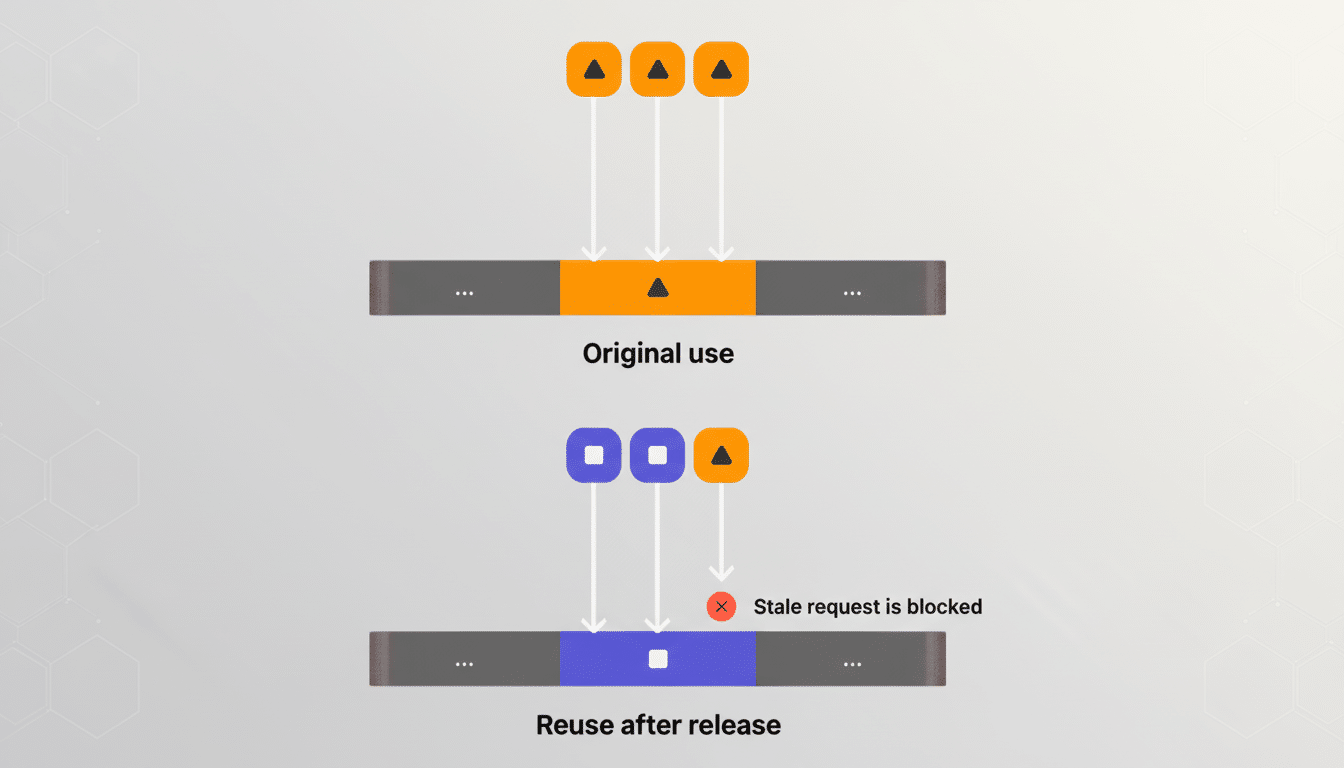

Apple outlined a new system, Memory Integrity Enforcement, a collection of hardware and OS features meant to render memory corruption attacks moot on upcoming devices. The company’s security team describes years of engineering work to harden validation of pointers, buffers and memory allocations, and to give developers tools that default to safer patterns. It complements earlier Apple efforts such as pointer authentication, hardened heap allocators and isolation mechanisms like BlastDoor.

Why it matters: Bugs that let attackers read and write memory at will remain the most dependable hole in software security. Microsoft’s Security Response Center has said that about 70% of the vulnerabilities it addressed over a period of years were related to memory safety. Google’s own engineers have said that high-severity Chrome bugs have historically been dominated by memory issues. CISA’s Known Exploited Vulnerabilities list is filled with real-world cases involving use-after-free, buffer overflows, and out-of-bounds access. Removing this class at the hardware boundary is not a tweak; it is a change in the attack surface.

Apple’s system combines silicon-level features, validated through compiler and runtime checks, with developer tooling that helps verify correctness without destroying performance. Look for guardrails such as memory tagging-style protections, stronger pointer integrity, and selective checks that are always on for critical services. The company’s Security Engineering and Architecture team indicated that future A‑series chips will expose dedicated primitives for these protections, while developer frameworks will make adopting them the easiest path.
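To make “memory tagging-style protections” concrete, here is a toy simulation of the general idea. It is a sketch under stated assumptions, not Apple’s implementation: the `TaggedHeap` class, the `TagMismatch` exception, the 16-byte granule and the 4-bit tags are all illustrative choices, loosely modeled on how hardware schemes such as ARM’s Memory Tagging Extension are publicly described. Each allocation gets a tag, each pointer carries the tag it was issued with, and every access is refused unless the two match.

```python
import random

GRANULE = 16  # bytes per tagged granule (assumed for this sketch)


class TagMismatch(Exception):
    """Raised when a pointer's tag does not match the memory it touches."""


class TaggedHeap:
    """Toy bump allocator whose memory carries one tag per granule."""

    def __init__(self, size, seed=0):
        self.tags = [0] * (size // GRANULE)  # tag 0 means "never allocated"
        self.data = bytearray(len(self.tags) * GRANULE)
        self.next_granule = 0
        self.rng = random.Random(seed)

    def _fresh_tag(self, exclude):
        # 4-bit tags; never hand out 0 or any tag listed in `exclude`.
        return self.rng.choice([t for t in range(1, 16) if t not in exclude])

    def _neighbor(self, index):
        return self.tags[index] if 0 <= index < len(self.tags) else 0

    def _check(self, tag, addr):
        if not 0 <= addr < len(self.data) or self.tags[addr // GRANULE] != tag:
            raise TagMismatch(f"tag {tag} rejected at address {addr}")

    def malloc(self, nbytes):
        if nbytes <= 0:
            raise ValueError("allocation size must be positive")
        start, count = self.next_granule, -(-nbytes // GRANULE)  # ceiling
        if start + count > len(self.tags):
            raise MemoryError("toy heap exhausted")
        # Adjacent allocations always differ, so a linear overflow is caught.
        tag = self._fresh_tag({self._neighbor(start - 1)})
        self.tags[start:start + count] = [tag] * count
        self.next_granule = start + count
        return (tag, start * GRANULE)  # a "pointer" is (tag, address)

    def free(self, ptr, nbytes):
        tag, addr = ptr
        self._check(tag, addr)  # also rejects a double free
        start, count = addr // GRANULE, -(-nbytes // GRANULE)
        # Retag on free so stale pointers stop matching.
        avoid = {tag, self._neighbor(start - 1), self._neighbor(start + count)}
        self.tags[start:start + count] = [self._fresh_tag(avoid)] * count

    def store(self, ptr, offset, value):
        tag, addr = ptr
        self._check(tag, addr + offset)
        self.data[addr + offset] = value

    def load(self, ptr, offset):
        tag, addr = ptr
        self._check(tag, addr + offset)
        return self.data[addr + offset]


if __name__ == "__main__":
    heap = TaggedHeap(64)
    a, b = heap.malloc(16), heap.malloc(16)
    heap.store(a, 0, 7)  # in bounds: allowed
    try:
        heap.store(a, 16, 1)  # one byte past `a`, into `b`
    except TagMismatch as err:
        print("overflow blocked:", err)
    heap.free(a, 16)
    try:
        heap.load(a, 0)  # stale pointer after free
    except TagMismatch as err:
        print("use-after-free blocked:", err)
```

The toy has the same blind spot as any granule-based scheme: an overflow that stays inside the last 16-byte granule of its own allocation goes unnoticed. Real hardware performs the comparison on every load and store, which is why silicon support matters for keeping checks always on.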

The immediate win isn’t flashy. You won’t find a new icon on your home screen. But if it succeeds, exploit chains that used to build on a single out-of-bounds write will start failing, and attackers will have to rely on more expensive vectors, like social engineering or supply-chain abuse. Independent analyses in outlets like Wired point to the potential: if other OS vendors and chipmakers copy the model, we could start to retire a bunch of stubborn zero-days over the next hardware cycle.

Google’s privacy bill is a trust tax

A jury ruled that Google must pay $425 million in a class-action lawsuit brought by users who said the company collected their activity in third-party apps such as Venmo, Instagram and Uber even after they had disabled certain account-level tracking settings. Google plans to appeal, but the takeaway is unambiguous: juries and regulators are increasingly willing to put a price on ambiguity in data practices.

None of this is happening in a vacuum. In 2022, state attorneys general won a $391.5 million settlement over how Google managed location data. Across Europe, privacy regulators have been levying hefty fines under GDPR against adtech and cloud services that failed transparency and consent tests. The common thread is consent that does not square with behavior. When toggles and disclosures don’t map to what is actually collected, there are penalties.

The operational takeaway for any platform: data minimization isn’t just a compliance slogan; it’s risk reduction. If a feature requires telemetry, describe what it collects in plain language, treat “off” as off, and review SDKs that might bypass app-level decisions. Plaintiffs’ lawyers, regulators and security researchers are now fluent in API flows, device IDs and cross-app inference; hand-waving no longer cuts it.
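One way to treat “off” as off is to route every event, including those from embedded SDKs, through a single gate that denies by default. The sketch below is only an illustration of that pattern: `TelemetryGate`, the purpose name `app_activity` and the source label `ads_sdk` are hypothetical and do not describe any vendor’s real API.

```python
from dataclasses import dataclass, field


@dataclass
class TelemetryGate:
    """Single choke point for telemetry; anything not opted in is dropped."""

    consent: dict = field(default_factory=dict)  # purpose -> bool
    sent: list = field(default_factory=list)     # stand-in for a network queue
    dropped: list = field(default_factory=list)  # audit trail, no payloads

    def set_consent(self, purpose, allowed):
        self.consent[purpose] = bool(allowed)

    def record(self, purpose, event, source="app"):
        # Default deny: a purpose the user never saw counts as "off".
        if not self.consent.get(purpose, False):
            # Keep only the fact of the drop, never the event itself.
            self.dropped.append((source, purpose))
            return False
        self.sent.append((source, purpose, event))
        return True


if __name__ == "__main__":
    gate = TelemetryGate()
    gate.set_consent("app_activity", False)
    # A third-party SDK calls the same gate as first-party code.
    gate.record("app_activity", {"screen": "checkout"}, source="ads_sdk")
    print("events sent:", len(gate.sent))
    print("drops audited:", gate.dropped)
```

The design choice that matters is the audit trail: because dropped events are counted without their payloads, a team can show that a toggle was honored without retaining the data the user declined to share.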

Two avenues to security credibility

Apple is betting that building protections into hardware and compilers can offer something of far more lasting value: safety that users never have to think about. Google, meanwhile, is learning that when privacy controls feel optional or opaque, accountability arrives in the courtroom. Both lessons land at a fraught moment: open source ecosystems keep getting compromised through the supply chain, as reported by companies such as Sonatype and Phylum; nation-states are pressuring encrypted platforms; and incidents rooted in human error recur year in, year out across enterprises.

For defenders, the strategy is becoming clearer. Use memory-safe languages (e.g., Rust and Swift where applicable), lean on hardware-assisted integrity mechanisms where available, and treat privacy settings as contracts, not UI elements. For product leaders, measure security not just by vulnerability counts but by the classes of bugs that no longer exist. That’s how incident curves bend.

What to watch next

Watch Apple’s numbers on performance and compatibility as developers turn on stricter memory integrity modes; real-world benchmarks will show how quickly the ecosystem shifts. Monitor Android’s continued deployment of ARM’s Memory Tagging Extension on high-end devices, and whether similar defaults arrive on desktop platforms and commodity CPUs. On privacy, look for added scrutiny of third-party SDKs inside must-have apps, renewed whack-a-mole enforcement by the FTC and state AGs, and much sharper disclosures that change “off” from something you can toggle to something you can confirm has actually been switched off.

The bottom line: Security can be built into the platform or paid for later. This week, Apple showed what the former looks like at scale. Google got a reminder of what the latter costs.