Apple is now taking direct aim at workstation-class AI rigs, demonstrating clusters of Thunderbolt 5 Macs running trillion-parameter models in a clear nod to Nvidia’s DGX family. In a developer preview, the company showed several Apple Silicon systems joining forces over Thunderbolt to run models far larger than any single machine could handle.

Thunderbolt 5 turns Macs into mini AI clusters

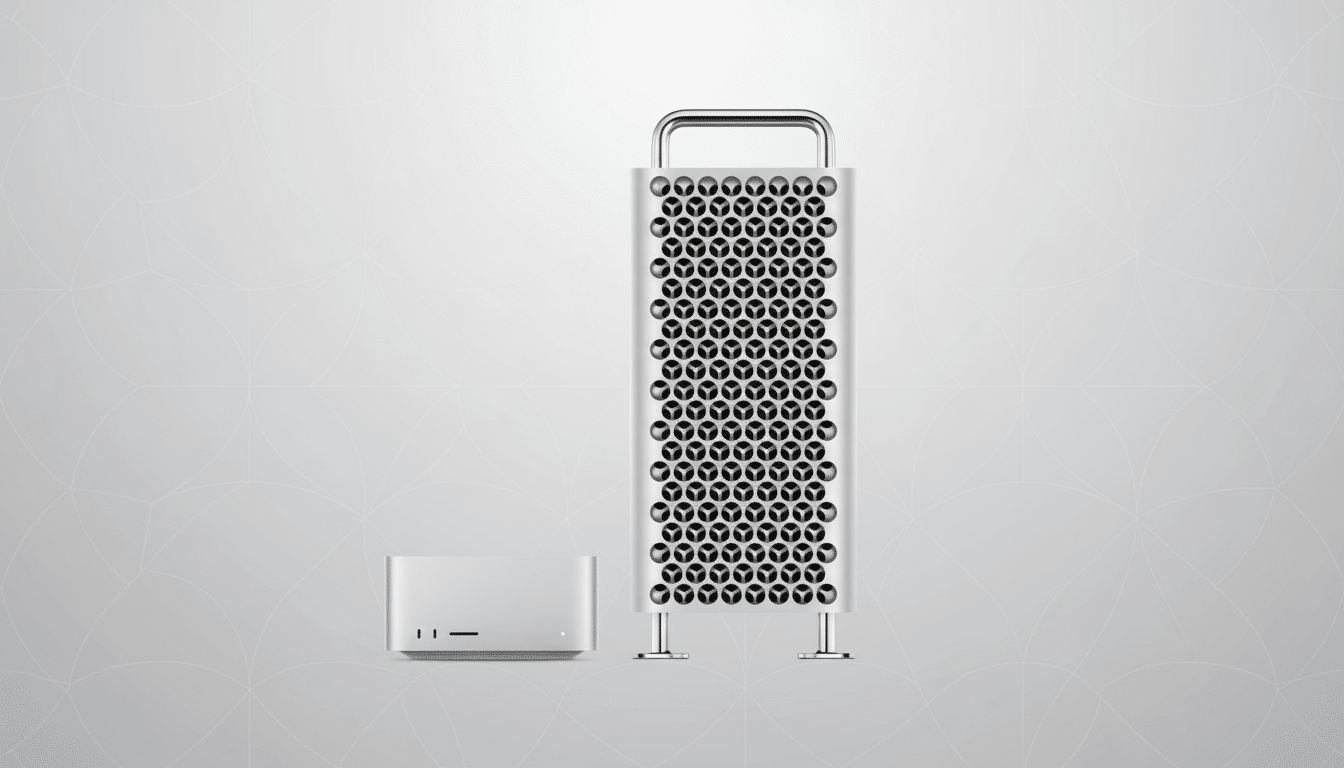

The new capability, built on Apple’s open-source MLX framework, lets developers network Macs into a collaborative “AI cluster.” Exo Labs and Apple also collaborated on EXO 1.0, a software tool that manages workloads across the machines. The setup runs a model in parallel across up to four Thunderbolt 5 Mac Studio machines, or two MacBook Pro laptops, treating their unified memory as a virtually shared pool so that larger parameter counts and longer context windows fit without data shuttling back and forth between host and device.
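How EXO 1.0 actually divides work is not detailed here. As a minimal sketch of one plausible approach, assuming a pipeline-style split, the code assigns each machine a contiguous range of transformer layers in proportion to its memory. The `Node` class, the `partition_layers` function, and the memory figures are hypothetical and are not EXO’s API.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A machine in the cluster (hypothetical model, for illustration only)."""
    name: str
    memory_gb: float


def partition_layers(num_layers: int, nodes: list[Node]) -> dict[str, range]:
    """Assign contiguous layer ranges to nodes in proportion to their memory.

    Pipeline-style split: each node holds a consecutive slice of layers, and
    activations flow from one node to the next over the interconnect.
    """
    total_mem = sum(n.memory_gb for n in nodes)
    assignment: dict[str, range] = {}
    start = 0
    cumulative = 0.0
    for i, node in enumerate(nodes):
        cumulative += node.memory_gb
        if i == len(nodes) - 1:
            # The last node takes whatever remains so every layer is assigned.
            end = num_layers
        else:
            # Round the cumulative boundary rather than each slice size, so
            # rounding errors do not accumulate across nodes.
            end = round(num_layers * cumulative / total_mem)
        assignment[node.name] = range(start, end)
        start = end
    return assignment


# Example: four identical (hypothetical) 512 GB machines sharing a 60-layer model.
cluster = [Node(f"studio-{i}", 512) for i in range(4)]
print(partition_layers(60, cluster))
```

Contiguous slices keep cross-machine traffic to a single activation hand-off at each boundary; a machine with more memory simply receives a longer slice.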

Thunderbolt 5 provides high-bandwidth, low-latency links of up to 80 Gbps in each direction, and up to 120 Gbps in one direction with Bandwidth Boost. That lets the Macs form a direct-attached, point-to-point fabric instead of relying on slower office Ethernet. For developers iterating on models locally, this means faster synchronization of tensors and caches and less time spent waiting on the interconnect.
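To put the link speeds in perspective, here is idealized arithmetic for moving a payload between machines. It assumes a 64 MB payload (a hypothetical size for a chunk of activations or cache) and uses 10 Gb Ethernet as an assumed stand-in for “office Ethernet.” It ignores protocol overhead and latency, so real transfers are slower.

```python
def transfer_ms(payload_bytes: float, link_gbps: float) -> float:
    """Idealized transfer time in milliseconds: payload bits / link rate.

    Ignores protocol overhead, latency, and contention, so this is a lower bound.
    """
    payload_bits = payload_bytes * 8
    seconds = payload_bits / (link_gbps * 1e9)
    return seconds * 1e3


payload = 64e6  # hypothetical 64 MB payload (decimal megabytes)
links = [
    ("10 GbE (assumed baseline)", 10),
    ("Thunderbolt 5", 80),
    ("TB5 Bandwidth Boost (one direction only)", 120),
]
for name, gbps in links:
    print(f"{name}: {transfer_ms(payload, gbps):.1f} ms")
```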

A trillion parameters under 500 W in live demo

In a live demo, Apple’s team showed four M3 Ultra-based Mac Studio desktops jointly running Kimi-K2-Thinking, a 1-trillion-parameter model, with a total power draw under 500 W. For context, a single high-end data center GPU can be rated at up to 700 W on its own, and Apple positioned the result as an efficiency win for developers.
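A quick footprint calculation shows why a trillion-parameter model needs more than one machine. The precision used in the demo isn’t stated here, so the sketch assumes 4-bit and 8-bit weights, and assumes (hypothetically) that about 400 GB of each Mac Studio’s memory is usable for weights after leaving room for the OS and KV cache. It counts weights only.

```python
import math


def weight_footprint_gb(params: float, bits_per_param: float) -> float:
    """Memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


def machines_needed(footprint_gb: float, usable_gb_per_machine: float) -> int:
    """Fewest identical machines whose combined usable memory holds the weights."""
    return math.ceil(footprint_gb / usable_gb_per_machine)


PARAMS = 1e12     # one trillion parameters, as in the demo
USABLE_GB = 400   # hypothetical usable memory per Mac Studio

for bits in (4, 8):
    gb = weight_footprint_gb(PARAMS, bits)
    print(f"{bits}-bit: {gb:.0f} GB of weights -> at least {machines_needed(gb, USABLE_GB)} machines")
```

Under these assumptions, the weights alone overflow a single machine even at 4-bit, and the KV cache for long contexts adds more on top, which is why pooling memory across machines matters.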

Nvidia’s DGX Spark systems, aimed at small-scale AI development, are rated at up to 240 W per box. Linking four of them would put the theoretical peak near 960 W, although real-world draw would differ. The point is not performance parity; Apple’s message is that a desktop Mac cluster can now take on workloads that previously required beastly GPU boxes, with far less power and noise. As veteran programmer John Carmack has publicly warned on social media, performance expectations for DGX Spark may need adjusting once the units ship widely.
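The power comparison is simple arithmetic on the rated and reported figures in the text. It sets a theoretical peak rating against a reported ceiling rather than measured draw under the same workload, so on its own it says nothing about performance per watt.

```python
def cluster_peak_w(per_box_w: float, boxes: int) -> float:
    """Theoretical peak for identical boxes: per-box rating times count."""
    return per_box_w * boxes


spark_peak = cluster_peak_w(240, 4)   # four DGX Spark boxes at their 240 W rating
mac_ceiling = 500                     # reported upper bound for the four-Mac demo
per_mac_avg = mac_ceiling / 4         # average per Mac Studio, at most

print(f"DGX Spark x4 peak: {spark_peak:.0f} W")
print(f"Mac cluster: under {mac_ceiling} W total, under {per_mac_avg:.0f} W per machine")
print(f"Mac ceiling as a share of Spark peak: {mac_ceiling / spark_peak:.0%}")
```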

MLX and EXO 1.0 underpinning multi‑Mac clustering

MLX is Apple’s array framework for machine learning on Apple Silicon, developed by Apple’s machine learning research group. It uses Metal’s tensor operations (TensorOps) and Metal Performance Primitives to turn machine learning model graphs into efficient kernels. The multi-Mac features arrive with an upcoming macOS update that is still in beta.

The strategy is pragmatic: instead of replicating costly data center fabrics such as NVLink and NVSwitch, Apple leans on tightly integrated CPUs, GPUs, and NPUs sharing unified memory, then links machines together over a fast, widely available port. For many labs and startups, being able to scale from one Mac to four without changing code paths or drivers is a significant productivity gain.
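Part of what lets the same code run on one Mac or four is that distributed programs are usually written against a rank and a world size rather than a fixed machine count. This pure-Python sketch illustrates the idea with a tensor-parallel matrix-vector product: each rank multiplies its slice of the inner dimension, and an all-reduce sums the partial results. The collective is simulated in a single process, and the function names are hypothetical. MLX exposes a corresponding collective, `mlx.core.distributed.all_sum`, but this is an illustration, not MLX’s or EXO’s implementation.

```python
def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Plain matrix-vector product: y[i] = sum_j W[i][j] * x[j]."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def shard_columns(weights, x, rank: int, world_size: int):
    """Give each rank a contiguous slice of the inner (column) dimension."""
    n = len(x)
    start = rank * n // world_size
    end = (rank + 1) * n // world_size
    return [row[start:end] for row in weights], x[start:end]


def all_sum(partials: list[list[float]]) -> list[float]:
    """Simulated all-reduce: element-wise sum of every rank's partial result."""
    return [sum(vals) for vals in zip(*partials)]


def tensor_parallel_matvec(weights, x, world_size: int) -> list[float]:
    """Same code path for any world size; only the shard boundaries change."""
    partials = []
    for rank in range(world_size):  # on a real cluster, each rank is its own machine
        w_shard, x_shard = shard_columns(weights, x, rank, world_size)
        partials.append(matvec(w_shard, x_shard))
    return all_sum(partials)


W = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
x = [1.0, 1.0, 1.0, 1.0]
for world_size in (1, 2, 4):
    print(world_size, tensor_parallel_matvec(W, x, world_size))
```

Every world size yields the same result as the single-machine `matvec`, which is the property that lets a one-Mac prototype scale out without a different code path.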

M5 neural accelerators slash time to first token

Apple also highlighted the M5 generation’s Neural Accelerators and upgraded compute pipelines. According to an Apple engineering blog post, dedicated matrix-multiplication units and expanded TensorOps support sharply cut time to first token (TTFT), the compute-bound stage before an LLM produces its first output. On a Qwen model from Alibaba Cloud, M5 delivered up to 4x faster TTFT than M4.
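Why does faster matrix math translate directly into TTFT? During prefill, the whole prompt is processed in large matrix multiplications, so a common back-of-envelope estimate is about 2 FLOPs per active parameter per prompt token, divided by sustained throughput. The sketch applies that rule of thumb to a hypothetical 8B-parameter model, a 4,096-token prompt, and made-up throughput figures. It ignores attention cost, memory traffic, and overheads, and its numbers are not Apple’s measurements.

```python
def prefill_flops(active_params: float, prompt_tokens: int) -> float:
    """Rule of thumb: ~2 FLOPs (one multiply-add) per active parameter per token."""
    return 2 * active_params * prompt_tokens


def ttft_seconds(active_params: float, prompt_tokens: int, sustained_tflops: float) -> float:
    """Compute-bound TTFT estimate: prefill FLOPs / sustained FLOP rate."""
    return prefill_flops(active_params, prompt_tokens) / (sustained_tflops * 1e12)


PARAMS = 8e9    # hypothetical 8B-parameter dense model
PROMPT = 4096   # hypothetical prompt length in tokens

baseline = ttft_seconds(PARAMS, PROMPT, 10)   # hypothetical 10 TFLOPS sustained
faster = ttft_seconds(PARAMS, PROMPT, 40)     # hypothetical 4x the matmul throughput
print(f"{baseline:.2f} s -> {faster:.2f} s ({baseline / faster:.1f}x faster)")
```

For mixture-of-experts models, `active_params` is the parameter count actually used per token, not the total.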

Generating subsequent tokens is memory-bound rather than compute-bound, yet Apple still measured overall LLM throughput gains of 19% to 27% across various models, driven by M5’s higher memory bandwidth.
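The memory-bound side can be sketched the same way. In single-stream decoding, each new token requires reading roughly all of the active weights from memory, so bandwidth divided by bytes read per token gives a rough ceiling on tokens per second. Assuming the commonly cited unified-memory bandwidths of about 120 GB/s for the base M4 and about 153 GB/s for the base M5, plus a hypothetical 4 GB of weights, the ceiling rises by about 27.5%, an upper bound in line with the top of Apple’s reported range.

```python
def decode_ceiling_tok_s(bandwidth_gb_s: float, weights_gb_per_token: float) -> float:
    """Bandwidth-bound ceiling: how many times per second the weights can be streamed."""
    return bandwidth_gb_s / weights_gb_per_token


WEIGHTS_GB = 4.0   # hypothetical: roughly 8B parameters at 4-bit
m4 = decode_ceiling_tok_s(120, WEIGHTS_GB)   # assumed M4 bandwidth, GB/s
m5 = decode_ceiling_tok_s(153, WEIGHTS_GB)   # assumed M5 bandwidth, GB/s
print(f"M4 ceiling ~{m4:.0f} tok/s, M5 ceiling ~{m5:.0f} tok/s, +{m5 / m4 - 1:.1%}")
```

Because the model size cancels out of the ratio, the relative gain depends only on the bandwidth figures; measured gains land below it once compute and overheads intrude.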

The gains extend beyond LLMs: image generation also improved. Using MLX, an M5 system generated a 1,024×1,024 image with FLUX-dev-4bit (12B parameters) up to 3.8x faster than M4. These per-chip gains stack with the new clustering feature, which adds headroom for longer contexts, larger batch sizes, and bigger model configurations.

DGX competition or another way forward for developers

Nvidia’s DGX family is still the high-water mark for peak training and inference at scale, where inter-GPU connectivity offers bandwidth that the Thunderbolt bus simply can’t match. Apple’s pitch is a different one: developer-first workflow, local privacy, and high performance per watt, now with a way to scale model size and context on the desktop. It is not so much a replacement for DGX as it is another rung on the ladder between one laptop and a rack of accelerators.

The next proof point will be independent validation. If Apple and its partners publish MLPerf-style numbers, or open-source reproducible benchmarks using MLX and EXO configurations, the wider community will get a clearer picture of throughput, latency, and scaling behavior. For now, the takeaway is straightforward: Thunderbolt 5 Macs can team up on truly gigantic models, and Apple wants the setup to be turnkey for developers.