Anthropic is expanding access to persistent memory in its flagship AI assistant, making the feature available to all paid Claude users. After a testing period on Team and Enterprise accounts, Memory moved to general availability for Max subscribers and is now making its way to Pro customers. It carries longer-term context across people’s workflows, so they do not have to start from scratch each time.

What Claude Memory Does Now Across Conversations and Tasks

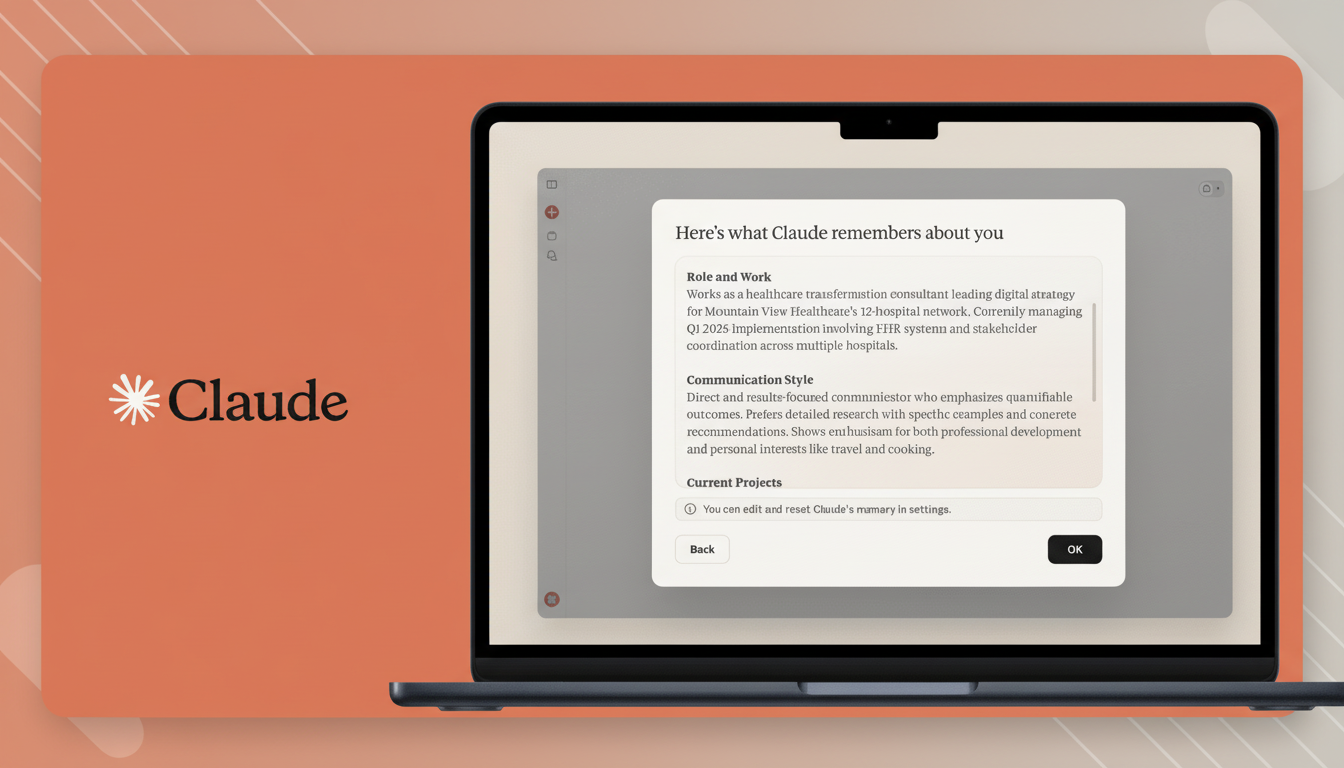

Memory lets Claude retain key information across conversations, such as preferences, ongoing projects, writing style, or domain-specific facts you share with it.

Users enable the feature in Settings and seed a few basics; Claude then builds on them as collaboration progresses.

Controls are explicit: Memory can be turned on or off at any time, specific entries can be deleted, and an “incognito” mode lets you work without saving anything. Anthropic says users can see the actual synthesized notes the model stores, rather than vague summaries, so they can understand and adjust what is kept.

The company also supports portability. Notes can be imported from other assistants, like ChatGPT or Gemini, via copy-paste, and exported if you want your Claude Memory to go with you. Under the hood, the feature relies on the Claude 4 model family and works independently of the model’s context window, which runs to roughly 200K tokens or more in some Claude variants.
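To make the controls described above concrete, here is a minimal sketch in Python of how an opt-in memory with per-entry deletion, an incognito switch, and copy-paste portability could behave. It is a toy model and not Anthropic’s implementation: the `MemoryStore` class, its method names, and the JSON export format are all assumptions invented for illustration.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class MemoryStore:
    """Hypothetical toy model of a user-controlled memory (illustration only)."""

    enabled: bool = False      # opt-in: nothing is saved until the user turns it on
    incognito: bool = False    # while True, new notes are not saved
    notes: Dict[int, str] = field(default_factory=dict)
    _next_id: int = 1

    def remember(self, text: str) -> Optional[int]:
        """Save a note and return its id, or None if saving is not allowed."""
        if not self.enabled or self.incognito:
            return None
        note_id = self._next_id
        self.notes[note_id] = text
        self._next_id += 1
        return note_id

    def forget(self, note_id: int) -> bool:
        """Delete one specific entry; return True if it existed."""
        return self.notes.pop(note_id, None) is not None

    def export_notes(self) -> str:
        """Serialize the stored notes as a JSON list (assumed format)."""
        return json.dumps(list(self.notes.values()), indent=2)

    def import_notes(self, pasted: str) -> int:
        """Import notes pasted as a JSON list of strings; return how many were saved."""
        saved = 0
        for text in json.loads(pasted):
            if self.remember(text) is not None:
                saved += 1
        return saved


if __name__ == "__main__":
    store = MemoryStore(enabled=True)
    kept = store.remember("Prefers British spelling")
    store.incognito = True
    store.remember("One-off draft, do not keep")  # ignored while incognito
    store.incognito = False
    print(store.export_notes())  # only the first note is listed
    store.forget(kept)           # the user prunes a single entry
```

The point of the sketch is the shape of the controls: saving is gated by both the on/off setting and the incognito flag, every note is individually visible and deletable, and export and import are plain text that can be copied between tools.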

Why Persistent Memory Matters for Real-World Collaboration

Persistent memory is what turns an AI from a competent responder into a collaborative partner. Instead of copy-pasting your brand voice, datasets, or sprint goals into every session, you can let Claude carry them into the next one. Imagine a head of marketing who wants the assistant to always write in a certain voice, following a specific style guide or proposal template, or a researcher assembling a literature review over several weeks who needs Claude to keep hold of the thread.

The context window, by contrast, does not persist. It is short-term working memory for a single conversation; persistent memory is what lives across sessions, projects, and devices. In practice, that means you repeat the same prompts less often, get set up faster, and can return to a task after hours or days away with its context intact.

For individuals, the payoffs are continuity and personalization. For teams, Memory offers a path to partial standardization: agreed glossaries, formatting rules, or compliance reminders that limit rework. Anthropic’s recent additions, including a Chrome extension, a new coding model, the deceptively small Haiku 4.5, and spreadsheet and deck generation, convert more of that stored context into work product.

Privacy and Safety Considerations for Claude Memory Users

Any persistent memory raises questions of control and privacy. Anthropic’s approach focuses on opt-in, transparency, and editability: users choose when Memory is on, can inspect and prune entries, and can also work in incognito mode. The company has placed a strong emphasis on safety throughout its recent models (Haiku 4.5 was marketed as its safest lightweight release so far), and it fits Memory into that mold.

Best practice still applies. Sensitive personal information, regulated health and financial data, and proprietary secrets should be handled with care and in line with company policies. Guidance from bodies such as the U.S. Federal Trade Commission and the NIST AI Risk Management Framework emphasizes data minimization, user control, and auditability, concepts that apply directly to a persistent AI memory.

How It Compares to Other AI Assistants with Memory

Persistent memory is becoming table stakes in the assistant category. OpenAI added memory to ChatGPT, and Google has tried profile-like preferences in Gemini. Anthropic’s approach focuses on visibility (showing the actual notes that are generated) and portability, so you can easily import and export your notes. For people already bouncing between assistants, the ability to transplant preferences reduces lock-in.

The broader competitive arc is about long-horizon cooperation: not just keeping a name or preference in mind, but maintaining project statuses, drafts, and decisions over weeks. Anthropic’s Responsible Scaling Policy and its “constitutional” training method are intended to provide guardrails for that ambition, something enterprises will examine closely as similar offerings appear across the landscape.

Rollout and Availability for Paid Claude Subscriptions

Memory was first introduced for Team and Enterprise accounts. It is rolling out to Max customers in Settings now, and Pro will receive it when the rollout concludes. The feature is optional and works the same way across all the Claude apps, so users can try it on one project before adopting it across the board.

What to Watch Next as Anthropic Expands Claude Memory

Product leaders at Anthropic, whose team introduced this version of the model, have described Memory as the foundation for assistants that understand and adapt to a user’s full work context over time. Expect deeper integrations with calendars, documents, and codebases, along with more granular controls that let users dictate what is remembered and for how long. The winning assistant will be the one that remembers what you want and forgets what you don’t.

Submitted by Tim Blair.