Anthropic has turned on memory in Claude, so the assistant can store details you share and carry them into new conversations, and it can even import some of your existing “memories” from other assistants like ChatGPT or Gemini. The upgrade is aimed at continuity (no more reintroducing yourself or rehashing long-running projects) and marks a sharper competitive push against incumbents with personalization features.

What’s new in Claude: persistent memory for Pro and Max users

Users on Pro ($17 a month) and Max ($100) can now turn on a memory that carries context between sessions. It was already available to Enterprise and Team customers. Anthropic presents the feature as a way to build on ideas over time: the assistant remembers your preferences and keeps track of tasks and projects, so you don’t start from zero every time.

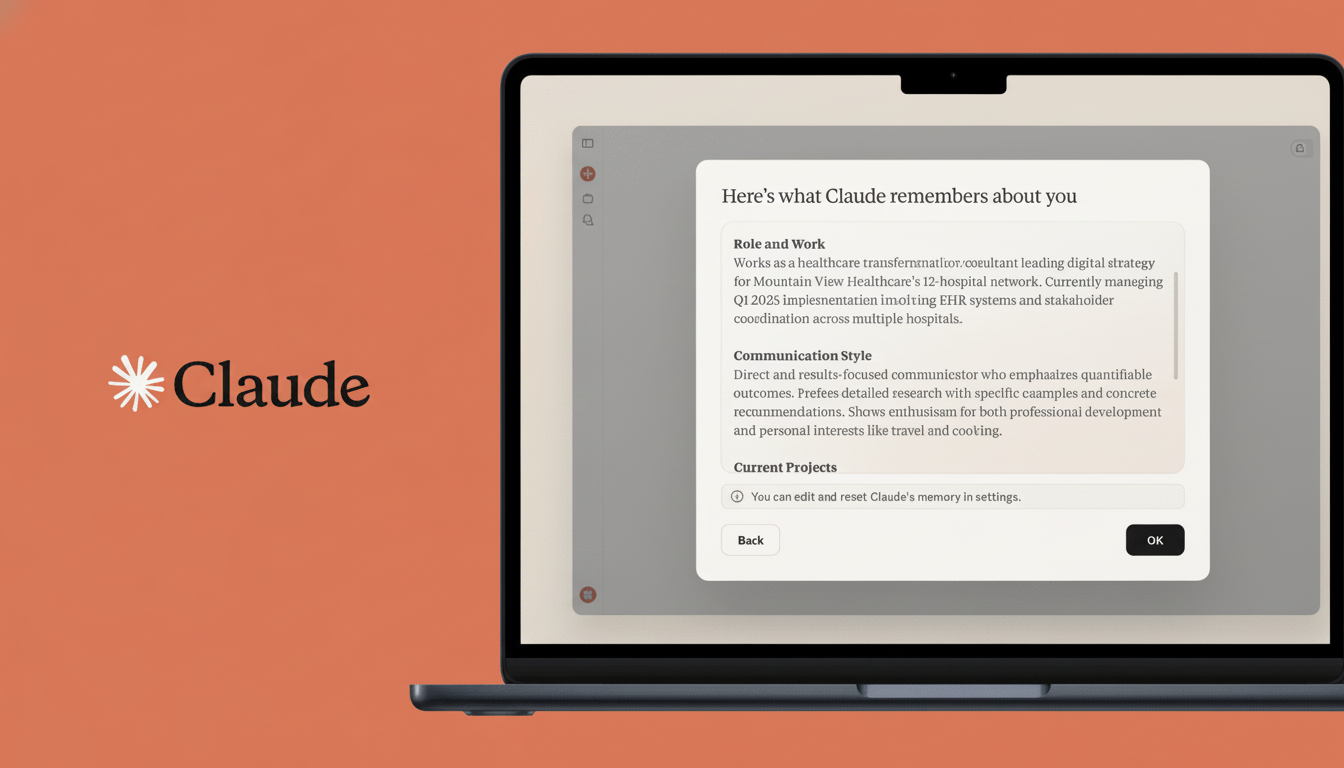

A dedicated interface shows what Claude remembers about you, and most of it is editable. You can prune outdated facts, correct mistakes or have the model forget entire topics. That transparency is key: persistent memory doesn’t make sense if users can’t see and shape it, particularly as assistants shift from ad hoc Q&A to sustained workspaces.

Importing your history from rivals: ChatGPT and Gemini

The headline twist is portability. If you’ve built up a history with another chatbot, Claude can ingest that profile so you’re not starting from scratch. Anthropic says you can paste in exported memory or a file, tell Claude that “this is another AI assistant’s memory,” and it will “consider it in their next synthesis,” provided Claude can make sense of the information.

For ChatGPT, you can access your saved profile via Settings > Personalization > Manage Memories, then copy the content into Claude. In reporter testing elsewhere, ChatGPT yielded 28 unique entries, some of which had gone stale, which is useful proof that portability requires cleanup. Before you import, preview every item and delete anything you don’t want an assistant to carry forward.

Operationally, importing lowers switching costs and onboarding friction for teams. A product lead moving between stacks can carry project norms, tone preferences and domain context along, cutting the “getting to know you” process from weeks to minutes.

Control, safety, and what Claude remembers

Anthropic says it did extensive safety testing to make sure memory doesn’t end up reinforcing bad behavior or resurfacing things you would rather not revisit. The memory viewer is integral to that, allowing you to audit what’s stored, edit entries in bulk, or turn memory off altogether. Enterprise admins typically want enforceable controls, such as the ability to restrict which categories can be made persistent or tools for resetting memory for compliance.

Persistent memory can be useful, for remembering your writing voice or a line of research you were pursuing, but it also raises familiar privacy questions. Regulators including the Federal Trade Commission and the UK Information Commissioner’s Office (ICO) have cautioned that opaque consent flows in machine learning systems, as well as overly permissive data retention, may invite enforcement. Clear labeling, easy deletion and opt-in logic are rapidly becoming table stakes for responsible AI adoption.

Importantly, memory is not omniscience. As with other assistants, stored facts can drift or become outdated. Users should expect to do some hygiene once in a while: reviewing summaries, correcting inaccuracies and telling the model what to focus on for now. Anthropic recommends guiding the assistant to focus on certain work or to forget old jobs so it doesn’t overfit on outdated context.

Why this move matters for personalization and switching costs

Memory-equipped chatbots can seem more helpful, and stickier, because they reduce repetition and open up longer arcs of work. That is also why the feature has become a competitive must-have. Anthropic’s twist is to treat memory like portable profile data, a nod to users’ emerging expectations about taking their data with them between AI services. If migration is seamless, it could speed up the move to Claude and reduce onboarding time for teams.

Mike Krieger, Anthropic’s chief product officer, has described the goal as crafting sustained thinking partnerships. In practice, that means an assistant that can remember the format you like for weekly briefs, the keywords your team uses or where you left off a research thread last week, and then pick up without being told to do so. For power users, that persistence can mean typing fewer instructions and getting more output.

A couple of stored items is no problem, but 30 or more can become an accuracy trap, a memory burden, or both. The trade-off is the one confronting every AI platform today: maximize personalization without amplifying memory’s privacy liabilities or accuracy traps. By offering users visibility, edit controls and cross-assistant import, Anthropic is betting it can thread that needle: making Claude feel like more than a blank chat window, without turning it into something that sounds less like a friend and more like something grown in a lab.