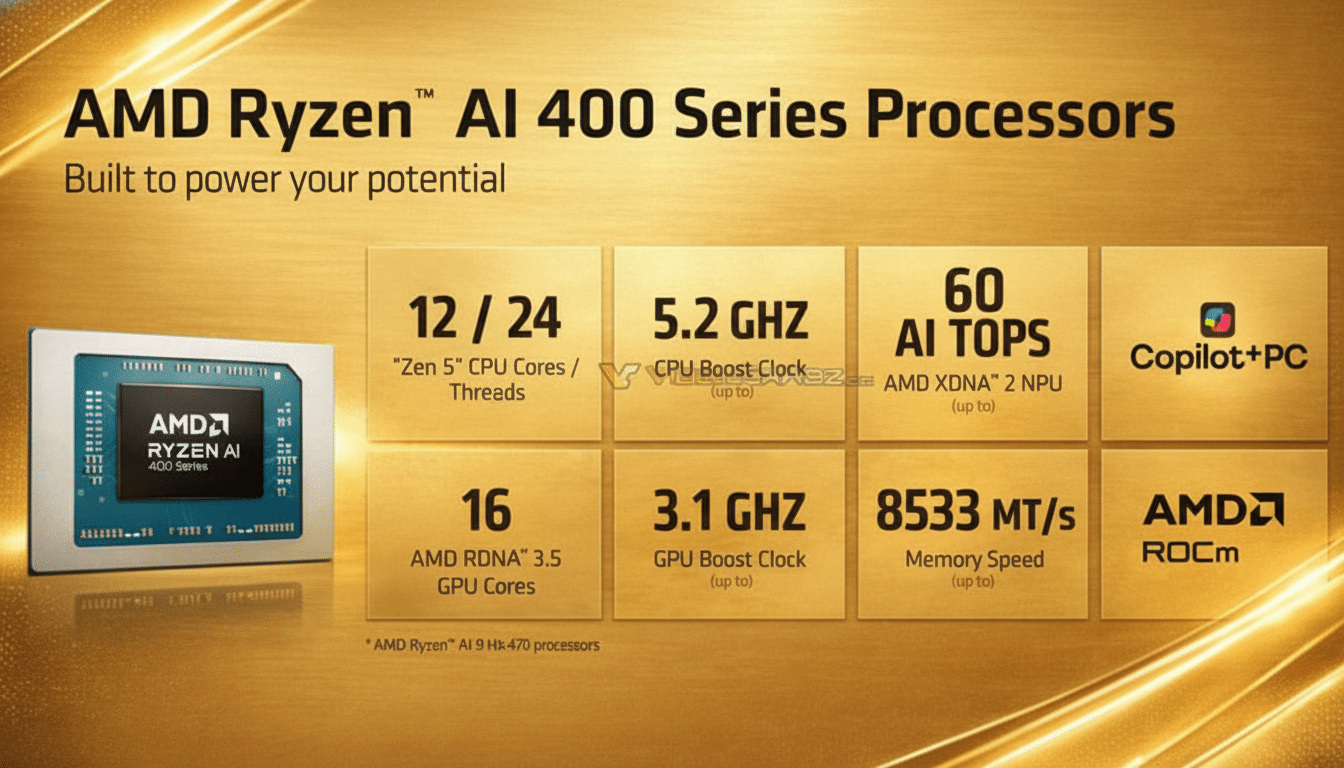

AMD’s new Ryzen AI 400 series is squarely aimed at the next wave of AI PCs, delivering up to 60 TOPS of on-device AI compute to thin laptops and compact desktops. The chips combine new Zen 5 CPU cores, RDNA 3.5 integrated graphics, and the second-generation XDNA 2 NPU, which positions them to handle local AI tasks more quickly and with less battery drain. It is a combination that system makers have been asking for as generative workloads spill over from the cloud.

Zen 5, RDNA 3.5, and XDNA 2 Under One Roof

The top configuration in the line, the Ryzen AI 9 HX 475, drives its NPU to 60 TOPS, while the family baseline sits at 50 TOPS. Those numbers matter because an NPU is the most power-efficient route for everyday AI features such as live captioning, noise suppression, background object removal, and on-device assistants. AMD combines that with higher-clocked Zen 5 cores for general workloads and RDNA 3.5 graphics for GPU-driven AI and gaming.

In raw NPU throughput, the 60 TOPS ceiling puts AMD ahead of current Intel client silicon at 50 TOPS but short of Qualcomm’s latest Snapdragon X2, which boasts 80 TOPS. Of course, TOPS is just one lens. Memory bandwidth, kernel optimizations, and software runtimes can tip real-world performance, especially in mixed CPU+GPU+NPU pipelines.

Claims of Performance at Work and Day-to-Day Reality

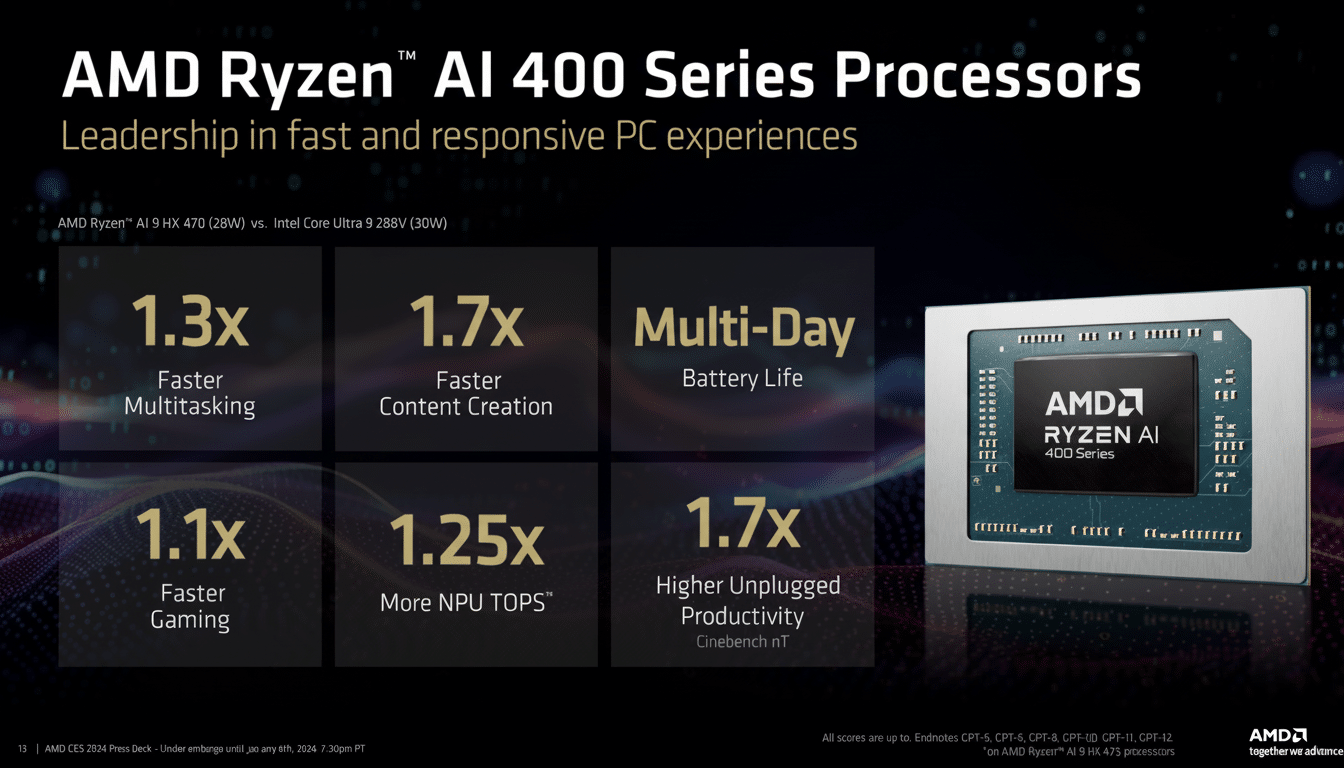

AMD’s own benchmarks show the Ryzen AI 9 HX 475 multitasking up to 1.3x faster than an Intel Core Ultra 9 288V, including a 29% advantage when running a 10-person Microsoft Teams call alongside regular office work. For creators, AMD claims gains of up to 1.7x in some popular content benchmarks, covering timeline scrubbing, filters, and AI upscalers.

On the graphics front, the integrated Radeon silicon is tuned for modern upscaling, backed by AMD’s new FSR Redstone technology, which is designed to reconstruct near-native frames from lower resolutions. In power-normalized comparisons, AMD says the new chips should edge out an Intel Core Ultra 9 288V by about 10 percent at 30 watts. These are vendor numbers, but they speak to what many creators and gamers care about: smoothness at reasonable power.

Efficiency and Battery Longevity for AI PCs

Built on TSMC’s 4nm process, the Ryzen AI 400 series centers on performance per watt. AMD is talking up “multi-day” endurance under typical load, with up to 24 hours of local video playback between charges, which points to aggressive power gating and smart scheduling across the CPU, GPU, and NPU. For ultraportable form factors, keeping AI features running constantly without draining the battery could be the most useful upgrade of all.

Software Stack and Developer Support Across OSes

AMD is banking on its ROCm software to close the distance between data center workflows and client devices. The pitch is a seamless toolchain across both Windows and Linux that leverages DirectML, ONNX Runtime, and popular frameworks like PyTorch, so developers can target the NPU when it makes sense and overflow onto the GPU for massively parallel tasks. This alignment also fits Microsoft’s Copilot+ PC requirements, whose AI baseline the Ryzen AI 400 series comfortably exceeds.
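The "target the NPU, overflow onto the GPU" idea can be sketched as a provider preference list. This is a minimal illustration, not AMD’s documented workflow: `pick_providers` and `PREFERRED_PROVIDERS` are hypothetical names, and the assumption that the NPU is reached through ONNX Runtime’s Vitis AI execution provider is ours, not something the announcement states.

```python
# Preference order: NPU first, then GPU, then CPU as a last resort.
# The strings are ONNX Runtime execution-provider identifiers. Which of
# them a given Ryzen AI 400 system exposes is an assumption of this sketch.
PREFERRED_PROVIDERS = [
    "VitisAIExecutionProvider",  # assumed NPU path
    "DmlExecutionProvider",      # DirectML GPU path (Windows)
    "CPUExecutionProvider",      # general-purpose fallback
]


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are available, in preference order."""
    available_set = set(available)
    chosen = [name for name in preferred if name in available_set]
    if not chosen:
        raise RuntimeError("no usable execution provider found")
    return chosen
```

With ONNX Runtime installed, the list of installed providers comes from `onnxruntime.get_available_providers()`, and the filtered result can be passed as the `providers` argument of `onnxruntime.InferenceSession`. The runtime then tries providers in the order given, so operators the first provider cannot handle fall through to the next one.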

Ryzen AI Max+ Aims at Heavier GPU Workloads

In addition to the core lineup, AMD is broadening its workstation-leaning Ryzen AI Max+ mobile chips. These SoCs feature a “large, shared memory” architecture that can reallocate capacity between system and graphics in real time and accommodate up to 192GB of unified memory. The Max+ variants have up to 40 GPU cores (versus 32 in the previous Max and up to 16 in the standard Ryzen AI 400 integrated graphics).

Though the Max and Max+ NPUs remain at 50 TOPS, the additional GPU silicon increases TFLOPS for diffusion models, video effects, and other GPU-heavy AI operations. Think Stable Diffusion image synthesis or high-quality video upscaling on a plane, with the NPU handling quick, lightweight tasks while the GPU chews through larger tensors. The family also pairs with AMD’s high-end Strix Halo lineup for creators seeking desktop-class iGPU horsepower in an all-in-one package.

What It Means for Buyers and OEMs in the AI PC Era

Several major OEMs, including Acer, Asus, Dell, HP, and Lenovo (the world’s largest PC maker), are preparing laptops and mini PCs with Ryzen AI 400 chips, which points to broad coverage from premium ultrabooks to compact desktops. For buyers, the headline is straightforward: these systems are built to run responsive, local assistants and can handle heavy effects without a discrete GPU. Having both NPU and GPU acceleration integrated on the same SoC, under a unified software stack, should simplify deployment of on-device inference for IT teams.

There remains a gap between lab results and real-world experience, so independent testing by industry groups and third-party labs will matter a great deal. On paper, however, the AMD recipe is intriguing: a higher NPU ceiling than most x86 rivals, meaningful CPU and iGPU gains, and better efficiency for always-on AI features. Assuming the software lands as cleanly as the silicon suggests, the Ryzen AI 400 series could become a go-to choice for balanced AI PCs across both laptops and mini systems.