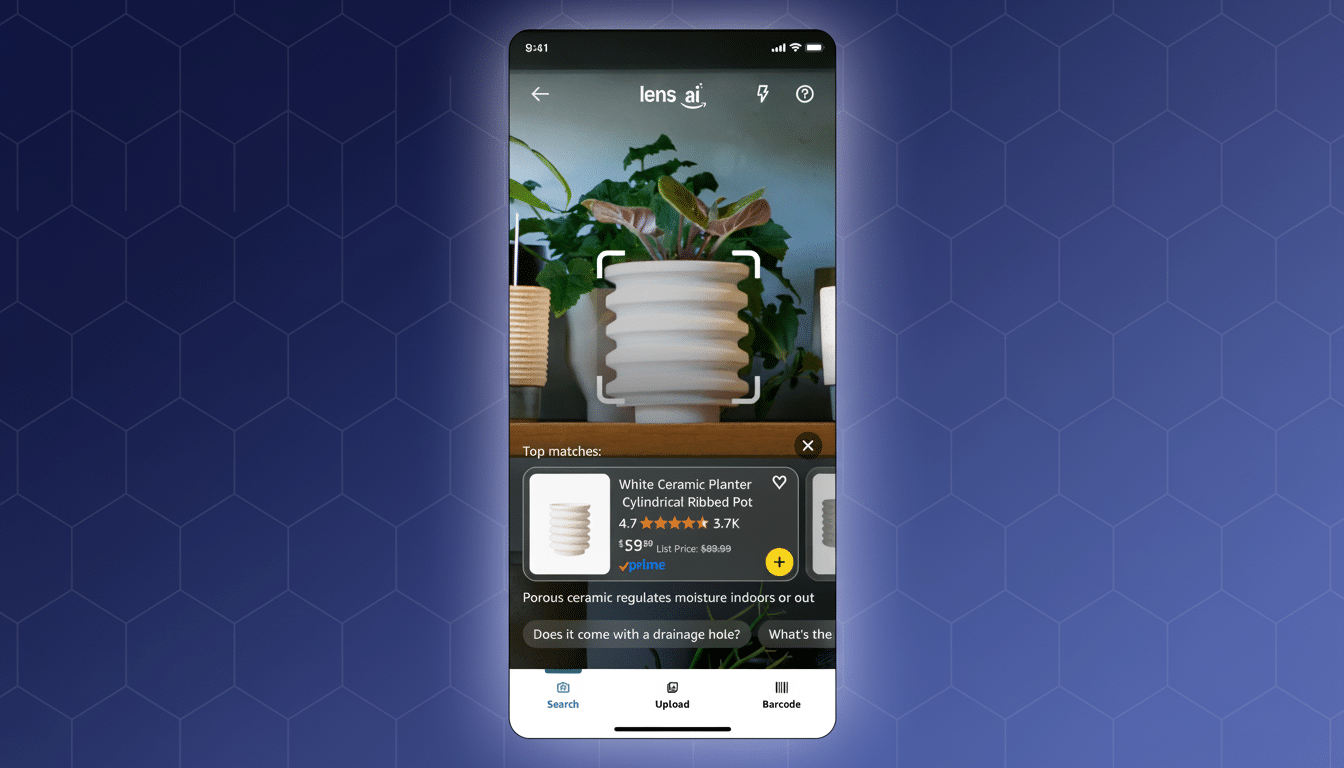

Amazon pulled back the curtain on Lens Live, a real-time visual search feature that turns your phone's camera into a shopping tool. Point it at, say, a pair of shoes, a coffee grinder, or a floor lamp in the wild, and the Amazon app brings up similar products in a swipeable carousel, each with a price, an average rating, and buttons for quick actions. The experience plugs directly into Rufus, Amazon's AI shopping assistant, which can summarize options and answer follow-up questions before you buy.

Lens Live doesn't replace Amazon Lens, the company's existing image and barcode search. Instead, it adds instant recognition and conversational context, closing the loop between discovery in the real world and checkout in the Amazon ecosystem. The rollout begins on iOS for "tens of millions" of U.S. shoppers, with broader availability coming soon.

What the new camera search can do

In the camera view of the Amazon Shopping app, you point at an object and tap the one you're interested in. Lens Live then shows that product, along with lookalikes and compatible alternatives when there are any, at the bottom of the screen. If something works for you, tap the plus to add it to your cart or the heart to save it for later: no manual typing, no trial and error with keywords.

Because it is meant for real-world use, the system is built to cope with less-than-ideal conditions: off-angle shots, cluttered backgrounds, and occlusion. Rufus sits alongside the results to produce quick overviews ("Key differences between these espresso machines?"), provide buying guides, and suggest follow-up questions you might not think to ask.

Under the hood: AWS-scale AI

Lens Live uses Amazon SageMaker to train and deploy large-scale vision models and AWS-managed Amazon OpenSearch for high-speed queries across a huge catalog. The aim of that combination is to match a camera frame, essentially a rich visual query, to relevant items within milliseconds, with latency low enough that results feel instantaneous.

The technical challenge isn't just identifying an object; it's mapping that object to commercial inventory with accurate attributes, variants, and availability. The better the models understand what you're looking at ("men's trail runners, wide fit" versus "lightweight gym trainers"), the more likely they are to return appropriate listings. The hitch, noted Tom Raifer, the chief technology officer for Jet.com, is that finer-grained modeling can also amplify some fatigue issues.
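Amazon hasn't published exactly how Lens Live matches a frame to listings. A common pattern for this kind of visual search is to turn the image into an embedding vector with a vision model and then run a nearest-neighbor query against precomputed product embeddings, which OpenSearch supports through its k-NN vector search. The sketch below illustrates only that matching step, under stated assumptions: the product IDs, the 4-dimensional vectors, and the helper names (`CATALOG`, `cosine_similarity`, `top_k_matches`) are hypothetical, and it uses brute-force cosine similarity rather than any index Amazon actually runs.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical catalog: product ID -> precomputed image embedding.
# Real embeddings have hundreds of dimensions; 4 keeps the example readable.
CATALOG: Dict[str, List[float]] = {
    "trail-runner-wide": [0.9, 0.1, 0.0, 0.2],
    "gym-trainer-light": [0.7, 0.3, 0.1, 0.1],
    "espresso-machine": [0.0, 0.1, 0.9, 0.3],
    "floor-lamp-oak": [0.1, 0.8, 0.2, 0.6],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as no match
    return dot / (norm_a * norm_b)


def top_k_matches(
    query: List[float], catalog: Dict[str, List[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Brute-force k-nearest-neighbor search: score every item, keep the k best."""
    scored = [(pid, cosine_similarity(query, vec)) for pid, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Stand-in for the embedding a vision model might produce from a photo of a running shoe.
    frame_embedding = [0.85, 0.15, 0.05, 0.2]
    for product_id, score in top_k_matches(frame_embedding, CATALOG, k=2):
        print(f"{product_id}: {score:.3f}")
```

Scoring every listing works for four items but not for a catalog of Amazon's size at millisecond latency. Production systems typically swap in an approximate nearest-neighbor index such as HNSW, one of the methods OpenSearch's k-NN search offers, trading a little recall for a large gain in speed.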

Why this is important for retail

Shoppers increasingly use physical stores to research what they will buy online, a behavior long known as "showrooming." Trade groups like the National Retail Federation say most consumers use their phones in stores to check prices and read product reviews. Visual search condenses that behavior into seconds: see it, match it, assess it, and buy it, or save it to return to later.

Visual discovery also cuts down on language friction. It's harder to describe "that curved oak lamp with a linen shade" than to just point your camera at it. From Google Lens to Pinterest, platforms have shown there's an appetite for this behavior; building the same capability directly into the Amazon purchase flow could boost conversion and average order value by making intent more actionable.

Real-world examples

See a jacket on your way to work? Lens Live can show you similar cuts across a range of price points, and Rufus can outline the trade-offs between waterproof and water-resistant materials. Testing a blender in a store? Scan it to compare wattage, jar capacity, and warranty terms on Amazon before making your move.

Home and DIY situations offer particularly strong use cases too: point at a faucet to find matching finishes, or hold your phone up to a curtain rod bracket to identify the correct diameter and hardware. Parents can quickly find compatible replacement parts, such as straws, lids, and filters, without digging through product pages.

Effects on sellers and search quality

For marketplace sellers, Lens Live may widen the top of the funnel by capturing spontaneous, real-world intent that keyword search misses. It also raises the bar on catalog data hygiene: complete and accurate titles, attributes, and imagery help matching models rank listings correctly. The Baymard Institute has documented how richer product data benefits conversions, and visual search amplifies that effect.

Quality and trust are critical. Vision systems must avoid lookalike confusion, and AI summaries must reflect the product data, not embellish it. Amazon's decision to ground results in its own catalog and tie them to product-level signals such as ratings, verified reviews, and fit notes can help guard against AI overreach.

Availability and what to watch

Lens Live is being introduced first to tens of millions of U.S. customers of the Amazon Shopping app for iOS. Amazon hasn't said when it will arrive on Android or outside the U.S. The company says it will keep expanding the experience and integrate it more deeply with Rufus for better comparisons and buying guidance.

Two questions will shape its trajectory: how well the system handles edge cases in the wild, and how Amazon balances organic visual matches against sponsored placements over time. If Lens Live proves fast and accurate, you might soon find yourself pointing your camera at the world as casually as you take a photo or type a question.