The AI arms race isn’t just about GPUs anymore. Memory has become the make-or-break factor for running modern models at scale, with costs and architecture decisions increasingly turning into a relentless game of cache management. As hyperscalers pour billions into new capacity, the price for DRAM has surged roughly 7x over the last year, shifting attention from raw compute to how efficiently systems move, store, and recall tokens.

That shift is visible at every layer. At the chip level, high-bandwidth memory is the throughput lifeline for transformer workloads. At the software level, prompt and KV caches have turned into line items that can swing unit economics. The players that master memory orchestration—getting the right context to the right model at the right time—are cutting tokens, trimming latency, and winning deals.

Why Memory Has Become the Critical AI Bottleneck

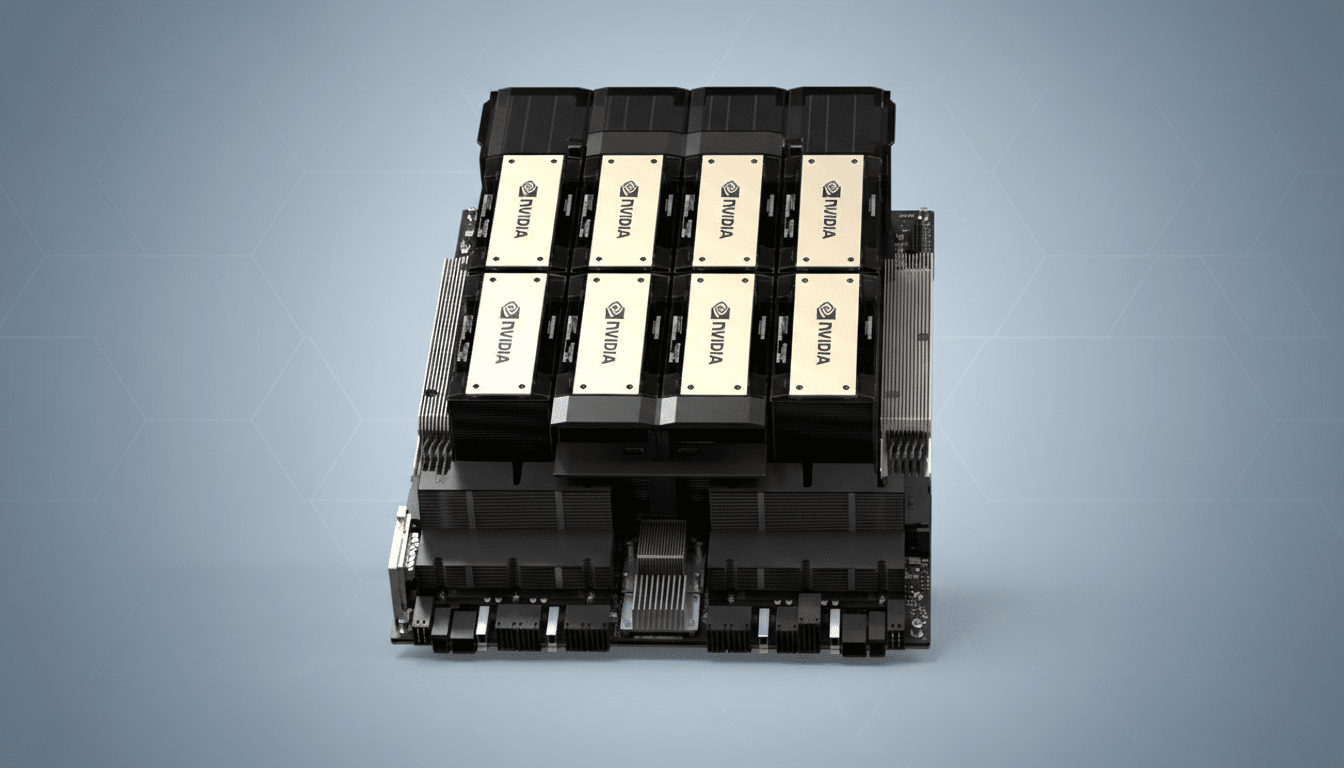

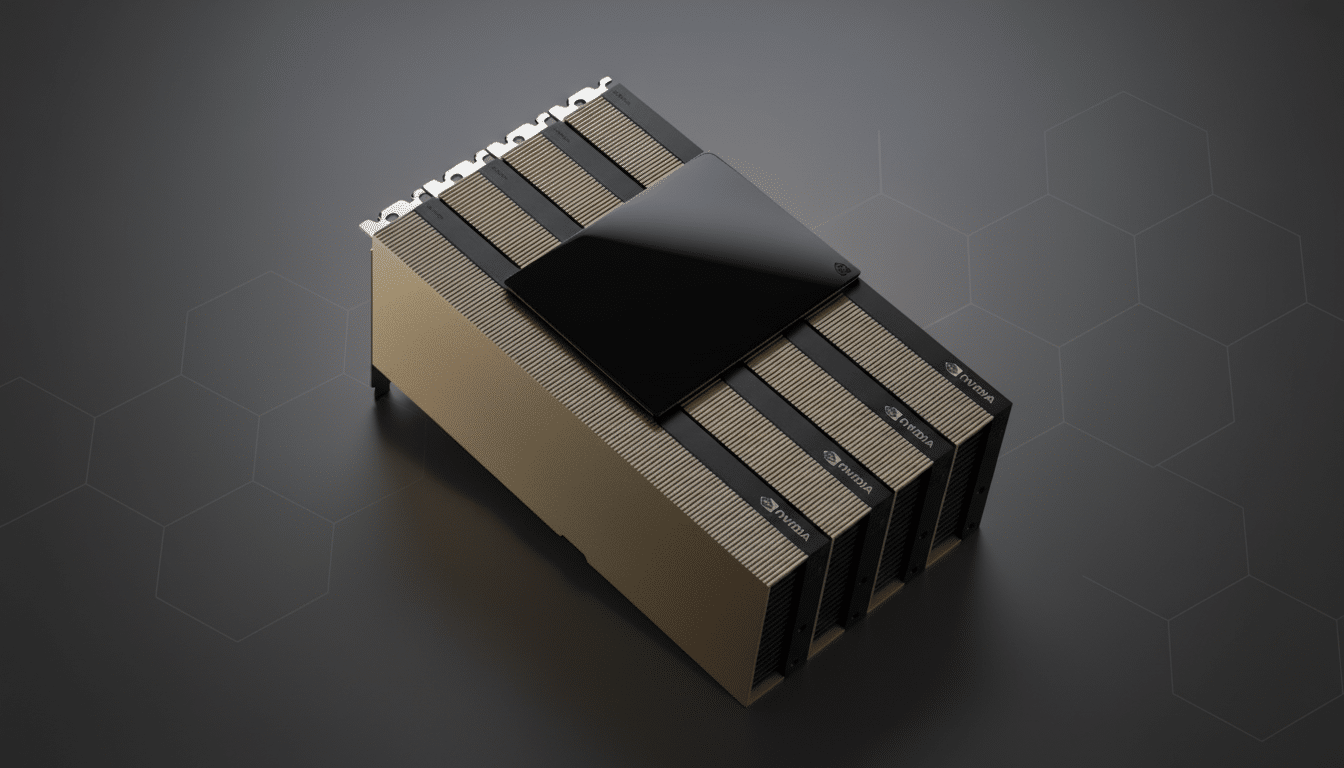

Large language models are voracious readers. Their memory footprint scales with parameter count and context length, and their throughput depends on keeping weights, activations, and KV caches close to the compute. That is why vendors pack accelerators with stacked HBM; Nvidia’s H200, for example, ships with 141 GB of HBM3e and about 4.8 TB/s of bandwidth, vital for long contexts and high-concurrency inference.
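
A rough sizing sketch makes the point concrete. It counts only model weights (no activations, KV cache, or runtime overhead), assumes dense models, and uses decimal gigabytes. The model sizes and precisions are illustrative assumptions, and the 141 GB figure is the HBM capacity quoted above.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Gigabytes (1 GB = 1e9 bytes) needed just to hold a dense model's weights."""
    return num_params * bytes_per_param / 1e9

HBM_GB = 141  # per-accelerator HBM capacity quoted above

# Illustrative model sizes and precisions (assumptions, not specific products).
for label, params in [("8B", 8e9), ("70B", 70e9)]:
    for precision, nbytes in [("FP16", 2), ("FP8", 1)]:
        need = weight_memory_gb(params, nbytes)
        headroom = HBM_GB - need  # what is left for KV cache and activations
        print(f"{label} @ {precision}: {need:.0f} GB weights, {headroom:.0f} GB headroom")
```

At FP16, a 70B-parameter model leaves only about 1 GB of a 141 GB part for everything else, which is one reason lower-precision weights and multi-GPU sharding are common.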

But HBM is scarce and expensive, and falling back to DRAM or SSD carries steep bandwidth and latency penalties. Industry trackers such as TrendForce have warned that HBM supply remains tight, fueling price increases that ripple into total cost of ownership for inference clusters. The result: memory capacity and bandwidth, not just FLOPs, now set the ceiling for practical throughput.

Model design compounds the pressure. As vendors advertise 200K-token contexts and agentic systems chain multiple calls, KV caches balloon. The cache can easily dominate per-request memory, meaning utilization strategies—batching, sliding windows, speculative decoding—pay off only if the working set stays resident in fast memory.
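
How fast the KV cache grows is easy to estimate: each token stores one key vector and one value vector per layer for every KV head. The sketch below assumes a hypothetical 70B-class configuration with grouped-query attention (80 layers, 8 KV heads, head dimension 128) and 16-bit cache entries. Real models differ, and a sliding window only caps memory for models built to attend that way.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_elem=2):
    """Bytes of KV cache: one key and one value vector per layer, KV head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

# Hypothetical 70B-class config with grouped-query attention (assumed values).
cfg = dict(num_layers=80, num_kv_heads=8, head_dim=128)

full = kv_cache_bytes(**cfg, seq_len=200_000)    # whole 200K-token context kept resident
windowed = kv_cache_bytes(**cfg, seq_len=4_096)  # sliding window of 4,096 tokens (assumed)
print(f"per token:          {kv_cache_bytes(**cfg, seq_len=1) / 1e6:.2f} MB")
print(f"200K-token context: {full / 1e9:.1f} GB per request")
print(f"4K sliding window:  {windowed / 1e9:.2f} GB per request")
```

Under these assumptions a single 200K-token request needs roughly 65 GB of KV cache, about 0.33 MB per token, before batching multiplies it.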

The Economics of Caching in Modern AI Inference

Prompt caching has moved from a neat trick to a pricing battleground. Anthropic’s documentation, once a simple “use caching, save money,” now reads like a playbook for paying a premium up front on cache writes, with 5-minute and 1-hour tiers that change the calculus for session-heavy apps. Keep hot context in cache and the next call is cheap; miss the window and you pay the full token toll. Because cache hits require an exact prefix match, even a small edit early in a prompt can invalidate everything after it, turning context engineering into cost engineering.
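
The cost logic of TTL-based prompt caching can be sketched as a small simulation. The multipliers are assumptions for illustration (cache writes priced above base input, cache reads far below it, the longer tier costing more to write), so check a provider's current rate card for real numbers. The sketch also assumes that a hit refreshes the entry's lifetime and that only the shared prefix is cached.

```python
from dataclasses import dataclass

@dataclass
class CacheTier:
    ttl_s: float       # lifetime of an entry after its last use, in seconds
    write_mult: float  # price multiplier on prefix tokens when the cache is (re)written
    read_mult: float   # price multiplier on prefix tokens on a cache hit

def session_input_cost(call_times_s, prefix_tokens, fresh_tokens, price_per_token, tier):
    """Input-token cost for a session whose calls share one cached prefix."""
    cost, last_use = 0.0, None
    for t in call_times_s:
        hit = last_use is not None and t - last_use <= tier.ttl_s
        mult = tier.read_mult if hit else tier.write_mult
        cost += prefix_tokens * price_per_token * mult  # cached (or re-cached) prefix
        cost += fresh_tokens * price_per_token          # new tokens always pay full price
        last_use = t                                    # a hit or a write refreshes the TTL
    return cost

# Hypothetical tiers and workload; prices are in arbitrary units per token.
FIVE_MIN = CacheTier(ttl_s=300, write_mult=1.25, read_mult=0.1)
ONE_HOUR = CacheTier(ttl_s=3600, write_mult=2.0, read_mult=0.1)
calls = [0, 600, 1200, 1800]  # four calls, ten minutes apart
PREFIX, FRESH, PRICE = 10_000, 500, 1.0

no_cache = len(calls) * (PREFIX + FRESH) * PRICE
print(f"no cache: {no_cache:,.0f}")
for name, tier in [("5-minute", FIVE_MIN), ("1-hour", ONE_HOUR)]:
    print(f"{name} tier: {session_input_cost(calls, PREFIX, FRESH, PRICE, tier):,.0f}")
```

With calls ten minutes apart, every call misses the 5-minute window, so that tier (52,000 units) costs more than not caching at all (42,000), while the 1-hour tier comes in at 25,000. Matching the retention tier to a session's call cadence matters as much as deciding to cache.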

Semiconductor analyst Dan O’Laughlin and Val Bercovici, chief AI officer at Weka, have argued that the industry has underweighted memory in its pricing and design assumptions. Their point is playing out in the field: teams report double-digit savings by deduplicating system prompts, splitting retrieval into reusable subqueries, and sharing KV caches across concurrent agents. Multiply that across millions of calls and the difference shows up on the P&L.
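
Deduplicating shared prompt material across agents can be sketched with prefix-aware hashing. Because a KV entry depends on every token before it, the sketch keys each segment by a hash of that segment and everything preceding it, so only identical prefixes are shared. A whitespace word count stands in for a real tokenizer and an in-memory set stands in for a shared KV store; both are assumptions, and this is not a description of any particular team's system.

```python
import hashlib

def prefix_keys(segments):
    """Yield (key, segment) pairs where each key covers the segment and all segments before it."""
    h = hashlib.sha256()
    for seg in segments:
        h.update(seg.encode("utf-8"))
        h.update(b"\x00")  # separator so ["ab", "c"] and ["a", "bc"] hash differently
        yield h.hexdigest(), seg

def encode_with_shared_cache(requests, count_tokens=lambda s: len(s.split())):
    """Return (tokens requested, tokens actually encoded) across all requests."""
    cache = set()
    requested = encoded = 0
    for segments in requests:
        for key, seg in prefix_keys(segments):
            n = count_tokens(seg)
            requested += n
            if key not in cache:  # first time this exact prefix is seen: pay to encode it
                cache.add(key)
                encoded += n
    return requested, encoded

SYSTEM = " ".join(["policy"] * 400)  # stand-in for a 400-token system prompt
requests = [[SYSTEM, f"ticket {i} details"] for i in range(3)]  # three concurrent agents
requested, encoded = encode_with_shared_cache(requests)
print(f"requested {requested} tokens, encoded {encoded} ({1 - encoded / requested:.0%} saved)")
```

The savings scale with how much of each request is shared and how consistently the shared part sits at the front.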

This has spawned an ecosystem. Startups like TensorMesh focus on cache optimization, while platform providers are exposing knobs for cache lifetimes, eviction policies, and partial-key matching. Expect more transparency in metered cache reads and writes, and greater alignment between model providers’ rate cards and how data actually sticks in memory.
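
Eviction policy and partial-key matching can be illustrated with a block-granular prefix cache that evicts the least recently used block. The sketch is loosely in the spirit of the block-level prefix caching some open-source inference servers use, but the class, its parameters, and the use of characters as tokens are hypothetical. One design choice worth noting: ancestors are always marked more recently used than their descendants, so eviction trims the tails of long prefixes before it breaks a shared head.

```python
from collections import OrderedDict

class PrefixLRUCache:
    """Hypothetical block-granular prefix cache with LRU eviction.

    Each entry stands for one cached block of KV state, keyed by the full token prefix
    ending at that block boundary, so a key only matches if everything before it matches.
    """

    def __init__(self, capacity_blocks: int, block_size: int = 4):
        self.capacity = capacity_blocks
        self.block_size = block_size
        self.entries = OrderedDict()  # prefix tuple -> None, least recently used first

    def _block_keys(self, tokens):
        full = len(tokens) - len(tokens) % self.block_size  # ignore a trailing partial block
        return [tuple(tokens[:end]) for end in range(self.block_size, full + 1, self.block_size)]

    def _touch(self, keys):
        # Refresh in reverse so shorter prefixes end up most recently used.
        for key in reversed(keys):
            self.entries[key] = None
            self.entries.move_to_end(key)

    def lookup(self, tokens) -> int:
        """Return the number of leading tokens served from cache (longest cached prefix)."""
        hits = []
        for key in self._block_keys(tokens):
            if key not in self.entries:
                break
            hits.append(key)
        self._touch(hits)
        return len(hits) * self.block_size

    def insert(self, tokens) -> None:
        self._touch(self._block_keys(tokens))
        while len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used block

cache = PrefixLRUCache(capacity_blocks=3, block_size=4)
system = list("abcdefgh")                   # two blocks of shared "system prompt" tokens
cache.insert(system + list("wxyz"))         # session A fills all three slots
print(cache.lookup(system + list("1234")))  # 8: session B reuses the two shared blocks
cache.insert(system + list("1234"))         # B's own block forces one eviction
print(cache.lookup(system + list("wxyz")))  # 8: A's private tail block was evicted
print(cache.lookup(list("X") + system[1:] + list("1234")))  # 0: one edited leading token
```

The last lookup shows why a small edit near the start of a prompt is so costly: every block key downstream of the change misses.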

Orchestrating Memory from Chip to Swarm Architectures

Memory strategy now spans the entire stack. At the hardware layer, operators juggle HBM for speed, DRAM for capacity, and NVMe for spillover. PCIe bottlenecks, NUMA placement, and interconnect topology can make or break latency budgets. Chiplet roadmaps and standards like CXL are enabling pooled memory and tiering schemes that squeeze more work from each node by reducing overprovisioning.
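
Tiering can be sketched as a greedy placement: rank cached blocks by how often they are read and give the hottest ones the fastest tier that still has room. The capacities, block sizes, and access rates below are hypothetical, and a production scheduler would also weigh block size, migration cost, and interconnect topology rather than rank on access rate alone.

```python
from dataclasses import dataclass

@dataclass
class Tier:
    name: str
    capacity_gb: float

def place_by_hotness(blocks, tiers):
    """Greedy placement: hottest blocks go to the fastest tier that still has room.

    blocks: list of (block_id, size_gb, accesses_per_s); tiers ordered fastest first.
    Returns {block_id: tier_name}; raises MemoryError if no tier can hold a block.
    """
    free = {t.name: t.capacity_gb for t in tiers}
    placement = {}
    for block_id, size_gb, _rate in sorted(blocks, key=lambda b: b[2], reverse=True):
        for t in tiers:
            if free[t.name] >= size_gb:
                free[t.name] -= size_gb
                placement[block_id] = t.name
                break
        else:
            raise MemoryError(f"no tier can hold block {block_id}")
    return placement

# Hypothetical capacities (GB) and cached blocks (id, size in GB, reads per second).
tiers = [Tier("HBM", 80), Tier("DRAM", 256), Tier("NVMe", 4000)]
blocks = [
    ("shared-system-prompt", 20, 500.0),
    ("agent-A-session", 40, 40.0),
    ("agent-B-session", 40, 35.0),
    ("archived-session", 300, 0.1),
]
print(place_by_hotness(blocks, tiers))
```

Here the shared prompt and the busiest session stay in HBM, the next session spills to DRAM, and the cold archive lands on NVMe.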

Higher up, scheduler and runtime choices determine whether tokens hit hot cache or cold storage. Swarm-style systems—multiple specialized models coordinating on a task—are moving toward shared caches and graph-aware schedulers that pass intermediate state instead of re-encoding it. Retrieval flows are being redesigned so that expensive, immutable chunks (like policies or product catalogs) live in long-lived caches, while ephemeral user context is scoped tightly to avoid churn.
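
The ordering principle behind long-lived versus ephemeral context can be shown with plain strings: put immutable chunks first and per-user text last, and consecutive requests share a long common prefix that a prefix cache can reuse. Character overlap is used here as a rough stand-in for token overlap, and the policy and catalog text is invented for illustration.

```python
def assemble(stable_chunks, volatile_chunks, stable_first=True):
    """Join prompt chunks, optionally placing long-lived chunks ahead of per-request ones."""
    parts = stable_chunks + volatile_chunks if stable_first else volatile_chunks + stable_chunks
    return "\n\n".join(parts)

def shared_prefix_len(a: str, b: str) -> int:
    """Length of the common leading substring: the part a prefix cache could reuse."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

POLICY = "Returns are accepted within 30 days of delivery."  # invented, long-lived
CATALOG = "SKU-1: kettle. SKU-2: toaster. SKU-3: blender."   # invented, long-lived
turn_1 = ["User: where is my order?"]                       # ephemeral
turn_2 = ["User: can I return the toaster?"]                # ephemeral

for stable_first in (True, False):
    a = assemble([POLICY, CATALOG], turn_1, stable_first)
    b = assemble([POLICY, CATALOG], turn_2, stable_first)
    print(f"stable_first={stable_first}: {shared_prefix_len(a, b)} of {len(b)} chars reusable")
```

Reversing the order discards nearly all of the reuse, even though both prompts contain the same stable text.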

Enterprise storage vendors are also entering the conversation, arguing that AI pipelines should treat model state, embeddings, and vector indexes as first-class data assets. That mindset keeps training, fine-tuning, and inference caches coherent, reducing duplication and improving hit rates across the lifecycle.

What to Watch Next in AI Memory and Inference Costs

Benchmark suites like MLPerf have started to surface the impact of KV-cache and batching optimizations, and we’re likely to see “memory-efficiency” metrics formalized alongside throughput and latency. On the hardware side, more accelerators will ship with larger HBM footprints and better compression for cache entries, while data centers pilot CXL-based memory pools to stretch scarce capacity.

On the software side, expect SDKs that are memory-aware by default: automatic prompt deduplication, cacheability scoring for RAG payloads, and policy engines that balance hit rates against freshness. Pricing will evolve, too, with granular SLAs for cache retention and volume discounts that incentivize sustained reuse over bursty workloads.

The big takeaway is simple: as memory turns into the governing resource for AI, architectural choices and pricing signals will reward teams that treat context like capital. In a world where DRAM and HBM set the rules, the winners will be the ones who spend tokens sparingly and keep the right bytes close.