The center of gravity in AI is shifting from the race to build the biggest models toward practical deployment: smaller models carefully tailored for real work, intelligence built into devices, agents that actually plug into enterprise systems, and simulation‑driven “world models” that learn how the world works.

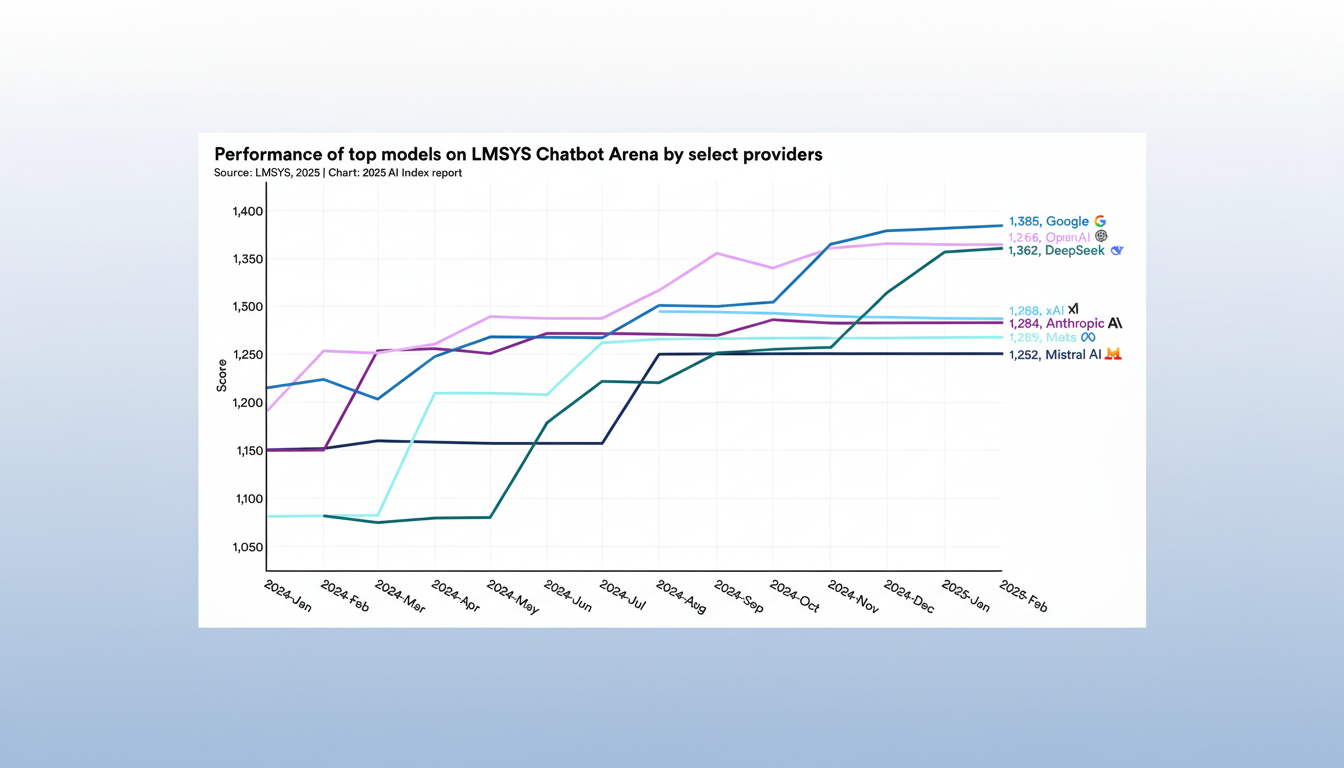

That shift is not only cultural but also technical and economic. Stanford’s AI Index has documented the rising cost of training frontier systems, with top‑end budgets pushing into nine figures, while leading researchers have pointed out that simply scaling transformers is increasingly yielding diminishing returns. The next gains, they argue, will come from better architectures, better data, and a better fit with human workflows.

Smaller Models, Bigger Payoffs in Real Deployments

Welcome to the era of small language models. Instead of one behemoth model doing everything reasonably well, companies are building families of small models, typically between 1 billion and 13 billion parameters, specialized on in‑house data and governed by strict policies. Open‑weight offerings such as Llama variants and newer releases from Mistral have shown that task‑specific tuning can match or surpass larger systems on accuracy while cutting latency and spend.

In regulated industries, the economic and governance advantages are hard to ignore. Teams report inference cost reductions of 60–90% on targeted workloads after moving them to distilled or quantized small models, along with higher throughput and lower data‑residency risk, because the models can run on‑premises or in a VPC. On‑device NPUs from Apple, Qualcomm, Intel, and AMD are pushing this shift to the edge, enabling private, sub‑second experiences for summarization, translation, and vision tasks.
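To make “quantized” concrete, here is a minimal sketch of symmetric per‑tensor int8 quantization in plain Python. It is a toy under stated assumptions: production toolchains typically quantize per channel or per group, calibrate on sample data, and run integer kernels on dedicated hardware. The function names are hypothetical and do not correspond to any particular library.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (illustrative sketch, not a library API)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # A single scale maps the largest magnitude to 127; guard against all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.88, -0.5]
    codes, scale = quantize_int8(w)
    approx = dequantize_int8(codes, scale)
    # Rounding error per weight is bounded by half a quantization step (scale / 2).
    print(codes, scale, max(abs(a - b) for a, b in zip(w, approx)))
```

Each weight now needs one byte instead of the four bytes of float32, at the cost of a rounding error of at most half a quantization step; that memory and bandwidth saving is part of why quantized small models are cheaper to serve.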

The key unlock is data quality. One‑off prompts are giving way to retrieval‑augmented generation, careful evaluation suites, and continuous fine‑tuning pipelines. Organizations that prioritize feedback loops (humans in the loop, weak supervision, and telemetry) are seeing measurable gains in precision without moving to ever‑larger base models.
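The retrieval half of retrieval‑augmented generation can be sketched in a few lines. This is a minimal illustration, assuming a toy bag‑of‑words cosine similarity in place of the learned embeddings and vector index a real deployment would use; the document store, function names, and prompt template are hypothetical, and the call to the model itself is omitted.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (toy stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the model by placing retrieved passages ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    store = [
        "Refunds are processed within 5 business days.",
        "Our office is closed on public holidays.",
        "Refunds require the original order number.",
    ]
    print(build_prompt("How many days do refunds take?", store))
```

Swapping the toy scorer for embedding similarity changes only `tokenize` and `cosine`; the retrieve‑then‑prompt shape stays the same, which is what lets teams improve answers by curating the document store rather than by retraining the model.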

Agents Get Real With Standard Connectors

Agents tended to underwhelm while they lived inside sandboxes. The breakthrough is the connective tissue that lets models invoke the tools where work actually happens. Anthropic’s Model Context Protocol (MCP) has become a de facto “USB‑C for AI,” defining how agents connect securely to databases, search, and APIs. Support from major model providers, together with the protocol’s stewardship as a Linux Foundation project, is fostering a vendor‑neutral ecosystem.
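MCP messages are JSON‑RPC 2.0, so the shape of a tool invocation is easy to show. The sketch below only builds request payloads with the standard library, assuming the `tools/list` and `tools/call` methods from the MCP specification; the tool name `lookup_customer` and its arguments are hypothetical, and the transport (stdio or HTTP), initialization handshake, and capability negotiation are omitted. Check the current specification for the exact fields before relying on this.

```python
import json
from itertools import count
from typing import Any, Dict

# JSON-RPC request ids must be unique within a session; a counter is the simplest source.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}
    return json.dumps(message)


def call_tool(name: str, arguments: Dict[str, Any]) -> str:
    """Build an MCP-style tools/call request for a named tool."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # Discover available tools, then invoke one (hypothetical tool and arguments).
    print(jsonrpc_request("tools/list", {}))
    print(call_tool("lookup_customer", {"customer_id": "C-1042"}))
```

Because every connector speaks the same envelope, an agent runtime can add access control, logging, and audit trails in one place rather than per integration.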

With standardized connectors, agentic workflows are moving from demos into production: voice intake agents that open tickets, reconcile records, and write back to systems of record; sales assistants that query CRMs, produce quotes, and track approvals; IT service agents that triage incidents end to end. The ingredients are now clear: tool use, memory, role‑based access control, observability, and guardrails, packaged with SLAs and audit trails that satisfy security and compliance teams.

World Models Push Into Interactive Systems

Language models predict the next token; world models learn the dynamics of environments. That distinction matters for planning, robotics, and simulation. Research labs and startups are building real‑time, general‑purpose world models that learn phenomena such as physics and language by observing the world. High‑profile efforts range from Google DeepMind’s Genie line to commercial releases such as World Labs’ Marble and creative tools such as Runway’s early world‑model work. That seasoned scientists are founding dedicated labs also shows how central this track has become.

The first commercial wave is gaming and synthetic data. PitchBook analysis suggests that world‑model technology could grow from a novelty into a hundred‑billion‑dollar market this decade, driven by procedural content, adaptive non‑player characters, and rapid playtesting. The longer‑term bet is autonomy: robots and vehicles that generalize safely across messy, open‑ended settings.

Economics and Safety Take the Wheel for AI’s Future

Business budget owners are asking for line‑of‑business results, not benchmark trophies. That makes cost per task, time to value, and reliability the most important selection criteria. Techniques such as quantization, pruning, sparsity, and retrieval are gaining traction because they cut total cost of ownership while largely preserving accuracy. The International Energy Agency warns that data‑center electricity demand is climbing steeply, so efficient inference has quickly become both a margin issue and an environmental imperative.
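Of the techniques named above, unstructured magnitude pruning is the simplest to show: zero out the smallest‑magnitude weights until a target fraction (the sparsity) is reached. This is a minimal sketch on a flat list of weights; the function name is hypothetical, and real pipelines prune per layer and usually fine‑tune afterward to recover accuracy.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero the smallest-magnitude fraction of weights (illustrative sketch)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Rank indices by absolute weight and mark the n_prune smallest for removal.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
    print(magnitude_prune(w, 0.5))
```

Zeros only reduce cost when the runtime exploits them, for example through sparse kernels or structured sparsity patterns the hardware supports; otherwise the pruned model runs at the same speed as the dense one.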

Governance is professionalizing in parallel. The NIST AI Risk Management Framework and ISO/IEC 42001 are increasingly cited in RFPs, and the EU AI Act is pushing technology providers to document data provenance, assess systemic risks, and label synthetic media. Enterprises are standing up red‑teaming programs, safety checklists, and incident‑response playbooks, and AI is starting to look and feel like other mature software disciplines.

The Human-Centered Playbook for Real-World AI

This era also keeps humans in the loop. The hot jobs aren’t sci‑fi overlords; they’re AI product managers, data stewards, evaluators, and safety analysts who wire models into real processes with measurable KPIs. In customer support, finance, and operations, realistic goals now look more like 10–30% reductions in cycle time, higher first‑contact resolution rates, and modest lifts in conversion, not “replace the team.”

The upshot is straightforward. Winners will deliver dependable, observable systems that plug into the tools people already use, build in data protection by design, and prove their value on dashboards. That is what progress looks like after the hype: smaller where it matters, smarter where it counts, and closely matched to how work actually gets done.