OpenAI is warning that a fundamental flaw in agentic browsing will persist.

Even as it ships new protections for its Atlas AI browser, the company says rapid injection attacks—malicious instructions hidden in web pages, documents, or emails that take over an AI agent’s behavior—are likely to only be reduced to a lasting risk rather than a bug that can be fully eradicated.

- What Rapid Injection Looks Like in AI Browsers

- OpenAI’s defense strategy to secure Atlas agent browsing

- Why the risk of prompt injection persists in AI browsers

- Expert opinions and practical steps to reduce AI browsing risk

- The competitive context among AI browser security efforts

- Bottom line: safer agentic browsing, but never foolproof

The admission highlights a larger industry truth: Once an AI system is given autonomy to interpret and act on arbitrary content from the open internet, adversaries can work to inject commands into that content. The question goes from “Can we prevent it altogether?” to “Are we able to minimize impact and catch it quickly?”

What Rapid Injection Looks Like in AI Browsers

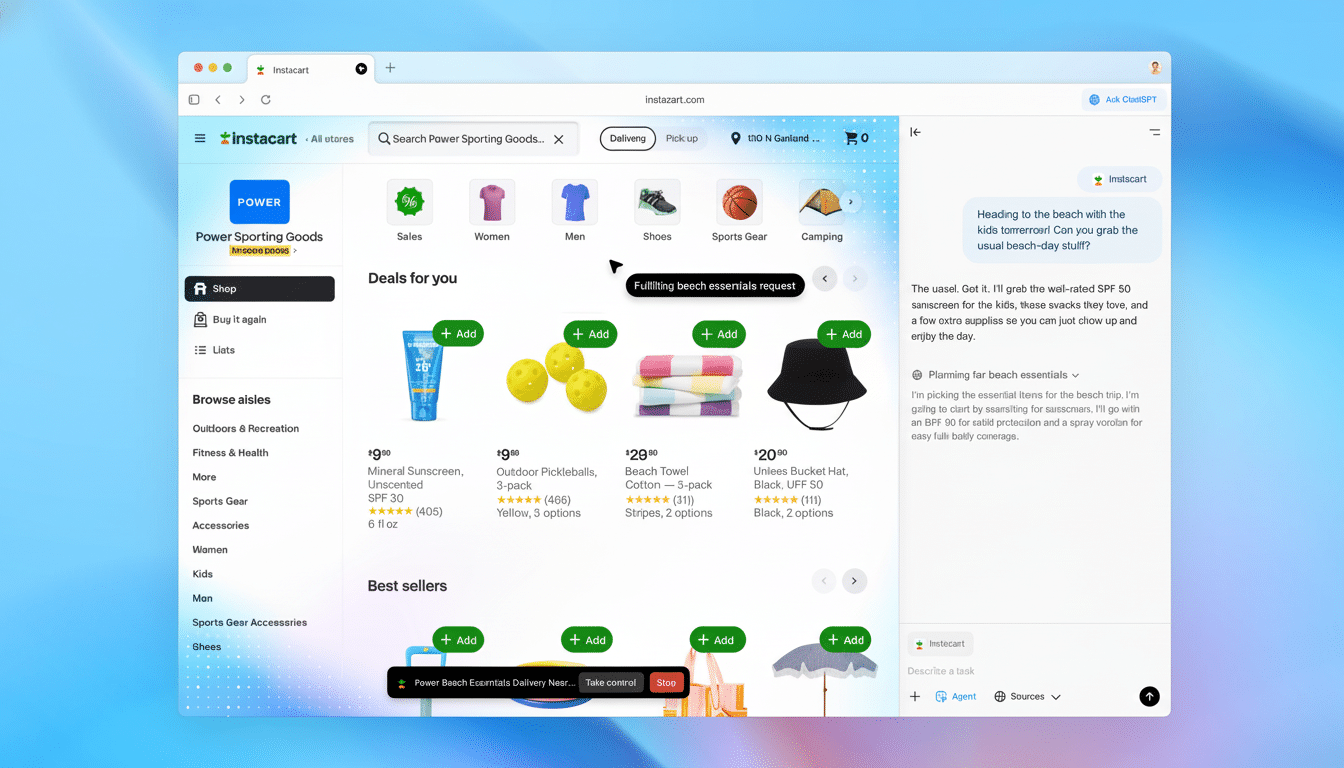

Accelerated injection fuses the face-to-face gambits of social engineering with machine-readable ruses. There can be a seemingly benign spreadsheet or shared doc that has programmed instructions to tell an agent to forward emails, rewrite settings, or exfiltrate data. Early experiments with agentic browsers — including demos taking aim at OpenAI’s Atlas and competing products — have demonstrated how a few words in a shared workspace can push an AI off track, without the user even realizing what occurred.

Security teams have long feared this would be systemic, not product-specific. Indirect prompt injection was recently identified as a class-wide issue impacting AI-driven browsing tools by a leading provider of privacy-focused browsers. And the U.K.’s National Cyber Security Centre warned that such attacks “can’t entirely be mitigated,” and instead advised organizations to focus on containment and monitoring rather than silver-bullet fixes.

OpenAI’s defense strategy to secure Atlas agent browsing

Atlas relies on what OpenAI calls “agent mode,” which is designed to increase attack surface: It gives the model the power to read, reason, and act across tabs, messages, and apps. To combat that, the company is piling on defenses and accelerating the speed at which it sends out patches. A key part of the approach is an LLM-powered “automated attacker,” which has been trained with reinforcement learning to behave like a hacker—one that pokes at the agent in simulation and iterates until it identifies failure modes.

Since the simulator gets to see those internal reasoning traces, OpenAI is able to catch long-horizon exploits (ones that unfold over dozens of steps) which real red teams might overlook; the company says this approach has already turned up entirely new attack chains as well as improved Atlas’s ability to spot and flag malicious prompts, like undercover instructions in an email that would lead it to execute unintended behavior.

OpenAI has additional suggestions when it comes to user-side constraints. These are similar to the least-privilege design pattern popular in cloud security and API governance:

- Restrict logged-in sessions.

- Keep scopes minimal.

- Require explicit confirmations for at-risk actions, such as sending responses or initiating payments.

- Give concrete instructions rather than broad leeway.

Why the risk of prompt injection persists in AI browsers

At the heart of the problem is a pair of troubling tendencies: large language models like GPT-3 process natural language as both data and instructions. When an agent reads untrusted material, in effect it is parsing possible directives—and adversaries use that lack of clarity. The web complicates this; content is dynamic, adversaries can chain pages together, and honest sites may host instructions written by users that the owner didn’t intend.

Business organizations have been codifying such risks. Prompt injection and its closely related cousin, indirect injection, are menacing categories for the OWASP Top 10 for LLM Applications. Cloud and AI providers, like Anthropic and Google, advocate defense-in-depth measures, including:

- Sandboxed use of tools

- Strong output filters

- Policy checks

- Capability scoping

- Continual red-teaming

None offers a guarantee of 0% exposure; the goal is resilience — detect, contain, and recover.

Expert opinions and practical steps to reduce AI browsing risk

“There is a trade-off in the infrastructure, according to security researchers: agentic browsers have modest autonomy but can access high-value resources such as email, calendars, and tokens of identity. As one of the lead researchers at Wiz noted, not allowing logged-in access narrows the blast radius, and human-in-the-loop approval caps autonomy during sensitive times. In practice, that translates into more interstitial prompts, tighter defaults, and narrower task scopes.”

AI browsing teams should operate under the assumption of breach. Effective controls include:

- Domain allowlists

- Read-only modes on untrusted sites to compartmentalize changes

- Content sanitization at rendering time

- Behavior anomaly detection

- Separate profiles or containers for sensitive workflows

Other provenance cues—model-visible metadata based on source trust, for example—may also nudge agents into ignoring dubious instructions.

The competitive context among AI browser security efforts

OpenAI’s reinforcement-learning attacker is unique, but competitors are on similar tracks. Defensive measures have been focused on architectural guardrails and policy engines for agent actions at Google, while others are adding scale to internal and external red-team programs. The emerging consensus: rapid iteration, diverse adversarial testing, and transparent guidance to users about risk will be more important than any single defensive trick.

Bottom line: safer agentic browsing, but never foolproof

OpenAI’s message is unsentimental but clear: Agentic browsing can be made safer, not foolproof. In the meantime, improvements will come in the form of faster detections, smaller radii of detonation, and fewer successful exploit paths — not a scorched-earth avoidance strategy. Those who take on that mindset as users and builders will be better suited for the long game.