Not sure which is the layers menu and which is the lasso tool? Adobe’s latest AI feature is here to change that. The company is launching Prompt to Edit, a conversational image editing feature built on Firefly Image Model 5 that lets anyone correct their photos with plain-language requests, no Photoshop know-how required.

Type “remove the fence,” “brighten the sky” or “swap for a beach at sunset” and the system makes the selections, builds the masks and fills in new skies, beaches or anything else you ask for. It may be the surest sign yet that mainstream photo editing is migrating away from manual toolwork and toward intent-based, AI-driven commands.

What the Prompt to Edit Feature Actually Does

Prompt to Edit understands natural language and performs sophisticated edits on existing photos, such as removing objects, color grading, replacing backgrounds or making content-aware extensions. In a live demo, for instance, a photo of a dog shot through the bars of a fence was cleaned up by asking the tool to remove the bars; it filled in realistic detail where they had been.

The feature builds on Adobe’s AI-assisted tech by combining several pro-grade steps (selection, masking, generative fill and blending) into a single command. For non-experts, that condenses a multi-tool workflow into seconds.

Inside the Firefly Image Model 5 and Its Upgrades

Prompt to Edit runs on Adobe’s Firefly Image Model 5, which the company describes as its most advanced generation and editing model yet. Images are now rendered at a native 4 MP resolution, roughly double the pixel count of 1080p (about 2.07 MP), for sharper lines, finer texture and more convincing detail in hard-to-render subjects like hands, faces and fabrics.
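The “roughly double” comparison can be checked with a few lines of arithmetic. This is a minimal sketch that assumes “4 MP” means a nominal 4,000,000 pixels; the exact output dimensions of Firefly Image Model 5 are not stated here, so that constant is illustrative only.

```python
# Pixel-count comparison: a nominal 4 MP image versus a 1080p frame.
FULL_HD_PIXELS = 1920 * 1080      # a 1080p frame is 1920 x 1080 pixels
NOMINAL_4MP_PIXELS = 4_000_000    # assumption: "4 MP" taken as exactly 4 million pixels


def megapixels(pixel_count: int) -> float:
    """Convert a raw pixel count to megapixels (millions of pixels)."""
    return pixel_count / 1_000_000


ratio = NOMINAL_4MP_PIXELS / FULL_HD_PIXELS

print(f"1080p: {megapixels(FULL_HD_PIXELS):.2f} MP")
print(f"4 MP is {ratio:.2f}x the pixel count of 1080p")
```

Under that assumption, 1080p works out to about 2.07 MP and the ratio to about 1.93, which is consistent with the “roughly double” description.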

The model, Adobe says, produces more “photorealistic” results and handles composition-aware edits more consistently, which matters for edits that must preserve accurate perspective, lighting and shadows. The company has also emphasized that Firefly’s training data draws on licensed and public domain sources, and it supports Content Credentials, the labeling system for AI-assisted edits built on the open standard from the Coalition for Content Provenance and Authenticity (C2PA).

Layered Image Editing Coming Soon to Firefly Users

Adobe also previewed Layered Image Editing, a sister feature that preserves the scene structure while you tweak individual elements. Imagine a table with some objects on it: you can pick out one item, scale it up or down, move it and change its color or material with a prompt, all while shadows and occlusions stay intact. The company said this feature is still in progress.

If it ships as demonstrated, it could transform and speed up compositing work that usually relies on masks, smart objects and adjustment stacks.

How It Compares to Other AI-Powered Editing Tools

Prompt-based editing isn’t entirely novel: Google’s Magic Editor and Magic Eraser in Photos, Canva’s Magic Edit, and infilling tools in models from OpenAI and others provide similar “fix what I did” experiences. What Adobe has going for it, though, is conversational control, composition awareness and integration with the larger Firefly and Creative Cloud ecosystem that many photographers and designers already use.

Resolution and printability are another practical differentiator. At 4 MP, Firefly’s outputs comfortably cover many common use cases such as social media sharing, web usage and casual printing (reference point: 5” x 7” at 300 ppi is about 3.15 MP). For desktop publishing or editorial workflows, pros will still be watching for higher-resolution options and for how edits round-trip into tools like Photoshop and Lightroom.
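The print-size reference point comes from multiplying each dimension in inches by the print density. The sketch below shows that calculation and its inverse; the function names are illustrative, and the 4,000,000-pixel budget is an assumed nominal value for “4 MP” rather than a published output size.

```python
def print_megapixels(width_in: float, height_in: float, ppi: int = 300) -> float:
    """Megapixels needed to print width_in x height_in inches at the given ppi."""
    return (width_in * ppi) * (height_in * ppi) / 1_000_000


def max_print_inches(pixel_count: int, aspect: float, ppi: int = 300) -> tuple[float, float]:
    """Largest (short side, long side) print in inches for a pixel budget.

    aspect is long side / short side, e.g. 7 / 5 for a 5x7 print.
    """
    short_px = (pixel_count / aspect) ** 0.5
    return short_px / ppi, short_px * aspect / ppi


needed = print_megapixels(5, 7)
# Assumption: "4 MP" treated as a nominal 4,000,000 pixels.
short_in, long_in = max_print_inches(4_000_000, aspect=7 / 5)

print(f"5x7 at 300 ppi needs {needed:.2f} MP")
print(f"4 MP at 300 ppi prints up to about {short_in:.1f} x {long_in:.1f} in")
```

A 5 x 7 print at 300 ppi needs 1500 x 2100 pixels, or 3.15 MP, so a 4 MP image has some headroom at that size, while anything much larger would fall below 300 ppi.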

Availability and Ecosystem for Adobe’s Prompt to Edit

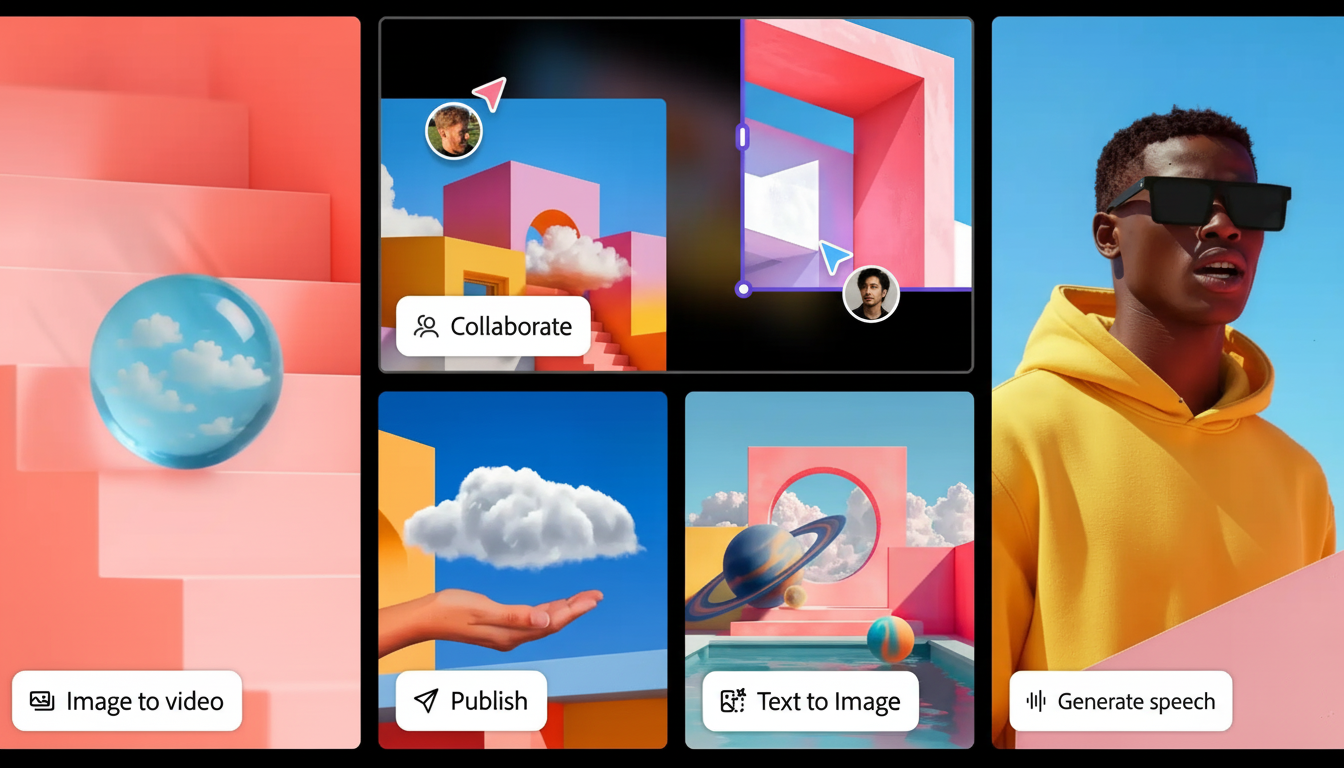

Prompt to Edit is rolling out to Firefly customers starting today, with support for Firefly Image Model 5 and partner models from Black Forest Labs, Google and OpenAI. We anticipate Firefly Image Model 5 will enter beta soon, and Adobe says more editing depth and integrations are in the works.

And importantly, this is not some niche research demo. By placing the feature in the middle of Firefly’s production environment, alongside third-party partner models, Adobe is drawing a line in the proverbial sand: text-driven editing is table stakes in modern creative pipelines.

Why It Matters for Photographers and Creatives Alike

For everyday photographers, Prompt to Edit means the ability to fix common issues, such as distracting objects, lifeless skies and awkward crops, without taking a crash course in pro software. For working creatives, it takes over rote editing and speeds up ideation, leaving manual finesse for the remaining 10%, where craft still triumphs.

As generative tools mature, the true differentiator is no longer whether AI can create a picture but whether it can understand a user’s intent and manipulate an existing image accurately, quickly and with provenance.

Adobe’s new tool pushes that idea further, turning edits into a dialogue and making sophisticated results possible for anyone who can type a sentence.