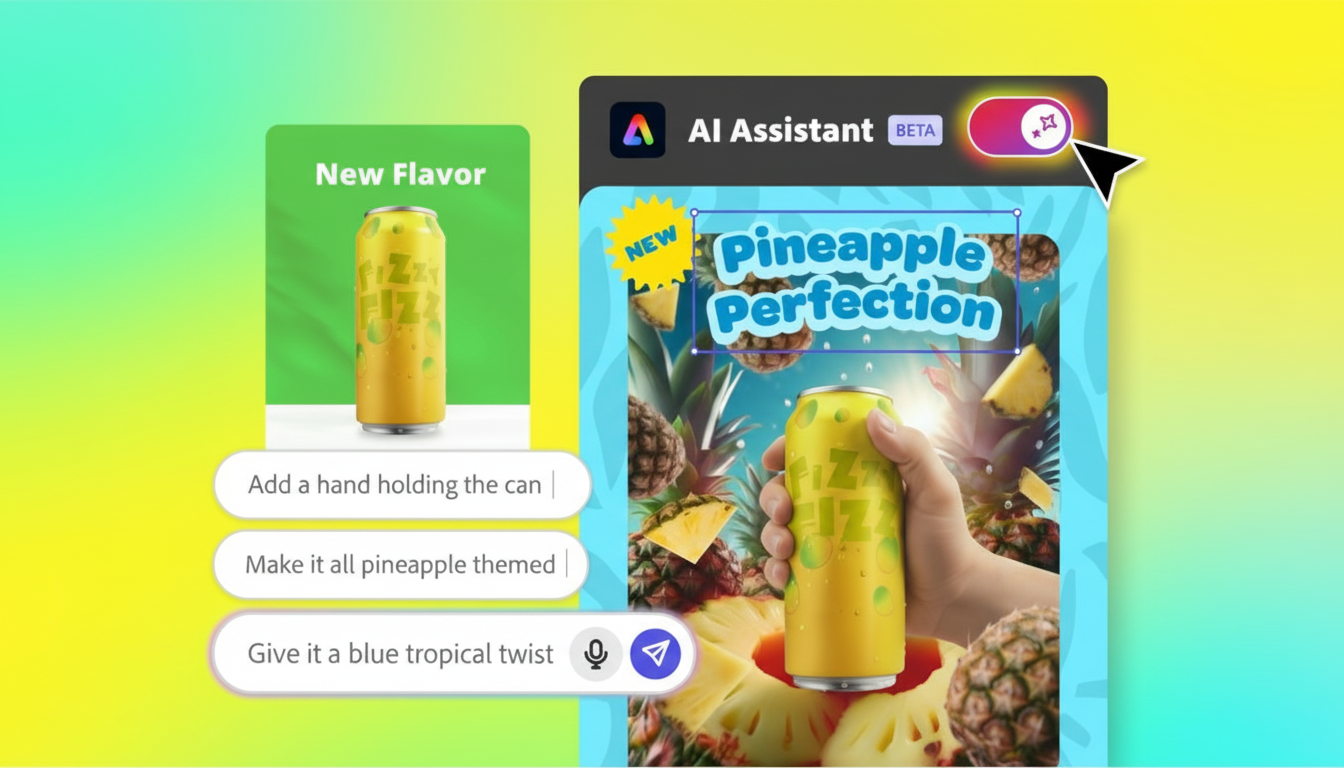

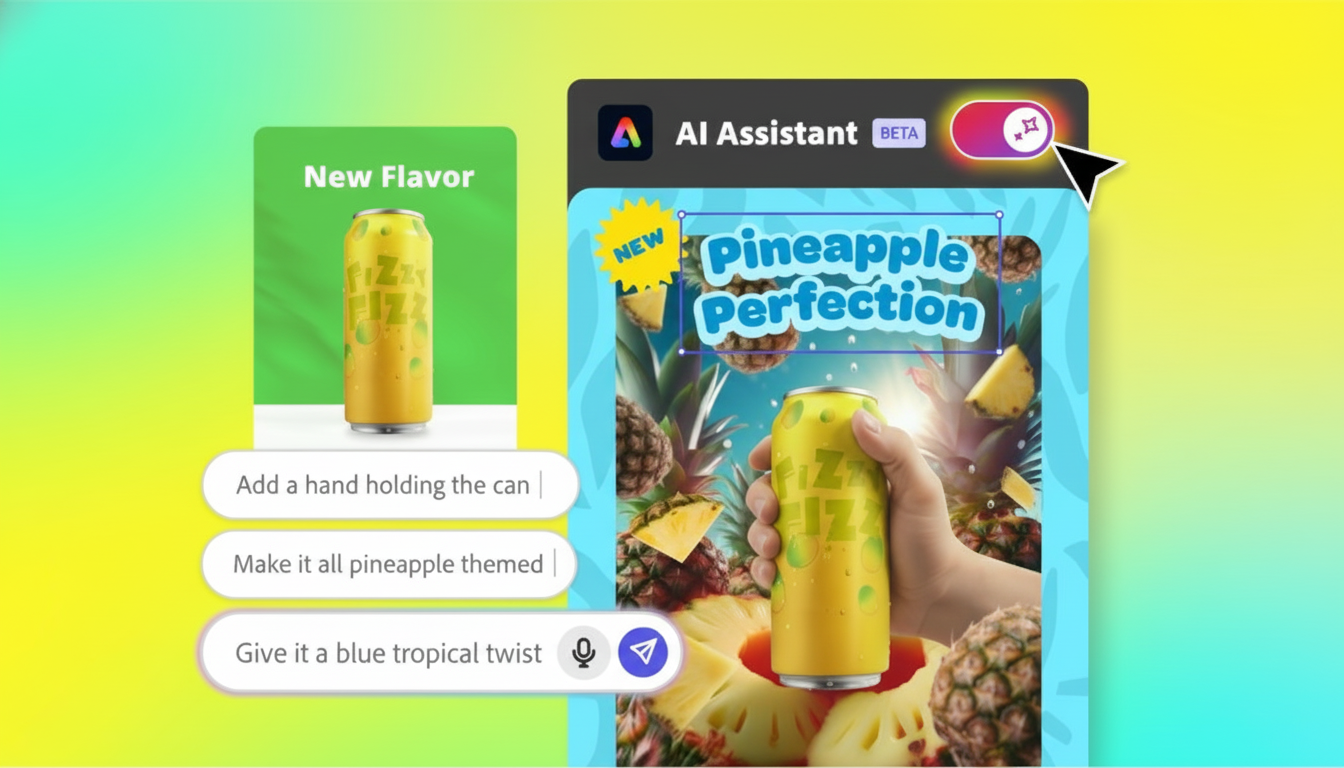

Adobe introduced new AI assistants for Express and Photoshop, bringing its creative suite further into intent-driven workflows. Express includes a generative prompt mode that flips back to manual controls, and a closed-beta Photoshop assistant sits inside the sidebar; it understands layers, selections, and masks to speed up day-to-day edits.

What the new assistants do in Adobe Express and Photoshop

In Express, the assistant introduces a creation-first mode: type an English-language prompt to generate images, layouts, or social-ready assets, then flip back to manual editing to adjust typography, colors, and brand kits. Switching between the two is meant to combine generative AI’s speed with the level of detail that creative teams expect from Express.

Photoshop’s assistant takes a very different form. Built into the sidebar and currently in closed beta, it is context-sensitive: it reads layers, recognizes selections, and helps create non-destructive masks. Ask it to remove a background, isolate a subject, or change the color range of a product across layers, and it will work through the steps, often in seconds, while keeping the result editable.

Adobe frames the split approach (prompt when you want speed, manual control when you want craft) as a middle ground that keeps AI both accessible and controllable. The difference from a generic chat sidebar is subtle but important, and it mirrors the way power users already work in complex, layer-based designs.

Project Moonlight and early ChatGPT integration experiments

Adobe is also piloting Project Moonlight, an early-stage assistant designed to manage tasks across tools. The idea: a meta-assistant that can speak to other Adobe assistants and that can learn how a creator works by connecting to opt-in social channels. The aim is continuity — a consistent tone and look across campaigns — without requiring creators to recreate preferences in every app.

Meanwhile, Adobe is looking into a link between Express and ChatGPT through OpenAI’s app integrations API. That would enable users to create design drafts within a chat interface and open them in Express for finishing. It reflects a broader trend toward cross-app creation, where work begins in conversation and then flows into production tools without needing to be handed off manually.

Model choice options and new video workflows in Adobe apps

In addition to the assistants, Photoshop offers model choice for Generative Fill, the feature that lets you remove objects or extend scenes.

Users can choose between Adobe’s own Firefly models and third-party ones such as Google’s Gemini 2.5 Flash or Black Forest Labs’ FLUX.1 Kontext. Matching the model to the job, whether the priority is speed, photorealism, or fidelity to context, can make a critical difference, especially in high-volume production.

Video teams get help, too. Premiere Pro is introducing an AI-powered object mask tool that speeds up identifying people or objects. It is especially handy for ad versioning, product highlight reels, or multicam material, where the overall grade should stay the same but specific shots need a few targeted tweaks.

Trust, transparency, and content provenance still count

Adobe also continues to rely on Content Credentials, an effort from the C2PA, the standards coalition Adobe co-founded, to describe how media was made or edited. These metadata “nutrition labels” are added at export and can travel with files across platforms. Camera manufacturers such as Leica and Nikon have started to support credentials in hardware, and broader uptake is emerging as creative teams scale generative output while maintaining an audit trail.

The company also emphasizes that Firefly was trained on licensed content, such as Adobe Stock, and on public-domain content. For agencies and brands that require model transparency, these assurances (indemnities, provenance, and training disclosures) are becoming as crucial as raw features.

Why these updates matter for creators and production teams

The assistants focus on the high-friction parts of design: selecting objects, masking and removing backgrounds, applying the same color change across a set of objects, and quick ideation. A social team might prompt Express for a series of on-brand variations, then dive into more precise layout edits. A retoucher could ask Photoshop to select a product across grouped layers, then mask it or prepare it for colorways, without the labor-intensive lassoing by hand.

Crucially, this isn’t AI slapped onto the side of a canvas. By interpreting layer structure and returning editable results, Adobe is ensuring that automation can coexist with existing workflows rather than supplant them. That’s a competitive edge over all-in-one design suites that prioritize speed at the expense of control.

Availability varies: the Express assistant is rolling out with a new AI mode, the Photoshop assistant is in closed beta, and Project Moonlight remains private. With expanded model choice and provenance tagging built in, Adobe’s update signals a clear direction: assistants that speed the work along without taking it away from the creator.