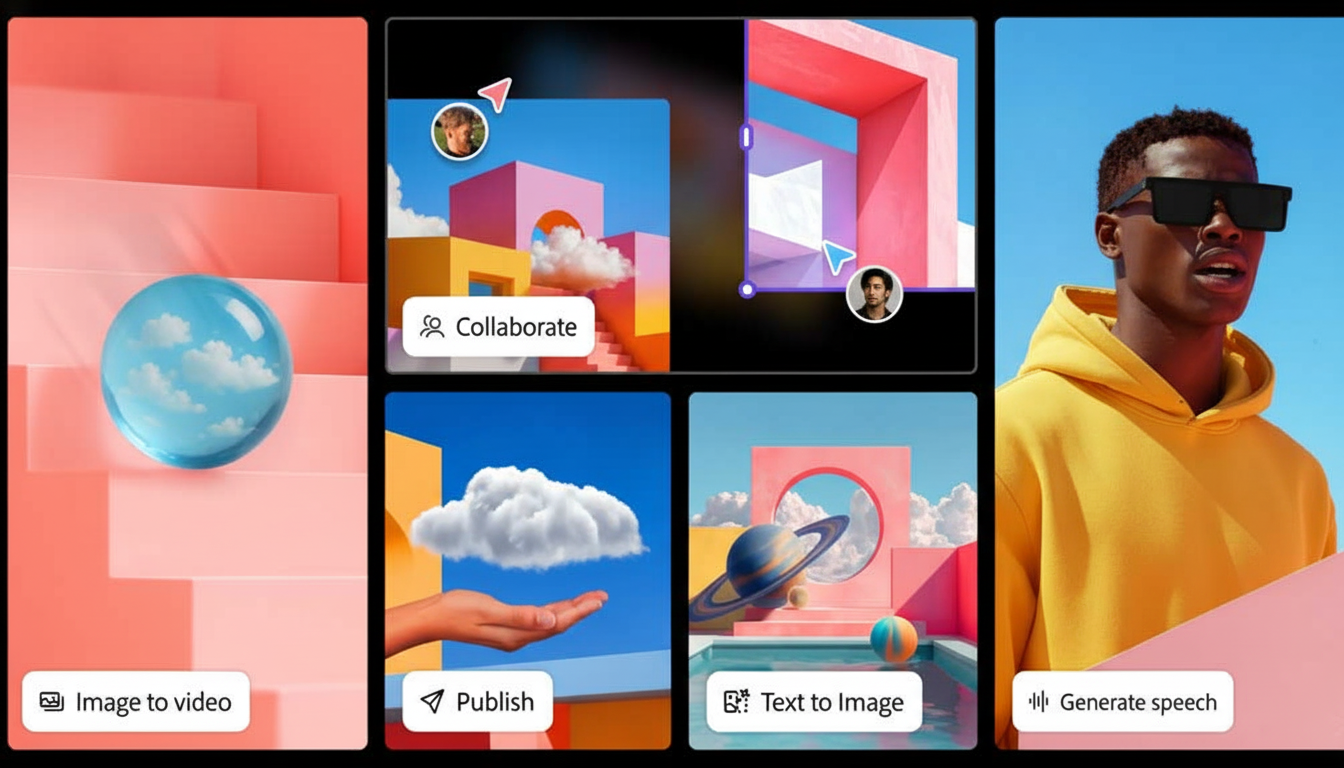

Feeling intimidated the first time you open a photo editor? Adobe wants to make that experience a thing of the past. Prompt to Edit, a new feature closely tied to the newly released Firefly Image Model 5, lets anyone type a request, such as “remove the fence,” “brighten the sky,” or “add soft studio light,” and see the result moments later.

It takes direct aim at the friction that keeps casual shooters and busy professionals from polished results: fiddly tools, countless steps, and a steep learning curve.

With this release, Adobe is betting that conversational editing can make high-end retouching as simple as having a chat.

What Adobe Announced at Its Creativity Conference

At its annual creativity conference, Adobe unveiled Firefly Image Model 5, its most advanced image generation and editing model to date, and launched Prompt to Edit for Firefly customers. The model generates images natively at 4MP, roughly twice the pixel count of 1080p, which yields finer textures, more realistic skin tones, and fewer jagged edges.
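As a rough check on the “2X” comparison, assuming “4MP” means about four million pixels and 1080p means a standard 1920 × 1080 frame (Adobe’s exact output dimensions are not stated here):

$$
\frac{4{,}000{,}000}{1920 \times 1080} = \frac{4{,}000{,}000}{2{,}073{,}600} \approx 1.93
$$

So “twice the pixels” is a fair rounding rather than an exact ratio; the precise figure depends on the dimensions and aspect ratio Firefly actually outputs.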

Adobe says the model improves in historically difficult areas, such as anatomically accurate portraits, natural motion, and complex multi-object scenes, with the goal of more photorealistic results.

Firefly Image Model 5 is in beta. Prompt to Edit is live and also supports partner models from Black Forest Labs, Google, and OpenAI.

How Prompt to Edit Works for Everyday Photo Tasks

Instead of hunting for the right brush or mask, users type a plain-language instruction. In demonstrations, asking it to “remove the fence” on photos of dogs behind chain-link fences produced clean results, with the AI filling in the background where the fence had been. The tool handled occlusion, texture, and lighting continuity in a single pass, work that would usually demand multiple selections and healing steps in professional software.

Prompt to Edit handles common editing tasks, such as removing objects, changing backgrounds, relighting, color grading, and stylizing, by parsing the intent of the request and choosing the appropriate operations automatically. That makes it a good fit for quick product shots, travel images, marketplace listings, social posts, and event photos, where speed matters as much as polish.

Behind the scenes, the system combines inpainting, segmentation, and context-aware synthesis, but the interface hides all that complexity. The point is not to invent new pictures but to respect the original, making precise, surgical changes only where you ask for them.

Layered Editing and Notable Resolution Improvements

Beyond Prompt to Edit, Adobe also previewed Layered Image Editing, which brings object-level structure to compositing: elements can be repositioned, resized, and restyled while the scene stays visually coherent. In a typical workflow, that would mean painstaking layer creation, masking, and transforms. Here it is drag, drop, and prompt. Adobe says the feature is still in development.

The move to native 4MP output makes a practical difference. More pixels mean crisper hair, fabric detail, and typography, and fewer of the telltale AI artifacts that show up when you zoom in or print. It also lets editors crop more aggressively without losing clarity.

Safety, Provenance, and Training Data

According to Adobe, it has been embedding Content Credentials, developed alongside the Coalition for Content Provenance and Authenticity (C2PA), so that AI-edited images carry tamper-evident metadata documenting what was changed. That matters more as generative edits become indistinguishable to the eye, particularly in news, education, and e-commerce.

Adobe has emphasized that Firefly models are trained on licensed content, including Adobe Stock and public-domain material, to ease rights concerns around commercial use. That stance has been a selling point for brands wary of competitors’ models trained on opaque datasets.

Availability and the Wider Competitive Context

Prompt to Edit is available now to Firefly customers. It runs on Firefly Image Model 5 (in beta), with optional support for partner models. Usage typically draws on Adobe’s generative credits, which are tied to Creative Cloud and Express plans.

Adobe is not the only company pursuing natural-language editing. Google’s Ask Photos, Samsung’s Galaxy AI Generative Edit, Microsoft’s Designer, and Canva’s Magic Edit all make similar “type it and fix it” promises. Adobe’s edge lies in its tight integration with professional workflows and its focus on continuity: preserving layers, lighting, and composition rather than pasting AI patches over the image. Gartner predicts that by 2026, more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications, which suggests that frictionless, trustworthy editing will soon be table stakes across creative tools.

Why These AI Editing Advances Matter to Creators

For casual creators, this lowers the barrier to professional-looking results; for pros, it offloads drudgery so their time shifts from mechanics to creative decisions. According to Adobe, Firefly tools have powered billions of generations since launch, a sign that people want speed, provided they do not have to give up control. Prompt to Edit builds on that momentum by turning high-quality photo fixes into something you can simply ask for.