Box is leaning hard into agentic AI, and CEO Aaron Levie has a clean thesis for why: the next wave of productivity will be unlocked not by simply scaling up models, but by models steeped in the right context.

The actual frontier, in his mind, is hooking AI up to the sprawling mess of enterprise content (contracts, slides, PDFs and design assets) and doing it with precision, governance and repeatability.

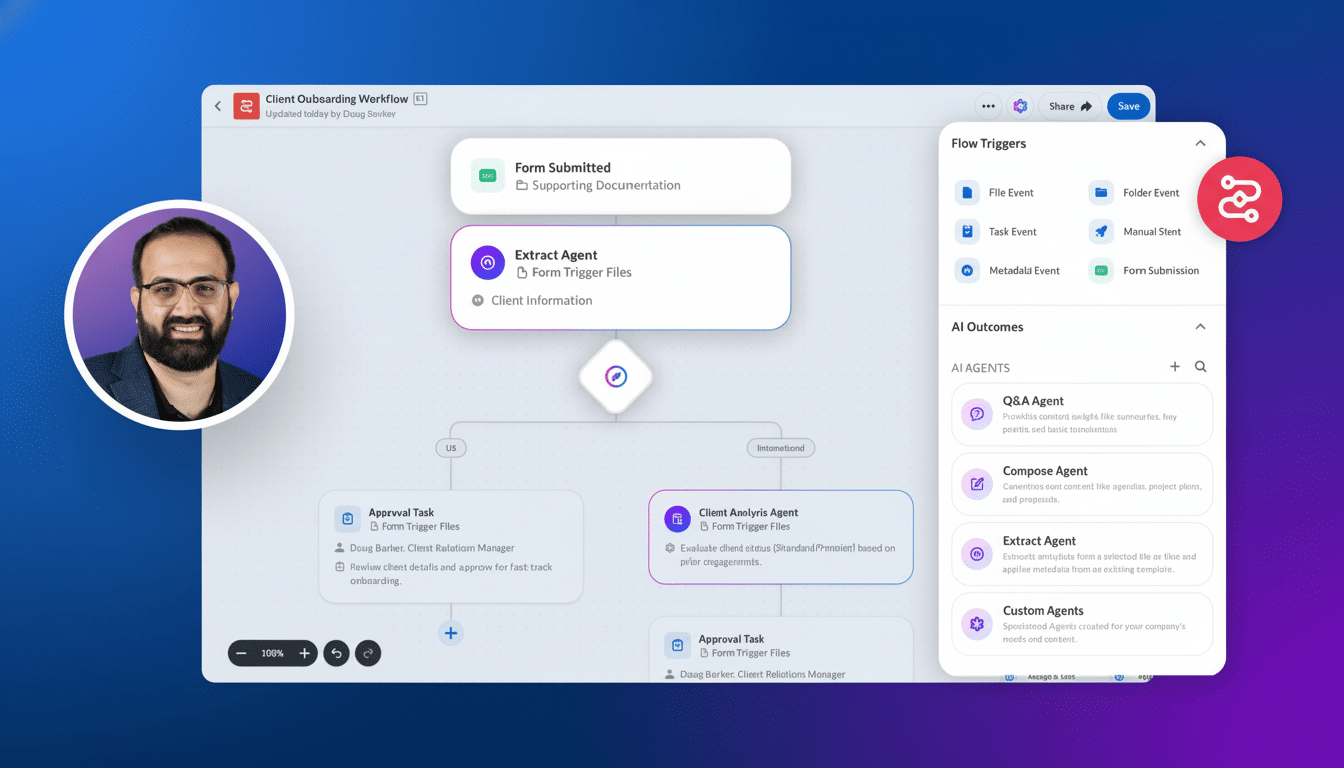

Enter Box Automate, an orchestration system that runs AI agents across business processes by decomposing complex work into bounded tasks.

It is a practical answer to a harsh reality Levie is fond of repeating: there is no such thing as a free lunch in AI. Compute is finite, context windows have limits, and companies can’t afford agents that go rogue.

Why context is overtaking raw scale

Until now, most corporate automation has lived in structured-data systems such as CRM, ERP and HRIS. The larger prize now is unstructured content. IDC has estimated that 90% of enterprise data is unstructured, which is why legal reviews, marketing-asset approvals, and M&A diligence still require human judgment and manual handoffs.

Levie’s bet is that agents become useful when you feed them exact, permissioned context extracted from files, metadata and activity histories. Box Automate breaks workflows into stages (intake, classification, review, redline, approval) so each agent receives just the context it requires to perform its bounded job. That keeps prompts lean, lowers the risk of model drift, and cuts down on wasted tokens.
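As a rough illustration of the bounded-context idea, the Python sketch below gives each stage an explicit list of the context keys it may read. It is not Box Automate's API, which the article does not describe: `Stage`, `run_pipeline`, the toy stage functions and the context keys are all hypothetical, and only the stage names come from the paragraph above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    needs: list[str]             # context keys this stage is allowed to read
    run: Callable[[dict], dict]  # returns new keys to merge into shared state


def run_pipeline(stages: list[Stage], state: dict) -> dict:
    """Run stages in order, handing each one only the keys it declared."""
    state = dict(state)
    for stage in stages:
        missing = [k for k in stage.needs if k not in state]
        if missing:
            raise KeyError(f"{stage.name} is missing context: {missing}")
        scoped = {k: state[k] for k in stage.needs}  # bounded context
        state.update(stage.run(scoped))
    return state


# Toy stand-ins for real agents (assumptions for illustration only).
def intake(ctx: dict) -> dict:
    return {"text": ctx["raw_file"].strip()}


def classify(ctx: dict) -> dict:
    is_nda = "non-disclosure" in ctx["text"].lower()
    return {"doc_type": "nda" if is_nda else "other"}


def review(ctx: dict) -> dict:
    return {"needs_redline": ctx["doc_type"] == "nda"}


STAGES = [
    Stage("intake", ["raw_file"], intake),
    Stage("classification", ["text"], classify),
    Stage("review", ["doc_type"], review),
]

result = run_pipeline(
    STAGES,
    {"raw_file": "  Mutual Non-Disclosure Agreement  ", "author": "someone"},
)
```

Here the review stage never sees the raw file or the author field, only the `doc_type` it asked for, which is the property the staged design is after.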

Even the most sophisticated systems can hit a wall on sprawling tasks. Long-horizon agents exhaust their context windows, “leak” memory in various ways, or accumulate small distractions that compound into mistakes. Distributing work across sub-agents with explicit hand-offs, plus retrieval steps that refetch relevant content, can alleviate those failure modes without waiting for the next bump in model size.

Guardrails, determinism and trust

Companies crave repeatability as much as intelligence. Levie frames the design decision as choosing where to be deterministic and where to permit agentic flexibility. Deterministic guardrails define who can trigger an agent, which repositories it can access, what confidence thresholds apply to extraction or summarization, and when a human must review.
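A deterministic guardrail of this kind can be expressed as ordinary code that sits in front of the agent. The sketch below is an assumption-laden illustration, not Box's implementation: the `Guardrail` fields, the role and repository names, and the 0.85 threshold are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    allowed_roles: frozenset   # who may trigger the agent
    allowed_repos: frozenset   # which repositories it may read
    min_confidence: float      # below this, a human must review


def gate(policy: Guardrail, role: str, repo: str, confidence: float) -> str:
    """Return a deterministic decision for one agent result."""
    if role not in policy.allowed_roles:
        return "deny: role may not trigger this agent"
    if repo not in policy.allowed_repos:
        return "deny: repository out of scope"
    if confidence < policy.min_confidence:
        return "human_review"
    return "auto_approve"


# Hypothetical policy for a contract-extraction agent.
POLICY = Guardrail(
    allowed_roles=frozenset({"legal_ops"}),
    allowed_repos=frozenset({"contracts"}),
    min_confidence=0.85,
)
```

The model's output only affects the `confidence` input; every other branch is fixed policy, so the same inputs always produce the same decision.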

This is in line with broader AI risk guidance. Frameworks and market guidance from NIST and Gartner call for policy enforcement, monitoring, and human-in-the-loop review at high-impact steps. In Box’s case, you could separate a “submission agent” from a “review agent,” introduce acceptance tests at every gate, and log every prompt, response and source document for traceability.
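One way to picture an acceptance gate with an audit trail is the minimal sketch below. The required fields, the function names and the log layout are hypothetical choices for illustration; the article does not specify how Box records this information.

```python
import json
from datetime import datetime, timezone

# Hypothetical schema a submission agent must satisfy before hand-off.
REQUIRED_FIELDS = ("doc_type", "parties", "clauses")


def gate_failures(submission: dict) -> list[str]:
    """Return acceptance failures; an empty list means the hand-off may proceed."""
    return [
        f"missing or empty: {field}"
        for field in REQUIRED_FIELDS
        if not submission.get(field)
    ]


def audit_line(agent: str, prompt: str, response: str,
               source_ids: list[str], failures: list[str]) -> str:
    """Serialize one traceable record as a JSON line."""
    return json.dumps(
        {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "prompt": prompt,
            "response": response,
            "sources": sorted(source_ids),
            "gate_failures": failures,
        },
        sort_keys=True,
    )


submission = {"doc_type": "nda", "parties": ["Acme", "Globex"], "clauses": []}
failures = gate_failures(submission)
line = audit_line("submission_agent", "Extract clauses.", "{}",
                  ["doc-2", "doc-1"], failures)
```

Appending each `line` to an append-only log would give reviewers the prompt, response and source documents behind every gate decision.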

Security and permissions as the moat

Context without controls is a danger. The most common failure mode in early enterprise AI launches has been lax retrieval, in which agents respond with content the user should never have seen. Levie posits that Box’s 20 or so years of work on identity, permissions and compliance are now an AI asset, because least-privilege access is enforced at the content layer, not bolted on around the model.

Practically, that means retrieval operations and vector queries obey the same ACLs as the underlying files.
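To make the ACL point concrete, here is a small Python sketch of permission-filtered vector search, in which chunks the user cannot see are dropped before ranking. The `Chunk` structure, group names and two-dimensional vectors are invented for the example, and a production system would typically push the filter into the vector store rather than scan in memory.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    vector: tuple
    allowed_groups: frozenset  # ACL inherited from the source file


def cosine(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(index, query, user_groups, k=3):
    """Rank only the chunks whose ACL intersects the caller's groups."""
    visible = [c for c in index if c.allowed_groups & user_groups]
    ranked = sorted(visible, key=lambda c: cosine(c.vector, query), reverse=True)
    return [c.doc_id for c in ranked[:k]]


INDEX = [
    Chunk("public-faq", (1.0, 0.0), frozenset({"everyone"})),
    Chunk("ma-term-sheet", (0.9, 0.1), frozenset({"legal", "execs"})),
    Chunk("brand-guide", (0.0, 1.0), frozenset({"everyone", "marketing"})),
]
```

Because filtering happens before ranking, a highly relevant but restricted chunk such as the hypothetical `ma-term-sheet` never reaches the prompt of a user outside the permitted groups.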

It also means policy, retention, legal hold and DLP signals follow the content wherever agents operate. For heavily regulated industries, controls already mapped to standard frameworks such as ISO 27001, SOC 2 and HIPAA serve as the governance baseline the technology needs in order to expand safely.

“Neutral platform” in the model race

Model providers are moving up the stack with native file tools. Box’s counterpoint is to be the connective tissue: storage, security, permissions, embeddings and orchestration that can be plugged into multiple leading models. That allows customers to select a model based on the task (fast and cheap for high-volume extraction, more sophisticated for detailed analysis) and then switch as the cost-performance curves change.

This matters for CIOs because businesses are planning to increase AI spend, and a core application of AI is enhancing customer experiences and services, including monitoring processes to detect errors more quickly and creating feedback channels that inform new product designs, Rex says.

For CIOs, this is an issue because AI spend often expands as pilots move to production. Levie’s “no free lunch” is also an argument against single-model lock-in; routing across models, caching requests, and trimming context to what’s relevant are now table stakes for managing unit economics.
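The three cost levers named above (routing, caching and context trimming) can be sketched in a few lines of Python. Everything here is an assumption for illustration: the model names are placeholders, `call_model` is an injected stand-in for whatever provider client is in use, and keyword counting is a deliberately crude relevance measure.

```python
import hashlib

# Placeholder model tiers; real names depend on the providers in use.
ROUTES = {"extraction": "small-fast-model", "analysis": "large-reasoning-model"}
DEFAULT_MODEL = "small-fast-model"


def trim(passages, keywords, limit=2):
    """Keep the passages that mention the most keywords (crude relevance)."""
    scored = sorted(
        passages,
        key=lambda p: sum(k in p.lower() for k in keywords),
        reverse=True,
    )
    return scored[:limit]


class Router:
    def __init__(self, call_model):
        self.call_model = call_model  # function (model, prompt) -> str
        self.cache = {}
        self.calls = 0                # number of uncached model calls

    def run(self, task: str, prompt: str) -> str:
        model = ROUTES.get(task, DEFAULT_MODEL)
        key = hashlib.sha256(f"{model}\n{prompt}".encode()).hexdigest()
        if key not in self.cache:     # pay for the call only once
            self.calls += 1
            self.cache[key] = self.call_model(model, prompt)
        return self.cache[key]


# Fake model so the sketch runs without any provider SDK.
def fake_model(model: str, prompt: str) -> str:
    return f"{model}:{len(prompt)}"


router = Router(fake_model)
```

Swapping a tier then means editing one entry in `ROUTES`, which is the kind of flexibility a model-neutral layer is meant to provide.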

Where agents help today

Consider NDA intake. A submission agent identifies the document type, extracts entities and clauses, and checks them against playbooks. A reviewing agent suggests policy-aligned redlines. A final agent writes a summary and pushes status into the deal system. Each step runs independently, using only the contract library, templates and permissions specific to it, which reduces hallucination and cuts cycle time.

Marketing teams can use similar patterns: auto-tag the creative, check claims against approved messaging, and route exceptions to legal. In diligence, agents surface anomalies across data rooms and create source-linked briefs. The earliest enterprise pilots frequently report double-digit percentage time savings on drafting and research; McKinsey estimates generative AI has the potential to deliver trillions of dollars in annual value when deployed within these types of knowledge workflows.

The CIO playbook for the new era of context

Levie’s lesson is simple: the quality and controllability of your content is now a determinant of AI performance. Firms that normalize formats, clean up permissions, enrich metadata, and standardize taxonomies will get better agent outcomes than those that just plug a model into a dirty repository.

Track what matters (time to decision, exception rates, retrieval precision, cost per completed task) and tune workflows by dialing agent autonomy up or down where the results suggest it is needed. In this age of context, the advantage goes to the organizations that don’t treat content governance as overhead, but embrace it as the engine of AI effectiveness.
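The four metrics named above are simple to compute once each agent run is logged. The sketch below assumes a hypothetical `TaskRun` record; the field names and the choice of median for time to decision are illustrative, not taken from Box.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRun:
    seconds_to_decision: float
    cost_usd: float
    completed: bool
    escalated: bool   # routed to a human as an exception
    retrieved: int    # chunks retrieved for the task
    relevant: int     # of those, how many were judged relevant


def summarize(runs: list[TaskRun]) -> dict:
    if not runs:
        raise ValueError("no runs to summarize")
    done = [r for r in runs if r.completed]
    retrieved = sum(r.retrieved for r in runs)
    return {
        "median_seconds_to_decision":
            median(r.seconds_to_decision for r in done) if done else None,
        "exception_rate": sum(r.escalated for r in runs) / len(runs),
        "retrieval_precision":
            sum(r.relevant for r in runs) / retrieved if retrieved else None,
        # Spend on failed runs still counts against completed work.
        "cost_per_completed_task":
            sum(r.cost_usd for r in runs) / len(done) if done else None,
    }


RUNS = [
    TaskRun(60, 0.25, True, False, 4, 3),
    TaskRun(120, 0.50, True, True, 6, 3),
    TaskRun(300, 0.25, False, True, 10, 4),
]
stats = summarize(RUNS)
```

A team could compare these numbers before and after loosening or tightening a workflow's autonomy to see whether the change paid off.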