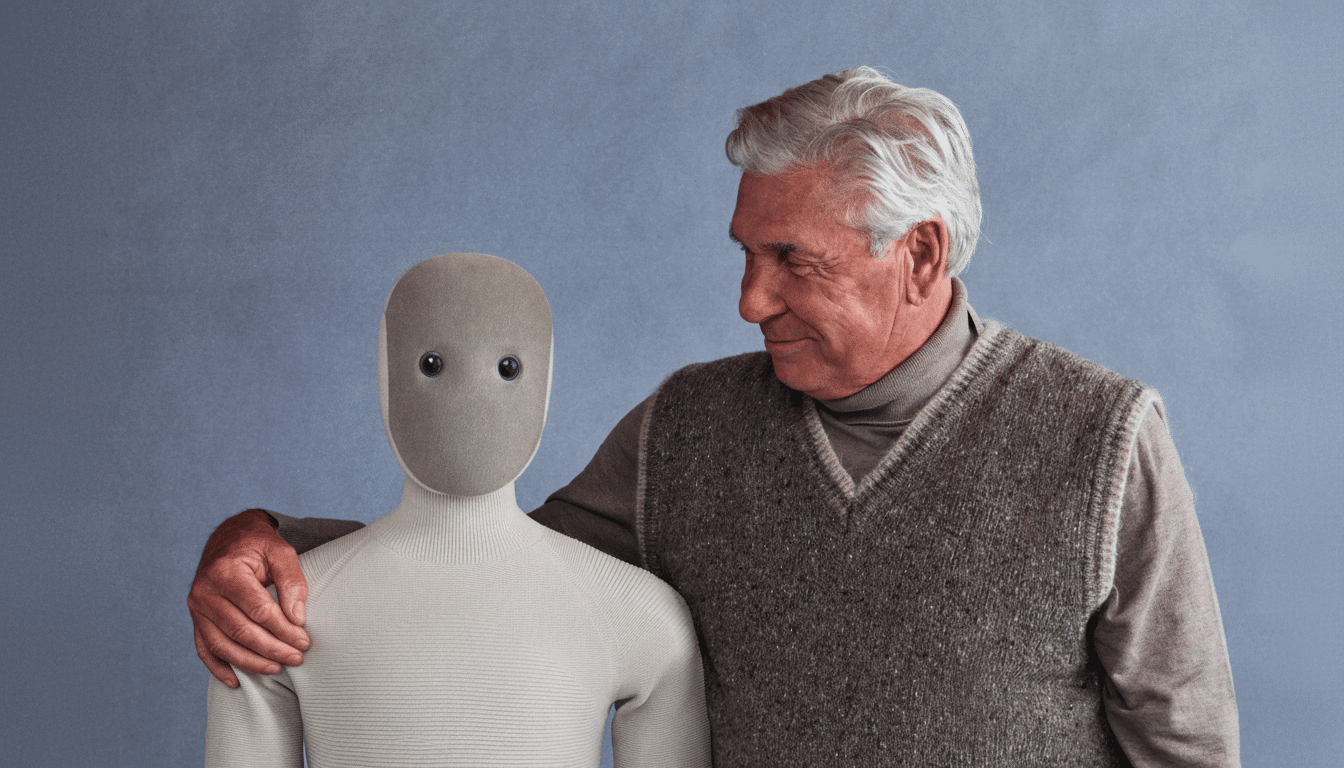

Humanoid robotics startup 1X has introduced a new “world model” designed to help its Neo robots understand cause and effect in the physical world and learn new skills directly from video. The company says the physics-aware system can map natural-language prompts and visual input to actions, giving Neo a path to acquire abilities it was not explicitly programmed to perform.

What a Physics-Based World Model Brings to Robots

World models are a fast-rising approach in robotics: instead of hard-coding rules, engineers train a model to predict how the environment changes when a robot acts. By learning dynamics from large volumes of video and demonstrations, the robot can mentally simulate outcomes and choose actions that are more likely to succeed. This mirrors progress seen in research like Dreamer from DeepMind and the original World Models work by Ha and Schmidhuber, which showed that learned dynamics can drive planning and control.

1X’s system blends video understanding with prompt-following, so a user can describe a goal and Neo predicts a sequence of motions that achieves it. Crucially, the company says the model is grounded in physics, which helps it avoid visually plausible but physically impossible moves, a common failure mode when pure vision-language models are used for control.

Beyond execution, the model provides a window into intent. By predicting how Neo expects the scene to evolve step by step, engineers can inspect planned behavior, identify failure points, and refine training data. That feedback loop—observe, plan, act, audit—is increasingly viewed as essential for safe deployment in homes and workplaces.

Early Skills and Realistic Limits of Neo’s Model

While the company frames the launch as a milestone toward general-purpose capability, it also acknowledges practical limits. The model enables Neo to attempt many tasks from a prompt, but current successes are clustered around simple, short-horizon household actions: removing an air fryer basket, placing toast in a toaster, or returning a high-five. Complex, multi-step procedures, like safe operation of vehicles or power tools, are outside the system’s present scope.

That progression is typical. Robots first master reliable grasping, tool alignment, and object placement before moving to longer chains of dependencies. The key metric to watch is not anecdotal demos but sustained success rates across varied homes and lighting conditions, and the time it takes the robot to acquire a new skill from minimal examples.
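The difference between anecdotal demos and sustained success rates can be made concrete with a small sketch. It assumes a hypothetical trial log of `(condition, succeeded)` pairs. The numbers below are invented for illustration and are not 1X data. The sketch reports each condition's success rate with a Wilson score interval, one common choice among several, and flags the worst condition.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% confidence interval for a success rate (Wilson score)."""
    if trials == 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p + z ** 2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def success_by_condition(
        trials: Iterable[Tuple[str, bool]]) -> Dict[str, Tuple[float, Tuple[float, float]]]:
    """Group trial outcomes by condition (e.g. home + lighting) and summarize each."""
    counts = defaultdict(lambda: [0, 0])  # condition -> [successes, attempts]
    for condition, succeeded in trials:
        counts[condition][0] += int(succeeded)
        counts[condition][1] += 1
    return {c: (s / n, wilson_interval(s, n)) for c, (s, n) in counts.items()}


# Hypothetical log: the same task attempted in two (made-up) home/lighting conditions.
trial_log = ([("home_a/daylight", True)] * 18 + [("home_a/daylight", False)] * 2
             + [("home_b/dim", True)] * 11 + [("home_b/dim", False)] * 9)
report = success_by_condition(trial_log)
worst = min(report, key=lambda c: report[c][0])
for cond, (rate, (low, high)) in sorted(report.items()):
    print(f"{cond}: {rate:.0%} (95% CI {low:.0%}-{high:.0%})")
print("worst condition:", worst)
```

Reporting the worst condition alongside an interval shows why a single successful demo says little: with only about 20 attempts per condition, the plausible range of true success rates is still wide.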

The Race to Generalist Robot Brains Accelerates

1X’s announcement lands amid a broader shift toward “foundation models” for robots. Google DeepMind has reported that its RT-2 vision-language-action model generalized better to unseen scenarios, raising success from 32% with the earlier RT-1 model to 62%. A multi-lab effort around RT-X (the Open X-Embodiment collaboration) demonstrated the benefits of training on pooled, diverse datasets spanning more than a million robot episodes. Covariant, NVIDIA’s Project GR00T, and academic groups at Stanford and the Toyota Research Institute are pushing similar ideas: leverage web-scale video and language to bootstrap manipulation policies.

The differentiator for 1X is embodiment. Neo is designed around humanlike reach, dexterity, and mobility, which expands the action space and the set of tools it can use. The difficulty is that humanoid morphology amplifies control complexity; success hinges on blending robust low-level control with the higher-level reasoning that world models promise.

From Preorders to Real-World Proof of Performance

1X opened preorders for Neo and says demand has exceeded internal expectations, but it has not disclosed a shipping timeline. For prospective buyers, the proof points will be transparent benchmarks in uncontrolled settings, the number of hours of video used for training, and how quickly the system adapts to new homes without laborious calibration.

Equally important are safety and accountability. Because the world model predicts intended behavior before execution, it can be paired with rule-based validators or learned safety critics to halt unsafe motions. This aligns with guidance from frameworks such as the NIST AI Risk Management Framework and standards efforts in service-robot safety, which emphasize pre-deployment testing, runtime monitoring, and post-incident traceability.
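A rule-based validator of this kind can be sketched as a gate that checks every step of the model's predicted rollout before any motor command is issued. The `PredictedState` fields, the rules, and their thresholds are all hypothetical. They are not taken from 1X, the NIST framework, or any robot-safety standard. A learned safety critic could be added to the same `rules` list as another function with the same signature.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class PredictedState:
    """One step of a world-model rollout (hypothetical fields)."""
    hand_speed_m_s: float
    nearest_person_m: float
    grip_force_n: float


Rule = Callable[[PredictedState], Optional[str]]  # returns a reason string if violated


def slow_near_people(s: PredictedState) -> Optional[str]:
    # Illustrative threshold only; not taken from any standard.
    if s.nearest_person_m < 0.5 and s.hand_speed_m_s > 0.25:
        return "hand too fast near a person"
    return None


def grip_limit(s: PredictedState) -> Optional[str]:
    # Illustrative force cap only.
    if s.grip_force_n > 40.0:
        return "grip force above limit"
    return None


RULES: List[Rule] = [slow_near_people, grip_limit]


def audit_plan(rollout: Sequence[PredictedState],
               rules: Sequence[Rule] = RULES) -> List[Tuple[int, str]]:
    """Check every predicted step against every rule before anything moves.
    Returns (step_index, reason) pairs; an empty list means no rule objected."""
    violations = []
    for i, state in enumerate(rollout):
        for rule in rules:
            reason = rule(state)
            if reason is not None:
                violations.append((i, reason))
    return violations


def execute_if_safe(rollout: Sequence[PredictedState], run_plan: Callable[[], None],
                    rules: Sequence[Rule] = RULES) -> bool:
    """Run the plan only if the audit passes; otherwise halt and print a trace."""
    violations = audit_plan(rollout, rules)
    if violations:
        for step, reason in violations:
            print(f"halt: step {step}: {reason}")  # trace kept for later review
        return False
    run_plan()
    return True


# Hypothetical rollout: the second predicted step moves the hand quickly near a person.
plan = [PredictedState(hand_speed_m_s=0.2, nearest_person_m=1.2, grip_force_n=10.0),
        PredictedState(hand_speed_m_s=0.4, nearest_person_m=0.3, grip_force_n=12.0)]
executed: List[str] = []
ok = execute_if_safe(plan, run_plan=lambda: executed.append("ran"))
```

The key design choice is that the check runs on the prediction rather than on sensor readings after the fact. An unsafe step is therefore caught before the robot commits to it, and the printed trace supports the kind of post-incident review the frameworks call for.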

Why This Matters for Humanoid Robotics and Safety

If world models work as advertised, they can reduce the amount of task-specific programming and enable robots to pick up new skills from the same internet-scale content that trains language and vision systems. The near-term impact will be modest (more reliable kitchen and household assistance, faster iteration on manipulation), but the medium-term prize is enormous: a data engine where each new deployment generates experience that makes the next robot better.

For now, Neo’s new brain marks a tangible step. It moves the conversation from hand-picked demos to a repeatable learning process, one that blends predictive physics, language grounding, and real-world testing. That is exactly where humanoid robotics needs to be heading.