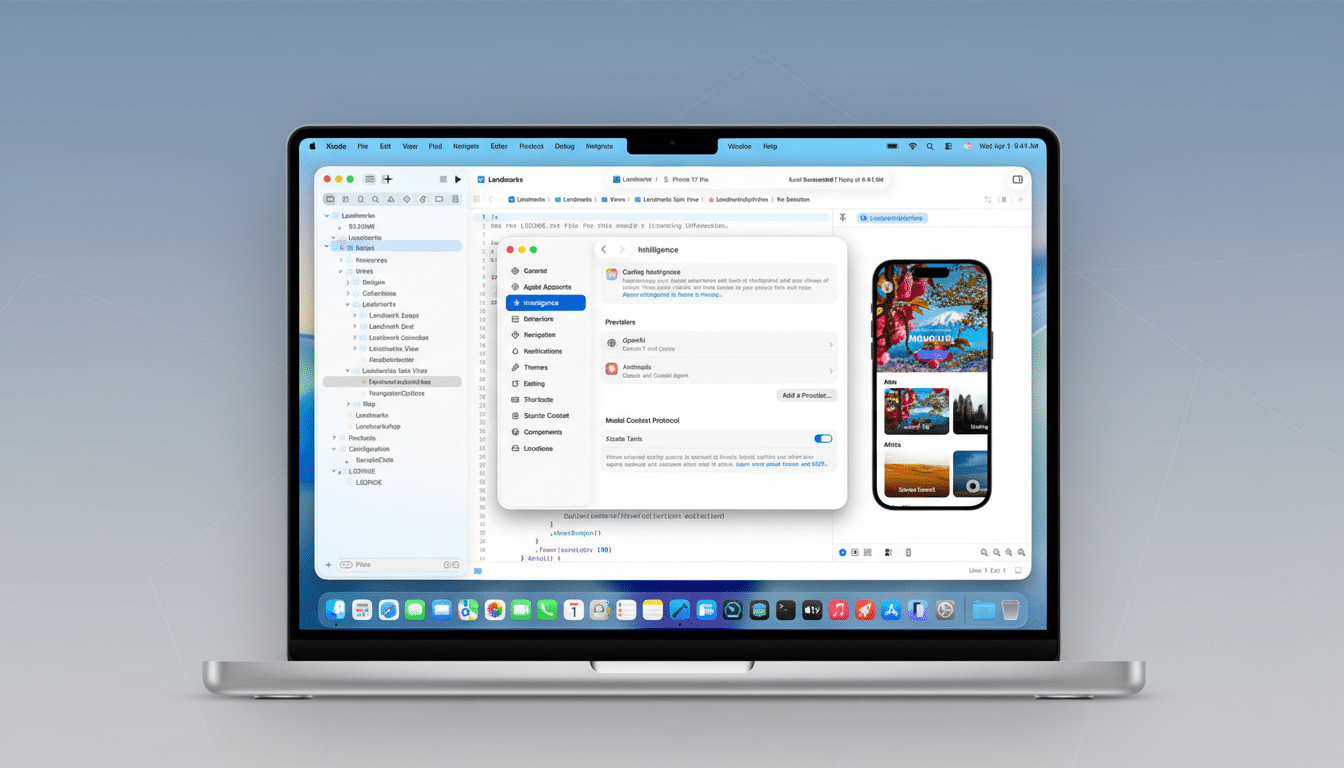

Apple is pushing Xcode into agentic coding, rolling out deeper integrations with Anthropic and OpenAI that let AI agents plan, build, test, and iterate on apps directly inside the IDE. With Xcode 26.3 now in release candidate, developers can bring Claude Agent and OpenAI’s Codex-style models into their daily workflow without leaving Apple’s tooling.

What Agentic Coding Brings To Xcode: Capabilities and Flow

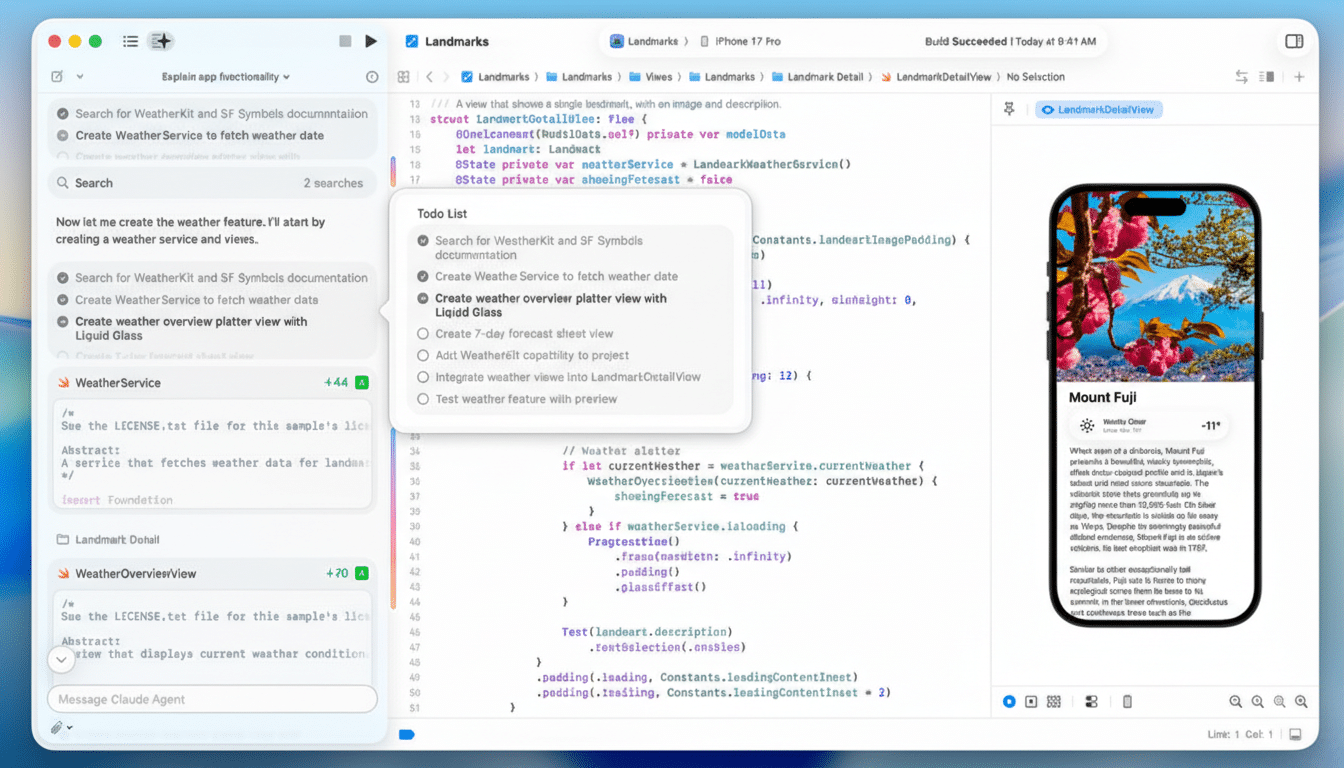

Unlike autocomplete or single-shot chat, agentic coding treats software work as a sequence of steps. In Xcode, these agents can explore a project, understand its metadata and targets, build it, run tests, analyze failures, and propose fixes—looping until the goals are met. Think of it as a teammate that reads the documentation first, explains its plan, then executes transparently.
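The build, test, analyze, and fix cycle described above can be sketched as a simple loop. This is a toy illustration, not Xcode's or any vendor's implementation: `ToyProject`, `run_tests`, `propose_fix`, and `agent_loop` are hypothetical names, and the "fix" step just marks a test as passing where a real agent would edit source files.

```python
from dataclasses import dataclass, field


@dataclass
class ToyProject:
    """Hypothetical stand-in for a project: the set of tests that currently fail."""
    failing_tests: set[str]
    transcript: list[str] = field(default_factory=list)


def run_tests(project: ToyProject) -> list[str]:
    """Return the names of failing tests in a stable (sorted) order."""
    return sorted(project.failing_tests)


def propose_fix(project: ToyProject, test_name: str) -> None:
    """Pretend to fix one failure and record the action in the transcript."""
    project.failing_tests.discard(test_name)
    project.transcript.append(f"fixed {test_name}")


def agent_loop(project: ToyProject, max_iterations: int = 5) -> bool:
    """Test, fix one failure, and repeat until tests pass or the budget runs out."""
    for iteration in range(1, max_iterations + 1):
        failures = run_tests(project)
        if not failures:
            project.transcript.append(
                f"all tests pass after {iteration - 1} fix round(s)"
            )
            return True
        propose_fix(project, failures[0])
    # Budget exhausted: report whether the last fix happened to clear everything.
    return not run_tests(project)


project = ToyProject(failing_tests={"testLogin", "testLogout"})
succeeded = agent_loop(project)
print(succeeded, project.transcript)
```

The iteration cap is the important design choice here: a loop that retries until "the goals are met" needs a budget so a stubborn failure cannot run up cost indefinitely.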

Apple is giving agents live access to current developer documentation so suggestions align with the latest Swift, SwiftUI, and platform APIs. Changes are highlighted inline, and a running transcript shows what the agent did and why. Xcode also creates milestones at each change, so reverting to a known good state is a one-click safety net.
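To make the milestone idea concrete, here is a minimal sketch of snapshot-per-change history with rollback. The article does not say how Xcode stores milestones, so `MilestoneHistory` and its methods are hypothetical, and full-copy snapshots are an assumption chosen for simplicity.

```python
class MilestoneHistory:
    """Hypothetical model: one full snapshot of the file set per change."""

    def __init__(self, initial_files: dict[str, str]) -> None:
        # Milestone 0 is the state before the agent touched anything.
        self._snapshots: list[dict[str, str]] = [dict(initial_files)]

    def apply_change(self, path: str, new_text: str) -> int:
        """Record a change as a new milestone and return its index."""
        files = dict(self._snapshots[-1])
        files[path] = new_text
        self._snapshots.append(files)
        return len(self._snapshots) - 1

    def current(self) -> dict[str, str]:
        """Return a copy of the latest file state."""
        return dict(self._snapshots[-1])

    def revert_to(self, milestone: int) -> dict[str, str]:
        """Discard every milestone after the given one and return that state."""
        if not 0 <= milestone < len(self._snapshots):
            raise IndexError(f"no such milestone: {milestone}")
        del self._snapshots[milestone + 1:]
        return self.current()


history = MilestoneHistory({"App.swift": "v0"})
first = history.apply_change("App.swift", "v1")
history.apply_change("ContentView.swift", "draft")
print(history.revert_to(first))
```

Reverting to `first` drops the later `ContentView.swift` change while keeping the earlier edit, which is the "known good state" behavior the article describes.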

How the New Xcode Agents Work Across Your Projects

Developers enable agents in Xcode’s settings, sign in to Anthropic or OpenAI (or supply an API key), then pick a model variant from a drop-down. Options range from larger “Codex”-class models for complex refactors to lighter-weight models for quick edits. A prompt panel inside Xcode accepts natural language requests, from “add an onboarding flow using SwiftUI” to “migrate deprecated APIs and update unit tests.”

As the agent works, it breaks the task into steps, fetches the relevant docs, edits code, and runs builds or tests. Apple says it optimized token usage and tool calling so these multi-step runs feel responsive even on large workspaces. Asking the agent to outline its plan before coding can improve results, and the UI keeps that plan visible as it executes.

At the end of a run, the agent verifies behavior through tests or previews and can iterate if something fails. Nothing is locked in: developers can accept changes incrementally, inspect diffs, or roll back to any milestone.

Standards and Compatibility With MCP and Third‑Party Agents

Xcode now exposes its capabilities to agents via the Model Context Protocol (MCP), the emerging standard for tool-enabled AI agents. Through MCP, agents can perform project discovery, file edits, snippet generation, previews, and documentation lookups in a consistent way. That also means any MCP-compatible agent—not just Apple’s launch partners—can plug in as the ecosystem matures.
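For readers unfamiliar with MCP, tool invocations travel as JSON-RPC 2.0 messages using the `tools/call` method. The sketch below builds such a request and reads the text content from a reply. The tool name `build_project` and its `scheme` argument are hypothetical, since the article does not list the tools Xcode actually exposes, and the helper functions are illustrative rather than part of any SDK.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def extract_text(response: dict) -> str:
    """Join the text items of a tool result, raising on a JSON-RPC error."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    content = response["result"].get("content", [])
    return "\n".join(
        item["text"] for item in content if item.get("type") == "text"
    )


# Hypothetical tool name and argument, for illustration only.
request = make_tool_call(1, "build_project", {"scheme": "MyApp"})
print(json.dumps(request, indent=2))

# A hand-written reply in the shape MCP uses for tool results.
reply = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "Build succeeded"}],
        "isError": False,
    },
}
print(extract_text(reply))
```

Because every tool goes through the same request shape, an agent only needs to learn tool names and argument schemas, which is what makes it practical for agents beyond the launch partners to plug in.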

Apple worked with Anthropic and OpenAI to co-design the experience, focusing on stable tool interfaces and efficient context management. The goal: agents that are powerful enough to handle multi-file refactors yet judicious with tokens and calls to keep costs and latency in check.

Why It Matters in the IDE Wars for Apple Developers

AI-native coding is already reshaping developer tooling. In a controlled experiment published by GitHub, developers using Copilot completed a set coding task about 55% faster, while McKinsey estimates generative AI can accelerate software development activities by 20–45%. With this release, Apple brings that kind of assistance directly into the place where iOS, macOS, watchOS, and visionOS apps are actually built.

The competitive field is crowded—GitHub Copilot Chat, JetBrains AI Assistant, and AI-forward editors like Cursor are pushing agent workflows hard. Apple’s differentiator is deep, first-party context: intimate knowledge of Xcode projects, build systems, and platform frameworks, paired with immediate access to the latest Apple documentation. That tight loop could reduce context switching and help the agents avoid stale or deprecated APIs.

Practical Impact for Teams Using Xcode’s Agent Features

Early use cases are straightforward and high-value: migrate projects to new SDKs, replace deprecated APIs, scaffold features in SwiftUI, generate unit tests and UI tests, fix flaky builds, or standardize code style across modules. Because the agent can compile and run tests as it works, teams get a tighter feedback loop than chat-only tools.

Onboarding also gets easier. New contributors can ask the agent to explain targets, schemes, and build settings, then follow the transcript to see how changes propagate. For senior engineers, the milestone system enables safe large refactors—explore a branch of changes, and if it’s not right, roll back instantly.

Apple is also offering a live “code-along” workshop to demonstrate best practices for prompting, planning, and reviewing agent output. The emphasis on transparency and reversibility is a nod to real-world concerns about AI-generated code: teams still need human review, policy guardrails, and careful handling of secrets and licenses.

Bottom line: Xcode’s new agent layer doesn’t just autocomplete; it participates. By combining first-party platform knowledge with Anthropic’s and OpenAI’s models through MCP, Apple is turning the IDE into an active collaborator that can read, plan, implement, and verify. For Swift and Apple platform developers, that’s a tangible step toward AI-native software engineering.