X recently added a new transparency feature to profiles, a panel called About This Account, and it is already shaping conversations about authenticity on the platform. The panel shows minimal metadata: roughly when a profile was created, how the account accesses X, and an inferred recent location. It is the location line that has drawn the most attention and raised questions about privacy.

Within hours of the feature's wider rollout, viral posts cataloged accounts branded with labels such as "America First" that appeared to be run from places like Japan, New Zealand, Pakistan, and Thailand. The screenshots covered both tiny profiles and accounts with hundreds of thousands of followers, fueling a wave of claims about foreign influence and bot activity.

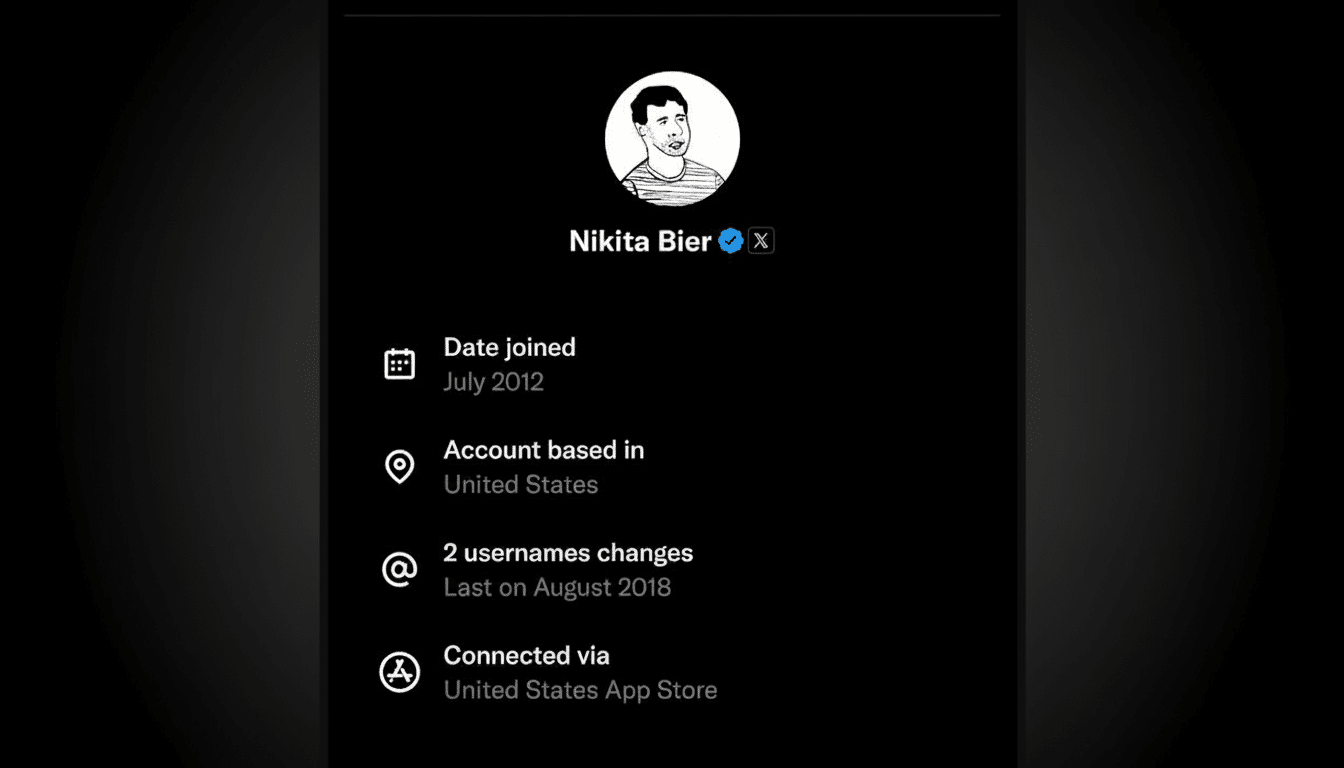

X's director of product, Nikita Bier, described the rollout as an "important first step" toward protecting the health of the platform's public square. He also acknowledged that data for older accounts may be unreliable and said fixes for early errors are under way.

What the new panel actually shows users on X

About This Account offers a look under the hood: an approximate sign-up date for the account, how it connects to X (for example, through the iOS app, the Android app, or the web), and an estimate of where in the world it operates from, based on technical signals. X has not publicly disclosed its methodology, but labels like this are commonly derived from recent login IP addresses, device metadata, and other network indicators that suggest where an account's activity originates.

The goal is simple: help users, journalists, and advertisers grasp the context around a profile at a glance. Other apps have introduced similar transparency tools; Instagram has offered an About This Account section for high-reach profiles for years. On X, however, it is the location field that has made things boil over.

Location labels spark scrutiny over privacy and accuracy

One widely circulated gallery, assembled by an influencer named Micah Erfan, showed U.S.-themed political handles placed in far-flung countries, which many took as confirmation of long-running suspicions that these voices are not who they claim to be. The pattern is consistent with longstanding fears about cross-border trolling and coordinated activity, but a location label on its own is not evidence that an account is fake or foreign-run.

Investigative groups such as the Atlantic Council's DFRLab and Graphika have cataloged foreign influence networks across social platforms for years. At the same time, plenty of legitimate users end up with unexpected location labels through travel, remote work, shared team logins, or outsourced social media management. The nuance is crucial: an inferred location reflects where someone using the account appears to have logged in from recently, not necessarily who operates the account or where its audience is.

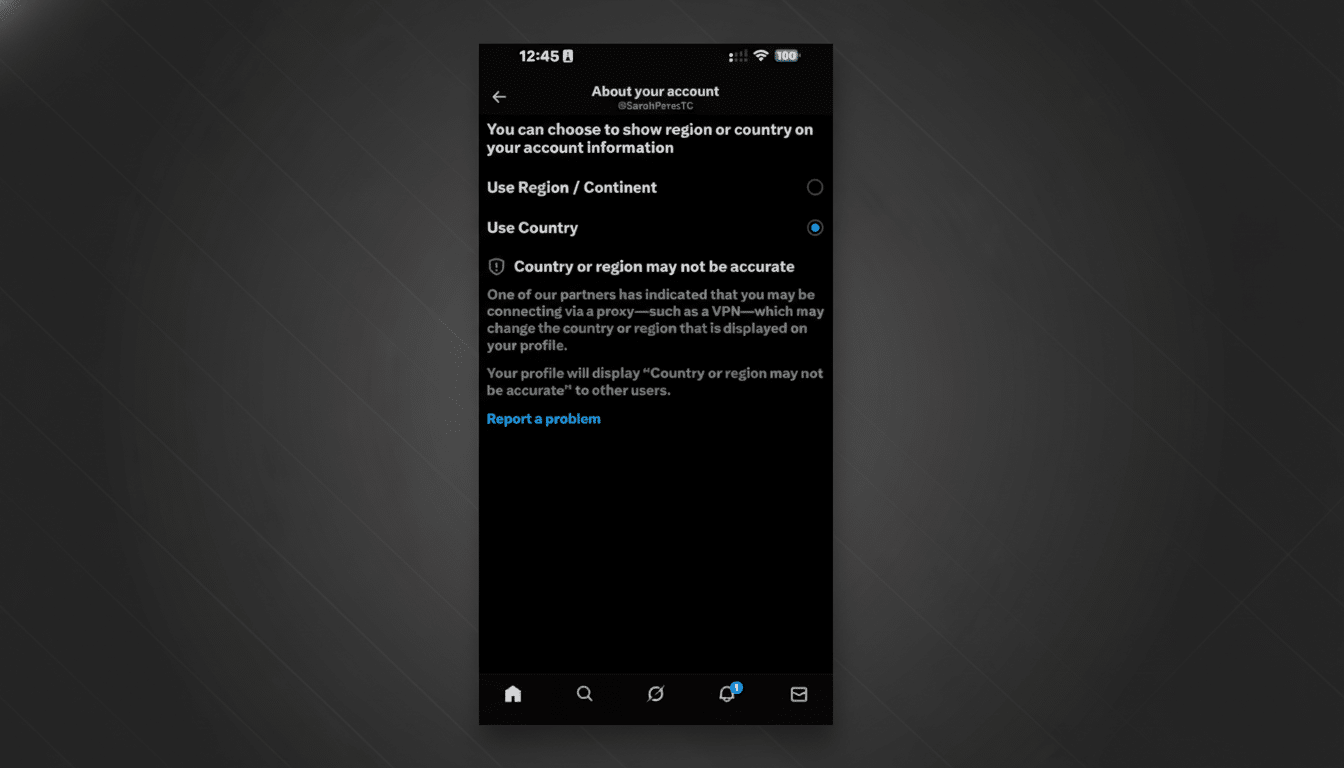

Accuracy caveats and edge cases in inferred locations

Early users have reported false positives, such as accounts that describe themselves as Nigerian but are listed under a different country, and independent journalists have pointed to likely causes such as VPN use, corporate social media teams working across time zones, and stale IP data left over from past sessions. Tech publications also reported that older accounts appear to be disproportionately affected, an issue X says it is working to fix.

IP geolocation is notoriously unreliable at the edges. A last-known IP address might belong to an airport lounge, a hotel network, a data center exit node, or a mobile carrier route that terminates in a neighboring country. If X relies too heavily on recent login signals, labels will stay current but can shift from one session to the next. Clear guidance and an appeals process would go a long way toward limiting confusion and misinterpretation.

Why this matters for trust and transparency on X

About This Account is the latest layer in X's transparency tooling: Community Notes addresses content-level context, and About This Account now adds profile-level signals. Both are designed to help users judge credibility without heavy-handed moderation. The stakes are high: according to recent social media research from the Pew Research Center, X continues to draw a large news-seeking audience in the U.S., so even small transparency changes can have an outsize effect on public discourse.

For advertisers and brand safety teams, provenance cues feed into risk assessments. In political discourse, mislabeling can become one more vector for bad-faith attacks. Trust in About This Account will therefore depend on the accuracy of its location signals and the effectiveness of its disclaimers.

What to watch next as About This Account evolves

The key questions now are methodological and procedural:

- Will X publish more detail on how it infers locations, how often labels get updated, and how users can challenge a bad placement?

- Will the company disclose error rates or indicate when a label is based on limited signals?

- What about high-reach or institutional accounts: will X add further verification signals to reduce confusion?

The feature has a sound premise: visible signals raise the cost of impersonation and make influence operations harder to run. But early misfires are obscuring that promise. If X cleans up its data pipelines and builds user-facing guardrails, About This Account could become a robust trust layer. If not, the label could end up as yet another screenshot-driven Rorschach test.