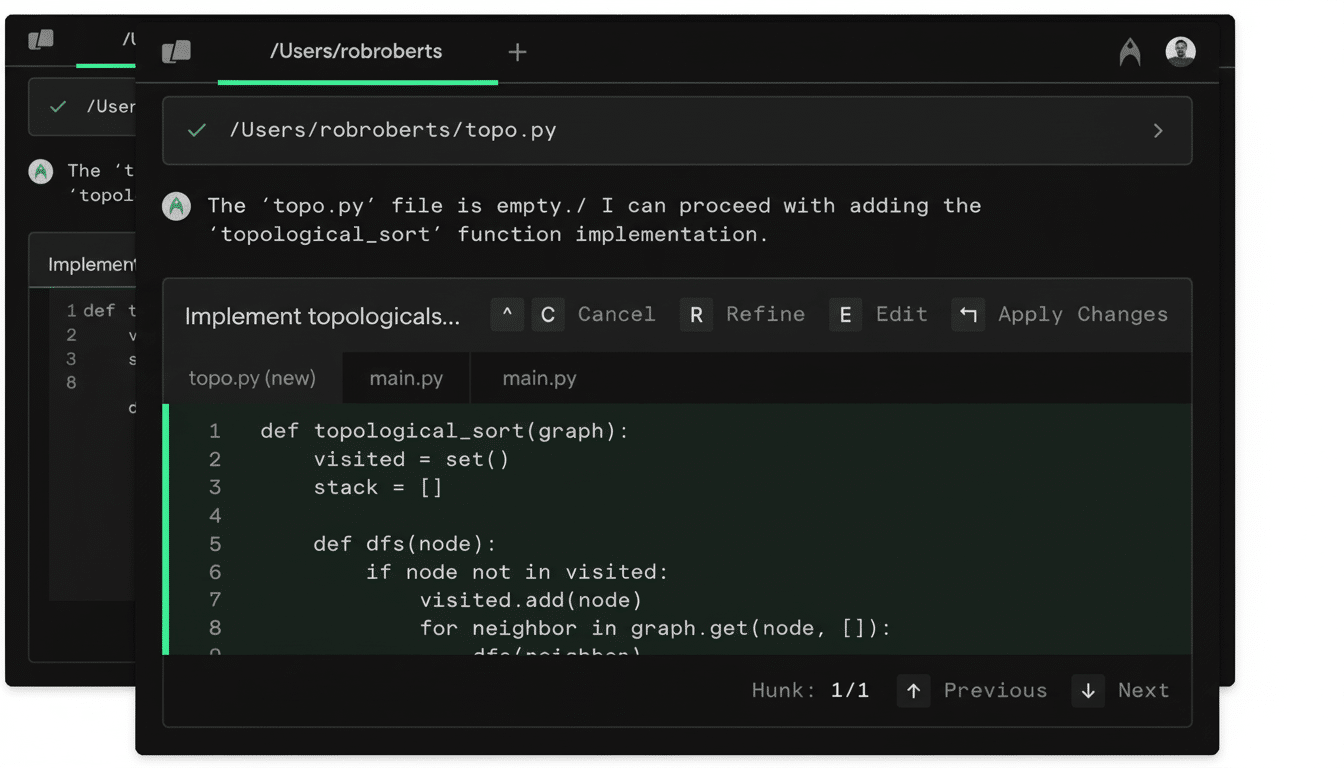

Warp is releasing a set of features, collectively called Warp Code, meant to make AI coding agents behave more like accountable pair programmers. Rather than letting an agent silently rewrite files and crossing your fingers that the build passes, Warp now surfaces diffs, comments, and edit suggestions so developers can view, shape, and approve every change without leaving the terminal.

Why visibility trumps “black box” coding

AI assistants have sped up daily coding, but they have also created a trust gap. Chat-based tools can seem like black boxes: good at spitting out code, less good at explaining themselves. By making the diff the primary unit of work, Warp recasts AI assistance as something auditable and transparent. Each agent action becomes a small, inspectable patch rather than a mysterious sweep through a codebase.

That matters for quality and compliance. Teams with tight change control, SOC 2 or ISO 27001 processes, or regulated pipelines need audit trails. A diff-first workflow turns agent output into code that can be peer-reviewed, tested, and merged with confidence. It’s pair programming etiquette, built into the terminal.

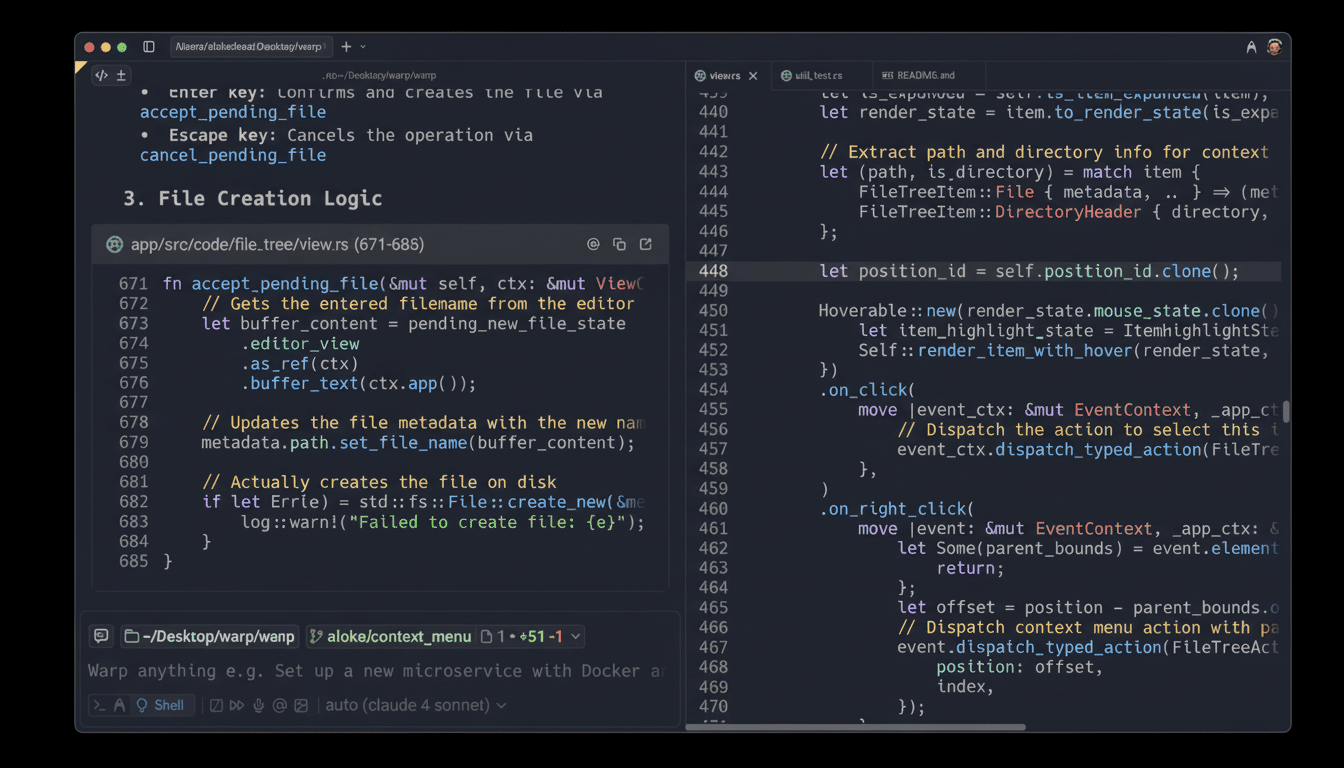

How Warp Code works under the hood in the terminal

The interface sticks to Warp’s minimalist design: commands in a bar at the bottom, an agent response pane, and a side panel that shows the running sequence of diffs. At any point a developer can step in to accept, reject, or abandon changes without leaving the terminal. Highlighting particular lines or blocks of code gives the agent targeted context that guides its next patch.
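Warp has not published how its review queue is implemented, so the Python sketch below is only an illustration of the diff-first idea, assuming each agent step can be captured as a before/after pair for one file. The `Patch` class, the `review` function, and the `"accept"`/`"reject"`/`"abandon"` verdict strings are hypothetical names, not Warp APIs. Rendering uses the standard library's `difflib.unified_diff`, which produces the unified diff format familiar from `git diff`.

```python
import difflib
from dataclasses import dataclass


@dataclass
class Patch:
    """Hypothetical model of one agent step: a single file's before/after text."""
    path: str
    before: str
    after: str

    def render(self) -> str:
        # Unified diff, the format reviewers know from `git diff`.
        return "".join(difflib.unified_diff(
            self.before.splitlines(keepends=True),
            self.after.splitlines(keepends=True),
            fromfile=f"a/{self.path}",
            tofile=f"b/{self.path}",
        ))


def review(patches, decide):
    """Walk the patch queue in order; `decide` returns 'accept', 'reject', or 'abandon'."""
    accepted = []
    for patch in patches:
        verdict = decide(patch)
        if verdict == "accept":
            accepted.append(patch)
        elif verdict == "abandon":
            break  # end the session; later patches are never applied
        # 'reject' drops this patch and moves on to the next one
    return accepted


# Usage: two small patches and a reviewer who only trusts the change to app.py.
patches = [
    Patch("app.py", "x = 1\n", "x = 2\n"),
    Patch("util.py", "def f(): pass\n", "def f(): return 0\n"),
]
print(patches[0].render())
kept = review(patches, lambda p: "accept" if p.path == "app.py" else "reject")
print([p.path for p in kept])  # ['app.py']
```

Keeping each step as a separate, ordered patch is what makes "reject one, keep the rest" possible; a single monolithic rewrite would force an all-or-nothing decision.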

Importantly, build failures are not a dead end. Warp feeds compiler and runtime errors back to the agent so it can propose fixes automatically, and it presents those fixes as new diffs rather than as hidden retries. The trade-off is that keeping the developer in the loop means giving up some of the raw speed of a fully autonomous agent.
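A minimal Python sketch of that feedback loop, assuming a build step that reports errors as text and an agent that returns revised source. Here `build` stands in for a real compiler by calling Python's built-in `compile()`, while `fix_loop`, `toy_agent`, and `approve` are hypothetical names for the loop, the agent, and the developer; none of them are Warp APIs. The point is the control flow: every proposed fix is shown as a diff and must be approved before it replaces the current code, and a declined fix ends the loop instead of triggering a silent retry.

```python
import difflib


def build(source: str):
    """Stand-in for a real build: return an error message, or None on success."""
    try:
        compile(source, "<agent-output>", "exec")
        return None
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"


def fix_loop(source, propose_fix, approve, max_rounds=3):
    """Feed build errors back to the agent; each fix must be approved as a diff."""
    for _ in range(max_rounds):
        error = build(source)
        if error is None:
            return source  # build is green, nothing left to fix
        candidate = propose_fix(source, error)  # hypothetical agent call
        diff = "".join(difflib.unified_diff(
            source.splitlines(keepends=True),
            candidate.splitlines(keepends=True),
            fromfile="current", tofile="proposed",
        ))
        if not approve(diff, error):
            break  # developer declined: stop rather than retry silently
        source = candidate
    # Give up (return None) unless the last accepted version builds cleanly.
    return source if build(source) is None else None


# Usage: a toy "agent" that repairs a missing colon, and a reviewer who logs every diff.
def toy_agent(source, error):
    return source.replace("def add(a, b)\n", "def add(a, b):\n")


reviewed = []


def approve(diff, error):
    reviewed.append(diff)  # a real UI would show this diff to the developer
    return True


broken = "def add(a, b)\n    return a + b\n"
fixed = fix_loop(broken, toy_agent, approve)
print(fixed)
print(len(reviewed))  # 1
```

Capping the loop with `max_rounds` is a design choice in this sketch, not something the source describes; it simply keeps a misbehaving agent from cycling forever.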

Consider porting an API from Flask to FastAPI. Instead of one giant PR, the agent drafts schema updates, route rewrites, and dependency changes as individual patches. You flag the few that are problematic, the agent adjusts, build failures point to narrow fixes, and you merge only what you have reviewed. At that point, the “agent as junior pair” metaphor starts to fit.

Where Warp fits in the AI coding landscape

Warp’s move is aimed at the space between editor-native assistants and one-click app builders. Cursor and Windsurf focus on in-editor refactors and repo-wide actions, while tools such as Lovable target quick app scaffolding. Foundation-model providers also offer their own coding experiences, from command-line workflows to integrated development environment (IDE) plugins. Warp is betting that the terminal will remain the most efficient cockpit for serious work and that agent oversight belongs there too.

The company also borrows a maxim from code review culture: small, composable diffs are easier to reason about than wholesale rewrites. GitHub’s own research on AI-assisted workflows has found that developers complete tasks faster with capable assistants, and other studies have documented substantial time savings on boilerplate and unit tests. The next battleground, then, is not raw code generation but governance, traceability, and the ergonomics of human-in-the-loop control.

Indications of traction — and what to watch for next

Warp claims more than 600,000 active users and says paid adoption is rising, though it did not say how quickly annual recurring revenue has been growing. That growth suggests developers are willing to pay not just for faster code but for safer changes and tighter feedback loops.

For engineering managers, the attraction is tactical. Diff tracking turns AI output into artifacts that can be reviewed and slotted into existing CI/CD gates, DORA metrics, and trunk-based development practices. It also creates a defensible audit trail of who approved what, when, and why, which is critical for post-incident reviews and policy audits.

The bigger move: from autocomplete to responsible agents

Early AI coding tools were fantastic at autocomplete; this wave is about agents that plan, edit, run and fix. But as autonomy grows, so does risk. Warp’s solution is to anchor such autonomy to a human-readable listing of changes. If the past few years were about shipping AI that writes code, the next few will be about shipping AI that can explain — and defend — every line it touches.

If Warp can make agent behavior as readable as a well-crafted PR, it won’t just accelerate development; it will normalize AI’s contributions within the rituals teams are already accountable for.

In a sea of options, that could very well be the difference between a novelty and a default way of doing things.