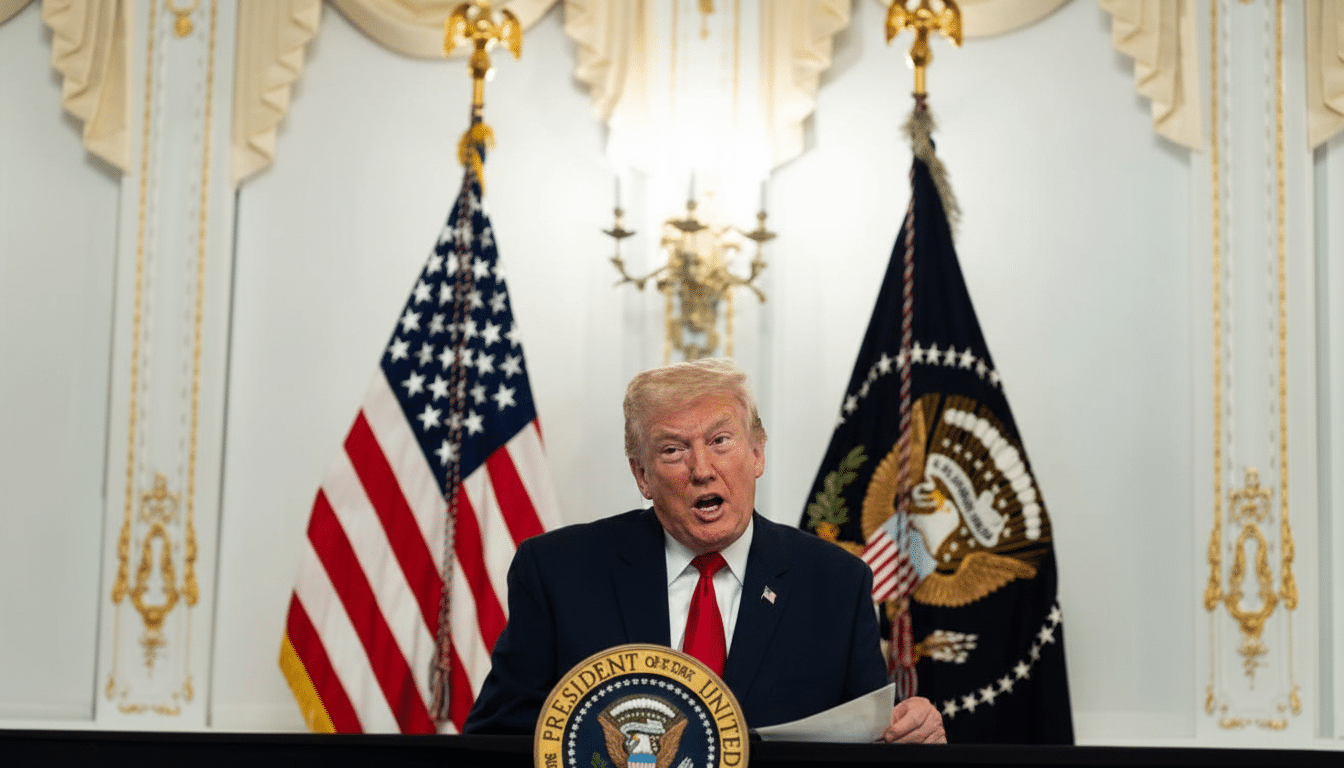

President Donald Trump said he will sign an executive order aimed at curbing states' ability to regulate artificial intelligence, as his administration pushes for a single national framework for the technology. The move sets up a likely collision with statehouses that have already been crafting guardrails on transparency, safety, and consumer protection.

Framing the effort around “one rulebook,” Trump said state-by-state efforts were an impediment to innovation. A leaked draft, reported by The Verge, indicated the order would instruct federal agencies to challenge, sideline, or even penalize conflicting state AI laws, prompting immediate questions about how far an executive order could go without new legislation.

What the Executive Order Would Accomplish on AI Regulation

Though the final text is not yet public, people familiar with the process expect it to direct agencies to assert federal primacy over AI policy. That would likely mean asking the Commerce Department, Federal Trade Commission, and Justice Department to develop uniform standards and to advance preemption arguments when state rules conflict with federal aims.

Procurement could be a potent lever. The Office of Management and Budget has already used purchasing rules to shape how federal agencies adopt AI, and a new order could condition government contracts on compliance with a single federal standard. NIST’s AI Risk Management Framework, released in 2023 and widely referenced since, could serve as the de facto benchmark for safety, security, and testing and evaluation requirements across agencies.

The attempt follows an earlier administration-backed legislative effort to bar state AI regulation for a decade; that provision drew opposition from both parties and died in Congress. The executive approach aims to achieve much of the same result administratively.

Clashing With States and Courts Over AI Preemption Plans

States haven’t waited for Washington. Colorado passed a comprehensive AI law that targets high-risk automated decision-making systems and imposes risk-mitigation duties on the companies that build and deploy them. In recent months, lawmakers in California and New York have introduced bills on model transparency, whistleblower protections, deepfakes, and youth safety, and other states have taken similar steps, in a pattern that echoes earlier waves of state privacy and online safety legislation.

As a legal matter, an executive order cannot by itself override state laws. Under the Supremacy Clause, federal law preempts state law when Congress has legislated or when a federal agency issues a valid rule under clear statutory authority. State attorneys general are almost certain to dispute the claim that sweeping AI preemption by executive order falls within that authority, particularly under the Supreme Court’s “major questions” doctrine, which requires clear congressional authorization for agency actions of vast economic and political significance.

Past fights offer a roadmap. Federal preemption battles over auto emissions standards and net neutrality produced years of litigation. A similar trajectory is likely here, as courts weigh federal uniformity against states’ police powers to protect consumers and workers.

Industry Stakes and Investor Pressure in AI Preemption

For AI developers and cloud platforms, a national rulebook could make compliance easier and less expensive than operating under a patchwork of state laws. Venture investors, some with close ties to the administration, have argued that preemption is necessary to keep pace with rivals in Europe and China. Tech investor David Sacks, now the White House’s AI and crypto czar, has reportedly played a role in shaping the order.

The Stanford AI Index has found that the United States leads the world in producing frontier models and attracting private AI investment, but governance costs are rising. Companies are already spending heavily on security evaluations, red-teaming, and data provenance, areas where overlapping state mandates can differ in definitions, testing protocols, and other requirements. A single national standard might reduce duplication, but it could also weaken protections favored by states with stronger consumer safeguards.

Workforce and Safety Implications of Federal AI Preemption

AI’s potential impact on labor is already on lawmakers’ radar. Sen. Josh Hawley and Democratic colleagues have proposed bipartisan legislation that would require large employers to report AI-driven layoffs or major automation plans. A federal preemption push that sidelines states could stall similar efforts at the state level, or effectively cap worker protections, unless Congress intervenes.

Safety is equally unsettled. An analysis by the Future of Life Institute this year found that only 3 of the top 8 models for which information was available met its minimum safety standards, underscoring how far voluntary practices still have to go. Critics caution that removing state enforcement backstops before federal rules are in place could widen the gap between rapid deployment and robust risk management.

What to Watch Next as the AI Executive Order Unfolds

The details will matter. Key signals will be whether agencies rely on procurement, guidance, or formal rulemaking; how they interpret “conflict” with state laws; and whether the White House directs them to apply a particular preemption test. Existing state statutes are likely to face legal challenges soon after the order takes effect.

The order’s staying power will depend on Congress. If lawmakers codify parts of a national AI framework, covering audits, disclosures, and model safety, preemption would rest on much firmer legal ground. Absent legislation, the administration is wagering that executive power and agency authority can carry a national AI policy through the courts. The outcome will determine, for developers, investors, and consumers alike, whether the United States ends up with a single national playbook or a patchwork of state guardrails.