TikTok is introducing new controls and transparency features for users who are tired of AI-generated video clogging their For You feed. The company is testing a topic-level control that lets people adjust how much AI-made content they see, as well as an “invisible watermark” system intended to quietly label synthetic media even after it has been reshared across the internet, whether or not the people sharing it know the label is there.

What TikTok Is Changing With AI Content Controls

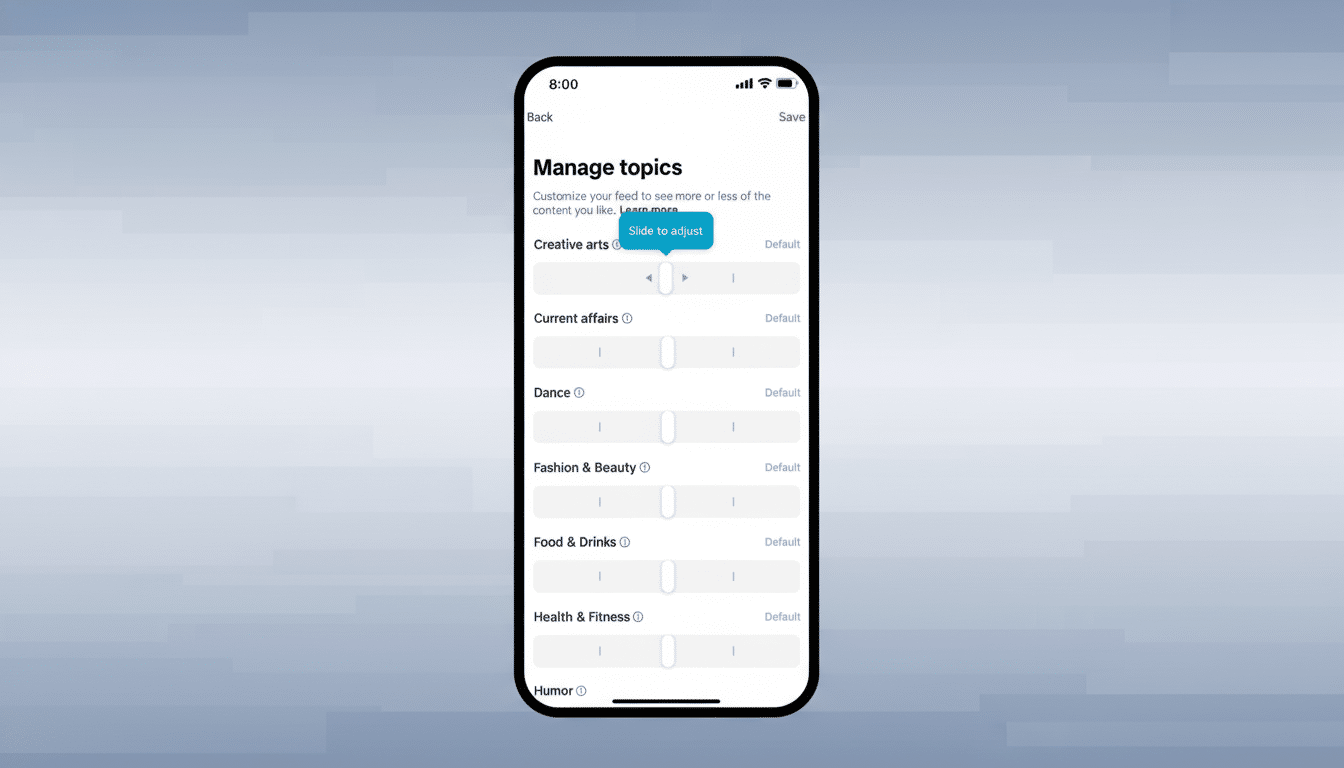

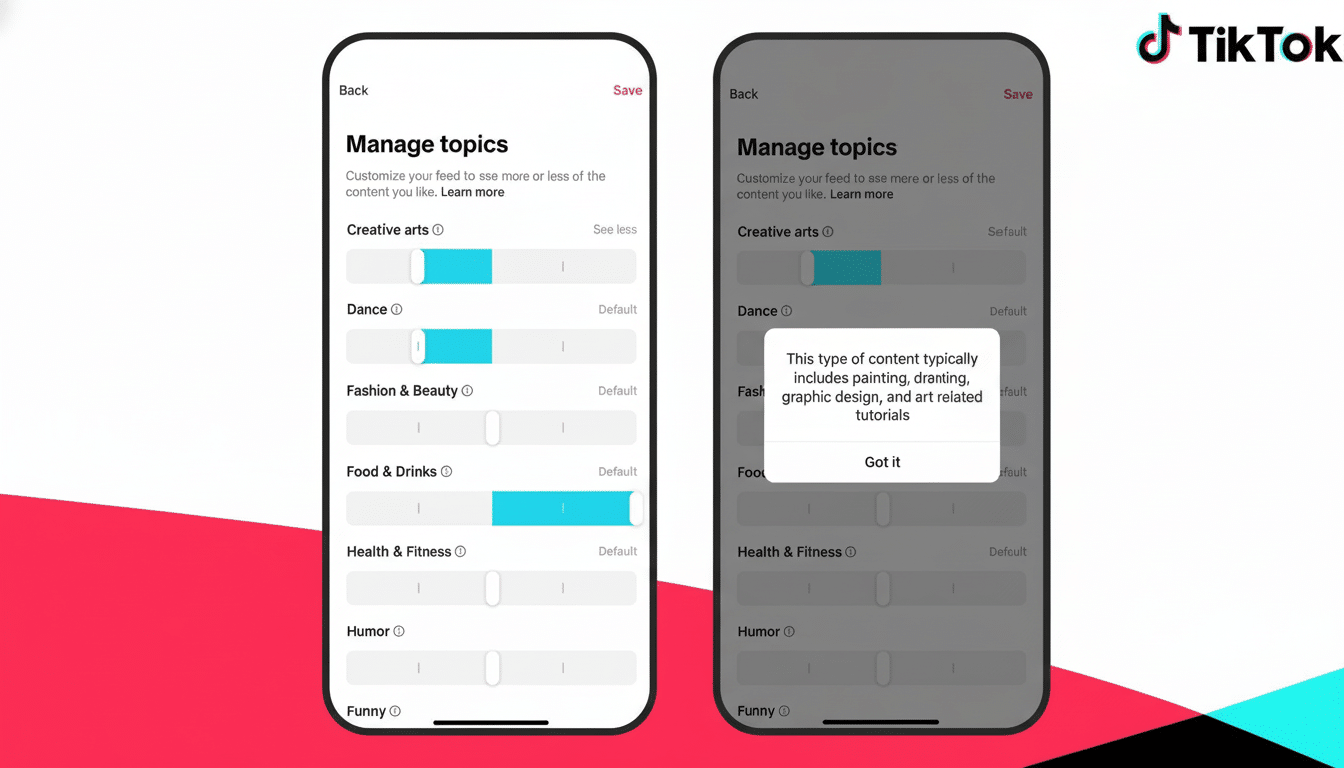

The linchpin is a control found within Manage Topics, TikTok’s central destination for influencing recommendations. Unlike category picks such as Current Affairs or Fashion & Beauty, this setting cuts across all genres and targets how content gets made rather than what it is about. The concept is straightforward: if AI videos aren’t your bag, you’ll be able to dial them down, and if you’re curious, you can surface more.
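
TikTok has not said how the control works internally. As a rough illustration only, a "dial" like this could be modeled as a multiplier applied to a recommender's score for AI-generated videos. Everything in this sketch is hypothetical, including the `Video` fields, the `rerank` function, and the `ai_preference` range:

```python
from dataclasses import dataclass


@dataclass
class Video:
    video_id: str
    base_score: float       # hypothetical relevance score from the recommender
    is_ai_generated: bool   # hypothetical flag from labels or detection


def rerank(videos, ai_preference):
    """Re-order videos using a viewer's AI preference.

    ai_preference is an assumed scale from -1.0 (see less AI content)
    to 1.0 (see more); 0.0 leaves the ranking unchanged.
    """
    if not -1.0 <= ai_preference <= 1.0:
        raise ValueError("ai_preference must be between -1.0 and 1.0")

    def adjusted(video):
        # Only AI-generated videos are scaled; other scores pass through.
        multiplier = 1.0 + ai_preference if video.is_ai_generated else 1.0
        return video.base_score * multiplier

    return sorted(videos, key=adjusted, reverse=True)


feed = [
    Video("a", 0.9, True),
    Video("b", 0.8, False),
    Video("c", 0.5, True),
]
less_ai = [v.video_id for v in rerank(feed, -0.5)]
```

With the dial turned down, the human-made clip `b` moves ahead of the higher-scoring AI clip `a`. A production system would presumably blend this preference with many other ranking signals.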

TikTok stresses it isn’t anti-AI. The company is positioning the tools as user-choice and transparency features, not a rejection of generative tech. That nuance matters. While creators experiment with AI effects and storytelling, the platform is trying to let viewers keep what many now scornfully call “AI slop” out of their content diet without kneecapping creative uses.

Invisible Watermarks, And Why They’re Important

Alongside the feed controls, TikTok is also testing invisible watermarks to bolster its current “AI-generated” labels. Unlike a visible badge, these signals are embedded in the file itself so that identification is meant to survive reposts, crops, and recompression, routine operations that can obliterate on-screen labels.

Watermarking synthetic media is a very active area of research. Standards efforts such as the Content Authenticity Initiative and C2PA (the Coalition for Content Provenance and Authenticity) are pushing cryptographic “content credentials” that travel with files, while academic labs and industry teams continue to investigate how resilient watermarks are to edit attacks. There’s no perfect solution, but combining creator disclosures with automated detection and embedded provenance information is fast becoming a best practice across platforms.

Why Users Want AI Out of Their Feeds on TikTok

Audience fatigue with low-quality generative content is real. NewsGuard has cataloged hundreds of AI-generated news and information sites churning out junk articles, while social platforms routinely grapple with synthetic celebrity clips and deepfake mashups. At the same time, Pew Research Center reports that most Americans are concerned about AI, a sentiment whose intensity tends to rise around elections and public health events.

Creators and advertisers have a stake as well. Brands worry about appearing next to synthetic disinformation, while genuine creators can be buried beneath an avalanche of templated, auto-generated clips. Offering users a visible tool to filter AI could improve quality metrics such as time spent on the platform and help reduce the cost of moderating borderline synthetic content.

How This Fits Into The Broader Industry Shift

TikTok isn’t alone. Pinterest recently introduced an option to exclude AI-generated content altogether, and users who prefer human-made inspiration appear to welcome it. YouTube has asked creators to disclose altered or synthetic content they upload and now labels many such videos, while Meta has expanded its labeling of AI-generated content across its apps. Regulators are pushing in the same direction: the European Commission and consumer protection authorities have called for clearer labels on manipulated media, and the F.T.C. has signaled that misleading AI content can trigger enforcement.

TikTok is also backing education and governance efforts. The platform is launching a $2 million AI literacy fund to support work that teaches people how generative tools function, and it recently joined the Partnership on AI, a nonprofit organization that develops guidelines for responsible AI. Both moves suggest a philosophy that pairs user controls with system-level transparency.

The Open Questions About TikTok’s AI Controls

The biggest open questions are how granular the feed control will be and how reliably TikTok can detect AI content in the wild. Invisible watermarks can help, but many tools do not embed them, and bad actors can attempt to strip or obfuscate the signals. That is why it will be crucial to combine provenance tech with user reporting, creator disclosures, and model-driven detection.
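
To make the layered approach concrete, here is a minimal sketch of how several weak and strong signals might be combined into a labeling decision. It does not describe TikTok’s pipeline: the `Signals` fields, the `classify` function, the thresholds, and the outcome names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    creator_disclosed: bool        # creator ticked an "AI-generated" box
    has_provenance_metadata: bool  # e.g. content credentials found in the file
    watermark_detected: bool       # invisible watermark was recovered
    detector_score: float          # hypothetical model confidence, 0.0 to 1.0
    user_reports: int              # viewers who flagged the video as AI


def classify(signals, detector_threshold=0.9, review_threshold=0.5,
             report_threshold=5):
    """Return 'label_as_ai', 'needs_review', or 'no_label'."""
    # Explicit disclosures and embedded provenance are treated as strong signals.
    if (signals.creator_disclosed
            or signals.has_provenance_metadata
            or signals.watermark_detected):
        return "label_as_ai"
    # A very confident detector result is enough on its own.
    if signals.detector_score >= detector_threshold:
        return "label_as_ai"
    # Weaker evidence is routed to review rather than labeled outright.
    if (signals.detector_score >= review_threshold
            or signals.user_reports >= report_threshold):
        return "needs_review"
    return "no_label"


stripped = Signals(False, False, False, detector_score=0.6, user_reports=0)
decision = classify(stripped)
```

In this example a video whose watermark has been stripped still lands in `needs_review` because the detector score is moderately high, which is the gap that pairing provenance with other signals is meant to cover.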

Still, the direction is clear. As AI-generated media becomes common, platforms will be judged on how well they let people shape their experience and on how clearly they label what is on the screen. TikTok’s tests are a practical step toward that future, and they may push competitors to offer similar AI filters that are less opaque.