The Pitt’s episode 8:00 AM serves up a timely take on artificial intelligence in emergency medicine, capturing both the buzz and the blind spots. Newcomer Dr. Baran Al-Hashimi pushes an AI note-taking tool into a chaotic ER, promising big gains and brushing off errors as rare. The reality in hospitals is more complicated: ambient AI can indeed free clinicians from keyboards, but its accuracy, safety, and equity depend on context, governance, and relentless human oversight.

What The Show Gets Right About AI Scribes

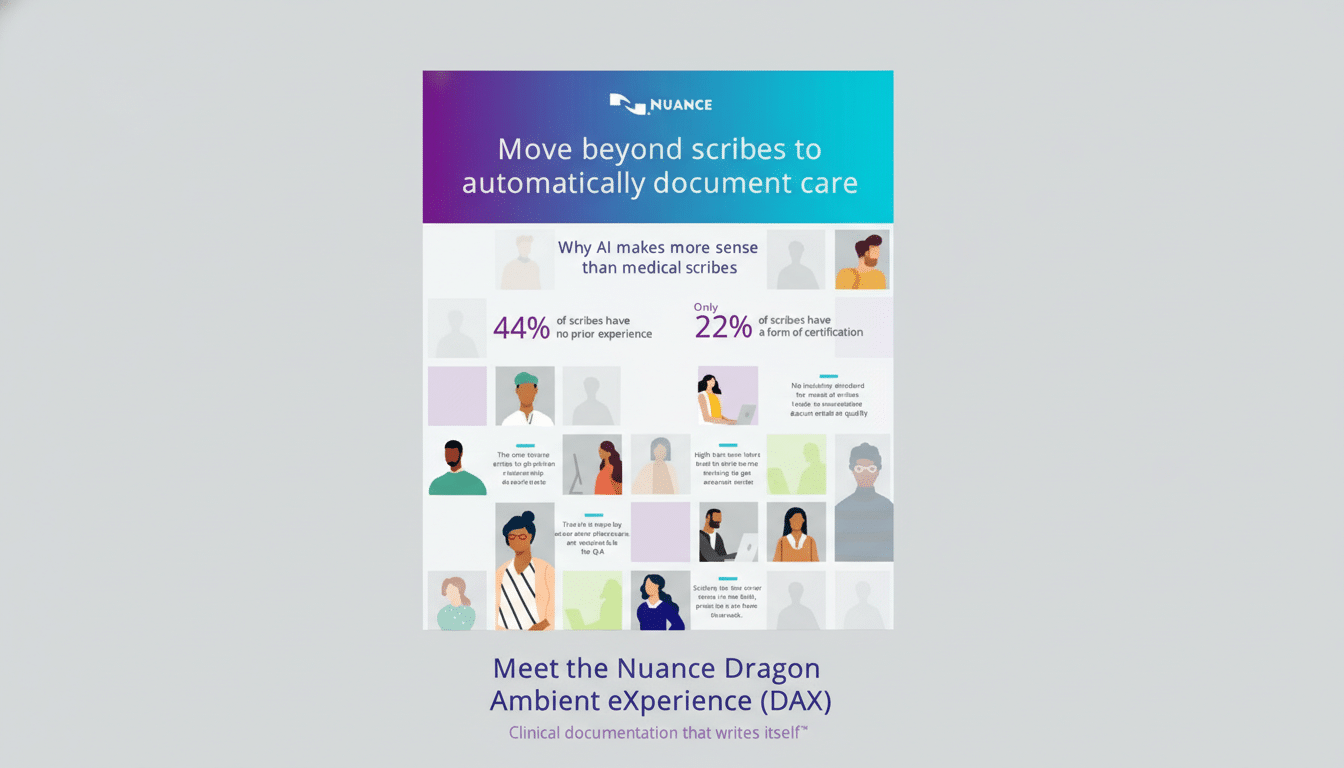

The episode’s core pitch, AI that listens to visits and drafts clinical notes, is grounded in what many health systems are piloting now. Ambient documentation products such as Abridge, Nuance DAX, and Nabla are already embedded in electronic health records. Early evaluations and hospital reports point to significant time savings, often in the range of 50–70% for documentation tasks, and reductions in after-hours “pajama time.” The American Medical Association has long flagged clerical burden as a driver of burnout, so any credible relief matters.

Crucially, the show also gets the workflow right: clinicians still review and edit AI-generated notes. That human-in-the-loop step isn’t optional; it’s the safety net. In real deployments, clinicians remain the accountable authors of the chart.

The 98% Accuracy Claim Needs Real-World Context

Where the script stretches reality is in asserting that “generative AI is 98% accurate.” Accurate at what, exactly? Automatic speech recognition and summarization? Diagnostic reasoning? The answer matters.

In controlled settings, medical speech recognition can approach high accuracy, but performance drops in noisy, multi-speaker environments like a busy ER, with crosstalk, alarms, and jargon. Systematic reviews in BMC Medical Informatics and Decision Making have documented highly variable word error rates and clinically significant misrecognitions in real-world clinical audio. And large language models that summarize or reason over transcripts can introduce “hallucinations,” fabricating details that were never said.

Importantly, accuracy is not a single number. It differs by task (transcription vs. summarization vs. recommendation), by population (accents, dialects, non-native speakers), and by setting (quiet clinic vs. trauma bay). Research has shown higher ASR error rates for Black speakers compared with white speakers, raising equity concerns if AI output is trusted without scrutiny. A blanket 98% figure glosses over these nuances.
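To make the point concrete, here is a minimal sketch of how a single pooled accuracy figure can hide a much worse error rate in one setting. It computes word error rate (word-level edits divided by reference words) per setting and overall. The transcripts and setting labels are invented for illustration and are not drawn from any real deployment or vendor evaluation.

```python
from collections import defaultdict


def edit_distance(ref_words, hyp_words):
    """Word-level Levenshtein distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp_words) + 1))
    for i, r in enumerate(ref_words, 1):
        curr = [i]
        for j, h in enumerate(hyp_words, 1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,         # deletion
                            curr[j - 1] + 1,     # insertion
                            prev[j - 1] + cost)) # substitution or match
        prev = curr
    return prev[-1]


def pooled_wer(samples):
    """Return (WER per setting, overall WER), pooling edits over reference words."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for setting, reference, hypothesis in samples:
        ref = reference.lower().split()
        hyp = hypothesis.lower().split()
        errors[setting] += edit_distance(ref, hyp)
        words[setting] += len(ref)
    per_setting = {s: errors[s] / words[s] for s in words}
    overall = sum(errors.values()) / sum(words.values())
    return per_setting, overall


# Hypothetical (setting, what was said, what the system transcribed) triples.
SAMPLES = [
    ("quiet clinic", "patient denies chest pain", "patient denies chest pain"),
    ("quiet clinic", "start hydralazine ten milligrams", "start hydralazine ten milligrams"),
    ("trauma bay", "start hydralazine ten milligrams", "start hydroxyzine ten milligrams"),
    ("trauma bay", "no known drug allergies", "no drug allergies"),
]

if __name__ == "__main__":
    per_setting, overall = pooled_wer(SAMPLES)
    for setting, wer in per_setting.items():
        print(f"{setting}: WER {wer:.1%}")
    print(f"overall: WER {overall:.1%}")
```

On this toy data the pooled error rate is 12.5%, while the trauma-bay error rate is 25% and the quiet-clinic rate is 0%. Both trauma-bay errors are clinically meaningful (a swapped drug name and a dropped "known"), which a headline accuracy number would not reveal.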

Medication Mix-Ups Are A Real And Present Risk

The episode’s immediate error—substituting a similar-sounding drug—rings true. Look-alike, sound-alike medications (think hydroxyzine vs. hydralazine) are a well-known safety hazard tracked by the Institute for Safe Medication Practices. Voice recognition can exacerbate that risk, and generative summaries can entrench it if a wrong term is confidently rephrased.

Best practice is boring but vital: structured medication reconciliation, closed-loop verification with the patient, standardized vocabularies, and pharmacist review where feasible. When AI is used, organizations should require high-sensitivity alerts for medication entities, clear provenance (what was heard vs. what was inferred), and auditable edits. The show correctly implies that proofreading is non-negotiable; in production deployments, health systems also add governance, guardrails, and ongoing quality monitoring.
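As a rough illustration of what a high-sensitivity alert on medication terms might look like, the sketch below scans a drafted note for drug names that have a known sound-alike counterpart and flags each one for explicit confirmation. The pair list, function names, and output fields are hypothetical. Only the hydroxyzine/hydralazine pair comes from the discussion above; a real system would draw on a maintained confused-drug-name list and a standardized medication vocabulary rather than a hardcoded table.

```python
import re

# Illustrative pairs only; NOT a reproduction of any official list.
CONFUSABLE_PAIRS = [
    ("hydroxyzine", "hydralazine"),
    ("clonidine", "clonazepam"),
]


def build_lookup(pairs):
    """Map each drug name to the set of names it is easily confused with."""
    lookup = {}
    for a, b in pairs:
        lookup.setdefault(a, set()).add(b)
        lookup.setdefault(b, set()).add(a)
    return lookup


def flag_confusable_medications(note, pairs=CONFUSABLE_PAIRS):
    """Return one flag per mention of a drug that has a sound-alike counterpart.

    Each flag records the term, its character offset in the note, and the
    names a reviewer should rule out before signing.
    """
    lookup = build_lookup(pairs)
    flags = []
    for match in re.finditer(r"[A-Za-z]+", note):
        word = match.group().lower()
        if word in lookup:
            flags.append({
                "term": word,
                "offset": match.start(),
                "confirm_not": sorted(lookup[word]),
            })
    return flags


if __name__ == "__main__":
    draft = "Started hydroxyzine 25 mg at bedtime."
    for flag in flag_confusable_medications(draft):
        print(flag)
```

The design choice here is deliberately conservative: every mention of a confusable drug is flagged, whether or not the transcription was actually wrong, because the cost of an extra confirmation is small compared with a wrong medication in the chart.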

Where AI Truly Shines In Everyday Medicine

The series frames AI as a threat to clinical judgment, but the clearest wins today are narrow, measurable, and collaborative. The US Food and Drug Administration has cleared hundreds of AI/ML-enabled medical devices, the majority in radiology. Applications like stroke and bleed triage, pulmonary embolism detection, and mammography decision support have shown faster time-to-alerts and improved sensitivity in studies, while keeping clinicians in control.

Documentation assistance also shows promise when scoped tightly. Ambient tools that extract problems, medications, and orders from conversations can reduce clicks and copy-paste errors, provided the system highlights uncertainties and supports quick correction. The payoff is not just speed—it is reclaiming attention for the patient in the room.

The Human Factor And Governance In Clinical AI

The episode’s nod to “gut” instinct and empathy is well placed. Clinical intuition is not mysticism; it’s pattern recognition informed by experience, context, and values. AI can surface patterns at scale, but it doesn’t shoulder accountability or build trust at the bedside. That’s why national and international bodies, from the World Health Organization’s guidance on AI ethics in health to NIST’s AI Risk Management Framework, emphasize transparency, human oversight, and bias management.

What’s missing on-screen, and critical off-screen, is the plumbing: data security under HIPAA, bias testing across patient groups, calibration monitoring, incident reporting, and clear policies on where AI is allowed to act vs. advise. The Joint Commission has urged organizations to validate AI tools locally and train staff on limitations. Without that scaffolding, promised gains can evaporate into new risks and new work.

The Pitt captures the moment medicine is in: excited by tools that might give clinicians more time and attention, wary of overconfident claims, and adamant that people—not models—remain responsible. If the show keeps its focus there, it will continue to feel uncomfortably, usefully real.