Snapchat is bringing open-ended generative imagery into its camera with a new feature called the Imagine Lens, which generates shareable images from conversational prompts. Write a caption, and the Lens will generate, edit or re-roll a Snap on the spot, then let you post it to your Story, send it to friends, or save it for use outside the app.

What the new Lens does, and does not do

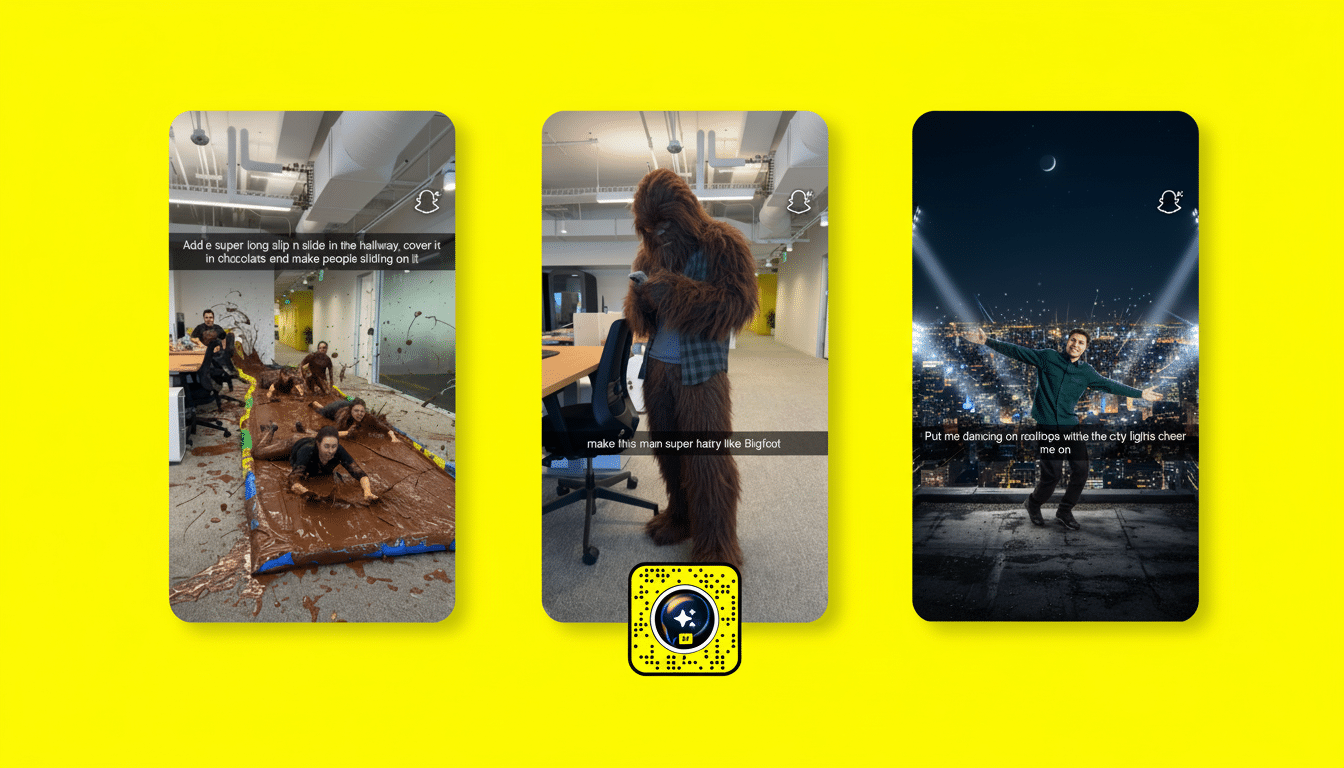

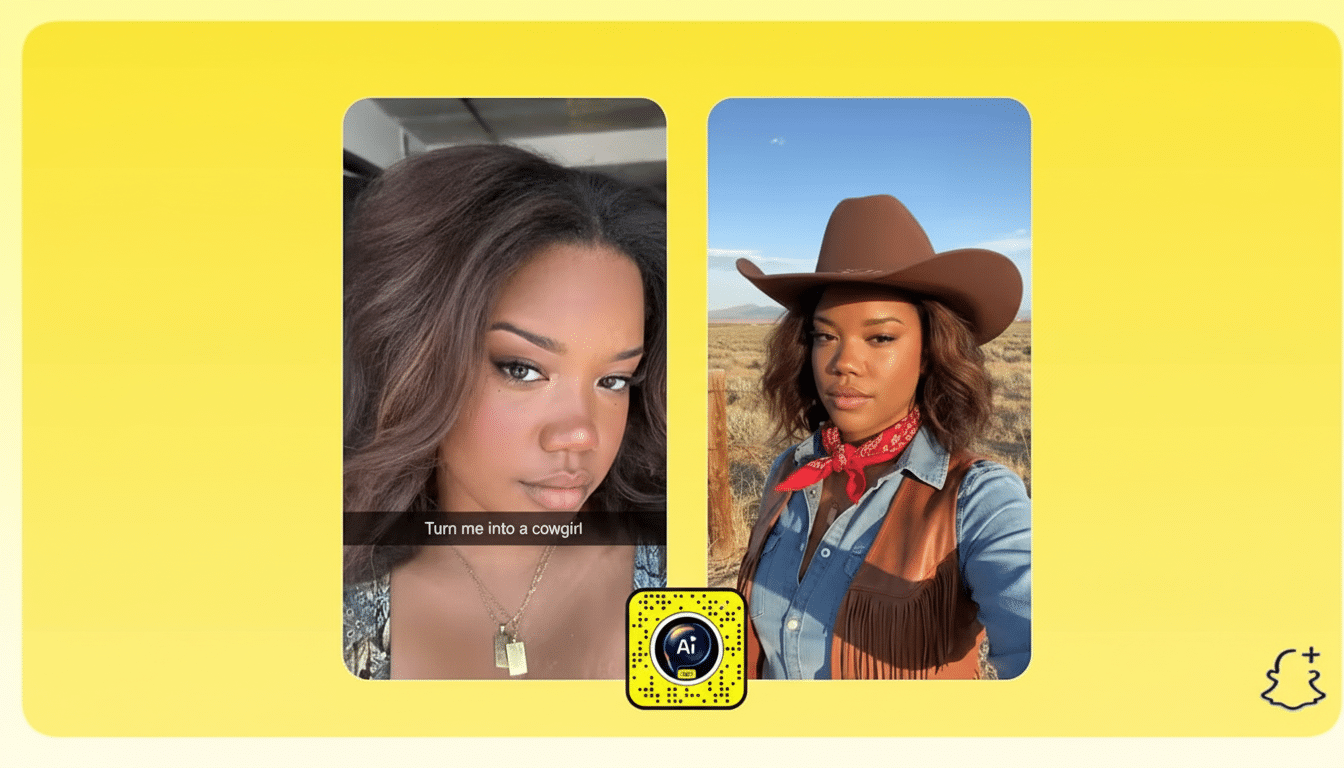

Unlike Snapchat’s early AI effects, which were confined to certain styles or scenes, Imagine Lens accepts an open prompt. You might request an illustrated pet portrait, a surreal landscape behind your selfie or a stylized character interpretation of yourself. The Lens also features recommended effects that offer a quick hit of inspiration, from multi-panel comic treatments to charming caricatures and action shots of the subject in unlikely settings.

Crucially, the prompt lives in the caption bar, so you can tweak the wording of a single phrase to radically alter tone, art style, lighting or composition without having to start over. It is an iterative loop (prompt, preview, edit, regenerate) that mirrors the workflow of popular desktop tools, condensed into a mobile-first AR experience.

How to get it and how much it costs

Imagine Lens appears in the Lens Carousel for Snapchat+ Platinum and Lens+ subscribers, and is also listed under Exclusive. Once you’ve chosen the Lens, tap the caption section to write or edit your prompt.

Snapchat+ Platinum costs $15.99 a month, and Lens+ is $8.99 a month. Gating an open-prompt generator behind premium tiers is a smart choice: it moderates demand for compute-intensive AI features and gives Snap a clearer way to monetize the app alongside its broader Snapchat+ bundle.

What’s powering the images

Snap has said that its Lenses are made with a mix of proprietary and best-in-class industry models. The company previously shared a lightweight, mobile-optimized text-to-image research model, signaling an effort to minimize latency and to move more of the creation steps on-device when possible. In practice, you likely get a hybrid pipeline: rapid feedback in the camera, server-side diffusion for quality, and then post-processing to blend results seamlessly into selfies and scenes.

That matches Snap’s longer-term plan to combine AR with generative AI. The company has also launched new video-capable generative Lenses and made Lens Studio tools available on iOS and the web to make it easier for creators to develop for the platform. More deeply integrated AI authoring inside the camera would speed up that ecosystem, particularly for short-form storytelling, where velocity is even more crucial.

Why it’s important for Snap and creators

Snapchat’s AR has long been a daily habit: industry analysts estimate that hundreds of millions of users engage with Lenses every day. Embedding open-prompt generation right into the capture flow effectively turns the camera itself into a creative assistant, not just a drawer for filters. For creators, it means quicker concepting: turn a joke into a four-panel gag, try out visual styles for a meme format or put together a brand pitch without leaving the app.

For Snap, it helps blunt competition from social rivals, some of which are rolling out their own AI art and editing tools. Meta has included image-generation tools throughout its apps, Google has added generative editing to Photos, and short-form video platforms have embraced AI effects. Snapchat’s advantage is immediacy: users can create and remix content the instant it’s captured, while social intent is at its peak.

Safety, limits, and responsible use

The open-ended nature of generators raises familiar questions about misuse, likeness manipulation and intellectual property. Snap has built safety filters and policy checks into its camera features, and the same expectations carry over here: rapid moderation, content suppression, and clear indicators when AI is involved. Industry organizations like the Partnership on AI advocate for transparent disclosure of synthetic media; Snapchat’s in-Lens experience and sharing flows are well-suited to surface such signals.

Users should also not expect perfect prompt adherence: in rare cases the output may not match the prompt, a known limitation of diffusion-based systems. Styles will still vary between re-rolls. The upside is creative serendipity; the tradeoff is learning to steer the model with a pithy descriptive phrase.

Early take: practical scenarios

Imagine Lens suits formats that are quick to remix, such as turning friends into comic-book heroes for a group Story, adding a whimsical background when the real world around you is lackluster or creating a stylized avatar for your profile photo. Local businesses and content creators can storyboard an idea in seconds, iterating toward a final look before they commit to a shoot.

Because prompts are editable on the fly, the Lens works as a kind of live mood board inside the camera. It’s a subtle but profound shift, from seeking the “right filter” to articulating the idea and letting the system handle the rendering.

The bottom line

With an open-prompt generator built into its AR camera, Snapchat is converting text into visuals at the very moment users are most likely to create and share. It’s a logical extension of Snap’s AR playbook, a clever premium differentiator and, if the underlying models keep getting better, a plausible way to make everyday Snaps feel authored, not merely captured.