ServiceNow has signed a multi-year partnership with Anthropic, deepening its push to infuse generative AI into enterprise workflows just days after unveiling a separate pact with OpenAI. The agreement makes Anthropic’s Claude family the preferred models across ServiceNow’s AI features and sets Claude as the default engine for the company’s AI agent builder, Build Agent. Financial terms were not disclosed.

The collaboration also extends inside the company: ServiceNow is rolling out Claude to its roughly 29,000 employees, with Claude Code available to engineering teams. The move reflects a clear strategy—use multiple frontier models and orchestrate them behind the scenes to balance quality, safety, and cost for different enterprise tasks.

Why Anthropic Is in the Mix for ServiceNow’s AI Push

Anthropic’s Claude models are known for long context windows, strong instruction-following, and an emphasis on safety guardrails—attributes that matter when AI is embedded into IT, HR, finance, and customer operations. For regulated and global organizations, the ability to constrain outputs, log decisions, and apply policy controls can be as important as raw model quality.

Anthropic has been steadily expanding its enterprise footprint with alliances across consulting and infrastructure, including Accenture, IBM, Deloitte, and Snowflake, and has highlighted deployments with insurers like Allianz. The ServiceNow partnership complements Anthropic’s ties to major clouds and signals growing demand for Claude as an option inside mainstream enterprise platforms.

What Changes for ServiceNow Customers With Claude as Default

Customers will see Claude become the default brain behind AI-native workflows on the Now Platform. In practical terms, that means agentic automations—like triaging incidents, drafting knowledge articles, summarizing long ticket threads, or proposing remediation steps—can be composed and governed inside ServiceNow’s environment with Claude under the hood.

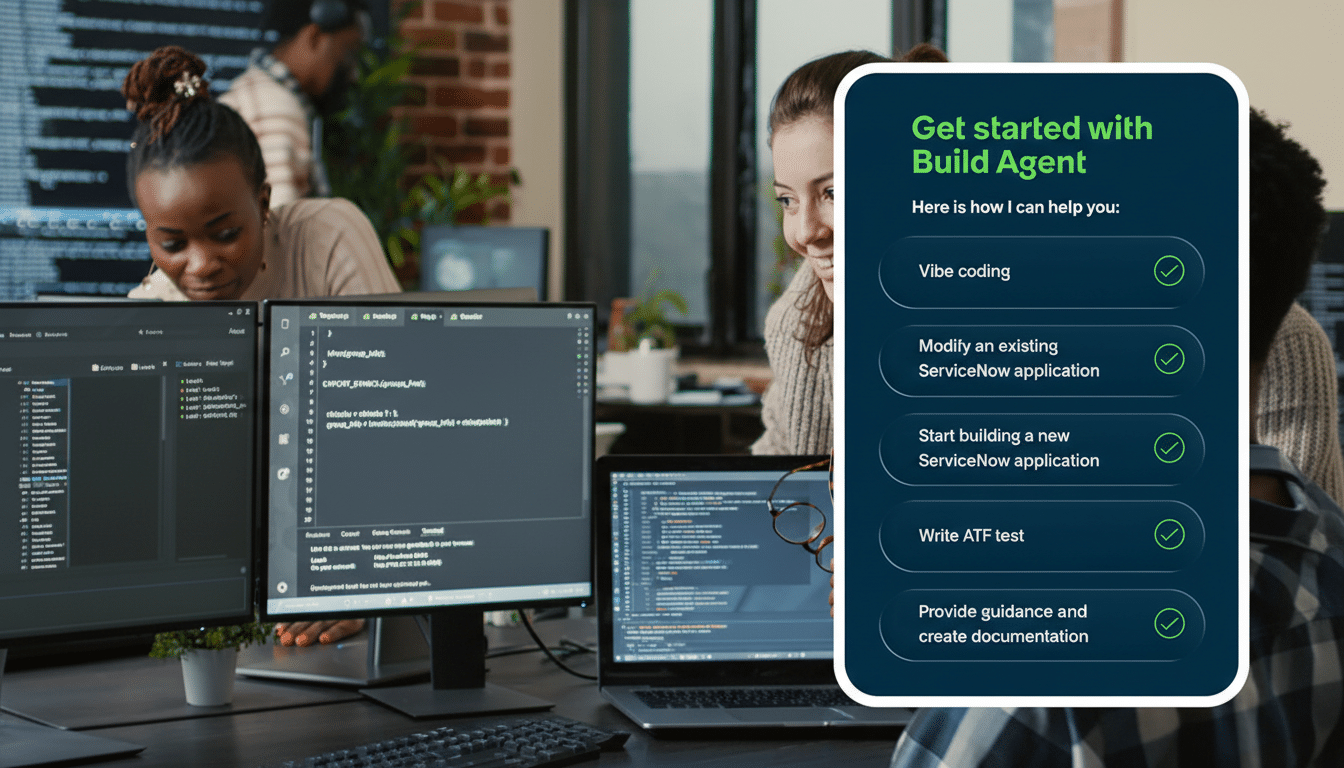

Build Agent, ServiceNow’s tool for creating autonomous process agents and apps, will now standardize on Claude out of the box. Developers can still invoke other models where they fit better, but the default lowers friction for teams that want reliable reasoning, structured outputs, and transparent controls without stitching together point integrations.

A Deliberate Multi-Model Strategy for Enterprise AI

The Anthropic agreement arrives on the heels of ServiceNow’s newly announced access to OpenAI’s models. Rather than picking a single winner, ServiceNow is pursuing a portfolio approach: route tasks to different models based on domain, data sensitivity, latency, or cost, while keeping governance, audit trails, and security consistent on the ServiceNow AI Platform.

That orchestration matters. Enterprises want model choice without operational sprawl: standardized prompts, model evaluations, RAG pipelines, and approvals that work the same whether a request hits Claude for reasoning, another model for code generation, or a lightweight option for summarization. Expect model routing, evaluation dashboards, and policy templates to become core differentiators.
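To make the routing idea concrete, here is a minimal sketch of policy-based model selection. It is an illustration only, not ServiceNow's implementation: the model labels, sensitivity tiers, latency figures, and prices are all hypothetical. The router filters a catalog by domain, data sensitivity, and latency budget, then picks the cheapest model that qualifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str                  # hypothetical label, not a real model ID
    domains: frozenset         # task types this model is approved for
    max_sensitivity: int       # highest data-sensitivity tier allowed (0 = public)
    p95_latency_ms: int        # assumed latency figure
    cost_per_1k_tokens: float  # assumed price


@dataclass(frozen=True)
class Task:
    domain: str
    sensitivity: int
    latency_budget_ms: int


# Illustrative catalog; every number here is made up for the example.
CATALOG = [
    ModelProfile("reasoning-large", frozenset({"reasoning", "summarization"}), 2, 4000, 0.015),
    ModelProfile("code-medium", frozenset({"code"}), 1, 2500, 0.008),
    ModelProfile("summary-small", frozenset({"summarization"}), 2, 800, 0.001),
]


def route(task, catalog=CATALOG):
    """Return the cheapest model that satisfies every policy constraint."""
    eligible = [
        m for m in catalog
        if task.domain in m.domains
        and task.sensitivity <= m.max_sensitivity
        and m.p95_latency_ms <= task.latency_budget_ms
    ]
    if not eligible:
        # Fail closed: no model is approved for this combination.
        raise LookupError(f"no approved model for {task}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


if __name__ == "__main__":
    print(route(Task("summarization", 1, 5000)).name)
    print(route(Task("reasoning", 2, 5000)).name)
```

Because the constraints live in data rather than in application code, the same governance check applies no matter which provider ends up serving the request. Failing closed when nothing qualifies is one possible design choice; a real platform might instead escalate to a human approval step.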

The ROI Question and the Broader Industry Context

Despite intense interest, many enterprises are still searching for measurable AI impact. IDC projects global spending on generative AI will reach roughly $143 billion by 2027, while McKinsey has estimated generative AI could add $2.6–$4.4 trillion in value annually across sectors. Yet the gap between pilots and production remains wide.

Workflows are where ROI shows up, in metrics such as time to resolution, case deflection, change failure rate, and first-contact resolution. By baking models into the systems that already orchestrate work, ServiceNow is betting it can convert AI novelty into durable process gains. The Claude integration is designed to accelerate that shift from experiments to governed, auditable production use.
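As a rough illustration of how such workflow metrics might be computed, the sketch below derives three of them from a list of ticket records. The `Ticket` fields and the metric definitions are assumptions made for this example (a ticket with zero human contacts counts as deflected; first-contact resolution is measured only over agent-handled tickets). They are not ServiceNow's schema or official formulas.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Ticket:
    opened: datetime
    resolved: datetime
    contacts: int  # human-agent interactions; 0 means resolved via self-service


def workflow_metrics(tickets):
    """Compute mean time to resolution, deflection rate, and first-contact resolution."""
    n = len(tickets)
    if n == 0:
        raise ValueError("need at least one ticket")
    total = sum((t.resolved - t.opened for t in tickets), timedelta())
    handled = [t for t in tickets if t.contacts > 0]
    return {
        "mean_time_to_resolution_hours": total.total_seconds() / 3600 / n,
        # Share of tickets closed without any human agent involved.
        "deflection_rate": (n - len(handled)) / n,
        # Share of agent-handled tickets closed in a single contact.
        "first_contact_resolution": (
            sum(1 for t in handled if t.contacts == 1) / len(handled)
            if handled else None
        ),
    }


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 9, 0)
    sample = [
        Ticket(t0, t0 + timedelta(hours=2), 0),
        Ticket(t0, t0 + timedelta(hours=4), 1),
        Ticket(t0, t0 + timedelta(hours=6), 3),
        Ticket(t0, t0 + timedelta(hours=4), 1),
    ]
    print(workflow_metrics(sample))
```

Comparing these figures before and after an AI rollout, over the same ticket categories, is one straightforward way to check whether a pilot is producing measurable process gains.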

The Competitive Landscape for Enterprise AI Is Heating Up

ServiceNow’s move lands in a crowded field. Microsoft is weaving Copilot across Microsoft 365 and GitHub, Salesforce is pushing Einstein with a growing roster of model options, Google is expanding Vertex AI for enterprise developers, and IBM is advancing watsonx for governed AI. Many of these players also partner with Anthropic, underscoring how model access is becoming table stakes.

ServiceNow’s differentiation remains the workflow fabric: incident management, employee services, customer operations, and application delivery that already run on the Now Platform. If Anthropic’s models improve reasoning and safety in those flows—while the OpenAI tie supplies breadth—ServiceNow strengthens its case as the system of action for AI at work.

What to Watch Next as ServiceNow Deploys Claude AI

Key questions include how ServiceNow will handle model routing and fallback between Anthropic and other providers, what the cost and latency profiles look like at scale, and how evaluation tooling will quantify quality and drift over time. Customers will also watch for data residency options, content filters, and industry-specific guardrails.

Near term, adoption of Build Agent with Claude as the default, uptake of Claude Code among ServiceNow engineers, and early customer case studies will signal whether this partnership translates into faster deployments and clearer ROI. The race is no longer just about access to frontier models—it’s about turning those models into dependable, measurable workflow outcomes.