While everyone races to get generative models into their apps, a separate wave of regulation is redefining how companies build and scale AI. Used well, governance is less a brake than a competitive advantage. With tiered obligations and fines that can reach 7 percent of global annual turnover in the most serious cases, the EU AI Act raises the stakes. NIST’s AI Risk Management Framework, which offers pragmatic guidance and best practices, and the emerging ISO/IEC 42001 management standard also provide useful road maps. Together, they point to five specific ways in which rules and regulations can actively steer smarter AI development.

Establish Guardrails That Speed Experimentation

Clear boundaries allow teams to experiment without inviting long-tail risk. Options include approved-model “whitelists,” role-based access, and sandbox environments that use synthetic or de-identified data. That keeps prototypes off production systems while keeping velocity high.
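As a rough illustration of how such guardrails might be encoded, the sketch below checks an experiment request against an approved-model list, a role-to-environment map, and a sandbox data rule. Every name and value in it (`APPROVED_MODELS`, `ROLE_ENVIRONMENTS`, the roles, the model names) is a hypothetical placeholder, not a reference to any real policy or product.

```python
from dataclasses import dataclass

# Hypothetical policy values; a real deployment would load these from managed config.
APPROVED_MODELS = {"internal-summarizer-v2", "vendor-chat-small"}
ROLE_ENVIRONMENTS = {
    "data_scientist": {"sandbox"},
    "ml_engineer": {"sandbox", "staging"},
    "release_manager": {"sandbox", "staging", "production"},
}
SANDBOX_DATA_CLASSES = {"synthetic", "de-identified"}


@dataclass(frozen=True)
class ExperimentRequest:
    user_role: str
    model_name: str
    environment: str
    data_class: str


def check_request(req: ExperimentRequest) -> list[str]:
    """Return policy violations; an empty list means the request may proceed."""
    violations = []
    # Guardrail 1: only models on the approved list may be used.
    if req.model_name not in APPROVED_MODELS:
        violations.append(f"model '{req.model_name}' is not on the approved list")
    # Guardrail 2: role-based access to environments (unknown roles get no access).
    if req.environment not in ROLE_ENVIRONMENTS.get(req.user_role, set()):
        violations.append(f"role '{req.user_role}' may not use '{req.environment}'")
    # Guardrail 3: sandboxes only accept synthetic or de-identified data.
    if req.environment == "sandbox" and req.data_class not in SANDBOX_DATA_CLASSES:
        violations.append(f"sandbox requires synthetic or de-identified data, got '{req.data_class}'")
    return violations
```

Returning the full list of violations, rather than failing on the first one, lets a team fix everything in a single pass instead of discovering problems one at a time.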

Many banks, insurers, and healthcare companies that are pioneering the use of AI start by running early pilot projects through virtual sandboxes that replicate regulated workflows.

I have heard more than one technology provider describe building these under the label of a “trust fabric,” with tools that rate which use cases are ready to go into production based on bias, robustness, and privacy checks completed beforehand.
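A readiness gate of this kind can be very small. The sketch below is one possible shape, assuming each use case carries a pass/fail record for the three checks named above; `UseCase`, `REQUIRED_CHECKS`, and `readiness` are illustrative names rather than any vendor's API.

```python
from dataclasses import dataclass, field

# The three check areas come from the text; treating each as a simple
# pass/fail flag is an assumption made for illustration.
REQUIRED_CHECKS = ("bias", "robustness", "privacy")


@dataclass
class UseCase:
    name: str
    checks: dict[str, bool] = field(default_factory=dict)


def readiness(use_case: UseCase) -> tuple[bool, list[str]]:
    """Return (ready, missing_checks). A check that was never run counts as missing."""
    missing = [c for c in REQUIRED_CHECKS if not use_case.checks.get(c, False)]
    return (not missing, missing)


pilot = UseCase("claims-triage", {"bias": True, "robustness": True})
ready, missing = readiness(pilot)
print(f"{pilot.name}: ready={ready}, missing={missing}")
```

Treating an absent check as a failure keeps the default conservative: a use case is promoted only when every review has explicitly passed.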

The upshot: faster iteration cycles and fewer late-stage reworks resulting from compliance surprises.

Turn Compliance Into a Product Strategy Advantage

Regulation clarifies where innovation can create defensible value.

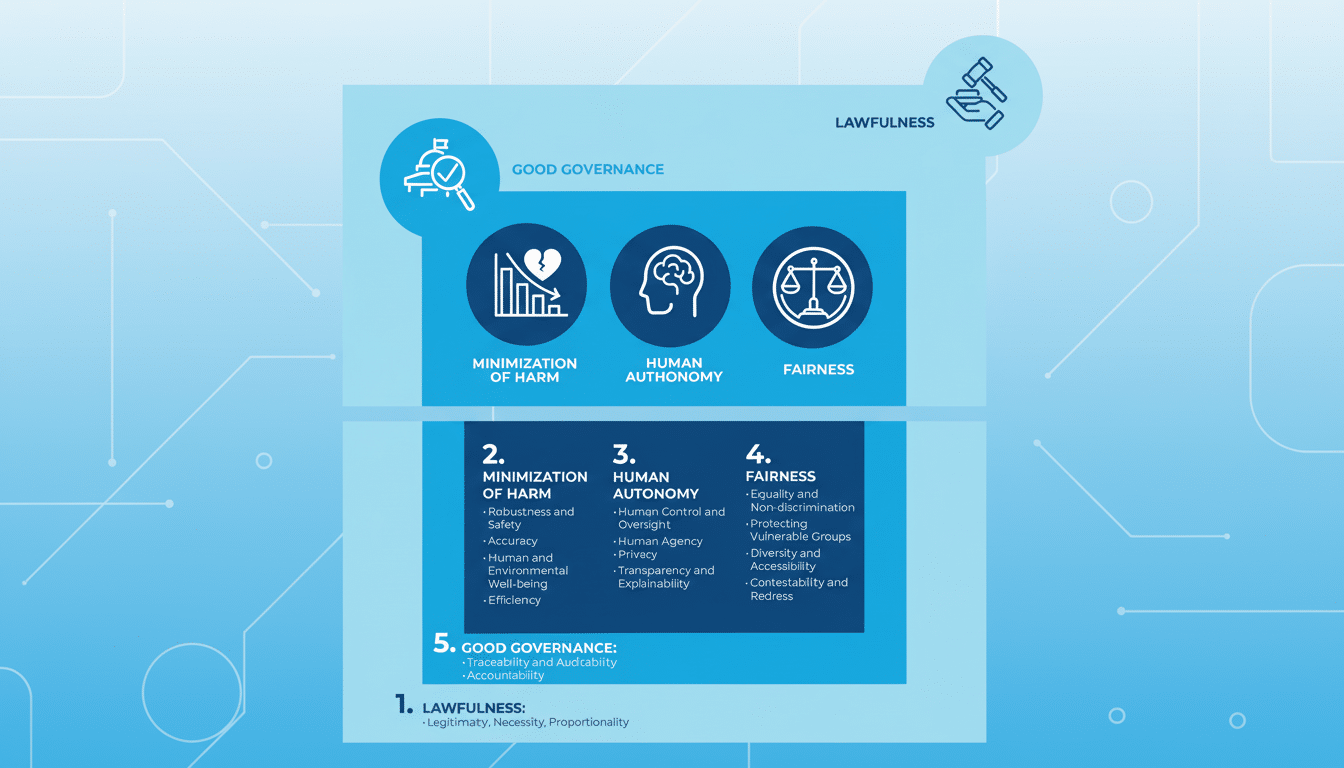

Map each AI idea to a risk level (low, moderate, or high) using a tiered model like the EU AI Act’s. Combine that map with a decision on whether to “build, partner, or avoid.” For high-risk areas, such as credit, hiring, or medical triage, transparency features, human oversight, and extensive testing might need to be part of the MVP itself rather than something bolted on later.
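One way to make that mapping concrete is a small lookup that turns a domain into a risk tier, a decision, and a list of MVP requirements. The sketch below is only an illustration: the classification rule, the `has_inhouse_expertise` and `can_meet_requirements` inputs, and the moderate-tier requirement are assumptions added for the example, while the high-risk domains and requirements come from the paragraph above.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# High-risk examples taken from the text; a real list would follow legal review.
HIGH_RISK_DOMAINS = {"credit", "hiring", "medical triage"}

MVP_REQUIREMENTS = {
    RiskTier.LOW: [],
    RiskTier.MODERATE: ["model documentation"],  # assumed for illustration
    RiskTier.HIGH: ["transparency features", "human oversight", "extensive testing"],
}


def classify(domain: str, affects_individuals: bool) -> RiskTier:
    """Hypothetical rule: listed domains are high risk, other person-affecting uses moderate."""
    if domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.MODERATE if affects_individuals else RiskTier.LOW


def plan(domain: str, affects_individuals: bool,
         has_inhouse_expertise: bool, can_meet_requirements: bool) -> dict:
    """Combine the risk tier with a build / partner / avoid decision."""
    tier = classify(domain, affects_individuals)
    if tier is RiskTier.HIGH and not can_meet_requirements:
        decision = "avoid"
    elif not has_inhouse_expertise:
        decision = "partner"
    else:
        decision = "build"
    return {"tier": tier.value, "decision": decision,
            "mvp_requirements": MVP_REQUIREMENTS[tier]}


print(plan("credit", True, has_inhouse_expertise=True, can_meet_requirements=True))
```

Keeping the requirements in a table keyed by tier means the same map can feed both roadmap discussions and release checklists.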

Top teams treat compliance as a design constraint that fuels differentiation: explainer dashboards for high-impact decisions, opt-out controls for sensitive data, and clear model documentation. Law firms that monitor global proposals, for example through Bird & Bird’s AI Horizon Tracker, report that regional requirements differ widely, so a consistent baseline can help prevent product fragmentation during development and make global rollouts easier.

Document Data to Preserve Signal and Trust

Data governance can determine how effective a model is. Over-cleaning can strip away informative variation and introduce bias, while undergoverned pipelines erode reproducibility. The middle ground is disciplined lineage: store raw data snapshots, version every transformation, and maintain “model cards” that document training sets, known limitations, and evaluation results.
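The three lineage habits described above can be sketched with nothing more than hashes and a structured record. The example below is a minimal illustration using only the Python standard library; `LineageLog`, `ModelCard`, the field names, and the sample data are all hypothetical, and a production setup would more likely rely on a dedicated data-versioning tool.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


def fingerprint(data: bytes) -> str:
    """Content hash used to identify a snapshot unambiguously."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class LineageLog:
    raw_sha256: str                      # hash of the stored raw snapshot
    steps: list = field(default_factory=list)

    def record(self, name: str, version: str, output: bytes) -> None:
        """Log one versioned transformation and the hash of what it produced."""
        self.steps.append({"step": name, "version": version,
                           "output_sha256": fingerprint(output)})


@dataclass
class ModelCard:
    model_name: str
    training_sets: list
    known_limitations: list
    evaluation_results: dict
    lineage: LineageLog

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Made-up sample data, for illustration only.
raw = b"age,income\n34,52000\n51,\n"
log = LineageLog(raw_sha256=fingerprint(raw))
cleaned = raw.replace(b"51,\n", b"")     # drop the row with a missing value
log.record("drop_incomplete_rows", "1.0.0", cleaned)

card = ModelCard(
    model_name="example-model",
    training_sets=["example-snapshot"],
    known_limitations=["rows with missing income were dropped"],
    evaluation_results={"holdout_accuracy": "not yet measured"},
    lineage=log,
)
print(card.to_json())
```

Because every step records the hash of its output, two runs that diverge can be compared step by step to find the first transformation where the data differs.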

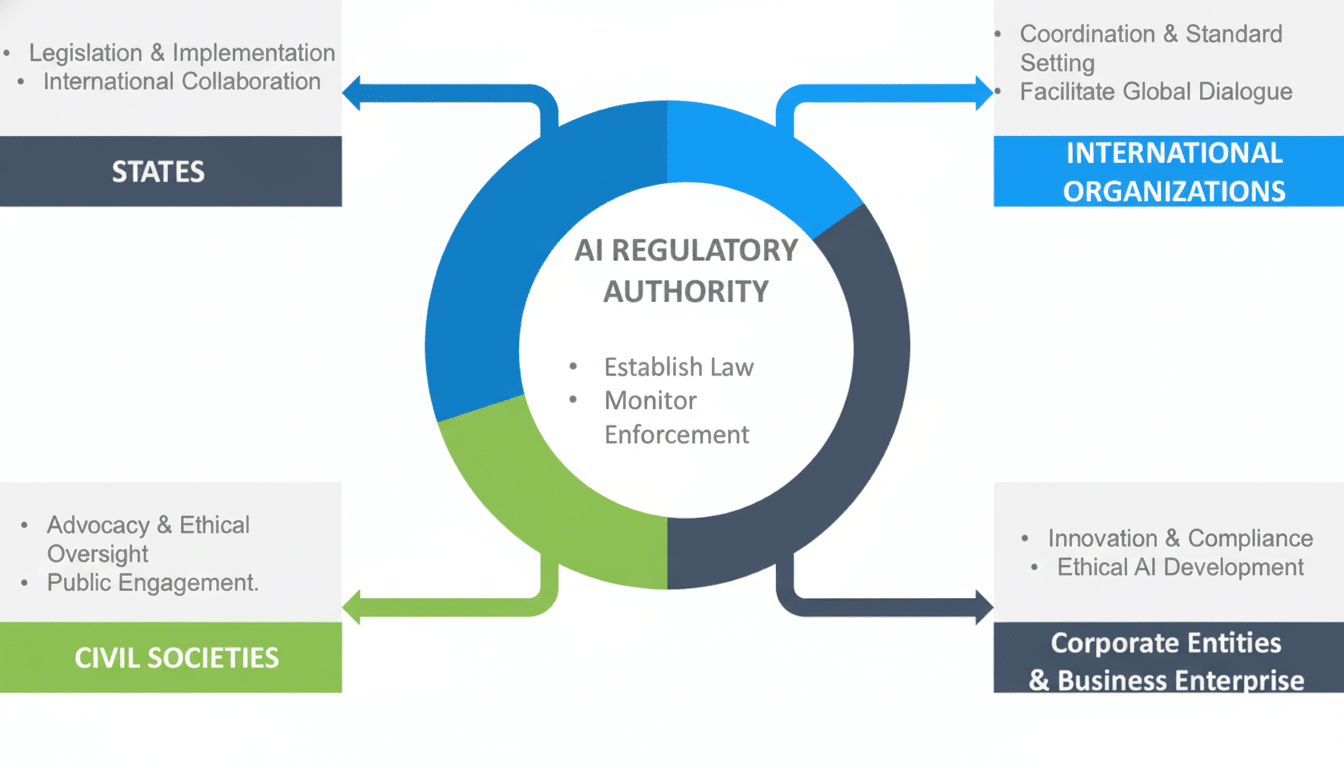

The NIST framework emphasizes traceability, and clinical researchers have warned that excessive preprocessing can corrupt results. When auditors or safety committees ask why a model made a specific choice, detailed lineage and testing notes can show exactly what changed and help teams debug without going back to square one.

Bake Security and Red Teaming Into the Lifecycle

Expectations for security are growing as fast as model capabilities. Regulators and national cybersecurity agencies advocate continuous threat modeling, adversarial testing, and defenses against prompt injection, data exfiltration, and model poisoning. The OWASP Top 10 for LLM Applications and guidance from the UK National Cyber Security Centre translate these expectations into specific checklists.

One pragmatic approach is to use AI to create first-pass threat models from architecture diagrams and policy libraries, and let security architects focus on sector-specific edge cases. Caution is also warranted: shield sensitive inputs and choose enterprise-grade tooling rather than seeding unmanaged systems with “blueprints” of your infrastructure. Governance here isn’t hindering work; it is funneling a scarce pool of experts toward the highest-impact risks.
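Shielding sensitive inputs can start with a simple redaction pass before any architecture notes reach an AI tool. The sketch below is a deliberately narrow illustration: the three patterns (IPv4 addresses, hosts under a made-up `internal.example.com` domain, and `key=value` style secrets) are assumptions chosen for the example and would miss many real-world cases.

```python
import re

# Hypothetical redaction rules; extend and review these for a real environment.
REDACTIONS = [
    # IPv4-looking addresses such as 10.0.12.7
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IP]"),
    # Hosts under a placeholder internal domain
    (re.compile(r"\b[\w.-]+\.internal\.example\.com\b"), "[HOST]"),
    # key=value secrets; the key name is kept so the text stays readable
    (re.compile(r"(?i)\b(password|api_key|token)\s*=\s*\S+"), r"\1=[SECRET]"),
]


def redact(text: str) -> str:
    """Apply each redaction rule in order and return the scrubbed text."""
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


note = "Primary DB at 10.0.12.7 on db1.internal.example.com password=hunter2"
print(redact(note))
```

Pattern-based redaction is a floor, not a guarantee, so it works best alongside tooling controls rather than in place of them.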

Leverage Certification to Open Markets and Build Trust

Compliance can open doors. Under the AI Act, many high-risk systems will need to pass a conformity assessment and be monitored over time, in a manner similar to CE marking. Companies that adopt ISO/IEC 42001 and NIST controls early also have an easier time with procurement, as public-sector buyers increasingly prioritize evidence of model governance, incident response, and post-deployment monitoring.

Assurance is a brand asset as well. Arm’s-length bias audits, independent tests against representative datasets, and transparency about how systems are used reduce buyer hesitation and speed up legal review. For cross-border deals, a single well-documented assurance package can serve several jurisdictions with minor modifications, enabling growth at scale without revisiting foundational engineering choices.

The bottom line: rules and regulations don’t simply define the contours of AI; they also light the way. Leaders make governance a flywheel by:

- Codifying safe sandboxes

- Bringing compliance into product design

- Documenting data rigorously

- Operationalizing security

- Seeking credible assurance

That strategy maintains human control, wins trust, and keeps innovation on course from pilot to production.