Razer’s Project Ava was born as a straitlaced AI gaming coach. Now, in its latest incarnation, it’s something else altogether: an anime-styled holographic “companion” that lives inside a speaker-like cylinder and flirts, giggles, and sometimes coaches you through matches. The pivot is daring, technically ingenious, and supremely unsettling.

In a hands-on preview, Ava mixed helpful in-game advice with an equal measure of persona-driven theatricality. The result lands squarely in the uncanny valley: cool at times, but hamstrung by a design that pushes users toward emotional attachment rather than productivity.

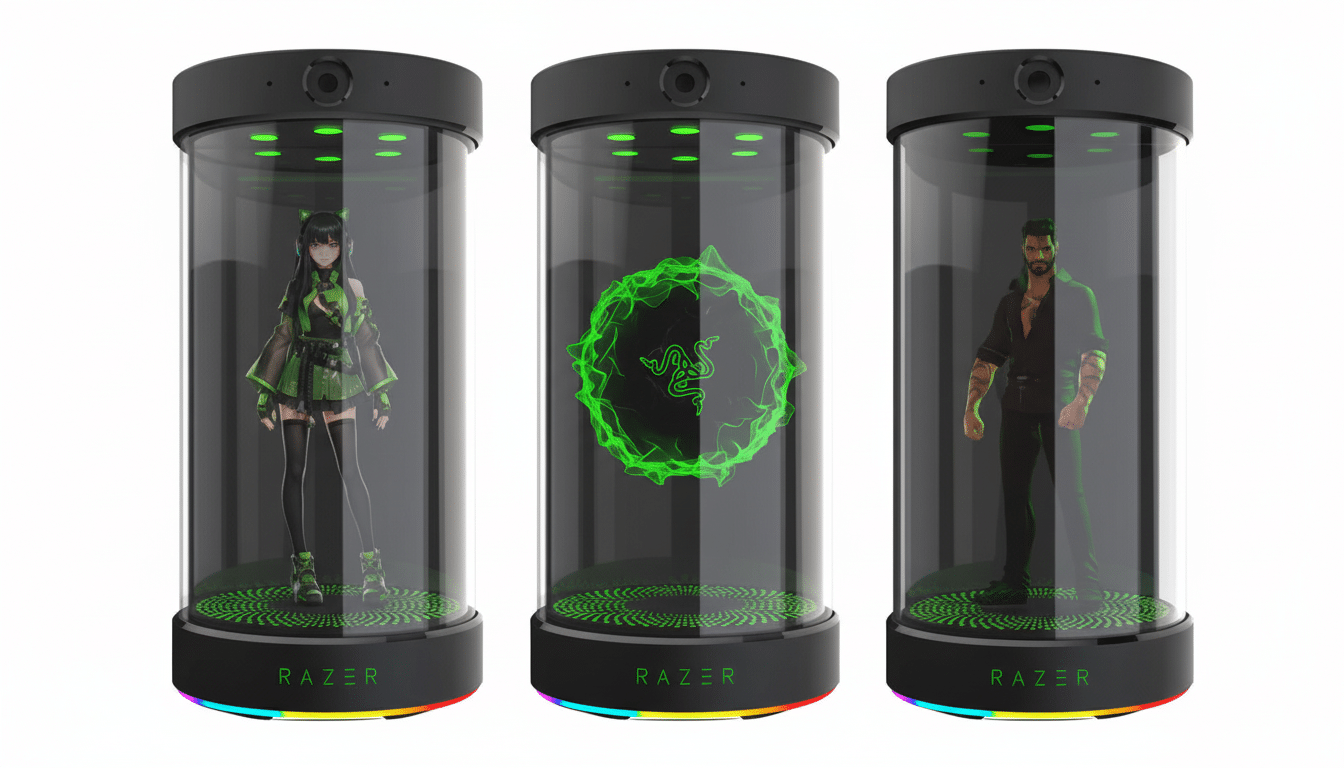

What Project Ava Is Now: A Hologram Companion Device

Ava appears as a hologram of sorts: what looks like a roughly 5.5-inch image projected in the center of a clear cylinder.

It’s advertised as a 3D hologram, but in person it looks more like a crisp 2D projection with believable depth cues. A front-facing camera gives it awareness of your surroundings (only with your permission), and hardware controls along the top edge adjust volume or mute noise detection.

The default persona, Kira, is one of five companions developed with the help of Animation, Inc., the studio responsible for Grok’s AI avatars. Razer says Ava can run on top of different AI models; the demo unit used Grok. A desktop app provides settings and controls, including privacy toggles and voice options.

A Hands-On That Veers Into the Uncanny Valley

Ask Ava about the big show-floor announcements and it returns fast, precise summaries, down to specifics such as where to find Lego’s Smart Brick booth. That’s useful, but your phone’s assistant can already do it without the anime polish.

Where Ava aims to stand out is inside the game itself. Ask something like “What sight should I use for medium-to-long range engagements in Battlefield 6?” and the system reads your current menu, works through the options, and recommends a loadout. In my case, it suggested equipping a 1.5x scope, a questionable choice for longer sightlines, which illustrates a larger issue: up-to-the-minute advice only helps to the extent that the meta it reflects still applies.

The bigger sticking point is tone. Kira giggles, hands out cutesy nicknames when she spots badges through the camera, and plays up flirty affectations. Razer’s own pitch goes even further, billing Ava as a “friend for life” and a “24/7 digital partner.” It’s a deliberate leap from assistant to companion, and it is sure to divide audiences.

The Tech Under the Theater: How Ava Parses Context

Ava’s best party trick is context. By watching what’s on-screen and listening to what you say, it can close the gap between voice commands and the click-by-click reality of PC gaming. That likely combines computer vision to parse UI elements with model-driven reasoning to generate suggestions and carry on a conversation.

In practice, response times ranged from quick to sluggish depending on the query. The projection looked crisp, with consistent frame pacing, and voice pickup held up well in a loud demo space. Even so, the value proposition depends on reliable, high-confidence recommendations, especially for competitive players, who have little tolerance for even a marginal miscall.

Why the Companion Framing Raises Red Flags for Gamers

Transforming a gaming coach into an intimate partner isn’t just a branding tweak; it changes the psychological contract. The Federal Trade Commission has warned that anthropomorphic chatbots can exploit users’ trust and steer them toward decisions they wouldn’t otherwise make. The EU’s AI Act also targets manipulative systems that significantly distort behavior, signaling added regulatory scrutiny for emotionally engaging designs.

There’s precedent. The Replika AI companion app came under regulatory fire in Europe over its protections for minors and its data practices, an episode that underscored how quickly parasocial bonds can form with synthetic agents. Pew Research Center, meanwhile, has consistently found that most Americans say they are more worried than excited about AI, with concern peaking around surveillance, job displacement, and the loss of human connection.

Ava’s camera, constant presence, and cutesy persona only compound those fears. Users will want unambiguous privacy controls, transparent data retention, and a straightforward “professional mode” that dials down the flirtation in favor of direct, audit-friendly assistance. Without them, the product risks alienating the very gamers it is meant to support.

Early Verdict and Who It’s For

As a concept, an AI that looks over your shoulder and tells you exactly which slider to flip or which attachment to pick can be genuinely helpful, and Ava shows flashes of that future. But the flirt-first approach overshadows the utility, which will create real dissonance for many players, especially in shared living rooms or streaming setups.

Pricing has yet to be revealed, but Razer is taking reservations with a refundable $20 deposit. If Razer leans into a serious coaching mode, firm privacy assurances, and credible accuracy benchmarks (think published test scenarios and win-rate deltas), Ava could earn a place on the desk. In its present form, it’s technically interesting hardware saddled with a parasocial pitch most people don’t want in their playtime.