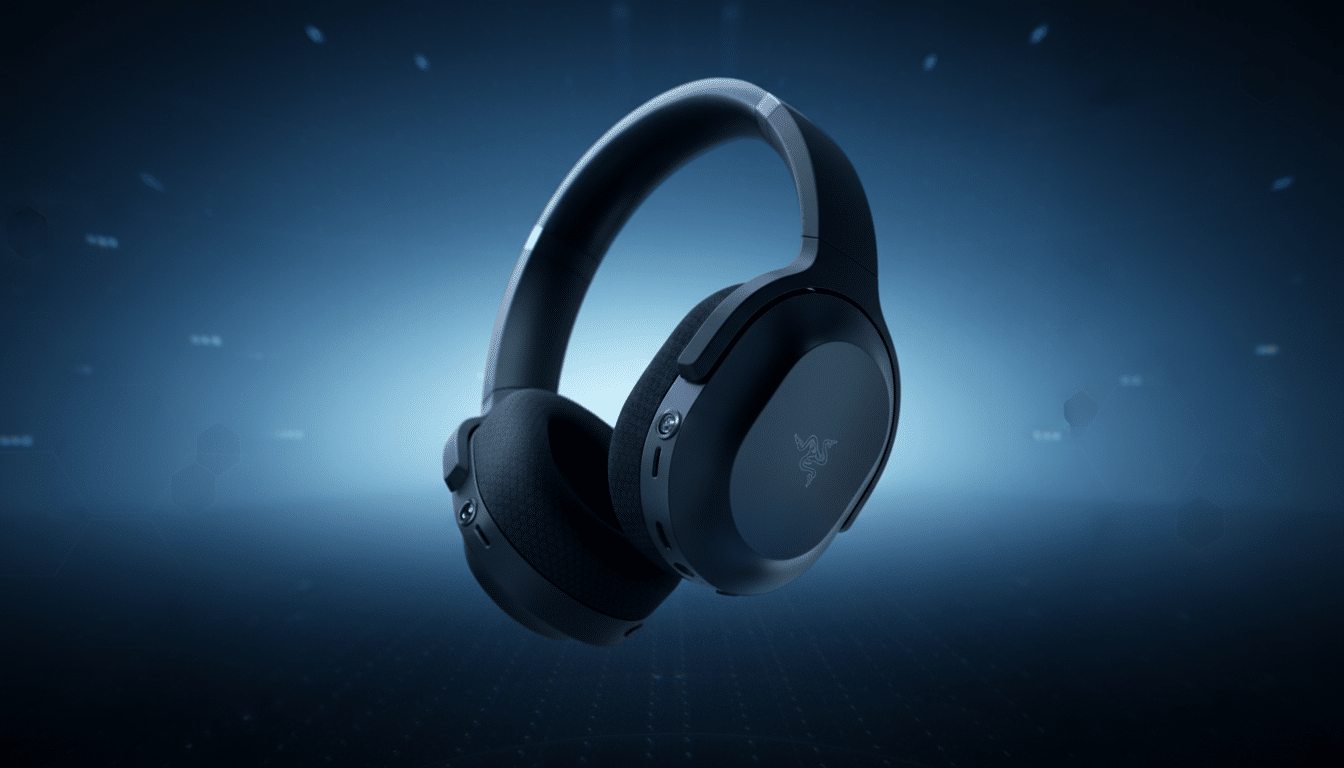

Razer is betting the next big AI wearable isn’t a piece of technology you strap to your face but something that already rests atop your head. The company’s new vision is Project Motoko: a multimodal AI assistant that lives in a headset with eye-level cameras for contextual awareness. The pitch is at once simple and shrewd: if you don’t wear glasses, why should AI ask you to start?

Motoko combines on-device processing, powered by a Qualcomm Snapdragon platform, with cloud-scale language models to deliver speedy, private assistance with camera awareness, minus the social friction smart glasses can produce. In demos, it acts like a familiar pair of headphones that just so happens to see and understand the world around you.

Why Headphones Make Sense for AI Assistants

Headphones are already the most ubiquitous wearable product. Industry trackers at IDC continue to report that “earwear” leads wearable shipments, well ahead of watches and glasses. That adoption matters: the best AI assistant is going to be one that you’d actually wear all day, on a plane, at a desk, or while walking between meetings.

There’s also a privacy angle. Smart glasses typically use small external speakers, which can bleed out audio to surrounding people and make it easy for them to eavesdrop on your assistant. A headset will keep responses in your ears and take advantage of the superior microphones and noise isolation — both important for accurate speech recognition in a loud environment.

What Project Motoko Can Do in Razer’s Headset

Razer’s demo showed how adding vision to voice makes useful everyday tasks possible. Turn your head toward a menu written in a language you don’t understand and Motoko reads, translates, and summarizes it through the headphones. Ask follow-ups like “Which are within my budget?” or “What are the hot options?” and it reasons about what it sees to provide relevant answers. In one case, the headset identified a replica of the Rosetta Stone and provided information about the object.

Most crucially, Motoko is model-agnostic, Razer says. That would allow users to select their favorite large language model providers from players such as OpenAI, Google, or Anthropic, or even run smaller models on their devices when latency, cost, or access issues make cloud calls untenable. This flexibility reflects a broader industry trend toward modular AI stacks rather than one-assistant-fits-all.

On the hardware side, a Snapdragon platform inside allows things like wake-word detection, speech processing, and early (low-level) computer vision pre-processing to be done on-device, while more intense reasoning can occur in the cloud. That hybrid approach is becoming more prevalent throughout AI hardware because it keeps latency low for simple actions and keeps sensitive context on the device, while still being able to draw on big models when required.
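To make the hybrid split concrete, here is a minimal Python sketch of how a request router might decide between on-device and cloud handling. It is an illustration under stated assumptions, not Razer’s implementation: the task labels, the `sensitive` flag, the `Request` type, and the `route` and `handle` functions are all hypothetical names invented for this example, and the backends stand in for whichever local or cloud model a user might pick.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical task labels. The article names wake-word detection, speech
# processing, and low-level vision pre-processing as on-device work.
ON_DEVICE_TASKS = {"wake_word", "speech_to_text", "vision_preprocess"}


@dataclass
class Request:
    task: str
    payload: str
    sensitive: bool = False  # assumed flag: context the user wants kept local


def route(request: Request, cloud_available: bool = True) -> str:
    """Return "device" or "cloud" for a request."""
    if request.task in ON_DEVICE_TASKS:
        return "device"
    # Fall back to the local model for sensitive context or when offline.
    if request.sensitive or not cloud_available:
        return "device"
    return "cloud"


def handle(
    request: Request,
    backends: Dict[str, Callable[[str], str]],
    cloud_available: bool = True,
) -> str:
    """Dispatch to a pluggable backend, so the model provider can be swapped."""
    target = route(request, cloud_available)
    return backends[target](request.payload)
```

Because the backends are plain callables keyed by name, swapping one cloud provider for another, or for a smaller local model, would not change the routing logic in this sketch.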

Trade-offs and design challenges for AI headsets

Motoko doesn’t have a display, so there’s no heads-up overlay for directions or captions, and the company isn’t trying to fake one with an obtrusive prism facing your eye. The experience is voice-first. That means the assistant needs to be brief and context-sensitive, something many current AI agents can’t handle well without very careful prompt design, memory management, and smart interruption handling.

Then there’s power. Always-on vision is energy-hungry, and adding cameras to headphones will stretch battery budgets that have traditionally been engineered around audio playback. Expect burst capture strategies, on-device filtering to throw away unnecessary frames, and low-power AI blocks to keep runtimes competitive with high-end headsets.
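As a rough illustration of what on-device frame filtering could look like, the Python sketch below drops frames that barely differ from the last one kept, so later stages only see scenes that actually changed. This is an assumption about one simple approach, not a description of Motoko’s pipeline: the `Frame` representation, the `mean_abs_diff` and `filter_frames` helpers, and the threshold value are all hypothetical.

```python
from typing import Iterable, Iterator, List, Optional

# Assumed representation: a frame is a non-empty, flattened list of
# grayscale pixel values (0-255), and all frames have the same length.
Frame = List[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def filter_frames(
    frames: Iterable[Frame], threshold: float = 10.0
) -> Iterator[Frame]:
    """Yield only frames that differ enough from the last frame kept."""
    last_kept: Optional[Frame] = None
    for frame in frames:
        if last_kept is None or mean_abs_diff(frame, last_kept) >= threshold:
            last_kept = frame
            yield frame


frames = [[0, 0, 0, 0], [1, 0, 0, 0], [50, 50, 50, 50], [52, 50, 50, 50]]
kept = list(filter_frames(frames))
# kept == [[0, 0, 0, 0], [50, 50, 50, 50]]
```

A real device would more likely run this kind of check in dedicated low-power hardware, but the trade-off is the same: a higher threshold saves more energy at the cost of missing subtle changes.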

Privacy and transparency matter, too. Wearables armed with cameras raise questions we’ve already seen about when recording is happening, how data is being stored, and what notifications there are for people nearby. Regulators, including the UK’s Information Commissioner’s Office and other watchdogs, have called for clear disclosure and data minimization, and Motoko’s fate will rest on getting those choices right.

How it stacks up against smart glasses and wearables

Meta’s camera-equipped smart glasses have already normalized hands-free capture and multimodal AI, but they still rely on open speakers (and require you to wear glasses). A headset like Motoko inverts that equation: higher-quality microphones, more isolating sound, and broader social acceptability anywhere headphones are common, such as offices, commutes, and flights. The downsides are bulk and the absence of the sort of visual layer that AR glasses can provide.

It also draws comparisons to lapel and pocket devices such as Humane’s AI Pin and Rabbit’s R1, both held back by limited microphones, unwieldy UIs, or unreliability. By placing the experience in a more mature hardware segment with solid audio fundamentals, Razer gives its assistant a sturdier foundation.

What to watch next for Razer’s Project Motoko launch

Project Motoko is only a concept for now and Razer has not announced pricing or availability. Key milestones to watch:

- Developer access and SDKs for multimodal apps

- Partnerships with AI model providers

- Privacy features (such as visible capture indicators)

- Language support scope

- Credible battery life claims with cameras running

If Razer can deliver on what it’s showing — stable vision, fast voice, and some model choice in a headset people already adore — AI wearables could finally have landed on their everyday form factor.

Never mind strapping computers to our faces; the most viable AI assistant might wind up in our headphones.