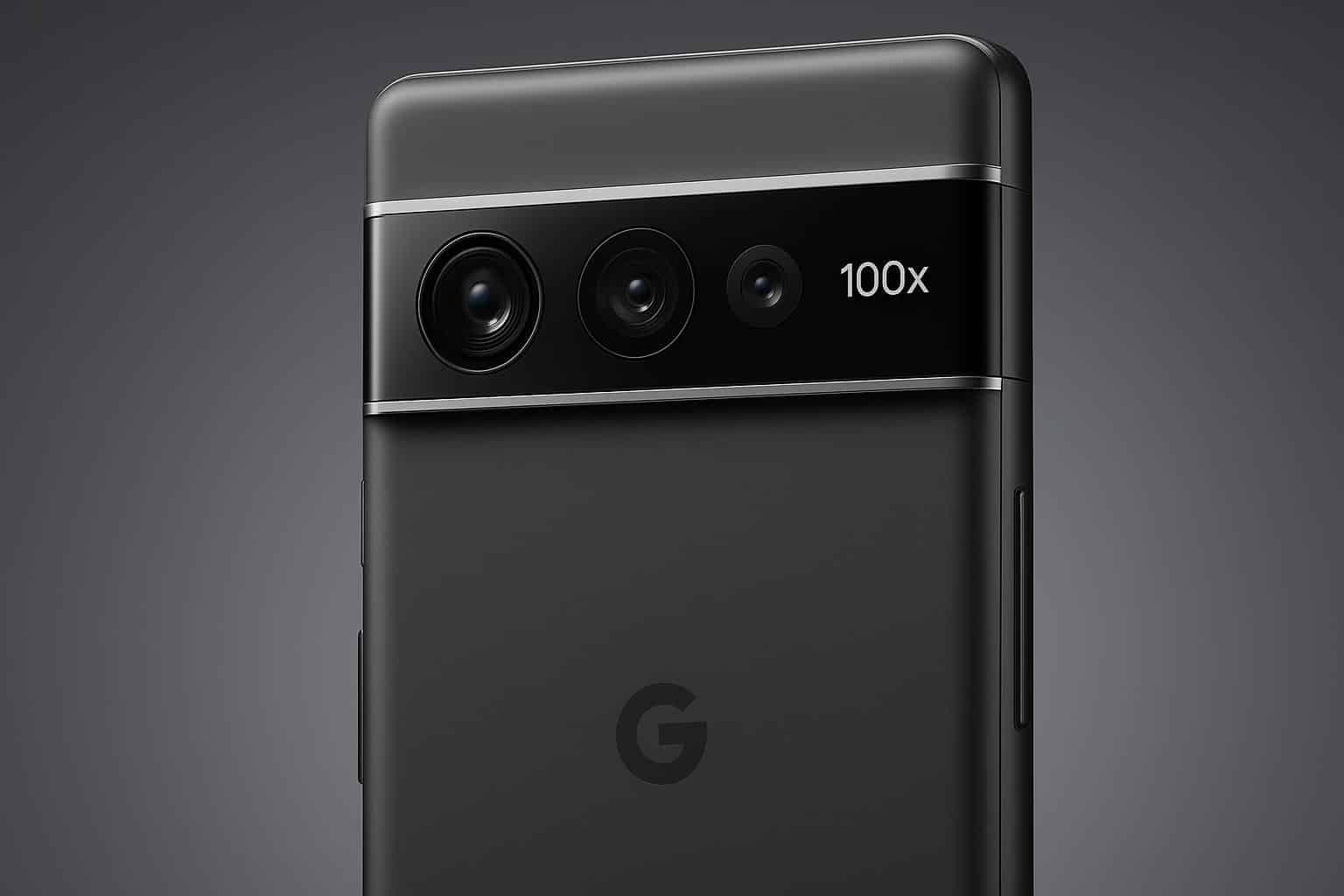

Google’s Pixel 10 Pro pushes the mobile photography conversation into alien territory with a 100x zoom that produces remarkably sharp images more often than not. From city skylines to faraway landmarks you can’t see with the naked eye, the phone lets me make out detail that seems impossible for a pocketable device. But there’s a catch: the final stretch of that reach relies heavily on AI reconstruction, and the system sometimes makes well-intentioned guesses that stray far from reality.

How 100x Zoom Works on the Google Pixel 10 Pro

At extreme magnifications, the phone stacks many frames, aligns them and upscales them computationally, in a way similar in spirit (though not in practice) to Google’s long-standing Super Res Zoom. The periscope telephoto gets you part of the way optically; machine learning models then fill in structure, edges and textures that the sensor can’t resolve from that distance.
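To make the stacking step concrete, here is a minimal Python sketch of classic multi-frame align-and-merge. It is not Google’s pipeline. It assumes grayscale frames stored as equal-sized 2D lists, purely integer-pixel hand shake, and a brute-force search over a small window (the hypothetical `max_shift` parameter) to find each frame’s offset against the first frame. Averaging the aligned frames then suppresses sensor noise. Real pipelines align at sub-pixel precision and merge far more cleverly, and the ML reconstruction stage is not modeled here at all.

```python
def overlap_error(ref, frame, dy, dx):
    """Mean absolute difference, over the overlap, assuming frame[y][x] ~ ref[y - dy][x - dx]."""
    h, w = len(ref), len(ref[0])
    total, n = 0.0, 0
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                total += abs(frame[y][x] - ref[sy][sx])
                n += 1
    return total / n if n else float("inf")


def estimate_shift(ref, frame, max_shift=3):
    """Brute-force search for the integer (dy, dx) that best aligns frame to ref."""
    candidates = [
        (dy, dx)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    # Lowest error wins; ties go to the smallest shift.
    return min(candidates, key=lambda s: (overlap_error(ref, frame, *s), abs(s[0]) + abs(s[1])))


def align_and_merge(frames, max_shift=3):
    """Align every frame to frames[0] and average the overlapping samples per pixel."""
    ref = frames[0]
    h, w = len(ref), len(ref[0])
    # Seed the accumulator with the reference so every pixel has at least one sample.
    acc = [[float(v) for v in row] for row in ref]
    cnt = [[1] * w for _ in range(h)]
    for frame in frames[1:]:
        dy, dx = estimate_shift(ref, frame, max_shift)
        for y in range(h):
            for x in range(w):
                # Pixel (y, x) of this frame lands at (y - dy, x - dx) in reference coordinates.
                ry, rx = y - dy, x - dx
                if 0 <= ry < h and 0 <= rx < w:
                    acc[ry][rx] += frame[y][x]
                    cnt[ry][rx] += 1
    return [[acc[y][x] / cnt[y][x] for x in range(w)] for y in range(h)]
```

Fed a handful of noisy, slightly offset captures of the same scene, this should return an image closer to the true scene than any single frame. The averaging, not any learned model, does the work here, which is why the ML stage is still needed once the optics run out of detail.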

Think of it as a multi-frame super-resolution pipeline: align, denoise, enhance, reconstruct. The result can look as if you shot with a lens thousands of millimeters long. At 100x from a wide base of roughly 20–24mm, that works out to the equivalent of 2,000–2,400mm. The payoff is dramatic detail retention on subjects such as buildings, distant signage and even the Moon’s surface, which would otherwise collapse into a mushy mess of pixels.
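The focal-length figure is simple multiplication, and the same arithmetic shows why software has to do so much of the work. The Python sketch below assumes a 20mm or 24mm equivalent wide base (the two ends implied by the 2,000–2,400mm range) and the standard 36mm full-frame width used for “equivalent” focal lengths. Purely for illustration, it also assumes a hypothetical 5x optical periscope on a hypothetical 48-megapixel telephoto sensor; neither of those two figures comes from this review.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # "equivalent" focal lengths are quoted against a 36mm-wide frame


def equivalent_focal_mm(base_mm: float, zoom: float) -> float:
    """Equivalent focal length reached by zooming `zoom` times from a `base_mm` lens."""
    return base_mm * zoom


def horizontal_fov_deg(focal_mm: float) -> float:
    """Horizontal field of view, in degrees, of a full-frame-equivalent focal length."""
    return math.degrees(2 * math.atan(FULL_FRAME_WIDTH_MM / (2 * focal_mm)))


def pixels_left_after_crop(sensor_pixels: float, total_zoom: float, optical_zoom: float) -> float:
    """Sensor pixels still covering the frame once the digital part of the zoom is a crop.

    A digital zoom of k crops width and height by k each, so only 1/k**2 of the pixels remain.
    """
    digital_zoom = total_zoom / optical_zoom
    return sensor_pixels / digital_zoom ** 2


for base in (20, 24):
    f = equivalent_focal_mm(base, 100)
    print(f"{base}mm x 100 = {f:.0f}mm, horizontal FOV ~ {horizontal_fov_deg(f):.2f} deg")

# Hypothetical: 48 MP telephoto sensor, 5x optical, 100x total -> 20x digital crop.
print(f"{pixels_left_after_crop(48e6, 100, 5):,.0f} pixels left")
```

At 2,400mm the horizontal field of view is under one degree (about 0.86°), and under these assumptions the 20x digital crop leaves roughly 120,000 of 48 million pixels to describe the scene, which is why the reconstruction models have so much to fill in.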

Where the Magic Fails at Extreme 100x Zoom Levels

The AI upscaling is excellent on lines, brickwork and high-contrast edges. It is less accurate with fine type, logos and animated billboards. In side-by-side screen grabs, scrolling ads and brand marks occasionally appear warped or only partly redrawn, while the unprocessed preview shows the correct, if pixelated, shapes. That mismatch is a dead giveaway that the model “finished” what it expected to be there.
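One way to formalize that preview comparison is a data-consistency check: downsample the processed image back to the preview’s resolution and see where it no longer agrees with what the sensor captured. The Python sketch below assumes grayscale images as 2D lists, an output exactly `factor` times larger than the preview, simple block averaging as the downsampler, and a hypothetical tolerance `tol`. It is an illustrative technique, not something Google describes using.

```python
def downsample(img, factor):
    """Average each factor x factor block of img into a single pixel."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + i][x * factor + j] for i in range(factor) for j in range(factor))
            / (factor * factor)
            for x in range(w)
        ]
        for y in range(h)
    ]


def inconsistent_blocks(preview, output, factor, tol=0.1):
    """Preview pixels (y, x) whose corresponding block in `output` averages to a different value."""
    down = downsample(output, factor)
    return [
        (y, x)
        for y in range(len(preview))
        for x in range(len(preview[0]))
        if abs(down[y][x] - preview[y][x]) > tol
    ]
```

A flagged block means the reconstruction contradicts the captured data, like a redrawn logo whose strokes land where the preview shows background. The converse does not hold: invented detail that happens to average out to the same values passes this check, so a clean result is not proof of fidelity.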

Motion is another stress test. When traffic moves, leaves sway and heat haze shimmers across the frame, there’s less stable data for the system to merge, so you may notice repeated textures or smeary, painted-looking areas after processing. These failures are not common, but if factual accuracy is your priority, they matter.

Trust and Transparency at 100x

The debate isn’t new. Smartphone cameras have relied on computational tricks for years: HDR bracketing, night-mode stacking and scene tuning trained on huge image datasets. What’s new is the scale. At 100x, processing pushes into generative reconstruction, so questions about content provenance go beyond mere tone mapping.

To its credit, Google does flag these images. In the Photos grid, AI-enhanced zoom shots carry a small magnifying-glass-and-sparkle icon, and the metadata includes an explicit note that computational photography assisted the shot. That disclosure is in line with broader industry work on content credentials from groups such as the C2PA, and with calls from journalism standards bodies to label AI-aided imagery so viewers can tell where it came from.

When 100x Zoom Is Great for Travel and Daily Use

For travel, distant wildlife, architecture across a river or quick personal journaling of what you saw that day, the Pixel 10 Pro’s 100x mode is magic. It crisply renders balcony railings, window frames and weathering on facades that would otherwise blend into the scene, turning impossible shots into social-ready keepers with surprisingly little effort.

A few practical tips help:

- Brace yourself against a railing or stable surface.

- Tap to focus to help the system lock onto edges.

- Fire off a short burst so the phone can pick the sharpest frame.
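The burst tip works because the phone can score each frame and keep the crispest one. A common textbook sharpness score is the variance of the Laplacian, since sharp edges produce large second derivatives. The Python sketch below assumes that metric and grayscale frames (at least 3x3) stored as 2D lists; it is not a description of how the Pixel actually ranks frames.

```python
def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels; higher means sharper.

    Assumes img is at least 3x3 so there is at least one interior pixel.
    """
    h, w = len(img), len(img[0])
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def sharpest_frame(frames):
    """Index of the burst frame with the highest Laplacian variance."""
    return max(range(len(frames)), key=lambda i: laplacian_variance(frames[i]))
```

Motion blur and hand shake smear edges and flatten the Laplacian response, so a blurry frame scores lower than a crisp frame of the same scene. The score is only meaningful between frames of the same subject, because a busier scene scores higher regardless of focus.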

You will get the best results when shooting static objects in strong light and with clear edges.

And When You Should Dial It Back for Accuracy

If you’re documenting news, scientific observations or anything else where text fidelity and accuracy are key, treat 100x as illustrative rather than evidentiary. Logos, fine print and LED matrices can be subtly reimagined. For professional work, many editors and ethics guides recommend keeping an optical baseline and retaining the original files, a position echoed by organizations such as the NPPA.

No smartphone can escape physics: genuinely long optical lenses need room for large glass and focal distance, which is one reason sports photographers still lug around giant primes. The Pixel 10 Pro narrows that gap with smart software, but it does so by making educated guesses about what to keep and what to leave out: good guesses most of the time, questionable ones every so often.

Bottom Line on Pixel 10 Pro’s Extreme 100x Zoom

The 100x zoom on the Pixel 10 Pro is almost creepily good for everyday shooting, delivering detail that feels like cheating without the heft of a super-telephoto lens. The catch is simple: toward the extreme, AI can beautify or fib a little, especially with text and fast-changing displays. With clear labeling and a bit of user judgment about when to trust it, this is the most compelling long-reach camera experience ever stuffed into a phone, and a reminder that in 2025, “what is a photo?” is a question your pocket can raise.